Musk All-in on xAI, Challenging OpenAI

07/26 2024

Author: Sun Pengyue

Editor: Da Feng

After just four months of construction, the world's largest supercomputing center, the "Supercluster," has officially begun operating.

Elon Musk announced on social media that the "Supercluster," built jointly by xAI, X, NVIDIA, and other partners, began training at about 4:20 AM local time on July 22. With 100,000 H100 GPUs, it is currently the most powerful AI training cluster in the world.

The 100,000 NVIDIA GPUs form a "chip Great Wall" that Musk has built in the AI world, four times the roughly 25,000 GPUs OpenAI reportedly used to train GPT-4.

It's worth noting that construction only began in March this year, and completion had originally been planned for 2025. Surprisingly, the center was finished well ahead of schedule, just four months after work began.

The official launch of the supercomputing center sounds the opening bugle for Musk's counterattack against OpenAI.

A Costly Supercomputing Center

Musk built the supercomputing center first and foremost to train Grok, xAI's chatbot.

Musk founded xAI in July 2023 with a core founding team drawn from OpenAI, Google DeepMind, Microsoft Research, Tesla, and other well-known companies. To compete head-on with ChatGPT, Musk has devoted most of his energy to xAI since then.

To make sure the supercomputing center got built, Musk publicly stated that he would personally lead and oversee its development.

Site selection is undoubtedly crucial for building a supercomputing center.

According to Forbes, Musk's team held talks with seven or eight cities before settling on Memphis, Tennessee, in March, drawn by the city's abundant electricity supply and its ability to build quickly.

The supercomputing center also demands immense resources. Beyond core hardware such as NVIDIA chips, the facility may draw up to 150 megawatts of power, roughly the demand of 100,000 households, placing a significant burden on the local area.

Beyond electricity, the center's cooling system is estimated to need 1.3 million gallons of water per day, drawn daily from Memphis's main water source.

This sparked discontent among Memphis residents and environmental organizations, who jointly issued an open letter protesting: "We must consider how an industry consuming such vast amounts of energy will further impact already polluted and overburdened communities."

To move the project forward, xAI pledged to upgrade Memphis's public infrastructure in support of the supercomputing center, including building a new substation and a wastewater treatment facility.

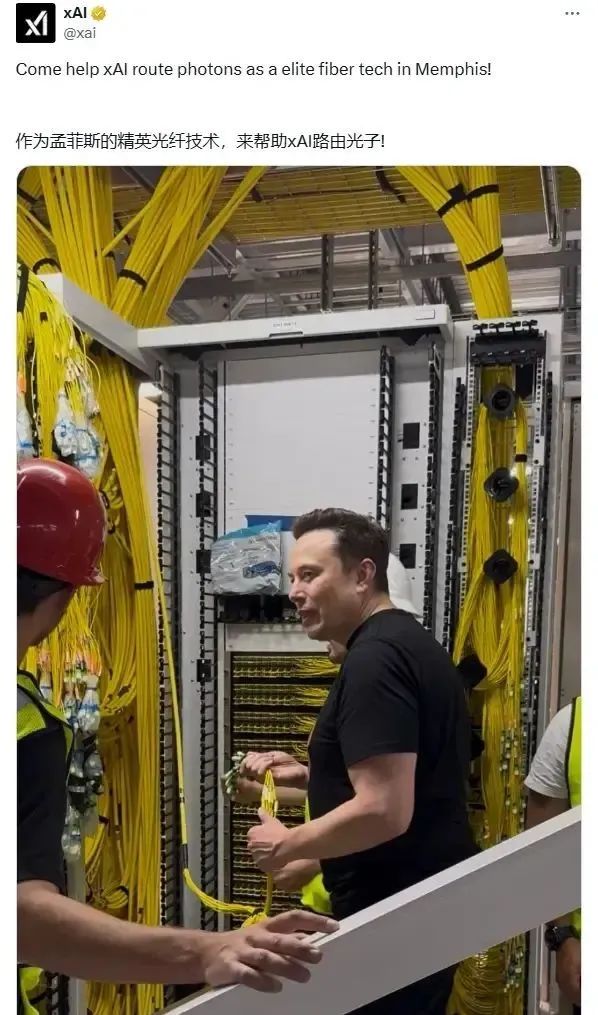

Musk in the Supercluster

Musk has spent a staggering sum on the supercomputing center. By one cost estimate, each NVIDIA H100 GPU costs $30,000 to $40,000, so the 100,000 GPUs alone could cost as much as $4 billion (over 29 billion RMB).

Even as the world's richest man, Musk struggled to bear the enormous financial burden