Boosting Speed by Dozens of Times: Reshaping AI Computing Clusters with Photonic Computing

11/04 2024

Preface:

To achieve superior AI capabilities, ultra-large-scale models are required. Training these models necessitates the coordination of thousands, or even tens of thousands, of GPUs.

This poses several challenges: high energy consumption due to the large number of GPUs, communication latency between computing cards, and latency and computing power loss between computing clusters.

What if we use light for computation and transmission?

Author | Fang Wensan

Image Source | Network

Photonic computing startup Lightmatter

Lightmatter recently announced a $400 million funding round, which it will use to tackle the bottlenecks of modern data centers.

As an innovative company in the field of optics, Lightmatter has successfully applied its optical technology to AI computing clusters, achieving a leap in performance.

Conventional electrical interconnects are increasingly a bottleneck for data movement. Lightmatter's optical technology instead uses photons to transmit and process data at the speed of light, which significantly reduces transmission latency and improves the operating efficiency of the entire computing cluster.

This breakthrough is the result of long-term research and development by the Lightmatter team. They have worked on key areas such as optical chip design and optical communication protocols, overcoming numerous technical challenges to integrate optical technology with AI computing clusters.

Lightmatter was founded in 2017 by Nicholas Harris, Darius Bunandar, and Thomas Graham. In 2012, Harris, a member of the Quantum Photonics Laboratory at MIT, and his collaborators built a "programmable nanophotonic processor" (PNP): an optical processor based on silicon photonics that can perform matrix transformations on light.
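
As a rough illustration of what "matrix transformations on light" can mean, the sketch below models an idealized Mach-Zehnder interferometer, a common building block of programmable photonic circuits, as a 2x2 matrix acting on the complex amplitudes of two optical modes. The function names and the phase-shifter layout are assumptions made for this example, not a description of the PNP's or Lightmatter's actual design.

```python
import cmath
import math


def matmul2(a, b):
    """Multiply two 2x2 matrices stored as nested lists."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
        for i in range(2)
    ]


def mzi(theta, phi):
    """Transfer matrix of an idealized, lossless Mach-Zehnder interferometer.

    Hypothetical layout: input phase shifter (phi), 50:50 beam splitter,
    internal phase shifter (theta), second 50:50 beam splitter.
    """
    s = 1 / math.sqrt(2)
    splitter = [[s, 1j * s], [1j * s, s]]
    internal = [[cmath.exp(1j * theta), 0], [0, 1]]
    external = [[cmath.exp(1j * phi), 0], [0, 1]]
    return matmul2(splitter, matmul2(internal, matmul2(splitter, external)))


def apply(matrix, amplitudes):
    """Apply a 2x2 matrix to the complex amplitudes of two optical modes."""
    return [
        matrix[i][0] * amplitudes[0] + matrix[i][1] * amplitudes[1]
        for i in range(2)
    ]


def powers(amplitudes):
    """Optical power in each mode is the squared magnitude of its amplitude."""
    return [abs(a) ** 2 for a in amplitudes]


# Light enters only the first waveguide; theta sets how it is split.
for theta in (0.0, math.pi / 2, math.pi):
    out = apply(mzi(theta, 0.0), [1.0, 0.0])
    print(f"theta={theta:.2f}  output powers={[round(p, 3) for p in powers(out)]}")
```

Changing `theta` steers the light between the two outputs while the total power stays constant, because the matrix is unitary. Meshes of such elements can be composed to realize larger matrices.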

Photonic Computing Reshapes AI Computing Clusters

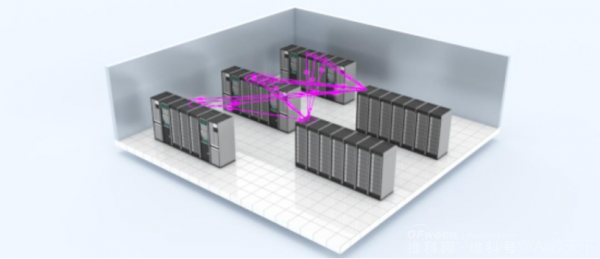

Lightmatter has photonic computing units as well as optical chip packaging and transmission technologies. Together, these let it raise the computational power and efficiency of an entire AI computing cluster while reducing power consumption.

Lightmatter's optical interconnect layer allows hundreds of GPUs to work synchronously, greatly reducing the complexity and cost of training and running AI models.

With the rapid development of AI technology, the data center industry is experiencing unprecedented growth. However, simply increasing the number of GPUs is not a solution.

High-performance computing experts have long pointed out that if the nodes of a supercomputer are idle while waiting for data input, the speed of the nodes becomes irrelevant.
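
The point can be made with simple arithmetic. The sketch below assumes a deliberately simplified model in which each step consists of compute time plus non-overlapped time spent waiting for data. The function names and the numbers are illustrative, not measurements.

```python
def step_time(compute_s, wait_s):
    """Time per step when waiting for data is not overlapped with compute."""
    return compute_s + wait_s


def utilization(compute_s, wait_s):
    """Fraction of each step the node spends doing useful work."""
    return compute_s / step_time(compute_s, wait_s)


def speedup_from_faster_node(compute_s, wait_s, node_speedup):
    """Overall speedup when only the node gets faster and the wait stays fixed."""
    before = step_time(compute_s, wait_s)
    after = step_time(compute_s / node_speedup, wait_s)
    return before / after


# Hypothetical step: 1.0 s of compute, 1.0 s waiting on the interconnect.
print(f"utilization: {utilization(1.0, 1.0):.0%}")
for factor in (2, 10, 100):
    gain = speedup_from_faster_node(1.0, 1.0, factor)
    print(f"node {factor}x faster -> step only {gain:.2f}x faster")
```

In this model the overall gain can never exceed (compute + wait) / wait, however fast the node becomes, which is why the interconnect matters.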

The interconnect layer is crucial for integrating CPUs and GPUs into a massive computer, and Lightmatter has built the fastest interconnect layer to date using photonic chips developed since 2018.

Nick Harris, CEO and co-founder of the company, stated that ultra-large-scale computing requires more efficient photonic interconnect technology, which traditional Cisco switches cannot provide.

Currently, the leading technologies in the data center industry are Nvidia's NVLink and the NVL72 platform, but they still face bottlenecks in network speed and latency.

Lightmatter's photonic interconnect technology significantly enhances data center performance with pure optical interfaces capable of 1.6 terabits per second per fiber.

Harris noted that the development of photonic technology has surpassed expectations, and that after seven years of arduous research and development, Lightmatter is ready to meet market challenges.
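
For a sense of scale, the following sketch converts a per-fiber rate into transfer time. It assumes the quoted figure means 1.6 terabits per second and ignores protocol overhead and latency. The payload size and fiber counts are hypothetical.

```python
FIBER_RATE_BITS_PER_S = 1.6e12  # 1.6 Tb/s per fiber, as quoted in the article


def transfer_seconds(payload_bytes, fibers=1, rate_bits_per_s=FIBER_RATE_BITS_PER_S):
    """Ideal time to move a payload over a number of parallel fibers."""
    if fibers < 1:
        raise ValueError("at least one fiber is required")
    return payload_bytes * 8 / (fibers * rate_bits_per_s)


# Hypothetical payload: 100 GB of model state exchanged between nodes.
payload = 100e9
for fibers in (1, 8, 64):
    print(f"{fibers:>2} fiber(s): {transfer_seconds(payload, fibers) * 1e3:.1f} ms")
```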

Ultra-Fast Computation and Connectivity with Software Compatibility

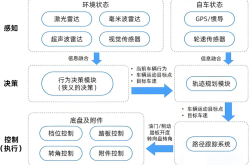

Lightmatter's products are divided into three parts: the photonic computing platform (Envise), chip interconnect product (Passage), and compatible software (Idiom).

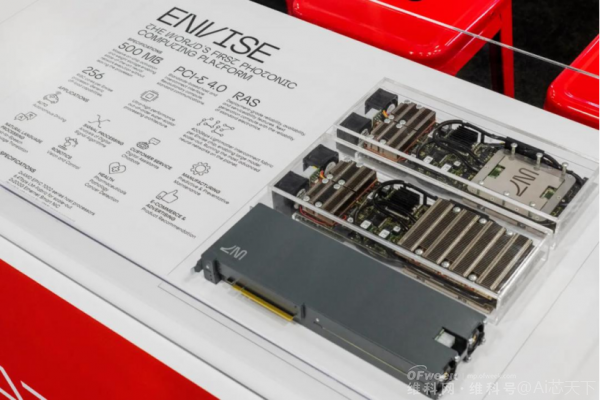

Envise: Billed as the world's first photonic computing platform. Each Envise processor has 256 RISC cores, provides 400 Gbps of inter-chip interconnect bandwidth, and supports the standard PCIe 4.0 interface for good compatibility.

The Envise processor computes with light traveling through waveguides, and each additional color (wavelength) of light source increases computational speed accordingly.

With the same computing core, using 8 colors of light improves computational performance by 8 times, and computational efficiency reaches 2.6 times that of a conventional computing core. When the number of computing cores and the number of colors increase together, computational performance can be boosted by dozens of times.
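
The scaling claim is multiplicative. Here is a minimal sketch, assuming performance grows linearly with both the number of light colors and the number of computing cores, as the figures above suggest. The function name and the core multiples are illustrative assumptions.

```python
def relative_performance(colors, core_multiple=1):
    """Performance relative to one core driven by a single color of light.

    Assumes ideal linear scaling in both factors, which real hardware
    may not achieve.
    """
    if colors < 1 or core_multiple < 1:
        raise ValueError("colors and core_multiple must be at least 1")
    return colors * core_multiple


# Same core, 8 colors of light.
print(f"1x cores with 8 colors -> {relative_performance(colors=8)}x")

# More cores and 8 colors together (hypothetical core multiples).
for cores in (2, 4, 8):
    gain = relative_performance(colors=8, core_multiple=cores)
    print(f"{cores}x cores with 8 colors -> {gain}x")
```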

Passage: An I/O technology that uses photons for chip interconnect. Any supercomputer consists of many small, independent computers. To perform optimally, they must constantly communicate with each other so that each core knows the progress of the others and they can jointly work through the extremely complex computational problems the supercomputer is designed to solve.

Lightmatter's technology utilizes waveguides rather than optical fibers to interconnect and transmit data between different types of computing cores on a large chip, providing extremely high parallel interconnect bandwidth.

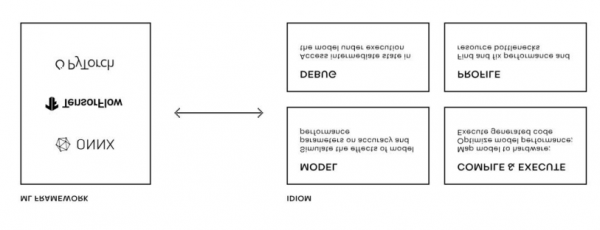

Idiom: A workflow tool that allows models built with frameworks such as PyTorch, TensorFlow, or ONNX to run directly on the Envise computing infrastructure without modifying the model files.

It also provides developers with a set of convenience tools, such as automatic virtualization of each Envise server, partitioning across multiple Envise servers, and per-user allocation of chip usage.

Market Competitive Landscape

Lightmatter's photonic interconnect technology has not only enhanced data center performance but also attracted the attention of many large data center companies, including Microsoft, Amazon, xAI, and OpenAI.

This $400 million Series D funding round values Lightmatter at $4.4 billion, making it a leading company in the field of photonic computing.

However, Lightmatter is not the only company focused on photonic computing. Celestial AI raised $175 million in a Series C round in March this year. It primarily uses light for data movement within and between chips, similar to Lightmatter's Passage.

There are also many companies working in the field of photonic computing in the Chinese market, albeit on a smaller scale.

AI computing hardware companies in the Chinese market currently have an opportunity to overtake competitors. The situation is somewhat similar to that of the Chinese new energy vehicle industry, which did not follow the legacy system architecture of foreign giants but instead used new technologies to meet new demands and establish its own advantages.

On the one hand, AI computing is a relatively new field where overseas companies have a lead but have not built insurmountable barriers. On the other hand, AI is a form of specialized (domain-specific) computing, and there are many open-source computing architectures suitable for it.

As long as Chinese companies can develop some proprietary IP and leverage their strong engineering capabilities, they have a good chance of developing computing hardware that is at least comparable to that of overseas companies.

Conclusion:

In the future, Lightmatter will not only continue to optimize interconnect technology but also develop new chip substrates to further enhance the performance of photonic computing. Harris predicts that interconnect technology will become the core of Moore's Law in the next decade.

Content Source: AlphaFounders; Anten: A New Era of Photonic Computing: Lightmatter Raises $400 Million to Lead the AI Data Center Revolution