Large Models: Navigating Intensifying Competition to Find an Exit Strategy

12/31 2024

Large models kept evolving throughout 2024, from the groundbreaking Sora at the start of the year to the recent o3, with a steady stream of improved models in between. Does this mean the sector has entered a phase of intensifying competition?

To answer this, we first need to define "intensifying competition": a scenario in which an industry, after reaching a certain maturity, falls into a "high-level equilibrium trap" and cannot break out of "growth without development," ending in crisis and stagnation.

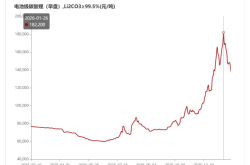

This year, the scaling law of large models has increasingly been challenged. Training clusters have expanded tenfold, from 10,000 to 100,000 GPUs, yet model intelligence has not scaled proportionately. There have been no groundbreaking applications, and model vendors have resorted to price wars, incurring losses to gain market share... These characteristics align with the definition of "intensifying competition."

The next logical questions are: Has this intensification plunged large models into crisis? And where lies the path out of this competition?

During periods of intensifying competition, an industry often struggles to maintain vitality and innovation momentum. The intensification of competition among large models has similarly pushed the sector into an adjustment phase.

One notable impact is cooling enthusiasm among the public and investors. In 2023, the pace of AI development was described as "a day in AI is like a year on Earth." The "Magnificent Seven" of the US stock market (Apple, Microsoft, Google's parent company Alphabet, Amazon, NVIDIA, Tesla, Meta) repeatedly hit new highs amid this fervor. Recently, however, that excitement has visibly faded.

OpenAI shareholders and service providers that access model APIs have publicly voiced dissatisfaction with the lack of significant advances in AI capability. OpenAI's recent 12-day release event largely focused on refining existing models, products, and technical roadmaps. It met expectations but offered few highlights and did little to strengthen the case for AGI. Ilya Sutskever, former Chief Scientist of OpenAI, said at NeurIPS 2024 that "pre-training as we know it will end," further dampening public enthusiasm.

Skepticism from academia and industry is a perilous sign, as historical AI winters have often stemmed from a loss of confidence and withdrawal of investments.

Another crisis signal is escalating competition among homogeneous products and an accelerating race to retire older ones.

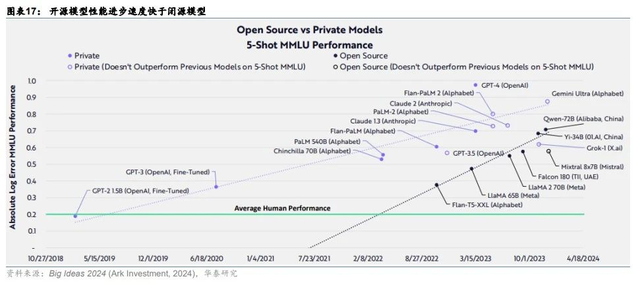

Competition among foundation models also intensified in 2024. First, the market is crowded with models whose performance is converging, particularly as the gap between open-source and closed-source models rapidly narrows, which leads to homogeneous competition.

Second, vendors are retiring their own models faster. For instance, GPT-3.5-Turbo has been retired and replaced by GPT-4o mini, and domestic model vendors have generally followed a similar pattern. Users prefer new models that offer more at no extra cost, which renders older models obsolete. After the release of GPT-4o mini, API usage doubled.

This fierce homogeneous competition leaves model vendors unwilling to cut investment in training new models while forcing them to lower token prices to survive price wars, which increases their financial burden. The outlook for large models is therefore less optimistic than it was in 2023, both in the external macro environment and in the operating conditions of individual companies.

At the model level, underlying technological routes and data bottlenecks cannot be effectively overcome in the short term. Therefore, seeking a business-oriented exit strategy has become imperative.

In 2024, we can observe the numerous challenges that intensifying competition among large models poses to business models.

The first is the cloud+API model, where incurring losses and engaging in price wars are far from optimal solutions.

Pay-per-use API calls are a primary monetization model for large models. Lowering token prices to attract more large model business to the cloud for long-term benefits is the basic logic behind price wars among cloud vendors. However, it currently appears that trading price for volume is ineffective.

The reason is that B-end customers care more about a vendor's long-term viability and model quality than about price. The cloud vendors that have successfully traded price for volume are therefore the ones that already had strong models. For example, after Baidu made its two main models, ERNIE Bot and ERNIE 3.0 Zeus, free, Baidu Intelligent Cloud's daily API calls increased tenfold within a month. Volcano Cloud, leveraging the Doubao large model family, also saw a significant surge in token calls, with some customers' token usage growing 5,000-fold. This suggests that new users gravitate toward top models, while existing users either do not consider replacing their current models or spread their spending across several top vendors, using the price cuts to try more models and ultimately keeping the most cost-effective ones.

Cloud vendors that have stayed out of the price wars have also done well in the B-end market. Huawei Cloud, which positions the Pangu large model as a "spearhead product," worked with industry partners this year to build coal, pharmaceutical, and digital intelligence solutions that have been reused across multiple enterprises in various verticals. Many industry users choose Huawei Cloud for its resilience to risk, continued investment in foundation models, and stable business operations.

These enterprises underscore that the fundamental success of the cloud+API model hinges on "quality over price."
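The arithmetic behind "trading price for volume" can be sketched in a few lines. This is a minimal illustration, assuming revenue is simply tokens multiplied by price per token; the function names and the example price cuts are hypothetical and are not figures from any vendor.

```python
def revenue(tokens_millions: float, price_per_million: float) -> float:
    """Pay-per-use revenue: token volume times unit price (simplified)."""
    return tokens_millions * price_per_million


def breakeven_volume_multiplier(price_cut: float) -> float:
    """Factor by which token volume must grow to keep revenue flat
    after cutting the per-token price by the fraction `price_cut`.

    A cut of 1.0 (making the model free) has no break-even volume,
    so it is rejected.
    """
    if not 0 <= price_cut < 1:
        raise ValueError("price_cut must be in [0, 1)")
    return 1.0 / (1.0 - price_cut)


# Hypothetical price cuts, for illustration only.
for cut in (0.5, 0.9, 0.97):
    factor = breakeven_volume_multiplier(cut)
    print(f"{cut:.0%} price cut -> volume must grow {factor:.1f}x to hold revenue")
```

Under this simplified model, the deeper the cut, the steeper the volume growth needed just to stand still, and a free model can never recover revenue from API calls alone. That is consistent with the point above that price cuts pay off only for vendors whose model quality can actually attract that volume.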

The second is the subscription model, which, due to intensifying competition among large models, faces low user stickiness and loyalty, resulting in a highly fragmented membership market.

Because large models evolve rapidly, new models are often superior in quality and cost-effectiveness, which makes users more inclined to wait and see. Meanwhile, many older models are no longer updated or are retired outright, so members are less willing to commit to a platform for the long term. Model vendors are therefore forced to keep attracting new users and find it hard to stop running acquisition campaigns. Customer acquisition costs stay high, and the campaigns themselves hurt the user experience, since they rely on frequent pop-up ads that disturb users. Multiple membership tiers and paid benefit packages add to users' decision fatigue. Newly acquired customers often switch to the free version or to cheaper competing products after a period of use, which leads to low long-term renewal rates.

It is evident that intensifying competition among large models makes it difficult for most model vendors to convince customers and developers to establish long-term trust relationships. This poses significant challenges for subsequent commercial monetization and value extraction.

To transcend intensifying competition, we must look outward for an exit strategy. The plethora of homogeneous large models has created a densely packed "quake lake." To escape this competition, it is crucial to dredge channels and alleviate congestion. Therefore, 2025 will be a year when large model commercial infrastructure becomes increasingly sophisticated, enabling large model users and developers to access them more conveniently through more comprehensive "water conservancy facilities."

There are several criteria for judging whether a large model is "outward-looking":

The first is the openness or compatibility of the model.

As mentioned earlier, during periods of intensifying competition, users are reluctant to rely too heavily on a single vendor or to be locked in to one model vendor for the long term. Models therefore need to be open and compatible. For example, Tencent's free resource package for its Hunyuan large model supports multiple models such as Hunyuan-pro, Hunyuan-standard, and Hunyuan-turbo, enabling third-party platforms and ISV service providers to offer customers flexible selection and switching between multiple models and model arenas, catering to end customers' diverse and multimodal needs.
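The kind of flexible switching described above can be sketched as a thin routing layer that hides each model behind one shared interface. This is a hedged illustration only: `ModelRouter`, `make_stub`, and the stub backends are hypothetical, and nothing here calls Tencent's or any other vendor's real API. The model names are reused from the text purely as labels.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional

# A backend is any callable that maps a prompt to a completion.
Backend = Callable[[str], str]


@dataclass
class ModelRouter:
    """Hypothetical registry that lets callers switch models by name."""
    backends: Dict[str, Backend] = field(default_factory=dict)
    default: Optional[str] = None

    def register(self, name: str, backend: Backend) -> None:
        self.backends[name] = backend
        if self.default is None:
            self.default = name  # first registered model becomes the default

    def complete(self, prompt: str, model: Optional[str] = None) -> str:
        name = model or self.default
        if name not in self.backends:
            raise KeyError(f"unknown model: {name!r}")
        return self.backends[name](prompt)


def make_stub(name: str) -> Backend:
    """Stand-in for a real client; it only tags the prompt with the model name."""
    def _backend(prompt: str) -> str:
        return f"[{name}] {prompt}"
    return _backend


router = ModelRouter()
for model_name in ("hunyuan-pro", "hunyuan-standard", "hunyuan-turbo"):
    router.register(model_name, make_stub(model_name))

print(router.complete("Summarize this contract."))
print(router.complete("Summarize this contract.", model="hunyuan-turbo"))
```

Because application code depends only on `complete`, swapping or adding a model is a one-line registration rather than a rewrite, which is the property that reduces lock-in to a single vendor.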

The second criterion is more detailed development tools.

Turning large model technology into productivity also requires finer-grained support, such as processing tools and workflows. For instance, OpenAI created three professional tools for Sora (Remix, Blend, and Loop) to support better video generation. Many Pro users pay $200 per month for these tools. Domestically, we have tested development tools from platforms such as ByteDance's Kouzi (Coze) development platform and Baidu's ERNIE Bot agent development platform, and they are already user-friendly.

The third criterion is "end-to-end" support for large model applications, from development to commercialization.

In 2024, no third-party AI application became a household name nationwide. On one hand, model capabilities still need improvement. Some AI agent platforms are flooded with low-quality, easily replicated personal agents that offer mediocre dialogue, comprehension, and multimodal task performance, and so have little commercial value. On the other hand, many developers are unsure how to commercialize AI applications, so they have not put much effort into building the products the market still lacks and meeting unmet demand. Platforms therefore need to give developers more commercial resources and support.

Ultimately, as the technological ceiling is difficult to break through in the short term, the situation of market saturation and homogeneous competition among large models will persist. For large models to achieve commercial success, the prerequisite is the success of users' and developers' businesses. This underscores the importance of a well-established commercial infrastructure.

To escape the "quake lake" of intensifying competition, all model vendors must answer the following question in 2025: If large models are water and electricity, what exactly will users and developers gain when they flip the switch?