Racing to Create AI-Era Video Editing: The Hidden Ecosystem Battles and Dawn of Commercialization for Domestic AI

02/18 2025

Content/Liu Ping

Editor/Yibai

Proofreader/Yong'e

DeepSeek's achievement of GPT-4-level performance with a fraction of the computing power sends a clear message to everyone in the text-to-video industry: Chinese AI innovation must move beyond technological replication and pursue original paradigms. The real breakthrough may lie in letting scenarios define the technology, with research and development tightly integrated with industrial needs so that Chinese companies can open new tracks beyond the Sora paradigm. Just as the MoE architecture reshaped large models, the next leap in text-to-video could come from the synergy between technology and industrial needs.

This Spring Festival, DeepSeek went viral globally, reminiscent of the stir caused by OpenAI's release of Sora.

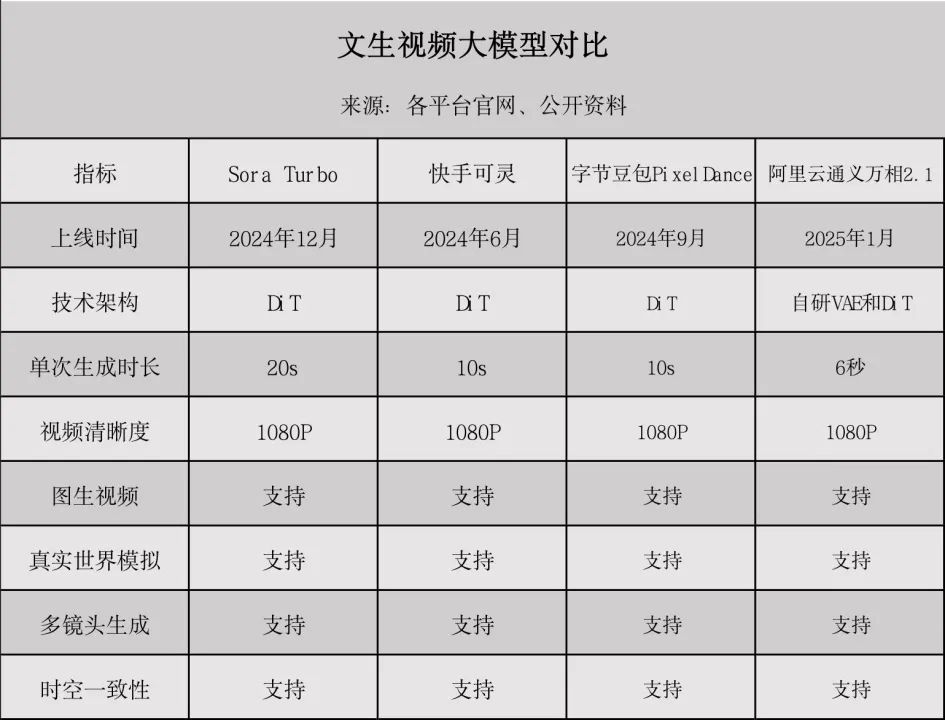

On February 15, 2024, OpenAI's text-to-video model, Sora, debuted and instantly captivated the world with its lifelike effects, intricate camera transitions, and ability to generate one-minute videos. The breakthrough spurred domestic players to rush into the field, with models like Kuaishou's Keling, ByteDance's Jimeng, Alibaba's Tongyi Wanxiang, and Tencent's Hunyuan following suit. Behind them lies a vast commercial opportunity, on the scale of Jianying's 800 million monthly active users and nearly 10 billion in revenue.

However, after a year of intense competition, manufacturers still follow the internet-era playbook of small steps, quick iterations, and trial and error. Recently, Kuaishou's Keling released version 1.6 with improved semantic understanding and text responsiveness, while keeping its pricing unchanged. A month later, Alibaba Cloud unveiled Tongyi Wanxiang 2.1, featuring comprehensive upgrades in complex movements, adherence to physical laws, and artistic expression, along with a first-of-its-kind ability to render Chinese characters in generated videos.

DeepSeek's approach of achieving GPT-4-level results with minimal GPU resources and low deployment costs offers text-to-video manufacturers a model for resolving their dilemmas and shifting the competitive landscape.

If 2024 marked the initial exploration of text-to-video manufacturers, then 2025, amid the rapid evolution of AIGC technology and fierce competition among giants, holds the promise of scaling from 1 to 10, and even 100. Who will emerge as the pioneer leading the new wave of text-to-video? And which models will join their parent companies' lists of failed products?

Part 1

Innovation Dilemmas Amid Consensus on Technical Routes

Chasers Struggle to Break the Duration Curse

When OpenAI unveiled Sora on February 15, 2024, this AI model capable of generating 60-second high-quality videos not only redefined industry standards for text-to-video but also inadvertently set a benchmark for technological pursuit in China's AI sector.

While the traditional U-Net architecture runs forward and backward propagation over complete images, Sora's Transformer-based approach trains on patches, which is said to reduce computational costs by over 40%. This efficiency gain offers hope to domestic manufacturers with limited computing power. Just as DeepSeek achieves GPT-4-level performance with a fraction of the GPU resources, a similar "shortcut" seems plausible in the text-to-video domain.
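To make the patch idea concrete, the rough sketch below counts how many spacetime patches (tokens) a clip is cut into and how the cost of full self-attention grows with duration. The patch sizes, frame rate, and resolution are illustrative assumptions, since Sora's actual settings are undisclosed, and real systems typically patchify a compressed latent rather than raw pixels.

```python
from math import ceil


def spacetime_token_count(frames, height, width,
                          patch_t=2, patch_h=16, patch_w=16):
    """Number of spacetime patches (tokens) a clip is cut into.

    Patch sizes are hypothetical defaults, not Sora's settings.
    """
    return (ceil(frames / patch_t)
            * ceil(height / patch_h)
            * ceil(width / patch_w))


def attention_pairs(tokens):
    """Full self-attention compares every token with every other token."""
    return tokens * tokens


FPS = 24  # assumed frame rate

# Two clips at the same assumed resolution, differing only in duration.
short_clip = spacetime_token_count(frames=5 * FPS, height=480, width=848)
long_clip = spacetime_token_count(frames=10 * FPS, height=480, width=848)

# Ratio of attention work between the 10-second and 5-second clips.
cost_ratio = attention_pairs(long_clip) / attention_pairs(short_clip)

print(short_clip, long_clip, cost_ratio)
```

Under these assumptions, doubling the duration doubles the token count but quadruples the attention work, which is one plausible reason longer single-pass generations are so hard to reach.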

From Kuaishou's Keling to Alibaba's Tongyi Wanxiang, and from ByteDance's Jimeng to Tencent's Hunyuan, domestic manufacturers have collectively embarked on a technological arms race to "Replicate Sora."

However, mastering Sora's core technology, the DiT architecture (Diffusion+Transformer), does not guarantee a smooth replication. The critical gap lies in the completeness of the technological system. Beyond the disclosed technological route, undisclosed details like parameter scale and algorithm design still exhibit generational gaps.

Domestic manufacturers mainly compete based on factors like duration and video resolution, rather than breakthroughs in computing power, algorithms, and data.

This chase, which began with imitation, has gradually revealed deeper innovation dilemmas. RealAI, jointly incubated by Tsinghua University, Ant Group, and Baidu, debuted Vidu, a model billed as generating 16-second videos, in April 2024, but offered only 4-second and 8-second videos upon official launch in July.

Zhipu AI released its text-to-video model Qingying in July 2024, also based on the DiT architecture, but it could only generate 6-second videos, a limit raised to 10 seconds in November. From RealAI's shrinking promise to Zhipu AI's arduous climb, domestic models remain stuck at clip lengths measured in seconds.

Even Kuaishou's Keling, a leading player, reaches 3-minute videos only by stitching clips together through its video extension function, while single-generation videos still hover around the 10-second mark. The dilemma looks more ironic after the release of Sora Turbo, for which OpenAI itself cut the video duration to 20 seconds, implying that the initial one-minute videos were meticulously curated showpieces.

In generative AI, there's a vast gap between the transparency of technological routes and implementation capabilities, revealing a brutal reality: pure imitation of routes is insufficient to overcome core challenges like physical simulation and spatiotemporal continuity. Like the "process catch-up paradox" faced by domestic chips, the text-to-video field also grapples with "diminishing returns from parameter stacking."

When the industry falls into a homogeneous competition trap, so-called technological breakthroughs often degrade into a numbers game of parameter tuning.

Part 2

Data Scarcity and Technological Ethics: The Dual Challenges of Building Ecosystem Barriers

If technological routes are the visible battlefield, then data competition is the hidden war beneath the surface. In September 2024, iQIYI's copyright dispute with MiniMax exposed the dark side of large model training—"data scarcity."

Algorithms, computing power, and data are the three pillars supporting AI text-to-video models, and together they determine technological breakthroughs. Data is the raw material for model training: the more data, the more powerful the model. Without a stable data source, large model training is impossible. After the "Hundred Models War," high-quality data has become increasingly expensive and scarce.

Even mighty OpenAI cannot escape the "data scarcity" plight. In 2023, OpenAI angered mainstream European and American media by using their data without authorization, eventually resolving the issue through financial settlements and paid agreements with Politico, Time, The Financial Times, and others. The same year, OpenAI CEO Sam Altman publicly acknowledged that AI companies would soon exhaust all internet data.

In August 2024, over 100 YouTube streamers collectively sued OpenAI for transcribing millions of videos without authorization to train large models. When asked whether YouTube videos were used to train Sora, OpenAI's former CTO Mira Murati declined to comment.

As public internet data nears exhaustion, platforms with private data pools possess a competitive edge. This is believed to be one reason why manufacturers like Kuaishou, ByteDance, Alibaba, and Tencent, which own long and short video platforms, are scrambling to enter the field.

Short video platforms like Kuaishou and Douyin are naturally rich in data, having accumulated massive amounts of varied video content over the years. Alibaba's Youku, as one of China's three major video platforms, also sits on high-quality video resources.

Google's text-to-video model Veo 2 is considered more powerful than Sora. Technical details aside, Google's ownership of YouTube gives it far fewer data sourcing challenges than OpenAI.

While all players remain at roughly the same technological level, with none able to break through the constraints of computing power, algorithms, and data, competition in the text-to-video sector has become a confrontation between platform-level ecosystems.

Part 3

Commercialization Vanguard Battle: Exploring the Path from Traffic Frenzy to Lasting Value

A gunfight version of "Empresses in the Palace," a martial arts version of "Dream of the Red Chamber," pandas doing housework... These "magically modified" and highly creative short videos on Kuaishou, Douyin, and Xiaohongshu have repeatedly set new view records.

Creators have reaped the first benefits of increased traffic, but as with the commercialization of general-purpose large language models, the window for text-to-video is short.

In 2025, text-to-video is poised for a paradigm shift from technological adoration to commercial rationality.

Sora Turbo's subscription-based pricing sets the industry benchmark. ChatGPT Plus users paying $20 per month can use Sora directly but are limited to 50 videos at 480p, or fewer at 720p, each 5 seconds long. ChatGPT Pro users paying $200 per month get more generations, higher resolutions, and videos of up to 20 seconds.
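As a back-of-the-envelope check on the Plus tier figures quoted above, the sketch below works out the implied price per clip and per second of generated video. It assumes a subscriber uses the full 480p quota, so the results are lower bounds on the effective price; the function name is purely illustrative.

```python
def cost_per_second(monthly_fee_usd, max_clips, clip_seconds):
    """Lowest possible price per second of video if the whole quota is used."""
    total_seconds = max_clips * clip_seconds
    return monthly_fee_usd / total_seconds


# Plus tier as described in the text: $20 per month, 50 clips, 5 seconds each.
plus_per_clip = 20 / 50
plus_per_second = cost_per_second(20, 50, 5)

print(f"${plus_per_clip:.2f} per clip, ${plus_per_second:.2f} per second")
```

Any unused quota, or a switch to 720p with its smaller allowance, pushes the effective price above these floor values.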

Chinese manufacturers are actively exploring localized monetization paths. Kuaishou offers a free quota plus three monthly subscription tiers: 66 yuan, 266 yuan, and 666 yuan. Users receive a certain number of inspiration points for free upon login and need to subscribe for more points to continue generating videos.

Perhaps to foster a thriving content ecosystem, on October 18, 2024, Kuaishou's Keling launched the first phase of the "Future Partner Program," introducing a one-stop AIGC ecosystem cooperation platform to lower creation barriers for creators. However, this consumer-facing (2C) model faces dual challenges: individual users' willingness to pay has an obvious ceiling, while professional creators are constrained by platform traffic control strategies.

Because AI videos are prone to copyright disputes, and an overabundance of them can annoy users and disrupt the community ecosystem, manufacturers are exploring diverse monetization paths to break the deadlock.

Douyin collaborated with Bona on the AI sci-fi short series "Sanxingdui: Revelation of the Future," while Kuaishou joined forces with directors like Jia Zhangke and Li Shaohong to produce nine AIGC film shorts using Keling. However, the specific benefits remain to be seen.

Beyond film and television collaborations, e-commerce is a crucial testing ground for business-facing (B-end) commercialization. For instance, Alibaba offers exclusive image-to-video generation to platform merchants to drive marketing, while Keling provides beta test spots to MCN agencies like Yaowang Technology to accelerate technology application and promotion.

These explorations reveal a new value logic: when the technology race reaches a stalemate, the ability to deploy in real scenarios becomes what pulls a player ahead.

Part 4

Industry Endgame Reflection: Stepping Out of OpenAI's Paradigm "Shadow"

DeepSeek broke computing power bottlenecks with the MoE architecture, and the text-to-video field similarly requires architectural-level changes.

The fusion of multimodal large models and neural rendering, the quantum computing acceleration of diffusion models, or even cognitive architectures inspired by brain science—these frontier explorations, though risky, offer the only path out of homogeneous competition.

Only when technological breakthroughs, data ecosystems, and commercial deployment form a positive cycle can Chinese AI enterprises truly forge their own competitive advantages.

In this competition blending reality and fiction, the ultimate winners will not be holders of specific technical parameters but rule-makers who redefine the relationship between video generation and the physical world.

Just as the smartphone revolution was more than a communication tool upgrade, the ultimate value of text-to-video lies in creating a new paradigm for human cognition to interact with the digital world. This path is long, but it is precisely this adherence to long-term vision that nurtures truly world-changing innovations.