Cloud Vendors Leveraging DeepSeek Traffic Must Navigate the Risks of Losses

02/24 2025

Since the Spring Festival, cloud computing has become a hot topic in the capital market.

However, this time, it’s not the cloud giants like Alibaba Cloud and Tencent Cloud leading the charge but rather small and medium-sized cloud vendors.

For instance, QingCloud's stock hit the 20% daily limit for six consecutive trading days from February 5 to February 12, jumping from 34.07 yuan per share to 101.72 yuan, and peaked at 115.31 yuan on February 14.
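
As a rough check on these figures, the sketch below compounds a 20% daily limit six times from 34.07 yuan. It assumes each day's limit-up price is the previous close multiplied by 1.2 and rounded to the nearest 0.01 yuan; that rounding rule and the helper name `limit_up_path` are illustrative assumptions, not exchange documentation.

```python
from decimal import Decimal, ROUND_HALF_UP


def limit_up_path(start_price: str, days: int, limit: str = "0.20") -> list[Decimal]:
    """Return the closing price after each of `days` consecutive limit-up sessions.

    Assumption: each day's limit-up price is the previous close times (1 + limit),
    rounded half-up to the nearest 0.01 yuan.
    """
    price = Decimal(start_price)
    factor = Decimal(1) + Decimal(limit)
    path = []
    for _ in range(days):
        price = (price * factor).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
        path.append(price)
    return path


path = limit_up_path("34.07", 6)
print([str(p) for p in path])
print(f"Cumulative gain: {path[-1] / Decimal('34.07') - 1:.1%}")
```

Under these assumptions the sixth limit-up price lands at 101.72 yuan, matching the figure above, a cumulative gain of roughly 199%.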

UCloud, long a laggard in the capital market, surged after this year's Spring Festival with four 20% limit-up sessions. In just eight trading days its stock price rose 177%, lifting its market value to 17.3 billion yuan.

Kingsoft Cloud's Hong Kong-listed shares rose from their September 2024 low to a high of HK$10.88 on February 14, 2025, a more than eightfold increase.

Previously, these small and medium-sized cloud vendors hovered on the brink of survival, struggling with sluggish performance and consistent losses.

This time, the release and open-sourcing of DeepSeek R1 has lowered the cost of training and deploying large models and fueled a surge in demand for AI applications. Riding that wave, these vendors have rallied sharply in the capital market and have truly stepped onto the stage of AI large models.

Undoubtedly, large models are the "traffic gateway" for cloud vendors, who have always been direct beneficiaries of their adoption. Compared with small and medium-sized vendors, cloud giants with more abundant computing resources have moved more aggressively and comprehensively in the large model race.

After all, when customers use models like DeepSeek, they consume computing power and data, which in turn drives the sales of other basic cloud products (computing, storage, networking, databases, software).

Therefore, since DeepSeek's explosion at the beginning of the year, in addition to small and medium-sized cloud vendors, Alibaba Cloud, Huawei Cloud, Tencent Cloud, Volcano Engine, Baidu Intelligent Cloud, and telecom operators like China Telecom Tianyi Cloud, China Mobile Cloud, and China Unicom Cloud have all quickly adopted DeepSeek.

DeepSeek clearly gives small and medium-sized cloud vendors a chance to turn things around with the help of new technology, although it is unlikely to reshape the current cloud computing market landscape.

In terms of traffic, however, small and medium-sized cloud vendors have indeed taken some share from the cloud giants, especially among small and medium-sized enterprises and individual users, since they offer better value for money.

Nevertheless, looking at the cloud computing market as a whole, Minsheng Securities analysts noted that as more and more public cloud vendors embrace DeepSeek, their underlying computing resources are back at the same starting line, which shifts the focus of competition to the depth of each vendor's computing power pool and the breadth of its user coverage.

After all, while large models are the "traffic gateway" for cloud vendors, what ultimately determines the winner is the depth and breadth of the industrial implementation of large models. From the current situation, the advantages of cloud giants are undoubtedly more pronounced.

At the same time, DeepSeek's "low cost" has sparked a new round of price wars. Cloud vendors must stay vigilant: ever-lower prices may eventually push MaaS (Model as a Service) into the same low-margin trap that SaaS fell into.

DeepSeek Brings Small and Medium-sized Cloud Vendors to the Forefront

"Previously, cloud computing was primarily built around CPUs, with GPUs serving as accessories. Nowadays, GPUs have become an independent business segment," Wang Wei, CTO of Cloud Uranium Technology, said to Guangzhui Intelligence at the end of last year. "This business segment primarily helps customers manage and operate computing power."

After years of development, CPU-based cloud computing has become very mature, and large and small cloud vendors alike mostly provide CPU-based computing services. In the era of AI large models, however, CPUs can no longer meet the computing demands of large model pre-training, and GPUs have become the mainstream chips for AI workloads. As a result, NVIDIA, which built its business on GPUs, has flourished.

Comparatively speaking, cloud giants entered the GPU race earlier and invested more.

Around 2016, with the rapid development of deep learning technology and the sharp increase in demand for GPUs, cloud giants led by Alibaba Cloud and Tencent Cloud began to increase their investment in the GPU field, expand the scale of GPU servers, and launch a series of GPU cloud service products.

Constrained by limited financial strength and other factors, small and medium-sized cloud vendors only began building out GPU capacity at scale after the end of 2022, alongside the rise of domestic GPU chips.

As a result, small and medium-sized cloud vendors widely lack high-end GPUs as well as large-scale, high-performance computing clusters, which leaves them unable to serve large customers, especially those focused on large model pre-training.

Moreover, it is hard for small and medium-sized cloud vendors to convert their CPU-centric business foundations and technical expertise directly into intelligent computing services. They have not accumulated sufficient GPU expertise of their own, and the overall GPU technology stack is still immature. This also makes it difficult for them to offer the comprehensive, one-stop AI cloud solutions that large cloud vendors provide.

This limits their capabilities in the AI training phase. As a result, in the earlier phase of the large model race, small and medium-sized cloud vendors never truly stepped onto the "stage" of AI large model services and only touched its peripheral businesses.

However, the popularity of DeepSeek has provided small and medium-sized cloud vendors with an opportunity to get on the "stage".

At the beginning of 2025, DeepSeek proposed a technical path of "leveraging a small force to achieve great results."

It claims to have trained a model with performance close to OpenAI's flagship model using only 2,048 NVIDIA H800 chips at a training cost of US$5.5 million, roughly one-tenth of what other giants spend, while its inference cost is only 1/30 that of OpenAI's o1.

At the same time, compared with other large models, advances such as DeepSeek's model distillation have let AI inference break through hardware limits, extending deployment from high-end GPUs to consumer-grade GPUs and adapting it to many domestic GPU chips.

This lets small and medium-sized cloud vendors offer AI services to customers without heavy investment in high-end hardware, relying on domestic GPU chips alone.

Furthermore, on the cluster side, most existing hundred-card-scale clusters previously could not meet the demands of large model inference. Many enterprises had also stockpiled computing resources at the scale of tens to hundreds of cards, leaving many clusters idle.

With the optimizations and acceleration in DeepSeek's technology, however, tasks that were previously hard to handle can now be completed on hundred-card-scale clusters. This raises the economic value of small clusters and lets small and medium-sized cloud vendors use their limited computing resources more efficiently to serve customers.

More importantly, the pricing of DeepSeek’s various model APIs is quite affordable.

For example, DeepSeek-R1's API is priced at 1 yuan per million input tokens (cache hit) and 16 yuan per million output tokens. By contrast, OpenAI's o3-mini charges US$0.55 (about 4 yuan) per million input tokens (cache hit) and US$4.40 (about 31 yuan) per million output tokens.

Recently, after its promotional period ended, DeepSeek-V3's prices rose from 0.1 yuan per million input tokens (cache hit) and 2 yuan per million output tokens to 0.5 yuan and 8 yuan respectively, yet they remain lower than those of other mainstream models.
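
To see what these per-million-token prices mean for an actual bill, the sketch below prices one hypothetical workload (10 million input tokens and 2 million output tokens) under each rate quoted above. It assumes every input token is a cache hit, since that is the only input price given here; the workload size and the names `PRICES` and `workload_cost` are illustrative.

```python
# Per-million-token prices in yuan, as quoted above (input prices are cache hits).
PRICES = {
    "DeepSeek-R1": {"input": 1.0, "output": 16.0},
    "o3-mini": {"input": 4.0, "output": 31.0},  # converted from US$0.55 / US$4.40
    "DeepSeek-V3 (promo)": {"input": 0.1, "output": 2.0},
    "DeepSeek-V3 (current)": {"input": 0.5, "output": 8.0},
}


def workload_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the bill in yuan for one workload, billed per million tokens."""
    rates = PRICES[model]
    return (input_tokens / 1_000_000) * rates["input"] + (output_tokens / 1_000_000) * rates["output"]


# Hypothetical workload: 10M input tokens (all assumed cache hits) and 2M output tokens.
costs = {model: workload_cost(model, 10_000_000, 2_000_000) for model in PRICES}
for model, cost in costs.items():
    print(f"{model:<22} {cost:8.2f} yuan")

print(f"o3-mini / DeepSeek-R1: {costs['o3-mini'] / costs['DeepSeek-R1']:.2f}x")
print(f"V3 current / V3 promo: {costs['DeepSeek-V3 (current)'] / costs['DeepSeek-V3 (promo)']:.2f}x")
```

On this mix, o3-mini costs about 2.4 times as much as DeepSeek-R1, and the end of V3's promotion raises the same bill about 4.2 times. The ratios shift with the input/output mix, because output tokens carry most of the cost here.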

The affordable and easy-to-use DeepSeek has also driven more and more small and medium-sized enterprises and individuals to use cloud services to deploy and run AI models.

The explosion of small AI applications has led more small and medium-sized enterprises and individual users to choose the more flexible, cost-effective services of small and medium-sized cloud vendors, bringing these vendors abundant business opportunities and a broad market.

In the past, unable to afford high computing costs, small and medium-sized cloud vendors struggled to enter large model services. Now, with the help of DeepSeek, they can run such businesses at lower cost, expand into the higher-margin AI computing service market, and have a chance to overtake their larger rivals.

Going All In on DeepSeek: The Cloud Giants' Ecosystem Battle

For small and medium-sized cloud vendors with a limited number of training cards, DeepSeek's training approach offers a way into AI large model services and a source of new revenue growth.

For cloud giants, beyond "riding the traffic," what matters more is the battle for the cloud ecosystem and for a say in future AI infrastructure.

Therefore, beyond offering DeepSeek model services at the MaaS layer, cloud giants have adapted DeepSeek across essentially their entire stack, from the underlying infrastructure to upper-layer applications.

Industry insiders also stated that although cloud vendors are now accessing DeepSeek, "the real competition is not in the access list but in the deep adaptation of the model and cloud architecture."

After all, amid competition among many players, a cloud vendor can draw more enterprises and developers into its ecosystem only if DeepSeek runs with better performance and efficiency on its platform than on rivals' platforms, and is well matched to its hardware and software so as to avoid excessive resource consumption.

In this process, each cloud giant has taken a different development path based on its core advantages to achieve differentiated strategies.

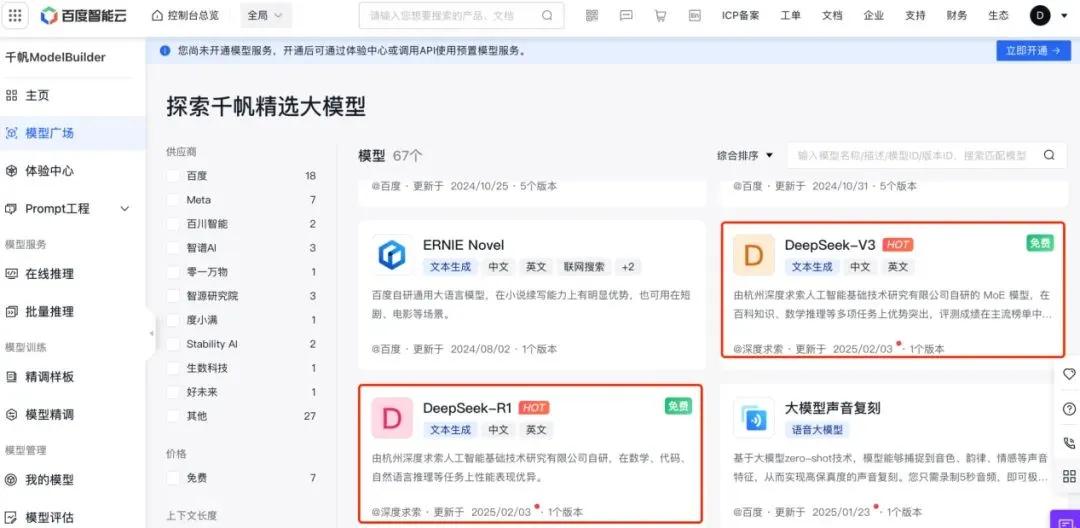

Baidu Intelligent Cloud focuses on cost-effectiveness and comprehensive services. Its Qianfan large model platform recently launched the DeepSeek-R1 and DeepSeek-V3 models together with industry-leading ultra-low pricing. Baidu Intelligent Cloud's underlying 10,000-card cluster built on the Kunlun Chip P800 can provide stronger computing support for enterprises deploying DeepSeek, and Qianfan ModelBuilder's model development toolchain offers one-click deployment, distillation, and other capabilities that help enterprise customers make better use of DeepSeek.

Four of Baidu Intelligent Cloud's large model application products, Keyue, Xiling, Yijian, and Zhenzhi, have also officially launched new versions integrated with DeepSeek. Meanwhile, Baidu Intelligent Cloud is accelerating the adaptation and verification of DeepSeek in industry applications for finance, transportation, government affairs, automotive, healthcare, and industrial manufacturing.

Huawei Cloud ties DeepSeek to its domestic computing power chain through Ascend Cloud Services. Recently, SiliconCloud, the large model cloud service platform of domestic computing power provider SiliconFlow, launched full-featured versions of DeepSeek-R1 and V3 on Huawei Cloud's Ascend Cloud, taking the lead in deploying DeepSeek models on domestic chips, reducing inference time and cost, and achieving results comparable to models deployed on top-tier global GPUs.

Alibaba Cloud covers the entire model lifecycle with a "zero-code" process. It previously announced support for one-click deployment of DeepSeek-V3 and DeepSeek-R1, letting users go from training to inference without writing cumbersome code. Alibaba Cloud also said that, for more cost-effective integration of the DeepSeek series with existing businesses, users can deploy the distilled DeepSeek-R1-Distill-Qwen-7B.

Tencent Cloud emphasizes developer friendliness with "3-minute deployment." It previously announced that DeepSeek-R1 can be deployed to Tencent Cloud HAI with one click, letting developers access and call the model in just 3 minutes. More recently, the Tencent Cloud TI platform began supporting enterprise-grade fine-tuning and inference for the "full range" of DeepSeek models, helping developers tackle "difficult data preprocessing," "high barriers to model training," and "complex online deployment and operations."

Although the paths taken by various cloud giants to access open-source large model products are different, their ultimate core goal is to reduce the barriers to AI deployment for enterprises and compete for developer ecosystems and customer growth.

After all, the essence of cloud platforms is to sell computing power and services, and their positioning is an "open ecosystem." Each large cloud service provider hopes to attract as many customers as possible into the ecosystem, which can drive more computing power consumption and sales of cloud product services.

Indeed, if a cloud platform restricted customers to its proprietary models, their choices would be severely constrained. The platform might lose customers who prefer not to use its in-house models, and, more importantly, those customers might move to more open cloud platforms.

Therefore, only by offering a variety of options can the diverse needs of customers be met, enabling cloud providers to reap benefits from multiple levels. DeepSeek's technological breakthrough in delivering low-cost, high-performance solutions has emerged as a highly cost-effective "weapon" in this competitive landscape.

For major cloud providers, whether dealing with open-source or closed-source large models, the fees charged for API calls are modest, and the revenue they generate constitutes a relatively small proportion of overall earnings.

Returning to the essence of cloud computing: only by using large models to capture traffic and continually expanding the ecosystem and user base can revenue growth across the whole cloud ecosystem truly take off.

Therefore, facing the future, cloud providers must transform DeepSeek's "low-cost" advantage into the "high returns" of cloud services to ultimately secure a dominant position in this AI competition.

Monthly Losses Exceeding 400 Million Yuan: Beware of MaaS Turning into SaaS

From both technical and application perspectives, cloud providers have deeply integrated with DeepSeek. However, the most pragmatic and effective strategy to swiftly capture this "traffic" is to engage in price wars or even rush to offer free quotas to users.

For instance, Tencent Cloud's TI platform introduced a developer gift pack that includes one-click deployment of the full range of DeepSeek models and free trials of selected models. Baidu Intelligent Cloud priced inference for this launch at as little as 30-50% of DeepSeek's official list price and also offers limited-time free service. Volcano Engine offered a two-week, limited-time 50% discount on the full-featured DeepSeek-R1.

Yet, amidst this fierce price war, cloud providers are also grappling with losses.

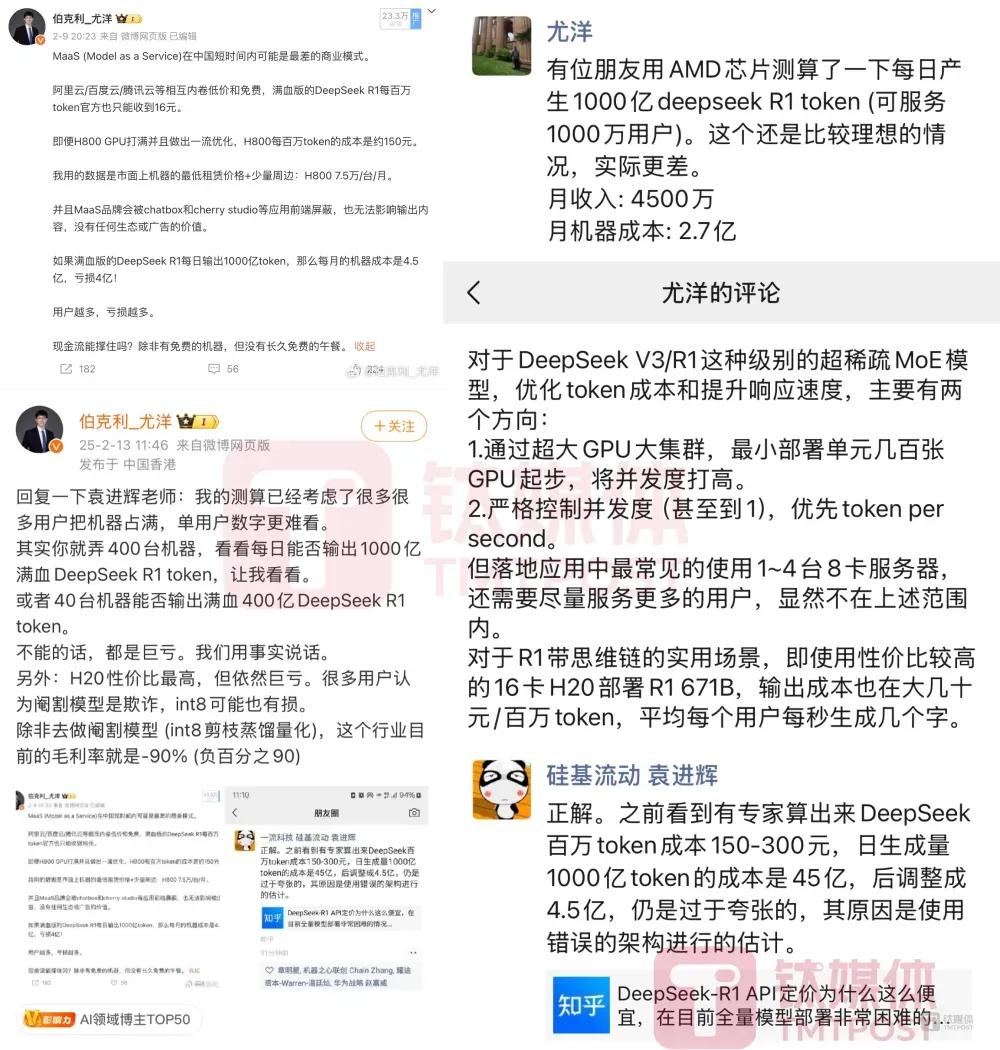

Recently, You Yang, founder of Luchen Technology, wrote on Weibo and WeChat Moments that major players are competing fiercely on low prices and free services, with the full-featured DeepSeek R1 charging only 16 yuan per million output tokens. If daily output reached 100 billion tokens, a DeepSeek-based service would lose at least 400 million yuan per month, and even on AMD chips the loss would exceed 200 million yuan.
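
The scale of these losses can be sanity-checked with simple arithmetic. The sketch below computes monthly revenue at 16 yuan per million output tokens and 100 billion output tokens per day, then backs out the monthly cost and break-even price implied by the quoted losses. It assumes a 30-day month, counts only output-token revenue, and treats "at least 400 million" and "exceeding 200 million" as lower bounds; the implied costs are derived here, not figures from the original post, and the scenario labels are hypothetical.

```python
DAILY_OUTPUT_TOKENS = 100e9  # 100 billion output tokens per day
PRICE_PER_MILLION = 16.0  # yuan per million output tokens (DeepSeek R1 list price)
DAYS_PER_MONTH = 30  # assumption: a 30-day month

# Monthly billable volume in millions of tokens, and the revenue it earns.
monthly_million_tokens = DAILY_OUTPUT_TOKENS / 1e6 * DAYS_PER_MONTH
monthly_revenue = monthly_million_tokens * PRICE_PER_MILLION

# Quoted minimum monthly losses in yuan (treated as lower bounds).
quoted_losses = {"baseline": 400e6, "AMD chips": 200e6}

# For each scenario: implied monthly cost (revenue + loss) and break-even price.
results = {}
for scenario, loss in quoted_losses.items():
    implied_cost = monthly_revenue + loss
    results[scenario] = (implied_cost, implied_cost / monthly_million_tokens)

print(f"Monthly revenue: {monthly_revenue / 1e6:.0f}M yuan")
for scenario, (cost, break_even) in results.items():
    print(f"{scenario}: cost >= {cost / 1e6:.0f}M yuan/month, "
          f"break-even >= {break_even:.1f} yuan per million output tokens")
```

Revenue comes to about 48 million yuan a month, so a 400-million-yuan loss implies costs of roughly 448 million yuan, or a break-even price near 149 yuan per million output tokens, more than nine times the 16-yuan list price. The AMD scenario implies a break-even price near 83 yuan, still about five times the list price.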

Min Kerui, CEO of Myta Search, also noted that many cloud vendors price their services at or below DeepSeek's own prices, and all of them are losing money.

Since last year, cloud providers have launched wave after wave of price wars, with ByteDance, Baidu, Tencent, Alibaba, and others all cutting prices; the latest round even sparked an online "war of words" between two former Baidu colleagues.

Recently, Shen Dou, President of Baidu Intelligent Cloud Business Group, said at an all-hands meeting: "The 'malicious' price war among domestic large models last year left the industry's overall revenue lagging behind foreign counterparts by several orders of magnitude." He singled out ByteDance's Doubao, arguing that because of its high training and advertising costs, Doubao has been the most directly affected by DeepSeek.

In response, Tan Dai, President of Volcano Engine, immediately fired back on social media: "The pre-training and inference costs of the Doubao 1.5 Pro model are both lower than those of DeepSeek V3 and far lower than those of other domestic models. At the current price, it enjoys very good gross margins."

It is a common tactic for large companies to seize traffic and users by spending heavily early on. During this process, however, they must stay vigilant: the once-favored MaaS model could degrade into something like SaaS, marked by intense competition, cutthroat involution, and a lack of profitability.

You Yang himself candidly stated that, in the short term, China's MaaS model may be the worst business model. Still, AI development is only at an early-to-middle stage, and once large models truly take root across industries, the situation may change.