From Code to the Physical World: The Evolution Path and Future Vision of AI Agents

03/13 2025

On March 12, 2025, the global AI community witnessed a groundbreaking moment. In a virtual event lasting just 19 minutes, OpenAI unveiled its open-source Agent SDK and the Responses API, two revolutionary tools heralding a new era of "standardized collaboration" in agent development.

This event marked not only a technological milestone but also a declaration that the symbiotic relationship between humans and AI is being reimagined. As AI moves beyond conversation and into every facet of the real world as a "task executor," a transformation touching efficiency, creativity, and ethics is quietly unfolding.

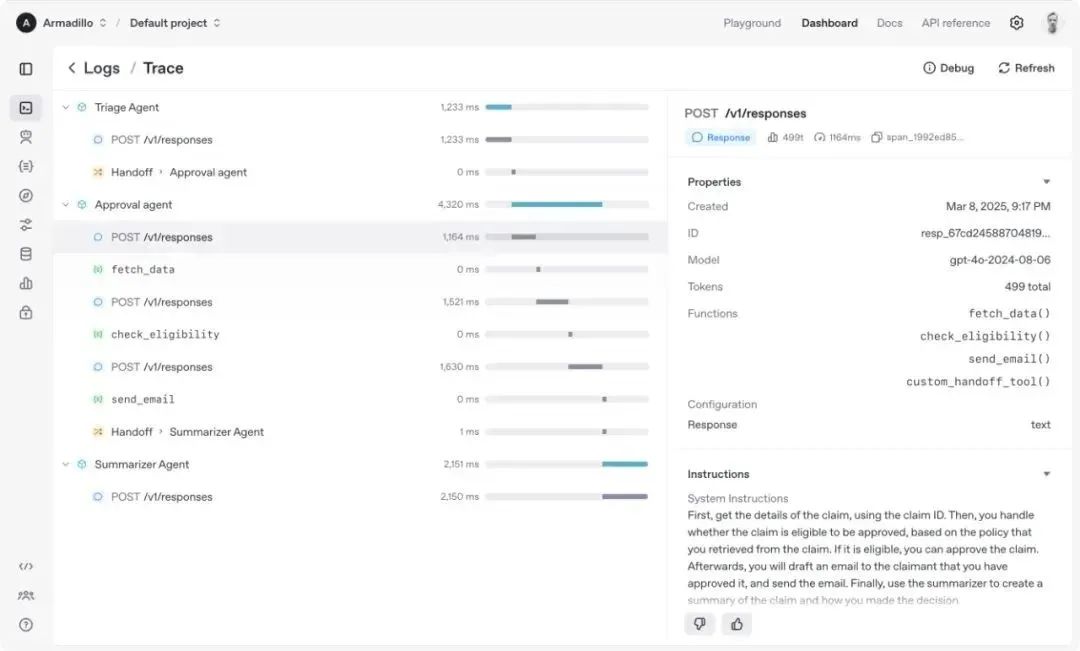

The toolkit released by OpenAI directly addresses three major challenges in agent development: the complexity of multi-task collaboration, the intricacy of tool invocation, and high development costs. Take cross-border e-commerce as an example: traditional development requires building distinct agents for language recognition, inventory inquiries, order updates, and other processes. With the newly launched Agent SDK, developers can orchestrate the collaborative workflows of multiple agents within a single framework, handing tasks from one agent to the next to achieve an automated closed loop from customer inquiry to after-sales service. Even more revolutionary is the Responses API, which integrates built-in tools such as web search, file search, and computer use. A single API call can chain several of these tools into a complex task flow, letting developers freely combine agents' "capability modules" like LEGO bricks.
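
To make the multi-agent orchestration above concrete, here is a minimal, library-free Python sketch of routing a customer message to the right specialist agent. It does not use the Agent SDK: the agent names, the keyword-based routing rule, and the canned replies are all hypothetical stand-ins for decisions that a real system would delegate to a language model.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Agent:
    """A hypothetical specialist agent: a name plus a function that handles a query."""
    name: str
    handle: Callable[[str], str]


# Hypothetical specialists for the cross-border e-commerce example.
inventory_agent = Agent("inventory", lambda q: "Checking stock levels for your item.")
order_agent = Agent("orders", lambda q: "Updating your order status.")
after_sales_agent = Agent("after_sales", lambda q: "Opening a return request.")

# Keyword rules stand in for the routing decision an LLM would normally make.
ROUTES = {
    "stock": inventory_agent,
    "order": order_agent,
    "return": after_sales_agent,
}


def triage(query: str) -> tuple[str, str]:
    """Hand the query to the first specialist whose keyword it mentions."""
    lowered = query.lower()
    for keyword, agent in ROUTES.items():
        if keyword in lowered:
            return agent.name, agent.handle(query)
    # No specialist matched: the triage agent asks for clarification itself.
    return "triage", "Could you tell me more about what you need?"


if __name__ == "__main__":
    for q in ["Is this jacket in stock?", "Where is my order?", "I want to return it"]:
        print(triage(q))
```

In the actual Agent SDK, this routing step is expressed through agent "handoffs" and decided by the model rather than by keyword matching; the sketch only shows the shape of the control flow.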

Notably, OpenAI is also reported to be moving toward value-based pricing for the first time: high-end "PhD-level" agents would cost $20,000 per month, targeting data-intensive industries such as finance and healthcare, while a basic tier at $2,000 per month would offer lightweight services such as web processing and meeting minutes for knowledge workers. Such a business model would turn AI from a "cost center" into a "profit engine," a sign that agents are moving from the laboratory to large-scale commercial applications.
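
Value-based pricing is easiest to judge as a break-even calculation: how many hours of skilled work must an agent save each month to pay for itself? The sketch below assumes a hypothetical fully loaded labor cost of $100 per hour; only the two monthly prices come from the text above.

```python
# Monthly prices from the text above (USD).
TIERS = {"basic": 2_000, "phd_level": 20_000}

# Hypothetical assumption: fully loaded cost of one hour of skilled labor (USD).
HOURLY_LABOR_COST = 100


def break_even_hours(monthly_price: float, hourly_cost: float) -> float:
    """Hours of work the agent must replace each month to cover its price."""
    return monthly_price / hourly_cost


if __name__ == "__main__":
    for tier, price in TIERS.items():
        hours = break_even_hours(price, HOURLY_LABOR_COST)
        print(f"{tier}: {hours:.0f} hours/month to break even")
```

Under this assumption the basic tier pays off after about 20 saved hours a month, while the PhD-level tier needs about 200, more than a typical full-time month, so it only makes sense where an hour of expert work is worth considerably more than $100.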

With the Chinese team behind Manus in the global spotlight, OpenAI's swift release of this toolkit is both an active reshaping of the market landscape and a deeper exploration of the question, "How can AI truly change the world?" This event may well prove a pivotal turning point in the symbiotic evolution of silicon-based intelligence and carbon-based civilization.

Data Revolution: The Cognitive Leap from Static Text to Dynamic Reality

In 2023, as ChatGPT captivated the world, people marveled at the "omniscience" of large language models (LLMs), but a critical limitation persisted: their data was static. Whether GPT-4 or Claude, a model's knowledge was frozen at the point its training dataset was assembled, leaving it unable to perceive real-time changes in the world. "The model knows the definition of a traffic light, but it doesn't know whether it's red or green at this moment."

This limitation was tackled head-on on March 12, 2025. The Responses API launched by OpenAI accepts multimodal input and lets agents invoke built-in tools for web search, file search, and even computer use. Agents' data sources thus expand from closed training sets to the open internet, enabling genuinely dynamic perception. For instance, when a user asks, "What's the weather like today?", the agent no longer relies on outdated data but retrieves current information from weather websites to generate an answer.
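
To show what "invoking web search" looks like at the API level, the sketch below only builds the JSON body of a Responses API request with the built-in web search tool enabled; it sends nothing. The field names (`model`, `input`, `tools`) and the `web_search_preview` tool type follow OpenAI's launch documentation, but the API evolves, so treat them as assumptions to check against the current reference. The model name and the city in the prompt are illustrative choices.

```python
import json


def build_weather_request(city: str, model: str = "gpt-4o") -> dict:
    """Build a Responses API request body that lets the model search the web."""
    return {
        "model": model,
        # A plain-text prompt; the API also accepts structured, multimodal input.
        "input": f"What's the weather like today in {city}?",
        # Enabling the built-in web search tool lets the model fetch live results
        # instead of answering only from its frozen training data.
        "tools": [{"type": "web_search_preview"}],
    }


if __name__ == "__main__":
    body = build_weather_request("Shanghai")
    # This body would be POSTed to https://api.openai.com/v1/responses
    # with an "Authorization: Bearer <API key>" header.
    print(json.dumps(body, indent=2))
```

With the official `openai` Python package, the same request is typically made through `client.responses.create(...)` with these fields as keyword arguments, and the generated answer is available as `response.output_text`.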

Meanwhile, the vehicle-road-cloud integration projects in Tongxiang, Zhejiang, and Jiading, Shanghai, show an even more radical evolution. By connecting cameras, LiDAR, and V2X devices to the MogoMind large model, these projects build a real-time digital twin of traffic at selected intersections. Here, the perceived data is no longer text or images but dynamic parameters of the physical world: vehicle speeds, pedestrian trajectories, traffic light states, and so on.
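
A digital twin of this kind is, at its core, a continuously updated state object per intersection. The Python sketch below shows one possible shape for that state; the field names, the observation format, and the update rule are hypothetical and are not MogoMind's actual design.

```python
from dataclasses import dataclass, field


@dataclass
class Observation:
    """One fused sensor snapshot (camera, LiDAR, V2X) at a point in time."""
    timestamp: float                  # seconds since some epoch
    signal_state: str                 # "red", "yellow", or "green"
    vehicle_speeds: dict[str, float]  # vehicle id -> speed in m/s


@dataclass
class IntersectionTwin:
    """Hypothetical digital twin holding the latest known state of one intersection."""
    intersection_id: str
    last_update: float = float("-inf")
    signal_state: str = "unknown"
    vehicle_speeds: dict[str, float] = field(default_factory=dict)

    def ingest(self, obs: Observation) -> None:
        # Ignore stale readings that arrive out of order.
        if obs.timestamp < self.last_update:
            return
        self.last_update = obs.timestamp
        self.signal_state = obs.signal_state
        # Each observation is a full snapshot, so vehicles that left disappear.
        self.vehicle_speeds = dict(obs.vehicle_speeds)

    def mean_speed(self) -> float:
        """Average speed of all tracked vehicles, or 0.0 if none are tracked."""
        if not self.vehicle_speeds:
            return 0.0
        return sum(self.vehicle_speeds.values()) / len(self.vehicle_speeds)


if __name__ == "__main__":
    twin = IntersectionTwin("demo-01")
    twin.ingest(Observation(10.0, "red", {"car-1": 0.0, "car-2": 2.0}))
    twin.ingest(Observation(9.0, "green", {"car-3": 15.0}))  # stale, ignored
    print(twin.signal_state, twin.mean_speed())
```

Dropping out-of-order readings matters here because camera, LiDAR, and V2X feeds arrive over different links with different latencies.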

Training Paradigm Disruption: From Supervised Learning to Self-Evolution

Traditional AI training relies on manually labeled data, with annotators feeding knowledge into the model like assembly line workers. The emergence of Manus AI changed the game. This agent, developed by a Chinese team, evolves through self-generated data: it writes code while executing tasks, then uses that code to complete further tasks, forming a closed loop. Much as AlphaGo improved its Go play through self-play, Manus achieves a data flywheel effect in scenarios such as programming and document processing.
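
The self-generated data loop can be sketched generically: propose solutions, keep only those that pass an automatic check, and feed the verified results back as training data for the next round. Manus's internals are not public, so the Python sketch below is a hypothetical illustration of the flywheel idea, with a toy "model" that simply memorizes verified answers.

```python
import random

Task = tuple[int, int]


def verify(task: Task, answer: int) -> bool:
    """Automatic check, standing in for 'run the generated code and test it'."""
    a, b = task
    return a + b == answer


def propose(task: Task, memory: dict[Task, int], rng: random.Random) -> int:
    """Toy model: reuse a verified answer if it has one, otherwise guess noisily."""
    if task in memory:
        return memory[task]
    a, b = task
    # A noisy attempt that is correct roughly one time in three.
    return a + b + rng.choice([-1, 0, 1])


def run_flywheel(tasks: list[Task], rounds: int, seed: int = 0) -> list[float]:
    """Run several rounds; verified outputs become new training data (here: memory)."""
    rng = random.Random(seed)
    memory: dict[Task, int] = {}
    success_rates = []
    for _ in range(rounds):
        solved = 0
        for task in tasks:
            answer = propose(task, memory, rng)
            if verify(task, answer):
                memory[task] = answer  # only verified data re-enters the pool
                solved += 1
        success_rates.append(solved / len(tasks))
    return success_rates


if __name__ == "__main__":
    tasks = [(i, 2 * i) for i in range(30)]
    for round_no, rate in enumerate(run_flywheel(tasks, rounds=5), start=1):
        print(f"round {round_no}: {rate:.0%} of tasks solved")
```

Real systems would replace the memory dictionary with fine-tuning or retrieval and the toy verifier with tests or task-completion checks; the property the sketch illustrates is that only verified outputs re-enter the data pool, so the success rate never falls from one round to the next.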

OpenAI's Deep Research agent pushes reinforcement learning to new heights. Trained with end-to-end reinforcement learning, it shows striking efficiency in market analysis: when a user asks it to "analyze the M&A trends in a certain industry," the agent can synthesize 500 news articles, financial reports, and patents into a structured report within 30 minutes. Behind this is an innovative reward mechanism: the system optimizes not only answer accuracy but also information retrieval speed and resource consumption.
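
A reward that balances accuracy against speed and cost can be written as a weighted sum. OpenAI has not published Deep Research's actual reward, so the weights, the normalizing budgets (a 30-minute time budget and a 200,000-token compute budget), and the function below are hypothetical; the sketch only shows how several objectives can be folded into one scalar for reinforcement learning.

```python
from dataclasses import dataclass


@dataclass
class Episode:
    accuracy: float  # graded answer quality in [0, 1]
    seconds: float   # wall-clock time spent retrieving and writing
    tokens: int      # compute proxy for resource consumption


def reward(ep: Episode,
           w_acc: float = 1.0, w_time: float = 0.2, w_cost: float = 0.2,
           time_budget: float = 1800.0, token_budget: int = 200_000) -> float:
    """Hypothetical scalar reward: reward accuracy, penalize time and token use.

    Time and tokens are normalized by a budget and capped at 1, so the
    penalties stay on the same scale as accuracy.
    """
    time_penalty = min(ep.seconds / time_budget, 1.0)
    cost_penalty = min(ep.tokens / token_budget, 1.0)
    return w_acc * ep.accuracy - w_time * time_penalty - w_cost * cost_penalty


if __name__ == "__main__":
    fast = Episode(accuracy=0.9, seconds=600, tokens=50_000)
    slow = Episode(accuracy=0.9, seconds=1800, tokens=200_000)
    print(round(reward(fast), 3))  # 0.783
    print(round(reward(slow), 3))  # 0.5
```

Two reports of equal quality thus earn different rewards, which is what pushes a policy toward faster, cheaper research; the weights decide how much accuracy the agent may trade for speed.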

Even more disruptive is the virtuous "data-standard" cycle. While supporting urban traffic management, MogoMind perceives and processes real-time data from intelligent agents such as autonomous vehicles and feeds the results back to refine vehicle-road-cloud standards. This "application drives data, data drives standards" model is helping to break down industry data silos, forming a sustainable, continuously evolving intelligent ecosystem.

Scenario Reconstruction: The Evolution from Office Assistants to New Urban Infrastructure

Early agents were confined to enterprise-level applications, such as OpenAI's Operator, which automates tasks in a web browser. The rise of Manus AI, however, shows that personal use is the tipping point. Invitation codes for this agent, which handles resume screening, stock market analysis, and travel planning, have fetched astronomical prices on the resale market, reflecting consumers' urgent demand for general-purpose agents.

OpenAI's Responses API is blurring the boundaries between enterprise and personal use. Developers can use the same set of tools to build customer service bots (handling support tickets) or personal assistants (managing schedules), flexibly combining built-in tools such as web search and file search. "In the future, every enterprise will have a customized agent, just as every company built an official website ten years ago."
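
The "same tools, different agents" idea can be illustrated with a shared tool registry from which each agent picks the subset it needs. The registry, tool names, and stub functions below are hypothetical placeholders, not OpenAI's built-in tools.

```python
from typing import Callable

# Hypothetical shared registry: every tool is a plain function of one string.
TOOLS: dict[str, Callable[[str], str]] = {
    "web_search": lambda q: f"search results for {q!r}",
    "file_lookup": lambda q: f"matching passages for {q!r}",
    "calendar": lambda q: f"calendar entry created: {q}",
}


def make_agent(name: str, tool_names: list[str]) -> dict[str, Callable[[str], str]]:
    """Assemble an agent from the shared registry, failing fast on unknown tools."""
    missing = [t for t in tool_names if t not in TOOLS]
    if missing:
        raise KeyError(f"{name}: unknown tools {missing}")
    return {t: TOOLS[t] for t in tool_names}


# Two different products built from overlapping bricks.
support_bot = make_agent("support_bot", ["file_lookup", "web_search"])
assistant = make_agent("personal_assistant", ["calendar", "web_search"])

if __name__ == "__main__":
    print(support_bot["file_lookup"]("refund policy"))
    print(assistant["calendar"]("Team sync, Friday 10:00"))
```

Because both agents reference the same registry entries, improving one tool (say, web search) improves every agent built on it.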

When agents step off the screen, the changes in the physical world are even more remarkable. The vehicle-road-cloud system not only lets autonomous vehicles handle complex intersections on their own (reducing human takeovers from once every 100 kilometers to once every 1,000 kilometers) but also reshapes urban governance. AI traffic lights dynamically improve traffic flow efficiency by 30%, and drone patrols cut response times to traffic accidents by 40%. These seemingly sci-fi scenarios are becoming reality.
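
Reading the takeover figures as one human takeover per 100 km before and one per 1,000 km after, the arithmetic amounts to a tenfold reduction in intervention frequency:

```latex
r_{\text{before}} = \frac{1}{100\ \text{km}}, \qquad
r_{\text{after}} = \frac{1}{1000\ \text{km}}, \qquad
1 - \frac{r_{\text{after}}}{r_{\text{before}}} = 1 - \frac{100}{1000} = 0.9
```

That is, 90% fewer takeovers per kilometer driven.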

Three Trends in the Evolution of AI Agents

1. Hardware-Driven Perception Upgrades

From cameras to millimeter-wave radar, from GPS to quantum sensors, advances in multimodal perception hardware are pushing the perceptual boundaries of agents toward nanometer-level precision.

2. Democratization of Decision-Making

The combination of open-source models and cloud computing is lowering the barriers to agent development. OpenAI's Agent SDK allows developers to orchestrate multi-agent workflows quickly using Python, while Camel-AI's no-code framework enables even elementary school students to train their own learning assistants. This "agent democratization" movement is mirroring the explosive growth curve of smartphone apps.

3. Reconstruction of Economic Paradigms

As agents begin to create data, optimize processes, and even make autonomous decisions, traditional relations of production are being reshaped.

Symbiosis from Silicon-Based Intelligence to Carbon-Based Civilization

A decade ago, people feared that AI would replace humans; today, we see a brighter picture: agents becoming humanity's external super-brains. On the New York Stock Exchange, quantitative funds utilize Deep Research Agents to mine alpha returns... These scenarios collectively outline the contours of the intelligent era: AI is not a replacement but an amplifier.

As the journalist Sydney J. Harris put it, "The real danger is not that computers will begin to think like humans, but that humans will begin to think like computers." Perhaps the ultimate mission of agent technology is to bridge this alienation and return technology to its human-centric origins. We have reason to believe that intelligence, in its essence, will ultimately empower every living being to live more freely and with greater dignity.