What Technical Milestones Has Autonomous Driving Traversed?

04/27 2025

As electrification accelerates and market competition intensifies, internal combustion vehicles are gradually giving way to new energy vehicles. Intelligent driving technology, widely seen as the cornerstone of the next stage of this shift, is attracting broad attention. From early driver assistance to today's AI-driven autonomous driving systems, major automakers keep increasing their R&D investment in a bid to lead the future market. So what technical milestones has autonomous driving passed since its inception?

Development Timeline of Autonomous Driving Systems

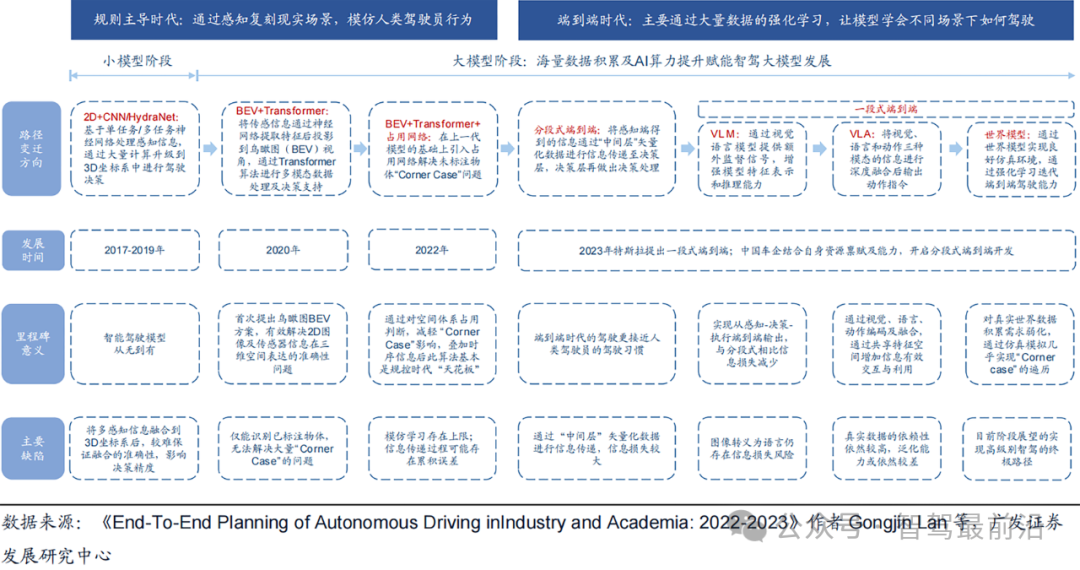

Autonomous driving technology has moved from theoretical exploration toward gradual maturity. Early systems relied mainly on traditional rule-driven methods: they gathered environmental information through sensors such as cameras, radars, and LiDARs, then processed and interpreted that data with preset rules and models to mimic human driving decisions. During this period the "2D+CNN" perception architecture was prevalent, using convolutional neural networks to extract features and recognize scenes from camera images. These methods, however, struggled with complex scenes and accumulated errors as information passed from one module to the next.

Transition from Rule-Based to End-to-End Autonomous Driving

With breakthroughs by pioneers such as Tesla, autonomous driving systems entered the era of multi-task learning and large models. From 2017 to 2019, Tesla pioneered the HydraNet multi-task neural network architecture, in which a single model handles multiple visual tasks such as lane detection, pedestrian recognition, and traffic light recognition, significantly improving data processing efficiency and real-time performance. Between 2020 and 2021, Tesla introduced the "BEV+Transformer" architecture, which converts 2D camera images into a Bird's Eye View (BEV) and gives multi-sensor data a unified representation in three-dimensional space, addressing the weaknesses of 2D images in distance estimation and occlusion handling. In 2022, Occupancy Networks followed: by directly determining whether each voxel in 3D space is occupied, they reduced the dependence on labeled data and improved recognition in "corner case" scenarios.
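The multi-task idea described above, one shared feature extractor feeding several task-specific heads, can be illustrated with a small sketch. This is not Tesla's HydraNet: the class name `MultiTaskNet`, the head names, the layer sizes, and the random untrained weights are all assumptions chosen only to show the structure.

```python
import random

def linear(weights, bias, x):
    """Dense layer: `weights` holds one row of coefficients per output unit."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]

def relu(v):
    return [max(0.0, a) for a in v]

def make_layer(n_out, n_in, rng):
    """Random, untrained parameters (illustrative only)."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

class MultiTaskNet:
    """Hypothetical shared-backbone network with three task heads."""

    def __init__(self, n_in=8, n_shared=16, seed=0):
        rng = random.Random(seed)
        self.backbone = make_layer(n_shared, n_in, rng)
        # Head names and output sizes are assumptions for illustration.
        self.heads = {
            "lane": make_layer(4, n_shared, rng),
            "pedestrian": make_layer(2, n_shared, rng),
            "traffic_light": make_layer(3, n_shared, rng),
        }
        self.backbone_calls = 0

    def forward(self, x):
        # The shared features are computed once per input ...
        self.backbone_calls += 1
        features = relu(linear(*self.backbone, x))
        # ... and reused by every task head.
        return {name: linear(*head, features)
                for name, head in self.heads.items()}

net = MultiTaskNet()
outputs = net.forward([0.1] * 8)
```

The efficiency gain mentioned in the text comes from the fact that the backbone, usually the most expensive part, runs once regardless of how many heads consume its features.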

Tesla's End-to-End Architecture Diagram

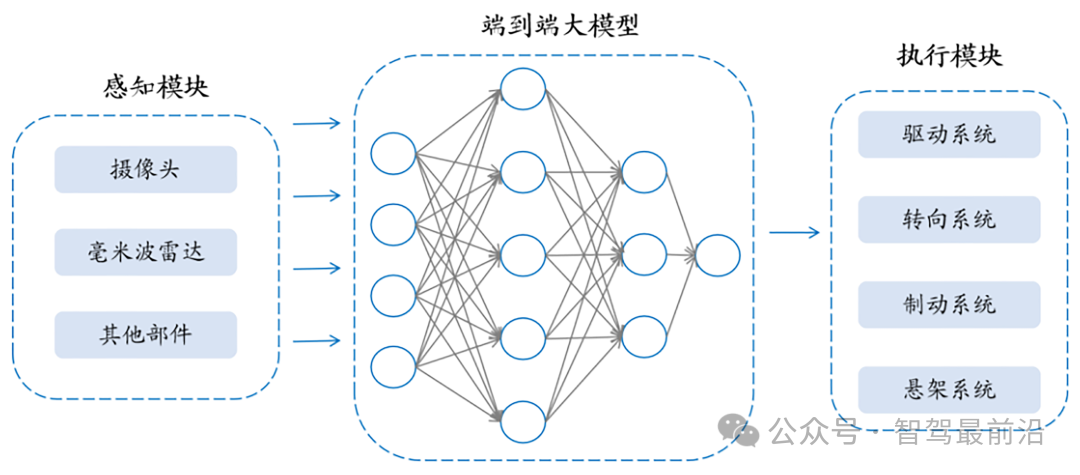

Currently, with advances in large models and reinforcement learning, the integrated end-to-end architecture is becoming the focus of industry attention. By merging perception, planning, decision-making, and control into a single neural network, an end-to-end model can output control commands directly from sensor data, reducing the information loss and delay introduced by intermediate stages. Its internal decision-making, however, is largely a "black box", which limits interpretability and makes fault diagnosis and system optimization more difficult.

Algorithm Architecture: Transition from Rule-Based Control to End-to-End

The algorithm is the heart of an autonomous driving system, and the move from the traditional rule-based control (RBC) architecture to end-to-end models is a central theme of the current technological shift. In the RBC era, systems relied mainly on manually designed rules: sensor data was preprocessed, features were extracted, and hand-written logic interpreted the environment. This approach mimicked human driving behavior reasonably well in the early stages, but it adapted poorly to complex scenes and could introduce errors when information from multiple sensors was fused and passed along.
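To make the rule-based style concrete, here is a minimal sketch of hand-written decision logic operating on already-extracted features. The `Perception` fields, the 2-second time gap, and the command names are assumptions for illustration, not rules taken from any production system.

```python
from dataclasses import dataclass

@dataclass
class Perception:
    """Hypothetical output of the perception stage."""
    lead_distance_m: float   # distance to the vehicle ahead
    ego_speed_mps: float     # own speed
    traffic_light: str       # "red", "yellow", "green", or "none"

def rule_based_decision(p: Perception, min_time_gap_s: float = 2.0) -> str:
    """Return a coarse longitudinal command from hand-written rules."""
    if p.traffic_light == "red":
        return "brake"
    # Time gap to the lead vehicle; a stationary ego car has an infinite gap.
    if p.ego_speed_mps > 0:
        time_gap_s = p.lead_distance_m / p.ego_speed_mps
    else:
        time_gap_s = float("inf")
    if time_gap_s < min_time_gap_s:
        return "brake"
    if p.traffic_light == "yellow":
        return "coast"
    return "accelerate"
```

Every situation the designer did not anticipate needs another branch, which is one way to see why this style adapts poorly to complex scenes.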

As deep learning spread rapidly, data-driven end-to-end models emerged. Companies such as Tesla, drawing on large-scale data collection and massive computing power, have developed integrated neural networks that combine the traditionally independent modules (perception, decision-making, control) through joint training. Such a model extracts features directly from raw sensor data and performs scene understanding and decision-making inside the network, significantly reducing the information lost when data passes between modules. End-to-end models have clear advantages in simplifying system structure and improving response speed, but their "black box" nature makes system safety assurance and fault analysis harder. The industry has therefore begun exploring segmented end-to-end solutions, which keep some modules independent while passing data and decision information between them through neural network connections.

The crux of this transformation is efficient fusion of multi-sensor data and reconstruction of the scene. For instance, converting 2D camera images into BEV not only removes the perspective differences between sensors but also represents environmental information in a single, unified spatial frame. The Transformer model, in turn, fuses multi-modal information through its self-attention mechanism, helping the system capture key features in complex dynamic scenes more accurately. For special cases, Occupancy Networks estimate directly which regions of space are occupied, so the system can detect and handle objects that never appeared in its labeled data, further improving robustness.
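What a BEV coordinate means can be shown with classical inverse perspective mapping. Note that this is a swapped-in geometric technique, not the learned BEV+Transformer mapping discussed above: it assumes a flat road, a pinhole camera looking straight ahead with no pitch, and hypothetical intrinsics (`f`, `cx`, `cy`) and mounting height.

```python
def pixel_to_bev(u, v, f=1000.0, cx=640.0, cy=360.0, cam_height_m=1.5):
    """Map an image pixel on the road surface to (forward_m, lateral_m).

    Assumptions (all illustrative): flat ground, zero camera pitch and roll,
    focal length `f` in pixels, principal point (`cx`, `cy`), camera mounted
    `cam_height_m` above the road. Image rows grow downward.
    """
    dv = v - cy
    if dv <= 0:
        # At or above the horizon: the ray never meets the ground plane.
        return None
    forward_m = cam_height_m * f / dv           # distance ahead of the camera
    lateral_m = cam_height_m * (u - cx) / dv    # positive to the right
    return forward_m, lateral_m

straight_ahead = pixel_to_bev(640, 510)
to_the_right = pixel_to_bev(790, 510)
```

The flat-ground assumption breaks on slopes and for anything above the road surface, which is one reason learned BEV representations replaced purely geometric projection.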

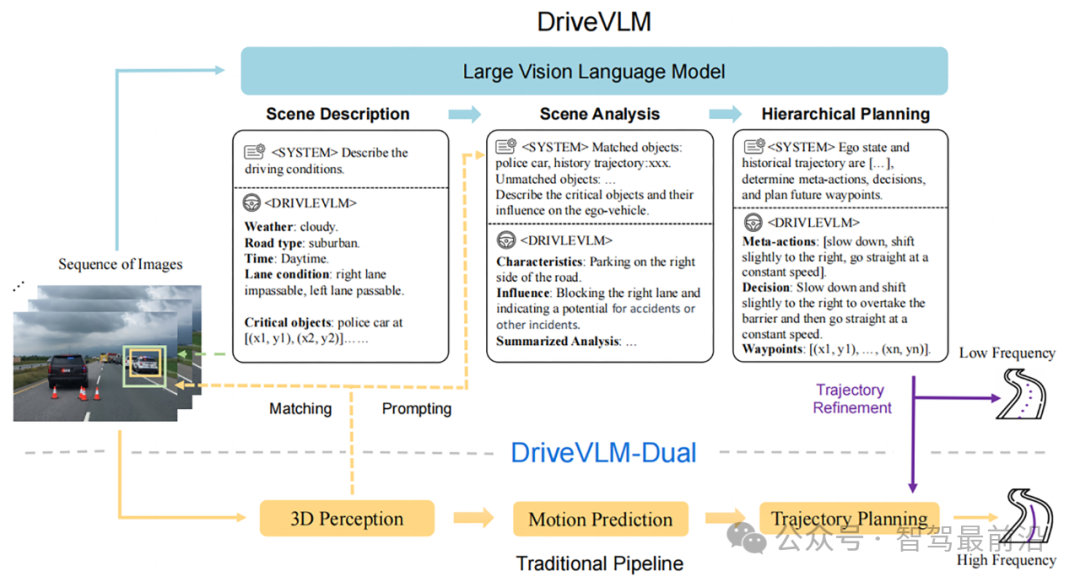

Although end-to-end large models have achieved significant breakthroughs, pressing problems remain in practical applications. To improve performance in complex scenarios, the industry has begun to focus on applying Vision-Language Models (VLM) and Vision-Language-Action Models (VLA).

By deeply integrating visual and linguistic information, VLMs can provide the system with additional semantic supervision signals. For example, in the recognition of road signs and traffic instructions, VLMs can not only interpret image information but also combine natural language descriptions to achieve accurate recognition and understanding of traffic rules in complex scenarios. VLA further introduces an action encoder on this foundation, enabling closed-loop optimization from perception to decision-making and execution by fusing historical driving data. Such a multi-modal fusion architecture can not only effectively reduce transmission delays within the system but also significantly improve decision accuracy and response speed in extreme driving scenarios.

VLM End-to-End Model Technology Diagram

As large model technology progresses, World Models have entered autonomous driving research. A World Model does more than reconstruct the current environment as a static snapshot: it also predicts how the scene will evolve over a period of time, giving driving decisions more forward-looking guidance. By learning from large volumes of real-world driving video, World Models can to some extent cover "corner case" scenarios, reducing the risk and cost of on-road testing. Practitioners should follow the development of these emerging models closely and keep exploring more efficient multi-modal fusion and real-time feedback mechanisms that fit real scenario needs.

Importance of Multi-Modal Information Fusion and Data Closed-Loop

Multi-modal information fusion is the cornerstone of comprehensive perception in autonomous driving systems. In traditional approaches, the data collected by cameras, radars, and LiDARs often differs in format, resolution, and latency. Integrating this heterogeneous data into an accurate, unified environmental model is key to improving the safety and decision accuracy of the system.

Take BEV technology as an example: projecting 2D images into 3D space overcomes the limited expressiveness of individual camera views and gives downstream algorithms input from a more global perspective. The Transformer architecture, through self-attention, lets information from different sources complement and refine each other in a shared feature space. Building on this, Occupancy Networks divide space into voxels and quantify the occupancy of each one, allowing precise judgment of the state of obstacles in complex environments.
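The voxel representation itself is simple to sketch. In the example below the grid is filled from a handful of 3D points purely to show the data structure; a real Occupancy Network predicts occupancy from sensor features instead. The function names and the 0.5 m voxel size are assumptions.

```python
def voxel_index(x, y, z, voxel_size=0.5):
    """Integer index of the voxel containing the point (x, y, z), in metres."""
    return (int(x // voxel_size), int(y // voxel_size), int(z // voxel_size))

def voxelize(points, voxel_size=0.5):
    """Return the set of voxel indices that contain at least one point."""
    return {voxel_index(x, y, z, voxel_size) for x, y, z in points}

def is_occupied(occupied, x, y, z, voxel_size=0.5):
    """Query the grid: is the voxel containing (x, y, z) occupied?"""
    return voxel_index(x, y, z, voxel_size) in occupied

# Hypothetical points: two on the same obstacle, one further away.
points = [(1.2, 0.1, 0.3), (1.3, 0.2, 0.4), (5.0, -0.1, 0.0)]
occupied = voxelize(points)
```

Because the query only asks whether a cell is occupied, it works for obstacles of any shape or category, which matches the text's point about handling objects outside the labeled classes.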

The data closed loop is equally essential. A closed-loop collection and feedback system lets algorithms keep learning and improving from actual road driving. Through iterative training on real driving data and simulation data, the system gradually covers long-tail scenarios and special cases. This continuous iteration improves fault tolerance in abnormal situations and provides solid data support for the ongoing optimization of large models. For practitioners, building a complete closed loop of data collection, processing, and feedback is essential to keeping the system running reliably over time.
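One iteration of such a loop might look like the sketch below: frames where the driver took over or the model was unsure are selected as hard cases and added to the training set. The `DriveLog` fields, the 0.6 confidence threshold, and the selection criteria are assumptions for illustration; real pipelines use far richer triggers and include labeling and retraining steps that are omitted here.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class DriveLog:
    """Hypothetical record uploaded from a vehicle."""
    frame_id: str
    model_confidence: float  # 0.0 to 1.0
    driver_takeover: bool
    scenario: str

def select_hard_cases(logs, confidence_threshold=0.6):
    """Keep frames with a driver takeover or low model confidence."""
    return [log for log in logs
            if log.driver_takeover
            or log.model_confidence < confidence_threshold]

def closed_loop_iteration(training_set, new_logs, confidence_threshold=0.6):
    """Return (updated training set, newly added frames) without duplicates."""
    known_ids = {log.frame_id for log in training_set}
    added = [log for log in select_hard_cases(new_logs, confidence_threshold)
             if log.frame_id not in known_ids]
    return training_set + added, added

fleet_logs = [
    DriveLog("f1", 0.95, False, "highway"),
    DriveLog("f2", 0.40, False, "urban"),     # low confidence
    DriveLog("f3", 0.90, True, "roadworks"),  # driver took over
]
training_set, added = closed_loop_iteration([], fleet_logs)
```

Running the same logs through a second iteration adds nothing new, so repeated uploads do not inflate the training set.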

Market Response May Provide Directional Reference for Automakers

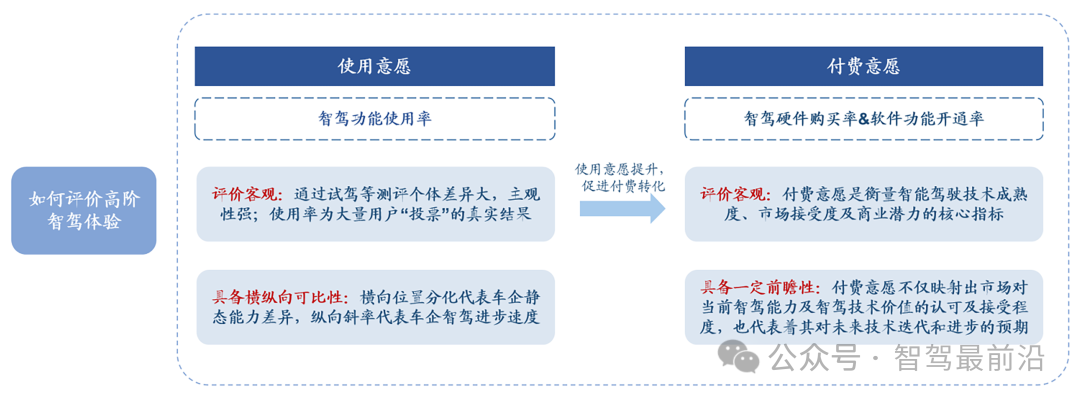

Consumer acceptance of autonomous driving systems can be gauged by two indicators: "willingness to pay" and "usage rate". Intelligent driving technology was designed to relieve driver fatigue, and from a market perspective its commercialization also shows how much consumers want it.

Two Evaluation Indicators for Intelligent Driving Functions

Willingness to pay is typically reflected in the purchase rate of high-end intelligent driving trims. Many automakers currently adopt a "hardware standard/optional + software paid" model. The sales share of intelligent driving versions can be observed by analyzing actual vehicle purchase data and compulsory traffic insurance data, which gives an objective view of how much consumers value autonomous driving technology. At present, intelligent driving versions account for a relatively high share of sales for some domestic automakers' models, indicating that, as the technology keeps advancing, consumers are quite willing to pay for products with advanced autonomous driving capabilities.
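The sales share described above is a simple ratio. The sketch below computes it from per-trim sales counts; the trim names and figures are made up for illustration and are not data from the article.

```python
def take_rate(sales_by_trim, intelligent_trims):
    """Share of total sales (0.0 to 1.0) that are intelligent driving trims."""
    total = sum(sales_by_trim.values())
    if total == 0:
        return 0.0
    intelligent = sum(count for trim, count in sales_by_trim.items()
                      if trim in intelligent_trims)
    return intelligent / total

# Hypothetical monthly sales for one model line.
sales = {"base": 600, "pilot": 300, "pilot_max": 100}
rate = take_rate(sales, intelligent_trims={"pilot", "pilot_max"})
```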

Usage rate directly reflects how much consumers rely on and trust autonomous driving functions in actual driving. A commonly used quantitative indicator is the share of every 100 kilometers driven during which intelligent driving functions are active, and the rates for different scenarios (all scenarios, urban, and highway) each carry their own significance. The all-scenario usage rate reflects the system's generality and robustness across varied, complex environments; the urban usage rate reflects how well the system responds in complex traffic; and the highway usage rate mainly tests stability in relatively simple but long, continuous driving. Practitioners can track these indicators continuously and adjust algorithm models and system parameters promptly so that the system reaches the expected performance level in each scenario.
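A minimal sketch of the usage-rate calculation follows, assuming the indicator means kilometers driven with the function engaged per 100 kilometers driven. The trip tuple layout, the scenario labels, and the sample figures are assumptions for illustration.

```python
from collections import defaultdict

def usage_rate_per_100km(trips):
    """Engaged kilometres per 100 km driven, per scenario and overall.

    `trips` is an iterable of (scenario, total_km, engaged_km) tuples.
    The overall figure is reported under the key "all".
    """
    total = defaultdict(float)
    engaged = defaultdict(float)
    for scenario, total_km, engaged_km in trips:
        for key in (scenario, "all"):
            total[key] += total_km
            engaged[key] += engaged_km
    return {key: 100.0 * engaged[key] / total[key]
            for key in total if total[key] > 0}

# Hypothetical trip segments.
rates = usage_rate_per_100km([
    ("urban", 40.0, 10.0),
    ("highway", 60.0, 45.0),
])
```

The all-scenario figure is weighted by distance, so it is not the plain average of the urban and highway rates.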

Conclusion

Autonomous driving technology is at a critical juncture of rapid transformation. From early rule-based control to today's end-to-end large models, and on to the continued strengthening of multi-modal information fusion and data closed-loop mechanisms, each breakthrough has laid a foundation for system safety, robustness, and intelligence. Looking back over these milestones, it is clear that future autonomous driving technology will need not only continued improvement at the algorithmic level but also a closed loop of data collection, processing, and feedback to keep driving system optimization. Meanwhile, by quantitatively assessing key indicators such as willingness to pay and usage rate, practitioners can understand market demand and system performance more directly and target their technical improvements and product iterations accordingly.
