NVIDIA's "Transformation" Highlights Greater Need for the Chinese Market

05/22 2025

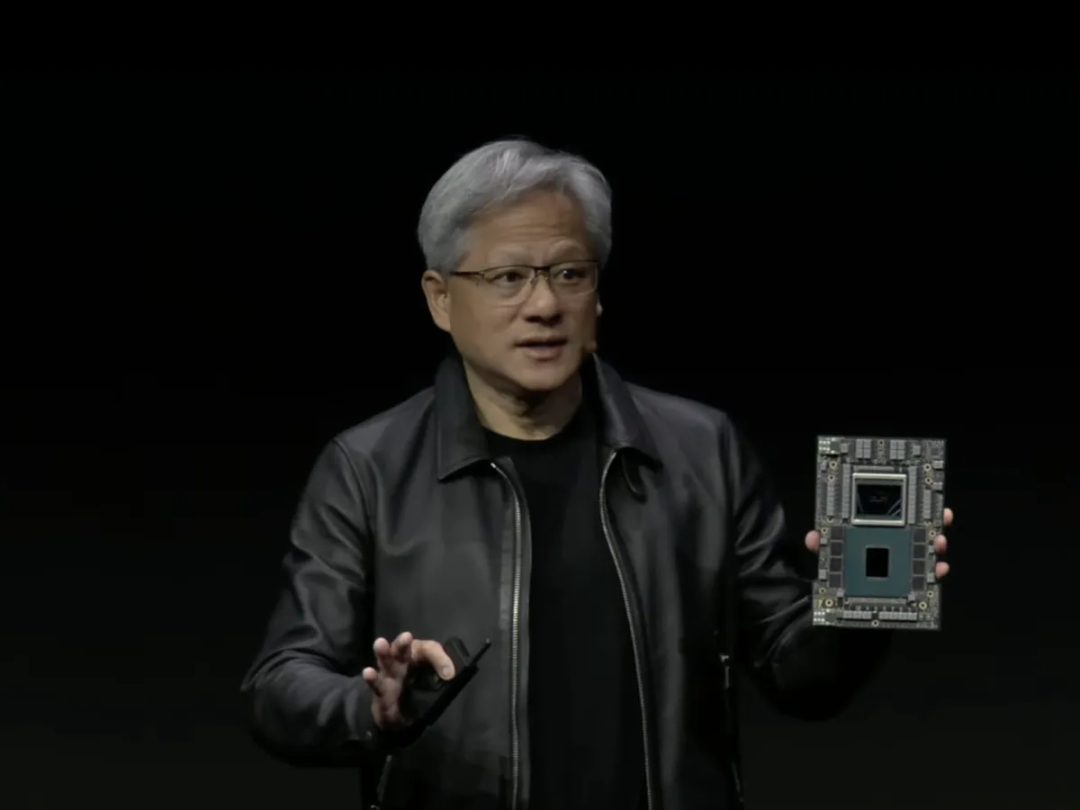

Jen-Hsun Huang aims to keep everyone within the NVIDIA ecosystem.

From Supplier to Global AI Factory

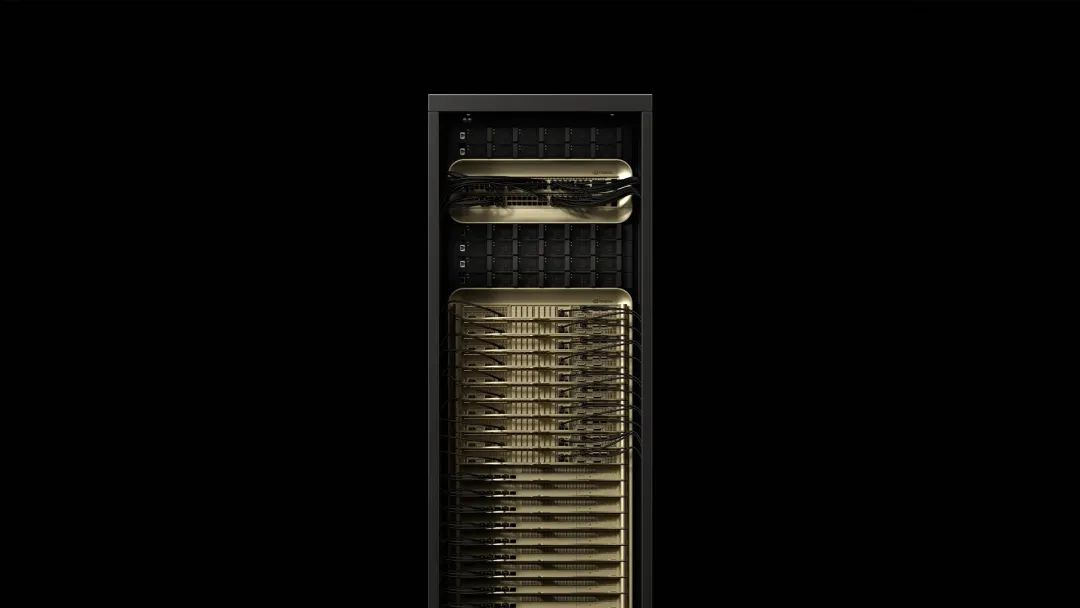

On May 19, Jen-Hsun Huang delivered his keynote speech at Computex 2025, one of Asia's largest electronics and technology exhibitions. During the event, he unveiled new products such as the NVIDIA GB300 NVL72 platform, NVIDIA NVLink Fusion for semi-custom AI chips, and the RTX PRO Server enterprise-grade AI computing platform. These announcements reposition NVIDIA as a leading AI infrastructure company.

NVIDIA GB300 NVL72

Huang introduced the new concept of an "AI Factory" and elaborated on the technical advantages and upgrade roadmap of the Grace Blackwell system. Unlike the 2024 keynote, which focused on hardware performance improvements, the 2025 keynote placed greater emphasis on how AI technology itself is evolving: from perceptive AI to reasoning AI and then to physical AI, while also reaching into emerging fields such as telecommunications and quantum computing.

Huang stated that, based on NVIDIA's own product calculations, AI computing power will increase by approximately 1 million times every decade. For Huang, the transition from agentic AI to physical AI is a crucial step on the path to AGI, and general-purpose robots will usher in the next trillion-dollar industry.
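To put the "1 million times every decade" figure in perspective, it helps to convert it into a yearly rate. The sketch below assumes smooth compound growth, which is a simplification made here and not something Huang specified; the function names are illustrative only.

```python
import math

def per_year_factor(total_factor: float, years: float) -> float:
    """Constant yearly multiplier that compounds to total_factor over `years`."""
    return total_factor ** (1.0 / years)

def doubling_time_months(total_factor: float, years: float) -> float:
    """Months needed to double at that constant compound rate."""
    return 12.0 * years * math.log(2) / math.log(total_factor)

# Assumption: growth is spread evenly across the decade.
yearly = per_year_factor(1_000_000, 10)
doubling = doubling_time_months(1_000_000, 10)

print(f"per-year multiplier: {yearly:.2f}x")
print(f"doubling time: {doubling:.1f} months")
```

Under that assumption, the claim works out to roughly a fourfold increase every year, or a doubling about every six months.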

It appears Jen-Hsun is painting another ambitious picture, and an unprecedentedly huge one at that. But who can blame him? Jen-Hsun Huang is the man even Elon Musk respects. NVIDIA is not just an AI equipment supplier; it's an AI dream maker. Without powerful computing power, any AI story would lack conviction.

The data shows no sign of sluggish sales. In 2024, NVIDIA shipped approximately 3.76 million GPUs to data centers, over 1 million more than the previous year's 2.64 million, making it the fastest-growing hardware company in history. NVIDIA is estimated to sell 6.5 to 7 million GPUs in 2025.
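The shipment figures above translate into growth rates with simple arithmetic. The following is a minimal sketch using only the numbers quoted in this article; the variable names are illustrative, and the 2025 values are the estimate range, not reported results.

```python
def growth_pct(previous: float, current: float) -> float:
    """Year-over-year growth in percent."""
    return (current - previous) / previous * 100.0

# Data-center GPU shipments in millions of units, as quoted in the article.
shipped_2023 = 2.64
shipped_2024 = 3.76
estimate_2025 = (6.5, 7.0)  # low and high ends of the 2025 estimate

growth_2024 = growth_pct(shipped_2023, shipped_2024)
growth_2025 = tuple(growth_pct(shipped_2024, e) for e in estimate_2025)

print(f"2024 growth: {growth_2024:.1f}%")
print(f"2025 implied growth: {growth_2025[0]:.1f}% to {growth_2025[1]:.1f}%")
```

In other words, shipments grew by a little over 40% in 2024, and the 2025 estimate would imply growth of roughly 73% to 86% on top of that.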

Bank of America even regards AI chips as the "new currency," playing a crucial role in geopolitical negotiations. Recent large-scale projects in the Middle East have highlighted the long-term demand for AI computing. NVIDIA is the largest chip supplier, and most AI companies simply cannot avoid it. So there is no need to worry that NVIDIA will run out of stories to tell, but telling a good story is not easy either.

The most imaginative and low-threshold option is the DGX Spark, capable of deploying large models. At the conference, Jen-Hsun Huang announced that the world's smallest AI supercomputer, the DGX Spark, has entered full production and will be ready within weeks. Huang was even optimistic, saying, "Today, everyone can own their own AI supercomputer, and... it can be plugged into a kitchen socket."

With the explosion of generative AI applications, developers' demand for localized computing power has surged. According to GF Securities calculations, global AI inference computing power demand in 2025 will be more than three times that of training computing power. Traditional cloud deployments face challenges related to data privacy, latency, and cost. The emergence of the DGX Spark enables small and medium-sized enterprises and individual developers to obtain supercomputing-level computing power at consumer-level costs, significantly lowering the threshold for AI innovation.

Furthermore, NVIDIA is moving quickly into the quantum computing market, with Jen-Hsun Huang making a rare admission of a misjudgment. In January of this year, Huang said that quantum computing would not be practical for at least 20 years, but in March, he publicly stated that his prediction about the timing of quantum computing applications was wrong. During this speech, Huang revealed that NVIDIA is developing a quantum-classical, or quantum GPU, computing platform, and predicted that all future supercomputers will have quantum acceleration components, combining GPUs, QPUs (Quantum Processing Units), and CPUs.

On the quantum computing front, NVIDIA announced the opening of the Global Research and Development Center for Business by Quantum-AI Technology (G-QuAT), which hosts ABCI-Q, the world's largest research supercomputer dedicated to quantum computing. The center is expected to enhance AI supercomputers, help solve some of the world's most complex challenges in industries such as healthcare, energy, and finance, and take an important step towards the practical acceleration of quantum systems.

Want to leave the NVIDIA ecosystem? Jen-Hsun says, "Stay."

In recent years, the excessive profits from NVIDIA chips have been evident to all. You pay a tenfold premium and still have to thank Jen-Hsun Huang for selling you the chips. In the long run, no one can tolerate this. Therefore, Meta replaced NVIDIA GPUs with ASICs for some of its largest DLRM workloads, and Google did the same for critical video encoding workloads on YouTube. Amazon replaced its hypervisor with Nitro as early as 2012 and replaced a significant number of Intel CPUs with Graviton. Cloud giants and major design partners such as Marvell, Broadcom, Astera, Arista, and Alchip have invested heavily in capital and engineering talent to weaken NVIDIA's monopoly.

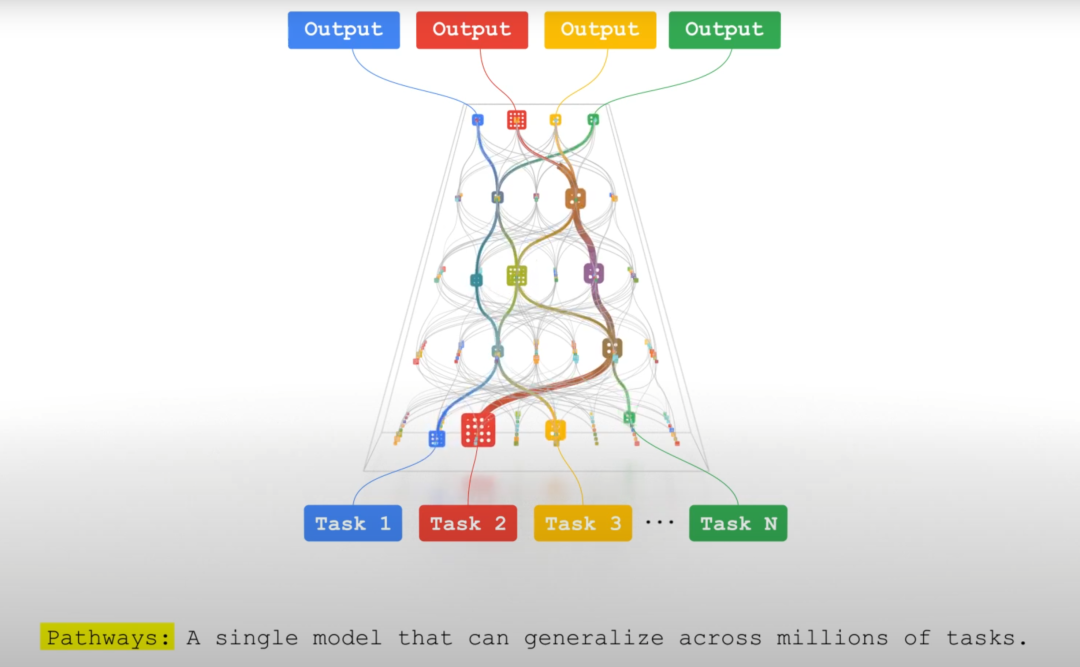

Today, many giants rely on internal solutions. Google's Pathways outperforms other solutions in edge cases and failure types while flexibly handling synchronous and asynchronous data streams. Pathways also excels at detecting and repairing barely perceptible GPU memory issues that bypass ECC, while NVIDIA's diagnostic tool (DCGM) is much less reliable. NVIDIA also struggles to provide competitive partitioning and cluster management software; its Base Command system (built on Kubernetes) is aimed at cross-platform compatibility and heterogeneous systems.

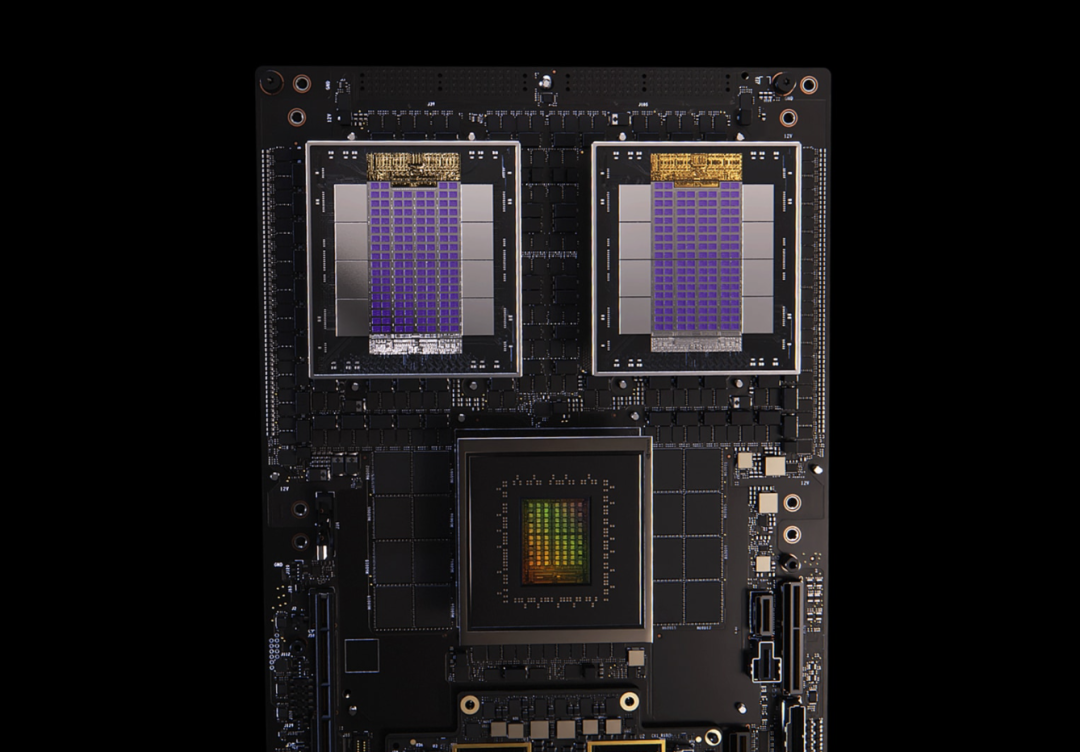

Since the closed NVIDIA ecosystem has driven away major customers, it might as well adopt a semi-open approach, allowing customers to buy NVIDIA chips or use their own custom chips with NVIDIA's CUDA.

Jen-Hsun Huang views the "AI Factory" as the key to the next five years. In this industrial transformation, each "AI Factory" has different tasks, and the optimal configuration of the required AI infrastructure may also vary slightly. NVIDIA has decided to open up moderately, allowing customers to "semi-customize" their "AI Factories," choosing to use their own CPUs alongside NVIDIA's GPUs or NVIDIA's GPUs alongside other custom AI chips. Even the custom AI chips don't necessarily have to be used to accelerate transformer architecture models.

NVLink Fusion opens the AI ecosystem to new players, especially those aiming to gain a foothold in the ASIC chip market. However, NVIDIA also risks reducing demand for its own products. For cloud service providers that are rushing to develop custom AI chips, and for Intel and AMD, which jointly support the competing UALink solution, this is a proactive move by NVIDIA. In theory, it allows Google's TPU, Amazon's Trainium, Microsoft's Maia, and Meta's MTIA to integrate seamlessly into its "AI Factory," including the CUDA ecosystem and more of its software and hardware stack.

NVLink Fusion

For large European and American companies, breaking completely with NVIDIA is not a wise choice, but doing nothing in the face of the "NVIDIA tax" is also wrong. Jen-Hsun Huang's "semi-open strategy" is the optimal transitional choice for large companies, allowing them to advance or retreat as needed, while also prompting Huang to reconsider whether his chips are priced too high.

NVIDIA Doesn't Want to Miss Out on the Chinese Market

During his speech, Jen-Hsun Huang said little about the mainland China business. Afterward, however, in a conversation with Ben Thompson, author of the tech blog Stratechery, Huang said that the Trump administration had recently rescinded the AI diffusion rule, reopening the market, and had set about "reclaiming" the Middle East market. But this recovers only about 10% of the former market. Without access to the Chinese market, the other 90% will remain out of reach.

While the outside world has focused on NVIDIA's new center in Taiwan, recent media reports have revealed that NVIDIA also plans to establish a research center in Shanghai. During his April visit to China, Huang discussed this plan with the Shanghai Municipal Government, and the company has already leased new office space in Shanghai.

With Huang's two visits to China in the past six months and new investment actions, it is evident that NVIDIA cannot afford to lose the Chinese market, even if it is caught in a dilemma due to geopolitical conflicts. This business cannot be abandoned.

NVIDIA's financial report shows that in the fiscal year ending in January 2025, NVIDIA's annual revenue in China was $17.108 billion (approximately RMB 123.5 billion), the highest in its history and an increase of 66% from the previous year's $10.306 billion. In that same fiscal year 2025, 53% of its revenue came from regions outside the United States. The United States ranked first at 47%, and mainland China was the company's second-largest sales region at 13%.
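The revenue figures above can be cross-checked against each other. The sketch below uses only the numbers quoted in the article and assumes that the 13% share and the $17.108 billion figure refer to the same fiscal year and the same regional definition; the variable names are illustrative.

```python
# China revenue in billions of US dollars, as quoted in the article.
china_revenue = 17.108
china_revenue_previous = 10.306
china_share = 0.13  # rounded share of total revenue, per the article

# Year-over-year growth in percent.
growth = (china_revenue / china_revenue_previous - 1.0) * 100.0

# Total revenue implied by the rounded 13% share (an approximation only).
implied_total = china_revenue / china_share

print(f"China revenue growth: {growth:.1f}%")
print(f"implied total revenue: about ${implied_total:.0f} billion")
```

The growth rate matches the quoted 66%, and the share implies total annual revenue in the neighborhood of $130 billion, bearing in mind that the 13% figure is rounded.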

Globally, China and the United States are the countries most active in, and most willing to, invest in computing power. According to data from the international market research firm Omdia, the top five buyers of NVIDIA's Hopper series chips globally in 2024 were Microsoft (485,000 units), ByteDance (230,000 units), Tencent (230,000 units), Meta (224,000 units), and Amazon (196,000 units).

In the fourth quarter of 2024, the capital expenditure growth rates of Tencent and Alibaba reached 386% and 258%, respectively, ranking first and second among the world's major technology companies. According to Caijing Magazine, the total capital expenditures of ByteDance, Alibaba, and Tencent are expected to increase by approximately 69% in 2025, while those of Amazon, Microsoft, Google, Meta, and Oracle are expected to increase by only about 29% this year.

Therefore, Jen-Hsun Huang stated that not being able to enter the Chinese market would be a "huge loss" for the company. He estimates that the Chinese AI market could reach $50 billion in the next two to three years.

So what can NVIDIA do if it wants to stay in the Chinese market?

First, it requires appropriate technical compromises: reducing the number of transistors from the original 208 billion in the B200 to about 150 billion, potentially increasing HBM capacity to 120GB to compensate for the loss of computing power, and using multi-chip interconnect technology (NVLink) to combine compute units. Second, compliance needs to be further tightened: replacing HBM with GDDR7 and limiting interconnect bandwidth to below 500GB/s to meet US "performance density" thresholds. Third, customized production: such products are expected to launch in the later quarters of 2025, aiming to maintain a generational advantage over local chips like Huawei's Ascend while complying with regulations.

In short, NVIDIA can only provide mid-to-low-end chips in the mainland China market for now, and continued "modifications and overhauls" are unavoidable. Some experts believe that NVIDIA could also keep providing computing power to Chinese enterprises through "cloud services and computing power leasing." The author believes that while this idea is good, the US Bureau of Industry and Security (BIS) has directly issued guidance on "how to prevent US AI chips from being used to help train large Chinese models," which will inevitably limit NVIDIA's room for adjustment.

Final Thoughts

For this series of new products, Jen-Hsun Huang specifically changed the promotional slogan, from "The more you buy, the more you save" to "The more you buy, the more you create." It seems that Jen-Hsun has realized that at this stage, everyone is more concerned about how AI can be put to practical use and whether it can help businesses and individuals make money. NVIDIA can tell all kinds of stories, but what businesses need most is lower costs and higher efficiency. NVIDIA needs to tell everyone what to do right now.
