Surpassing OpenAI? The "Frenzy" of Domestic Reasoning Models in H1 2025

05/29 2025

By Intelligent Relativity

At the start of this year, DeepSeek-R1 drew wide attention across the global AI community for its cost-effectiveness, high performance, and open-source nature. It briefly eclipsed OpenAI and set off a sustained "frenzy" among domestic reasoning models.

Looking back at the first half of 2025, statistics compiled by "Intelligent Relativity" show that, beyond DeepSeek, companies including Alibaba, iFLYTEK, Xiaomi, Moonshot AI, and SenseTime have each released large models that they say match or surpass OpenAI's models.

Graphic by Intelligent Relativity

The accelerating "frenzy" around domestic models is undeniable. In April, OpenAI changed course and released its o3 and o4-mini models, possibly in response to pressure from these latecomers. For domestic models, however, the first half of the year was about more than catching up with and surpassing OpenAI on performance: their own development path gradually took shape and began to show early momentum.

"Overtaking on the Curve" for Domestic Models

DeepSeek's popularity did not come solely from performance rivaling OpenAI's. What mattered more was the disruptive combination of low cost and an open-source ecosystem, which let it rapidly "break out" and become a globally recognized AI model. Over the past six months, other domestic models appear to have followed the same breakout playbook.

1. **Low-Cost Breakthrough**: Catching Up with OpenAI's Performance with Less Computing Power

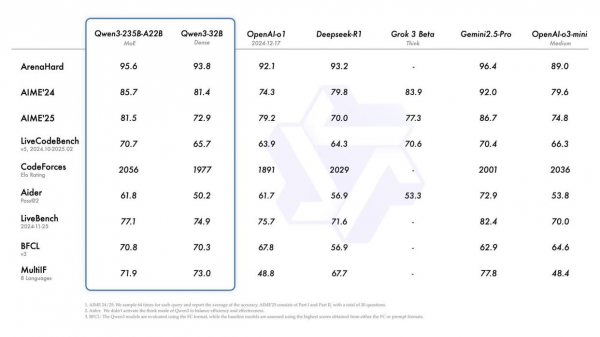

Not long ago, Alibaba's Qwen3 (Tongyi Qianwen 3) topped the rankings as the world's most powerful open-source model, a notable breakthrough for domestic models. The key was not only the quick rollout of the model in the Tongyi app but also its combination of high performance and low cost. While outperforming OpenAI-o1 and DeepSeek-R1, it is significantly cheaper to deploy: the full version runs on just four H20 GPUs, with GPU memory usage about one-third that of models with similar performance.

Although it remains difficult for domestic models to decisively outperform OpenAI, steadily falling deployment costs amount to catching up from a different angle. Almost simultaneously, iFLYTEK upgraded Spark X1, benchmarking its overall performance against OpenAI-o1 and DeepSeek-R1. On compute cost, Spark X1 is billed as the industry's only deep reasoning model trained entirely on domestic computing power, and it can be deployed on just four Huawei Ascend 910B chips, once again underscoring the low-cost profile of domestic models.

As Sino-US rivalry intensifies, US restrictions on China's access to computing power are growing increasingly strict. To some extent, the focus on low cost is a forced response, but it is also a viable route to a breakout. Thanks to low-cost deployment, domestic models are better suited to making AI broadly accessible and to industry applications. If they keep making progress along this path, their penetration across industries should continue to grow strongly in the second half of the year.

2. **Open Source Ecosystem Breakthrough**: Redefining Industry Rules, Breaking Technical Hegemony

DeepSeek-R1 is released under the MIT license and Qwen3 under the Apache 2.0 license, both highly permissive open-source licenses. Facing OpenAI's closed-source dominance, domestic vendors are keener to use open-source strategies to attract global developers and build a broader model ecosystem, offsetting OpenAI's first-mover advantage and performance focus. More domestic vendors are now replicating this strategy; Xiaomi, for example, has open-sourced MiMo.

Chinese model vendors are now differentiating themselves along the open-source path. Beyond releasing models in a range of parameter sizes, they also publish quantized versions, complete training datasets, and the data templates needed for fine-tuning, addressing the practical needs of different scenarios. Some even provide detailed API designs and documentation so that developers can integrate and use the models quickly.

Domestic vendors have taken a proactive stance on open-sourcing. Rather than only chasing performance, they are trying to balance openness with performance and to pull ahead through better services and ecosystems. Notably, DeepSeek-R1 and Qwen3 have been well received by developers in open-source communities such as Hugging Face. The Qwen family as a whole now has more than 100,000 derivative models worldwide, overtaking Meta's Llama for first place, a sign that the open-source strategies of domestic models are winning over a growing number of global developers.

3. **Enhancement of Specific Capabilities**: Tailored for Industry Applications, Domestic Pragmatism at Its Peak

For now, domestic models' advantages over OpenAI show up mainly in specific capabilities and tasks. SenseTime's SenseNova V6 (Ririxin V6) benchmarks its multimodal processing against OpenAI-o1 and, through its latest iteration, has become the first large model in China to support in-depth analysis of medium-length and long videos of up to 10 minutes.

Why focus on specific capabilities like this? SenseTime believes that video and combined image-text content represent a larger consumption market than text alone. SenseNova V6 therefore merges speech, video, and text into a unified, timeline-aligned context representation, enabling more natural and efficient human-computer interaction. This approach is driven mainly by practical industry applications, and SenseNova V6's enhanced capabilities are intended precisely to lay the groundwork for breakthroughs in embodied intelligence.

This approach echoes the ancient Chinese strategy of "Tian Ji's Horse Racing," in which the weaker side wins by matching its strengths against the opponent's weaker points instead of meeting it head-on. While OpenAI-o1 holds a clear advantage in structured reasoning, domestic models compete asymmetrically on Chinese-language contexts, multimodal capabilities, and cost efficiency, avoiding direct confrontation with OpenAI's strengths. This also matches local industries' current requirements for AI applications, helping domestic models enter the enterprise market and commercialize faster.

Model "Frenzy": OpenAI Turns Left, Domestic Turns Right

In the first half of this year, OpenAI launched its more capable o3 and o4-mini models. Although they cannot match the striking cost efficiency of domestic models, OpenAI delivered better performance at the same latency and cost as the previous generation.

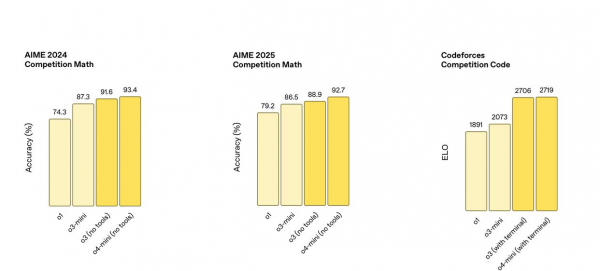

OpenAI's pursuit of model performance still sets the industry benchmark. As its latest flagship models, o3 and o4-mini (without tools) achieved accuracy of 91.6% and 93.4%, respectively, on the AIME 2024 math competition, far above o1's 74.3%. On Codeforces competitive programming, o3 and o4-mini with terminal access reached Elo ratings of 2706 and 2719, respectively. Their lead is clear, reflecting significant breakthroughs in mathematics and coding.

OpenAI's new models also show stronger knowledge question answering and multimodal reasoning, reaffirming the company's lead in the AI industry. Domestic models, by contrast, tend to focus on industry applications, seeking AI solutions that better fit real needs by combining targeted capabilities, cost efficiency, and integration into application workflows.

Recently, StepFun released and open-sourced the 3D generation model Step1X-3D, publishing at the same time its complete data-cleaning and data-preprocessing strategies along with 800K high-quality 3D assets. It also open-sourced the end-to-end training code for the 3D VAE, 3D geometry diffusion, and texture diffusion components. Through these releases, domestic models are not only benefiting developers but also leading the way toward a potentially transformative new 3D community ecosystem.

Stronger capabilities in vertical domains, open-source strategies to attract developers, and cost-efficiency advantages of various kinds: in the first half of the year, domestic models converged on broadly similar strategies that combine these strengths in pursuit of market breakthroughs. That said, they have not abandoned the mainstream direction of progress. Multimodal capabilities, for instance, remain a primary focus of iteration for both OpenAI and domestic vendors, and domestic vendors also continue to invest in research on, and breakthroughs in, foundational model capabilities.

Closing Thoughts

The first half of 2025, now drawing to a close, has been a pivotal period for domestic models: a critical stage in which a cohort of them kept pushing for breakthroughs amid OpenAI's aggressive offensive and fierce global competition. Although domestic models are still keen to benchmark themselves against OpenAI, the path they are taking is gradually acquiring its own distinct character.

To some extent, domestic models are no longer mere followers but are becoming leaders in their own right, and this trend may become increasingly evident across many domestic models in the second half of the year.

*All images in this article are sourced from the internet.*