Understanding the World Model in Autonomous Driving

![]() 06/24 2025

06/24 2025

![]() 512

512

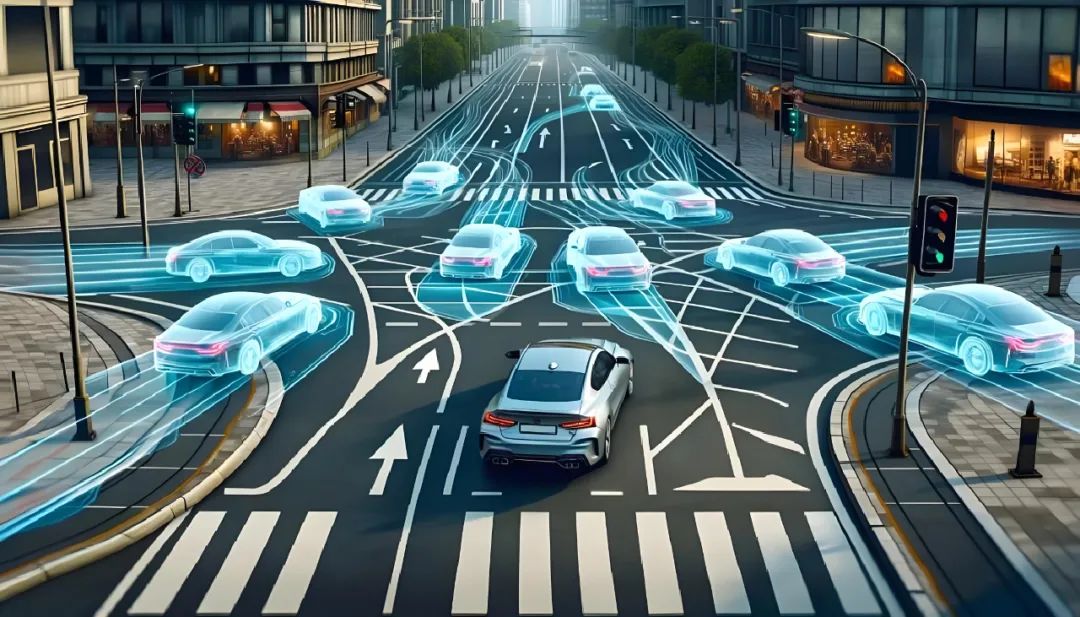

As autonomous driving technology continues to advance, vehicles must navigate complex and ever-changing road environments safely. This necessitates a system capable of not only "seeing" the surrounding world but also "understanding" and "predicting" future changes. The world model serves as a technology that abstracts and models the external environment, enabling the autonomous driving system to describe and predict the real world within a compact internal "miniature," thereby providing robust support for critical processes such as perception, decision-making, and planning.

What is a World Model?

Imagine the "world model" as a fusion of a "digital map" and a "future predictor." Traditional maps inform us of our current location, road shapes, and static information, but the world model goes beyond this. It not only records current road conditions but also simulates potential changes in the upcoming seconds or minutes. For instance, when an autonomous vehicle navigates urban roads, it continuously gathers information about the surroundings, such as pedestrians, other vehicles, traffic lights, etc., using cameras, lidars, and other sensors. The world model converts these input data into a smaller, more abstract internal "state," akin to compressing a high-resolution street view into a series of digital codes.

When the vehicle needs to assess whether the car in front is decelerating or accelerating, or if pedestrians might cross the road, it simulates various action outcomes multiple times in this "digital space" to swiftly determine the safest course of action. Directly performing prediction calculations on raw pixels or point clouds from cameras or radars when acquiring and understanding real images would be slow and resource-intensive. However, by first "compressing" the environment into a low-dimensional digital representation and making multi-step deductions in this space, computation efficiency significantly increases, and it becomes easier to handle uncertainty due to sensor noise.

Achieving such "abstraction and simulation" involves automatic learning through neural networks. The entire process can be divided into three key steps: "compression," which transforms original high-dimensional perception data like images and point clouds into a more concise vector representation; "prediction," which learns how the environment evolves over time in this vector space; and "restoration," which "decodes" the predicted vector back into images or other visual information to aid the system in assessing whether the simulation results align with reality.

In academia and industry, this encoding-prediction-decoding approach is predominantly implemented using a technology called "Variational Autoencoder" (VAE) or its advanced version, "Recurrent State Space Model" (RSSM). VAE initially learns to compress each frame of camera images into a "latent vector" and then attempts to reconstruct similar images from this vector. RSSM, on the other hand, adds a time dimension to the latent vector, capturing dynamic changes between consecutive frames through recurrent neural networks (such as LSTM or GRU). In this manner, the world model can establish a stable digital representation for the current environmental state and make short- and long-term multi-step predictions within this space.

Why Does Autonomous Driving Need a World Model?

The application of the world model to simulation training for autonomous driving simplifies the process. Instead of risking real-world experiments, the computer "rehearses" in a simulated environment. Historically, most autonomous driving algorithms relied on "model-free training," requiring numerous attempts, collisions, and corrections in real or highly simulated scenarios, consuming vast simulation resources and time. The "model-based training" approach introduced by the world model involves the vehicle first collecting sufficient real driving data to train a model that accurately replicates the real world. Subsequently, the algorithm performs continuous reinforcement learning and strategy optimization within this model, only returning to the real environment for verification when necessary, significantly reducing the dependence on real vehicles and roads. This approach is akin to pilots training repeatedly in simulators before flying real planes, enhancing safety and significantly lowering training costs.

Once the world model accurately reflects real traffic rules and dynamics, it accelerates the autonomous driving system's learning of how to avoid hazards, follow cars, overtake, and manage emergencies, without the need for constant real-world testing.

Due to variations in traffic conditions across cities and road segments, an algorithm trained in a single scenario may perform poorly in new environments. The world model simulates various scene changes in the latent space, including urban roads during peak hours, dimly lit suburban highways at night, rainy road sections with water accumulation, and even extreme situations like sudden accidents or pedestrian intrusions. By integrating the characteristics of different scenarios within a single model, the autonomous driving algorithm can repeatedly practice multiple extreme operating conditions during the "internal simulation" stage, thereby enhancing its adaptability and robustness when facing new scenarios on real roads. In essence, the world model provides a "versatile training ground" for the algorithm, enabling it to "practice" under various complex situations in advance and improve its generalization ability.

When deploying the world model on actual automotive hardware, several interesting technical details arise. The on-board computing unit (ECU) typically has limited computing power and memory, necessitating the use of techniques like knowledge distillation to prune, quantize, or compress the trained world model, ensuring low latency during real-time operation. Many manufacturers also utilize dedicated hardware acceleration platforms, such as NVIDIA Drive or NVIDIA's Xavier module, to load deep neural network models onto specialized chips. In such a software-hardware integrated architecture, the vehicle can complete the encoding and prediction of the world model within milliseconds, providing rapid and reliable "future scene" information to the decision-making module. For example, if it predicts a pedestrian might rush out from the right side within the next three seconds, the vehicle can swiftly calculate the optimal braking or steering plan to ensure safety.

Difficulties in Deploying the World Model

Implementing and leveraging the advantages of the world model is not trivial. The first major challenge lies in data collection and diversity. For the world model to accurately replicate reality, it requires a vast amount of high-quality data spanning various scenarios like roads, weather, traffic density, etc. However, collecting sufficient samples for extreme or risky scenarios, such as road flooding during heavy rain, pedestrians or vehicles suddenly appearing on sharp curves, or vehicle out-of-control situations, is often difficult in real environments. If the model performs well only on "ordinary" data, it may struggle when rare scenarios actually occur. To address this, some technologies propose combining real data with simulation data, first using virtual simulators to generate "supplementary samples" of extreme conditions and then fine-tuning with real data. Simultaneously, domain adaptation and other technologies are employed to reduce the loss when the model is transferred between different data sources, thereby minimizing the performance gap from "simulation to reality."

The second major challenge is the accumulation of errors in long-term predictions. Since the world model predicts the next step in the latent space based on the previous step's result, as the number of prediction steps increases, small errors accumulate, eventually leading to a significant deviation from the real environment. This is acceptable for short-term predictions (like one or two seconds) but requires special attention for longer-term planning. This can be mitigated by using a training strategy that combines "semi-supervised, autoregressive" and "teacher forcing," allowing the model to learn to use its own predicted output as the next input while occasionally correcting it with real observation data. Additionally, a penalty for multi-step prediction errors is added to the loss function, making the model more sensitive to the stability of long-distance time series. During real vehicle testing, if the deviation between the model prediction and real observation exceeds a threshold, an online calibration mechanism is activated to forcibly align the model state with the real data, thereby preventing error explosion over long time horizons.

The third major challenge is ensuring the world model has a certain degree of interpretability and safety guarantees. Autonomous driving is a safety-critical system. If the "latent vectors" within the model are as inscrutable as a black box, tracing the root cause of abnormal vehicle decisions becomes difficult. Moreover, the model might be disrupted by adversarial attacks, causing it to output vastly different predictions for the same road condition, posing a severe threat to driving safety. To address this, interpretable designs can be added to the world model, such as having specific latent vectors correspond to lane lines, traffic signs, or other geometric information, making part of the model's internal structure a "white box" for easier troubleshooting and verification. Additionally, large-scale adversarial sample testing is conducted before deployment to assess robustness under noise or intentional tampering, and security checks are performed on the latent vector space to ensure timely triggering of emergency braking or safety warnings under abnormal inputs.

Future Trends of the World Model

With the advancement of self-supervised learning and multi-source data fusion technologies, the world model will undergo further optimization. Currently, most world models still require substantial labeled or weakly labeled data for learning. In the future, an ideal approach would enable the model to mine temporal and spatial patterns from millions of unlabeled driving videos, using contrastive learning to ensure latent representations remain consistent across different times or perspectives, thereby enabling continuous improvement without relying on manual annotation. Furthermore, future world models are expected to integrate symbolic reasoning, such as expressing traffic rules, road network topology, driving intentions, etc., using logical symbols, complementing the representations learned by neural networks. This "hybrid" world model will be more stable, reliable, and easier to pass regulatory and safety certifications.

With the proliferation of Vehicle-to-Everything (V2X) technology, the world model can collaboratively perceive with the cloud and other vehicles to achieve real-time online updates. When a large-scale congestion or accident suddenly occurs in a certain area, road condition information detected by other vehicles and high-precision map updates from the cloud can be immediately fed back to the world model of each vehicle, allowing them to swiftly adjust predictions and enhance their sensitivity to extreme situations.

The world model imparts an ability to "simulate in the mind" to the autonomous driving system, enabling vehicles to make multi-step predictions about the future environment within a smaller and more efficient internal space. This accelerates decision-making, reduces the risk of misjudgment, and enables calmer performance when facing diverse and complex road scenarios. However, to maximize the benefits of this ability, continuous optimization and breakthroughs are needed in data collection, long-term prediction stability, interpretability, safety, and vehicle-end deployment efficiency. With the ongoing progress in deep learning, hardware acceleration, and V2X technologies, the world model will play an increasingly pivotal role in the field of autonomous driving, helping us achieve a safer and more intelligent self-driving travel experience.

-- END --