The Evolution of Mobile HBM: Bridging the Gap to Jarvis-Like Intelligence

08/08 2025

In the Marvel Cinematic Universe, Iron Man's AI butler Jarvis is a vision of futuristic AI: it understands complex commands, supplies real-time information, and makes the armor more effective. Today, mobile High Bandwidth Memory (HBM) technology is pushing smartphones toward similarly powerful AI capabilities. But how close are we to experiencing a Jarvis-like intelligence on our mobile devices?

To answer that, let's first look at the limitations of traditional memory technology in mobile devices. With the rise of AI photography, voice assistants, and other advanced features, smartphones must process large amounts of data in real time. For instance, when capturing an AI-optimized photo, the phone analyzes and processes the image as it is taken, which requires fast memory reads and writes. Traditional LPDDR memory meets everyday needs, but it struggles with high-performance workloads such as gaming and heavy multitasking, leading to latency and sluggishness.

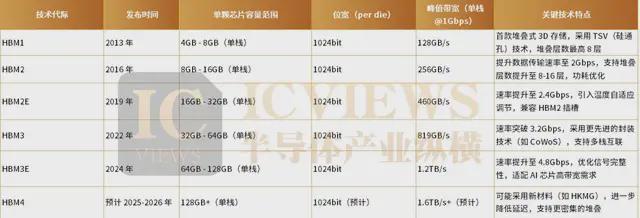

LPW DRAM (Low Power Wide I/O DRAM), a form of mobile HBM, stacks LPDDR DRAM to boost memory bandwidth. Just as HBM stacks conventional DRAM in 8 or 12 layers to raise data throughput, mobile HBM stacks LPDDR dies to achieve higher bandwidth while keeping power consumption low. The key difference from LPDDR lies in customization: LPDDR is a general-purpose product, while mobile HBM is tailored to specific application and customer requirements, so each product needs its own design optimizations.

HBM drills microscopic holes through the DRAM dies and connects the layers with electrodes, a technique known as through-silicon vias (TSVs). Mobile HBM adopts a similar stacking concept but arranges the dies in a staircase and connects them to the substrate with vertical wires. Both Samsung and SK Hynix recognize mobile HBM's potential, but they are taking different technical routes.

The popularity of AI has propelled HBM into the spotlight. Yole's "Status of the Memory Industry 2025" report highlights that HBM is outperforming the rest of the DRAM sector. In 2025, HBM revenue is projected to nearly double, reaching $34 billion, driven by demand from AI and high-performance computing (HPC). Yole predicts a 33% CAGR through 2030, by which point HBM revenue would exceed 50% of total DRAM market revenue. The major memory makers are upgrading their HBM technology and increasing production while also exploring mobile HBM.

Samsung has announced that its first mobile product with LPW DRAM, designed specifically to optimize AI performance, will arrive in 2028. As a form of mobile High Bandwidth Memory, LPW DRAM promises high performance at low power consumption, and Samsung hopes it will solidify its leadership in mobile memory for device-side AI.

At the 2025 International Solid-State Circuits Conference (ISSCC), Samsung CTO Song Jae-hyuk revealed that the first LPW DRAM mobile product, tailored for device-side AI, will launch in 2028. This was Samsung's first clear disclosure of a launch date for LPW/LLW DRAM.

LPW DRAM, also known as LLW or "custom memory," aims to reduce power consumption and enhance performance by increasing the number of input/output (I/O) channels while lowering the speed of each individual channel. It uses vertical wire bonding (VWB) packaging to straighten the electrical signal paths. Samsung has set ambitious performance targets for LPW DRAM: I/O speeds 166% faster than LPDDR5X (exceeding 200 GB/s) and power consumption 54% lower (1.9 pJ/bit).
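The percentages and absolute figures quoted for LPW DRAM can be cross-checked with a little arithmetic. The sketch below assumes that "166% faster" means 2.66 times the LPDDR5X figure, that "exceeding 200 GB/s" can be treated as exactly 200 GB/s, and that 1 GB is 10^9 bytes. The function names are illustrative, and the derived LPDDR5X baseline values are implied by these assumptions rather than quoted from Samsung.

```python
# Targets quoted in the text for Samsung's LPW DRAM.
LPW_BANDWIDTH_GB_S = 200.0     # "exceeding 200 GB/s", treated as exactly 200 (assumption)
LPW_ENERGY_PJ_PER_BIT = 1.9
FASTER_PCT = 166.0             # "166% faster" read as 2.66x the baseline (assumption)
LOWER_POWER_PCT = 54.0         # "54% lower" than the baseline


def implied_baseline_bandwidth(target_gb_s, faster_pct):
    """Baseline bandwidth if the target is `faster_pct` percent faster than it."""
    return target_gb_s / (1.0 + faster_pct / 100.0)


def implied_baseline_energy(target_pj_per_bit, lower_pct):
    """Baseline energy per bit if the target is `lower_pct` percent lower than it."""
    return target_pj_per_bit / (1.0 - lower_pct / 100.0)


def io_power_watts(bandwidth_gb_s, energy_pj_per_bit):
    """Power spent moving data at full bandwidth: bits per second times joules per bit."""
    bits_per_second = bandwidth_gb_s * 1e9 * 8
    return bits_per_second * energy_pj_per_bit * 1e-12


baseline_bw = implied_baseline_bandwidth(LPW_BANDWIDTH_GB_S, FASTER_PCT)
baseline_energy = implied_baseline_energy(LPW_ENERGY_PJ_PER_BIT, LOWER_POWER_PCT)
lpw_power = io_power_watts(LPW_BANDWIDTH_GB_S, LPW_ENERGY_PJ_PER_BIT)
baseline_power = io_power_watts(baseline_bw, baseline_energy)

print(f"Implied LPDDR5X bandwidth: {baseline_bw:.1f} GB/s")
print(f"Implied LPDDR5X energy:    {baseline_energy:.2f} pJ/bit")
print(f"LPW I/O power at peak:     {lpw_power:.2f} W")
print(f"Baseline I/O power at peak: {baseline_power:.2f} W")
```

Under these assumptions the implied LPDDR5X baseline is roughly 75 GB/s at about 4.1 pJ/bit, and moving data at the full 200 GB/s would cost about 3 W. In other words, the targets describe roughly 2.7 times the data moved for only modestly more I/O power.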

However, mobile DRAM such as LPDDR is poorly suited to the TSV connections used in HBM: the dies are smaller, and the TSV process is costly and low-yield. Samsung and SK Hynix have therefore adopted alternative advanced packaging methods:

Samsung's VCS (Vertical Copper Pillar Stacking) method stacks DRAM chips in a stepped formation, encapsulates them in epoxy, drills holes into the hardened epoxy, and fills the holes with copper. Compared to traditional wire bonding, VCS improves I/O density by 8x and bandwidth by 2.6x, and it raises production efficiency by 9x over VWB.

SK Hynix uses copper wires instead of pillars: it connects the stacked DRAM with copper wires and fills the gaps with epoxy resin, an approach it calls VFO. This combines fan-out wafer-level packaging (FOWLP) with DRAM stacking, which shortens the electrical signal paths to less than a quarter of their length in traditional memory and improves energy efficiency by 4.9%. It also increases heat dissipation by 1.4% and reduces package thickness by 27%.

HBM, a cornerstone of high-performance computing, is now moving toward the smartphone market, where it is expected to reshape edge AI capabilities and has already ignited a technological race between Apple and leading domestic manufacturers.

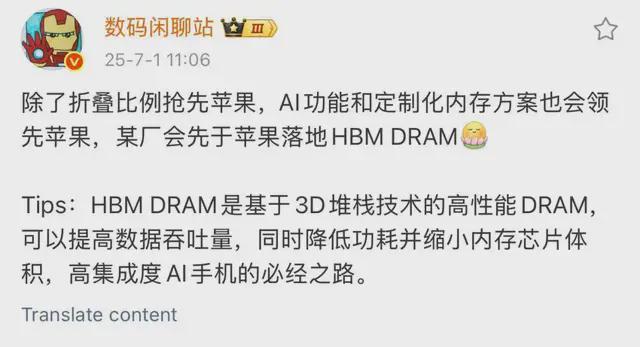

Apple plans to equip its 2027 flagship, which marks the iPhone's 20th anniversary, with a mobile version of HBM. This reflects Apple's ecosystem synergy strategy: it is working with both Samsung and SK Hynix to develop HBM solutions suited to mobile devices. Both solutions target mass production in 2026, which would maintain Apple's technological lead and pave the way for performance upgrades in the 2027 iPhone.

Domestic mobile phone manufacturers are also integrating mobile HBM technology, and one leading manufacturer has included it in the configuration of an upcoming model. By co-designing its self-developed memory controller with its chip architecture, the manufacturer aims to bring the technology into production.

This "hardware-software-algorithm" integration underpins the effort: the manufacturer's self-developed 3D graphene heat dissipation film removes the heat generated by the HBM stack, and the dynamic memory scheduling mechanism in its proprietary OS balances power consumption against bandwidth performance.

The manufacturer's expertise in new-generation communication and multi-device interconnection may broaden the applications of mobile HBM. When smartphones serve as intelligent hubs for cars, smart homes, and other devices, high-bandwidth memory could speed up responses to cross-device AI tasks, a key element of the manufacturer's technology roadmap.

Industry insiders believe domestic enterprises may lead in launching mobile HBM-equipped phones due to their self-developed SoC chips, OS support, and heat dissipation patents, forming a technological closed loop. Strategically, they are eager to break into the high-end market through edge AI experiences.

The technical paths of Apple and the domestic manufacturers reflect different strategic considerations. Apple focuses on user experience, optimizing hardware parameters and adapting its Core ML framework to HBM. Domestic enterprises prioritize building an independent technology stack, aiming to raise mobile HBM yield from 50% to over 70% through progress in local TSV technology and thereby improve the cost structure for mass production.
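To see why the yield target matters for cost, consider a deliberately simple model in which every unit started costs the same to process and only good units can be sold. This is an assumption for illustration, not a cost model from the source; the function names and the unit cost of 1.0 are hypothetical.

```python
def cost_per_good_unit(cost_per_unit_started, yield_fraction):
    """Effective cost of one sellable unit when only `yield_fraction` of units pass."""
    if not 0.0 < yield_fraction <= 1.0:
        raise ValueError("yield_fraction must be in (0, 1]")
    return cost_per_unit_started / yield_fraction


def relative_cost_change(old_yield, new_yield):
    """Fractional change in cost per good unit; the absolute unit cost cancels out."""
    return old_yield / new_yield - 1.0


# Yield figures from the text: 50% today, a target of over 70%.
OLD_YIELD = 0.50
NEW_YIELD = 0.70

# Hypothetical normalized processing cost of 1.0 per unit started.
cost_before = cost_per_good_unit(1.0, OLD_YIELD)
cost_after = cost_per_good_unit(1.0, NEW_YIELD)
change = relative_cost_change(OLD_YIELD, NEW_YIELD)

print(f"Cost per good unit at 50% yield: {cost_before:.2f}x the processing cost")
print(f"Cost per good unit at 70% yield: {cost_after:.2f}x the processing cost")
print(f"Change in cost per good unit:    {change:.1%}")
```

In this simplified model, moving from 50% to 70% yield cuts the cost of each sellable unit by a little under 29%, before any other process savings are counted.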

A Jarvis-like intelligent experience on mobile HBM is still a long way off. Mobile HBM raises memory bandwidth and performance, but more powerful processors and more efficient AI chips are also needed. Current mobile hardware falls short of an ideal Jarvis platform in both computing power and energy efficiency, and it struggles to run large AI models with the fast response and efficient processing they require.
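A common rule of thumb gives a feel for the bandwidth side of this gap: when a dense language model generates text one token at a time, it has to read roughly all of its weights from memory for each token, so memory bandwidth sets a ceiling on tokens per second. The sketch below applies that rule under stated assumptions. The model sizes (7 and 70 billion parameters) and the 4-bit weight format are hypothetical examples, the 200 GB/s figure is the LPW DRAM target quoted earlier in the article, and compute limits and memory capacity are not modeled, so real throughput would be lower.

```python
def weight_bytes(num_params, bits_per_weight):
    """Total size of the model weights in bytes."""
    return num_params * bits_per_weight / 8


def max_tokens_per_second(num_params, bits_per_weight, bandwidth_gb_s):
    """Upper bound on decode speed if every token requires reading all weights once."""
    bytes_per_second = bandwidth_gb_s * 1e9
    return bytes_per_second / weight_bytes(num_params, bits_per_weight)


BANDWIDTH_GB_S = 200.0   # LPW DRAM target from the article
BITS_PER_WEIGHT = 4      # hypothetical quantization level

# Hypothetical model sizes chosen only to show the scaling.
for label, params in [("7B", 7e9), ("70B", 70e9)]:
    size_gb = weight_bytes(params, BITS_PER_WEIGHT) / 1e9
    ceiling = max_tokens_per_second(params, BITS_PER_WEIGHT, BANDWIDTH_GB_S)
    print(f"{label}: {size_gb:.1f} GB of weights, at most {ceiling:.1f} tokens/s")
```

Under these assumptions the smaller model has a comfortable ceiling of a few dozen tokens per second, while the larger one is limited to single digits and needs 35 GB for its weights alone, which illustrates why bandwidth gains by themselves do not close the distance to large-model performance on a phone.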

Software and algorithms are another challenge. Jarvis understands complex human language and emotion at a level far beyond current mobile AI assistants. Developing far more capable algorithms that can take advantage of mobile HBM is crucial.

Data-wise, Jarvis accesses vast amounts of data for analysis and decision-making. Mobile devices' storage and data access limitations hinder such extensive data acquisition. Safely and effectively utilizing data while ensuring user privacy is another hurdle.

Cost and adoption are also factors. Mobile HBM is expensive, and the cost climbs further when it is combined with the other high-end hardware a Jarvis-like experience requires, which limits how widely such products can spread. Only as the technology matures and costs fall will more consumers be able to enjoy a Jarvis-like intelligent experience.

Despite the distance that remains to a Jarvis-like intelligence, mobile HBM technology marks a significant step forward. Intelligence on mobile devices will grow more efficient and more capable, driving coordinated upgrades across the semiconductor and smart device industries.