AI at a "turning point": the trinity of computational power, algorithms, and data fully aligned

08/06 2024

Author: Intelligent Relativity

Recently, NVIDIA CEO Jen-Hsun Huang and Meta founder Mark Zuckerberg held a fireside chat.

As two of today's AI leaders, NVIDIA holds a commanding position in computational power thanks to its dominance in AI chips, while Meta has become the open-source benchmark with the strong rise of its open-source large model Llama 3.1. Their dialogue offers differing perspectives on where AI is heading.

Jen-Hsun Huang in Conversation with Mark Zuckerberg

The dialogue between these two luminaries sketches a blueprint for the future of AI technology, from open-source AI algorithms to advanced humanoid robots to smart glasses that may soon be ubiquitous, and the road ahead is full of opportunities and challenges. AI phones, AI PCs, AI cars, smart glasses, servers, and many other products will all undergo intelligent upgrades, and the complex models, massive data, and heavy computation behind them will depend on the support of AI computational power.

AI computational power is also expanding from specialized computing to all computing scenarios, gradually forming a pattern of "all computing is AI."

In fact, the moves of computational power vendors reflect what the market demands from computational power. On the one hand, CPUs, GPUs, NPUs, and various other processing units are all being used for AI computation.

On the other hand, Inspur Information is committed to offering high-performance, low-cost general-purpose servers suited to different application scenarios. Recently, on its flagship 2U four-socket general-purpose server NF8260G7, Inspur Information applied leading tensor parallel computing and NF4 model quantization technologies to run the 100-billion-parameter "Yuan 2.0" large model on just four CPUs, once again setting a new benchmark for general-purpose AI computational power.

In today's market, the industrial status of computational power is rising rapidly. Of the trinity driving AI development (computational power, algorithms, and data), the three have finally reached equal status and are moving toward parallel development.

It is worth noting that in the early stages of AI development, China, with its vast internet user base and abundant online data resources, focused on data. The United States, with its long research tradition in computer science, mathematics, and statistics, focused more on algorithms. By comparison, computational power received relatively little attention in those early stages.

Today, the trinity is advancing in parallel. The public increasingly understands that the explosion of the AI industry results from the collaborative development of algorithms, computational power, and data. This marks a new phase for the AI industry.

The AI Industry Arrives at a "Turning Point"

At this stage, the accelerating iteration of large model technology has led to a steady stream of new and refined 100-billion-parameter large models. Related AI applications are also penetrating industries at unprecedented speed and scale, becoming part of daily life and work.

The AI industry has reached a "turning point" in its transition from initial exploration to widespread application. Along the way, the trinity of AI (computational power, algorithms, and data) has arrived at a critical juncture of comprehensive, collaborative development, providing the technical support needed for leapfrog upgrades in application scenarios.

Take bank anti-fraud systems as an example. Early systems were built on big data, relying on experience-based preset rules and statistical models to detect suspicious transactions. Today, by combining big data systems and AI fraud-prevention models with higher-performance general-purpose computational power, bank anti-fraud systems have been upgraded: they are more accurate, raise fewer false positives, and can learn from new data to adapt to new fraud patterns.
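The contrast between preset rules and a system that learns from new data can be sketched in a few lines. This is a hypothetical illustration, not a description of any bank's system: the rules, the feature names, and the "new device" fraud pattern are invented, and the learner is a plain logistic regression updated by online stochastic gradient descent as labelled transactions arrive.

```python
import math


def rule_based_flag(txn):
    # Hypothetical preset rules of the kind early, experience-based systems used.
    return txn["amount"] > 10_000 or (txn["foreign"] and txn["night"])


class OnlineFraudModel:
    """Logistic regression updated one labelled transaction at a time (online SGD)."""

    def __init__(self, n_features, lr=0.5):
        self.w = [0.0] * n_features
        self.b = 0.0
        self.lr = lr

    def predict_proba(self, x):
        # Estimated probability that feature vector x is fraudulent.
        z = self.b + sum(wi * xi for wi, xi in zip(self.w, x))
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, x, label):
        # One gradient step on the log loss for a single example (label 1 = fraud).
        err = self.predict_proba(x) - label
        self.w = [wi - self.lr * err * xi for wi, xi in zip(self.w, x)]
        self.b -= self.lr * err


def features(txn):
    # Hypothetical features: scaled amount, foreign, night-time, new device.
    return [txn["amount"] / 10_000, float(txn["foreign"]),
            float(txn["night"]), float(txn["new_device"])]


# A "new" fraud pattern: small, domestic, daytime payments from a new device.
fraud = {"amount": 500, "foreign": False, "night": False, "new_device": True}
legit = {"amount": 500, "foreign": False, "night": False, "new_device": False}

model = OnlineFraudModel(n_features=4)
for _ in range(200):  # labelled feedback arriving over time
    model.update(features(fraud), 1)
    model.update(features(legit), 0)

print(rule_based_flag(fraud))  # False: the preset rules miss the new pattern
print(round(model.predict_proba(features(fraud)), 3),
      round(model.predict_proba(features(legit)), 3))
```

The fixed rules never change, while the model's weight on the "new device" feature grows as labelled cases accumulate, which is the "self-learning" behaviour the paragraph describes in miniature.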

The collaboration of algorithms, computational power, and data constitutes the basic paradigm of current AI applications. A successful AI project often requires appropriate investments and optimizations in all three aspects.

Algorithms act as the brain of AI, responsible for processing information, learning knowledge, and making decisions. Data serves as the foundation for algorithms; without sufficient data, even the most advanced algorithms cannot deliver their intended effects.

Furthermore, both algorithm execution and data processing depend on computational power. In scenarios involving massive data processing, complex model training, and real-time inference, and especially as those scenarios scale up, AI's demand for computational power must also be weighed against cost-effectiveness.

Today, the algorithm, computational power, and data layers of the AI trinity are still being upgraded in step, and the development of the AI industry is pushing their collaboration to new heights. Accelerating that development requires the three to keep an even more consistent pace.

It's Time to Fully Align the Trinity

The widespread application of AI must be built on the collaborative development of the trinity. In the coming period, upgrading the AI industry requires addressing a crucial question: how to maintain a stable state of parallel development among the trinity.

1. "Parallel Technology": Leading the way alone is not optimal; moving forward together is most stable.

Computational power, algorithms, and data complement each other. A lead in any single technology cannot trigger a comprehensive explosion in the AI industry; the other two must quickly catch up to resolve the technical issues it raises.

For instance, the accelerated development of 100-billion-parameter, and even trillion-parameter, large models brings stronger information processing and decision-making capabilities, laying the foundation for emergent intelligence. However, algorithmic breakthroughs must be matched by upgrades in computational power and data before they show their value in applications. Simply put, without enough computational power to meet the training and inference demands of 100-billion-parameter models, even the most powerful model has no practical use.
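To make the scale concrete, the sketch below estimates the memory needed just to store model weights at several precisions, plus the approximate compute per generated token, for 100-billion- and trillion-parameter models. It assumes dense models and uses the common rule of thumb of about 2 FLOPs (one multiply-add) per parameter per token for a forward pass; activations, the KV cache, and the per-block scaling overhead of 4-bit formats are ignored, so real requirements are higher.

```python
# Bytes needed to store one parameter at common precisions (NF4 = 4 bits,
# ignoring the small overhead of per-block scaling constants).
BYTES_PER_PARAM = {"FP32": 4.0, "FP16/BF16": 2.0, "INT8": 1.0, "NF4": 0.5}


def weight_memory_gb(n_params, precision):
    """Memory needed just to hold the weights, in GB (10**9 bytes)."""
    return n_params * BYTES_PER_PARAM[precision] / 1e9


def forward_gflops_per_token(n_params):
    """Rule of thumb for dense models: ~2 FLOPs per parameter per generated token."""
    return 2 * n_params / 1e9


for n_params in (100e9, 1e12):
    sizes = ", ".join(f"{p}: {weight_memory_gb(n_params, p):,.0f} GB"
                      for p in BYTES_PER_PARAM)
    print(f"{n_params:.0e} params -> {sizes}; "
          f"~{forward_gflops_per_token(n_params):,.0f} GFLOPs per token")
```

A 100-billion-parameter model needs about 200 GB for FP16 weights alone, which already exceeds the memory of a single typical accelerator card; this is why memory capacity and bandwidth feature so prominently in the server design that follows.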

To accelerate AI development and support the widest range of general scenarios across industries, 100-billion-parameter large models must run efficiently alongside big data, databases, cloud, and other workloads.

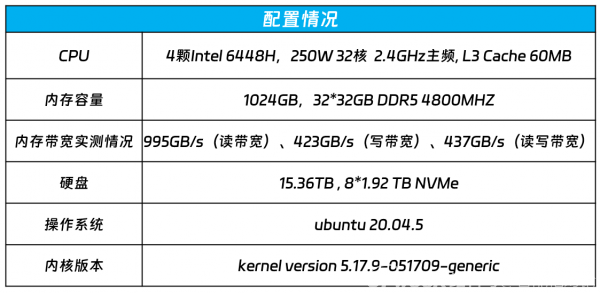

However, this goal demands substantial hardware resources for computing, memory, and communication. To meet the AI computational power needs of more users, computational power vendors must develop targeted solutions to overcome existing bottlenecks. Inspur Information's NF8260G7 AI general-purpose server, which can handle inference for 100-billion-parameter large models, is one example of such purpose-built design.

To meet the low-latency and very large memory requirements of 100-billion-parameter model inference, the NF8260G7 server is equipped with four Intel Xeon processors featuring AMX (Advanced Matrix Extensions) AI acceleration. For memory, the NF8260G7 is configured with 32 DDR5-4800 modules of 32 GB each (1 TB in total), with measured bandwidth of 995 GB/s for reads, 423 GB/s for writes, and 437 GB/s for mixed read-write traffic. This lays the foundation for low-latency, multi-processor concurrent inference on 100-billion-parameter models. Inspur Information has also optimized the high-speed interconnect signal routing and impedance continuity between CPUs, and between CPUs and memory, to better support large-scale concurrent computation.
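The memory figures above can be put in context with simple arithmetic. The sketch below uses the module count, module size, and measured read bandwidth from the text; it additionally assumes four sockets with eight DDR5 channels each and 8-byte-wide channels (typical of recent Xeon platforms, but not stated in the source) to estimate theoretical peak bandwidth. It then applies a common first-order model of memory-bound decoding at batch size 1, in which every weight is streamed from memory once per generated token.

```python
# Figures from the text: 32 modules x 32 GB DDR5-4800, measured read bandwidth.
DIMMS, DIMM_GB = 32, 32
MEASURED_READ_GBPS = 995

# Assumptions (not stated in the text): 4 sockets x 8 channels, 8 bytes per transfer.
SOCKETS, CHANNELS_PER_SOCKET, TRANSFERS_PER_S, BYTES_PER_TRANSFER = 4, 8, 4800e6, 8

capacity_gb = DIMMS * DIMM_GB
peak_gbps = SOCKETS * CHANNELS_PER_SOCKET * TRANSFERS_PER_S * BYTES_PER_TRANSFER / 1e9
efficiency = MEASURED_READ_GBPS / peak_gbps


def max_tokens_per_s(n_params, bytes_per_param, bandwidth_gbps=MEASURED_READ_GBPS):
    """Upper bound on batch-1 decode speed if all weights are read once per token."""
    return bandwidth_gbps * 1e9 / (n_params * bytes_per_param)


print(f"capacity {capacity_gb} GB, peak {peak_gbps:.1f} GB/s, efficiency {efficiency:.0%}")
for label, bpp in (("FP16", 2.0), ("4-bit", 0.5)):
    print(f"{label}: <= {max_tokens_per_s(102.6e9, bpp):.1f} tokens/s for 102.6B params")
```

Under these assumptions the measured 995 GB/s is roughly 81% of the theoretical peak, and the decode bound for the 102.6-billion-parameter model rises from about 4.8 tokens/s with FP16 weights to about 19.4 tokens/s with 4-bit weights. These are ceilings, not benchmarks: KV-cache traffic, dequantization cost, and cross-socket communication all lower real throughput.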

This design aims to tailor computational power to algorithms, providing crucial support for the coming large-scale application of 100-billion-parameter large models.

2. "Systematic Synchronization": When three horses pull a cart, the focus is on systematic optimization.

As AI technology evolves, the systematic nature of computational power, algorithms, and data becomes increasingly pronounced. Many tech giants are competing to find AI solutions with "high model performance and low computational power thresholds." AI-related solutions are no longer single-technology applications but rather comprehensive systemic upgrades achieved through breakthroughs in multiple fields.

For instance, Google's EfficientNet systematically optimizes how network depth, width, and input resolution are scaled. Its EfficientNet-B4 variant raises ImageNet top-1 accuracy by about 6.7 percentage points over ResNet-50 at a similar computational cost, and its smaller variants match conventional models' accuracy with far fewer computations. This shows that, while driving computational power upgrades, major model vendors also pursue software-level innovations that improve how well computational power and algorithms work together.

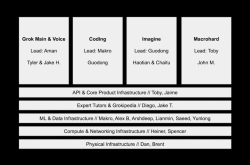

To let general-purpose servers run 100-billion-parameter large models better, Inspur Information optimized not only the server itself but also the model side. Building on the Yuan 2.0 algorithm, Inspur Information tensor-split the convolutional operators of the 102.6-billion-parameter Yuan 2.0 model so that the computation can be spread efficiently across the processors of a general-purpose server (tensor parallelism), ultimately improving inference efficiency.

Parallel Computing Based on CPU Servers
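The source does not say exactly how Yuan 2.0's operators were partitioned. As a hedged illustration of the general idea, the sketch below shows the column-parallel and row-parallel matrix splits popularized by Megatron-LM, with four workers standing in for the NF8260G7's four CPUs. Pure Python lists keep it dependency-free; the matrices and worker count are illustrative assumptions, and the nonlinearity that would normally sit between the two layers is omitted.

```python
def matmul(a, b):
    """Plain matrix product of list-of-lists matrices: (m x k) @ (k x n)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def column_parallel_matmul(x, w, parts=4):
    """Split w by columns across `parts` workers; concatenate the partial outputs."""
    n = len(w[0])
    assert n % parts == 0, "columns must divide evenly across workers"
    step = n // parts
    shards = [[row[p * step:(p + 1) * step] for row in w] for p in range(parts)]
    partial = [matmul(x, shard) for shard in shards]  # independent, one per worker
    # Each output row is that row from every worker's result, laid side by side.
    return [[v for out in partial for v in out[i]] for i in range(len(x))]


def row_parallel_matmul(x, w, parts=4):
    """Split w by rows (and x by the matching columns); sum the partial outputs."""
    k = len(w)
    assert k % parts == 0, "rows must divide evenly across workers"
    step = k // parts
    partial = [matmul([row[p * step:(p + 1) * step] for row in x],
                      w[p * step:(p + 1) * step])
               for p in range(parts)]
    # Summing the partial products plays the role of an all-reduce between workers.
    return [[sum(out[i][j] for out in partial) for j in range(len(w[0]))]
            for i in range(len(x))]


# Toy two-layer block: x (2x3) @ w1 (3x8), then h (2x8) @ w2 (8x2).
x = [[1, 2, 3], [4, 5, 6]]
w1 = [[1, 0, 2, 1, 0, 3, 1, 2],
      [0, 1, 1, 2, 1, 0, 2, 1],
      [1, 1, 0, 0, 2, 1, 0, 1]]
w2 = [[1, 0], [0, 1], [2, 1], [1, 1], [0, 2], [1, 0], [3, 1], [1, 2]]

h = column_parallel_matmul(x, w1)  # gathered here for clarity; real systems keep it sharded
y = row_parallel_matmul(h, w2)
print(y == matmul(matmul(x, w1), w2))  # True: sharding does not change the result
```

Pairing a column split with a row split means each worker only needs its own slice of the intermediate result, so communication is limited to one sum (all-reduce) per pair of layers.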

In addition, Inspur Information uses NF4 (4-bit NormalFloat) quantization to "slim down" the model, which reduces the volume of weights that must be read from memory and improves decoding efficiency during inference.

NF4 Quantization Technology
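The text does not detail Inspur Information's NF4 implementation. The sketch below follows the NF4 construction described in the QLoRA paper and used by the bitsandbytes library: 16 code values placed at quantiles of a standard normal distribution (with an exact zero), and blockwise absmax scaling, so each weight is stored as a 4-bit index plus one shared scale per block. The `offset` constant comes from that construction; the function names and toy weights are hypothetical.

```python
from statistics import NormalDist


def nf4_levels(offset=0.9677083):
    """16 NF4 code values in [-1, 1]: 8 positive and 7 negative quantiles of N(0, 1)
    plus an exact zero, normalized so the largest magnitude is 1."""
    ppf = NormalDist().inv_cdf
    # Probabilities evenly spaced from `offset` toward 0.5, excluding 0.5 itself.
    pos = [ppf(offset + i * (0.5 - offset) / 8) for i in range(8)]
    neg = [-ppf(offset + i * (0.5 - offset) / 7) for i in range(7)]
    values = sorted(neg + [0.0] + pos)
    top = max(values)
    return [v / top for v in values]


LEVELS = nf4_levels()


def quantize_block(block):
    """Scale a block by its absolute maximum, then map each weight to the nearest
    NF4 level. Returns 4-bit codes (0..15) and the block's scale."""
    scale = max(abs(v) for v in block) or 1.0  # guard against an all-zero block
    codes = [min(range(16), key=lambda j: abs(LEVELS[j] - v / scale)) for v in block]
    return codes, scale


def dequantize_block(codes, scale):
    """Recover approximate weights from the codes and the stored scale."""
    return [LEVELS[c] * scale for c in codes]


weights = [0.12, -0.03, 0.47, -0.51, 0.08, 0.0, -0.22, 0.31]
codes, scale = quantize_block(weights)
restored = dequantize_block(codes, scale)
print(codes, scale)
print([round(r, 3) for r in restored])
```

Each code fits in 4 bits, so two weights pack into one byte; with one scale per block (QLoRA uses blocks of 64 weights), weight storage drops to roughly a quarter of FP16. Because batch-1 decoding is largely limited by how many bytes of weights are read per token, this is where the decoding speedup comes from.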

When computational power and algorithms work in concert, systematic optimization builds on that collaboration, with the ultimate aim of providing a stable, robust technical foundation for deploying AI in industry. In the future, a comprehensive explosion of the AI industry will require an even more systematic approach to developing the trinity.

3. "Application Acceleration": Industrial implementation necessitates the optimal solution integrating the trinity.

AI is no longer a laboratory product but a commodity in market competition. Whether it's the emergence of 100-billion-parameter large models or upgrades to computational power solutions, the ultimate goal is to accelerate the implementation of AI applications, bringing them to the masses and generating tangible economic benefits. Therefore, besides technical considerations, the industry must also address economic issues.

In comparison, while AI servers centered on NVIDIA GPU chips excel at handling high-performance computing tasks like machine learning and deep learning, computational power vendors like Inspur Information continue to focus on developing and upgrading general-purpose servers centered on CPUs. Why is this?

The fundamental reason lies in CPUs' irreplaceable role in general-purpose computing, along with their power efficiency and cost-effectiveness. Cost-effectiveness in particular is currently the key factor limiting large-scale deployment across scenarios: the high cost of AI-specific infrastructure puts it out of reach for ordinary enterprises. Inspur Information offers a more cost-effective, high-performance option, which is precisely what the market demands.

Through hardware-software co-innovation on the general-purpose NF8260G7 server, Inspur Information has deployed inference for 100-billion-parameter large models on a general-purpose server, offering a more powerful and cost-effective option. This lets AI large models integrate more closely with cloud, big data, databases, and other applications, boosting high-quality industrial development. Such an optimal, comprehensive solution is essential for the industry's large-scale explosion.

Conclusion

The systematic nature of the AI trinity has taken shape: more powerful computational power supports more complex algorithmic models that can better handle massive data. Meanwhile, high-quality datasets improve algorithmic effectiveness, which in turn calls for even more powerful computational power. Algorithmic advances can also cut computational demand, as more efficient model designs lower computation costs.

This systematic formation will significantly propel the development of the AI industry, providing a critical direction for AI vendors' product upgrades, technological iterations, and service advancements. However, it also poses new challenges: how to integrate technologies and resources among computational power, algorithms, and data to achieve new breakthroughs.

*All images in this article are sourced from the internet