Big models are not trapped by a "knowledge fortress"

![]() 08/27 2024

08/27 2024

![]() 451

451

Recently, two major knowledge platforms have begun "counterattacking" big models.

The first is CNKI, the platform that sparked the academic uproar and initiated the "Tianlin Era," requiring Meta AI Search to cease searching and linking to their content.

The second is Zhihu. Users have noticed that in Microsoft Bing and Google search results, Zhihu content titles and bodies may appear as gibberish, likely an attempt to prevent content from being used to train AI models.

A defining characteristic of these two platforms, setting them apart from other internet communities, is their rich and high-quality knowledge content.

For big models, "knowledge density" is a crucial indicator, akin to the "advanced process" in integrated circuits. Just as high-process chips can integrate more transistors within the same area, big models with high knowledge density can learn and store more knowledge within the same parameter space, better fulfilling tasks in specific domains.

Blocking advanced processes in the semiconductor industry has long been an effective means of restraining China's chip industry.

So, will the "closed-door policy" adopted by leading knowledge platforms towards big models affect their advancement and the sophistication of AI products?

Our view, as stated in the title, is that big models will not be trapped by a "knowledge fortress."

More importantly than the conclusion, it's worth exploring why conflicts arise between model factories and platforms, given that big model training does not heavily rely on platform content.

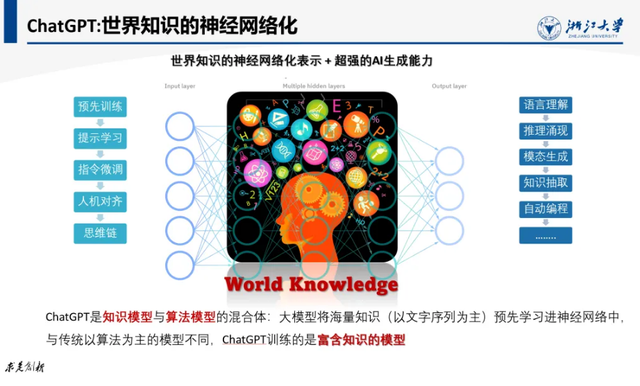

Many readers are familiar with the three elements of AI: data, computing power, and algorithms. But where does knowledge fit in? Why is the knowledge density of big models as crucial as the "advanced process" in semiconductors?

Academician Zhang Bo of Tsinghua University noted that current big models face an insurmountable ceiling. "To promote innovative AI applications and industrialization, knowledge, data, algorithms, and computing power must all play a role. However, we prioritize the importance of knowledge and place it first," he said.

Some may wonder if academicians are always right? Not necessarily. Let's also consider the perspectives of frontline practitioners.

I heard of an AI startup that found that even with a performance-leading base model like GPT4-Turbo, AI struggled to answer many questions. Some scenarios require implicit knowledge necessary for further reasoning, but models often fail to grasp it.

For example, when generating a recipe that mentions adding chili peppers, which can be spicy (implicit knowledge), the AI should ideally inquire if the user likes spicy food. While human chefs instinctively know this, it's challenging for AI to recognize and inquire proactively.

This stems from a lack of "general knowledge."

A financial institution tried to replace human financial advisors with big models but found their advice generic and lacking the insights of human experts. A financial practitioner noted that fine-tuning big models for specific business knowledge proved less effective than traditional small models.

One startup discovered that integrating LLM with domain-specific knowledge could achieve over 97% accuracy, meeting industry standards. In fact, many AI startups' big model ToB projects help enterprises build customized knowledge bases (KB systems).

Domain knowledge poses the second barrier for big models to tackle complex, specialized tasks and achieve commercial success.

Thus, many model factories aspire for their models to continuously absorb new knowledge through learning, raising a new issue: modifying core parameters could compromise the model's existing performance, potentially rendering it dysfunctional – a significant business concern.

So, what's the solution? Knowledge is key.

High-knowledge-density big models, akin to humans with a strong general knowledge base, can quickly learn and apply knowledge across domains, enhancing their generalization ability. This knowledge density enables cross-domain, self-learning capabilities through a "knowledge loop," reducing manual intervention and failure rates.

Efficient, precise knowledge editing allows for adding or erasing knowledge within big models, facilitating iterative upgrades at minimal cost. This maintains models' sophistication while ensuring business continuity, appealing to industries like finance, government, power, and manufacturing that cannot tolerate interruptions.

In practical scenarios, AI should avoid generating sensitive, harmful, or politically biased content. Knowledge editing techniques "detoxify" AI by accurately identifying and erasing toxic content, essentially "brainwashing" big models.

Clearly, knowledge is crucial throughout the AI commercialization process. The industry once embraced the notion that "he who controls knowledge controls the world." Some model factories propose a "Moore's Law" for big model knowledge, suggesting that knowledge density should double every eight months, halving model parameters for the same knowledge volume.

Conversely, those who lose knowledge risk losing everything.

Knowledge platforms are vital conduits for human knowledge. Overseas AI companies like OpenAI and Google collaborate with premium media content platforms to train their models using licensed content.

Given this, why aren't big models concerned about platform "knowledge blockades"?

Because human knowledge platforms are no longer essential "fortresses" for models.

If raw data is "grass" and knowledge is milk, traditional knowledge acquisition is like making machines "drink milk and produce milk." Like 20th-century expert systems, they solved problems by simulating expert thinking processes based on human knowledge and experience.

In such cases, achieving machine intelligence relies on human domain experts and expert knowledge bases, akin to paying tolls to enter a "city" to acquire knowledge.

Big models differ. They can "drink grass instead of milk," directly mining and extracting knowledge from raw data. Demis Hassabis, co-founder of DeepMind, envisioned future big models summarizing knowledge through deep learning algorithms during interactions with the objective world, directly informing decision-making.

They can also "produce milk without humans," leveraging data-driven, large-scale automated knowledge acquisition to nourish models.

ChatGPT and GPT4 demonstrate robust knowledge graph construction capabilities, extracting knowledge with up to 88% accuracy. This "production efficiency" far surpasses human writing and Q&A platforms.

Further, the industry is exploring Large Knowledge Models (LKMs) capable of encoding and processing various knowledge representations at scale. From LLMs to LKMs, reliance on existing human knowledge decreases.

Thus, inclusion or exclusion of human knowledge platform content minimally impacts big model training.

Models that "eat grass and produce milk" can thrive in the wilderness of big data, unencumbered by the "fortress" of knowledge platforms.

After receiving CNKI's letter, Meta AI Search stated that its "Academic" section only included abstracts and citations, not full articles. It voluntarily "broke links," no longer indexing CNKI citations and abstracts, opting for other authoritative Chinese and English knowledge bases. Similarly, despite Zhihu's interference, Google and Microsoft Bing searches maintained their model prowess.

Are knowledge platforms overreacting or fighting a phantom enemy? What are they truly trying to "lock down"? This is the critical question.

Firstly, while big models don't necessarily require human knowledge for training, it doesn't mean they're immune to infringement.

Currently, global model factories face a shortage of high-quality corpora. In the thirst for data, unauthorized use of intellectual property data is possible.

In an interview, OpenAI's CTO dodged questions about whether video training data came from public websites like YouTube. Previously, The New York Times sued OpenAI and Microsoft for allegedly training AI models with millions of its articles without authorization after failed content licensing negotiations.

Recently, Microsoft signed a $10 million deal with academic publisher Taylor & Francis, granting access to its data to improve AI systems.

While AI intellectual property issues remain murky, copyright partnerships with knowledge platforms should be considered by model factories and their clients for AI compliance and sustainability.

Even without infringement, value erosion occurs.

Specifically, new-generation AI products like AI search impact knowledge platforms in two ways:

1. Traffic value erosion: Though Meta AI Search claims to provide CNKI abstracts and citations, users must visit CNKI for full articles, reducing platform traffic and potential revenue, similar to how WeChat blocked Baidu from indexing public account content.

2. Knowledge value erosion: Big model-powered AI search can summarize and generate content. Due to "overfitting," AI might output content highly similar to the original, akin to infringement without technically violating copyright.

Many novelists have found AI-generated story outlines similar to their own work, suspecting cloud documents were used to train AI models, though it's more likely AI and human authors simply converged on similar ideas.

The core value of the big model economy lies in knowledge creation and distribution.

A friend said, "I used to ask Zhihu questions online, but for sensitive issues, I'll now consult a professional bot powered by a base model + domain knowledge + AI Agent." Perplexity's CEO has stated their ambition to be "the world's most knowledge-centric company," with Meta AI Search often compared to China's Perplexity.

Evidently, even without infringement disputes, AI enterprises and products directly compete with knowledge platforms at a commercial level.

"He who loses knowledge loses the world" holds true in this context.

As big models surge ahead in the data wilderness, can knowledge platforms safeguard their core values by "locking doors"? Perhaps we all have our answers.

Unlock Key Insights

Knowledge platform blockades cannot stop AI's quest for knowledge