Meta's Llama3.2 large model may rival Claude3 Haiku and GPT-4o mini in visual understanding

10/05 2024

At its Connect conference on September 25 (US local time), Meta introduced Llama3.2, which includes Meta AI's first Llama models capable of understanding both images and text.

Llama3.2 includes small and medium-sized vision models (11B and 90B parameters, respectively) as well as lightweight text-only models (1B and 3B parameters, respectively) designed to run on select mobile and edge devices.

Meta CEO Mark Zuckerberg said in his keynote speech, "This is our first open-source multimodal model, making AI visual understanding possible."

Like Llama3.1, Llama3.2 supports a context window of up to 128,000 tokens, letting users input large amounts of text (on the order of several hundred textbook pages). Models with more parameters are generally more accurate and better able to handle complex tasks.
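To make the "several hundred pages" figure concrete, here is a back-of-the-envelope sketch in Python. It relies on two common rules of thumb that are assumptions, not figures from Meta: roughly 0.75 English words per token and about 300 words per textbook page. The real ratio depends on Llama's tokenizer and on the text itself, and the function names are hypothetical.

```python
# Back-of-the-envelope estimate of how much text fits in a 128,000-token window.
# ASSUMPTIONS (rules of thumb, not Meta figures): ~0.75 English words per token,
# ~300 words per textbook page. Function names are hypothetical.

CONTEXT_TOKENS = 128_000
WORDS_PER_TOKEN = 0.75   # heuristic; the true ratio depends on tokenizer and text
WORDS_PER_PAGE = 300     # heuristic for a dense textbook page


def estimated_pages(context_tokens: int = CONTEXT_TOKENS,
                    words_per_token: float = WORDS_PER_TOKEN,
                    words_per_page: int = WORDS_PER_PAGE) -> float:
    """Approximate number of textbook pages that fit in the context window."""
    words = context_tokens * words_per_token
    return words / words_per_page


def fits_in_context(word_count: int,
                    context_tokens: int = CONTEXT_TOKENS,
                    words_per_token: float = WORDS_PER_TOKEN) -> bool:
    """Heuristically decide whether a document of word_count words fits."""
    estimated_tokens = word_count / words_per_token
    return estimated_tokens <= context_tokens


if __name__ == "__main__":
    print(f"~{estimated_pages():.0f} pages")
    print(fits_in_context(90_000), fits_in_context(100_000))
```

Under these assumptions, 128,000 tokens is about 96,000 words, or roughly 320 pages, which is in line with the "several hundred pages" estimate above.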

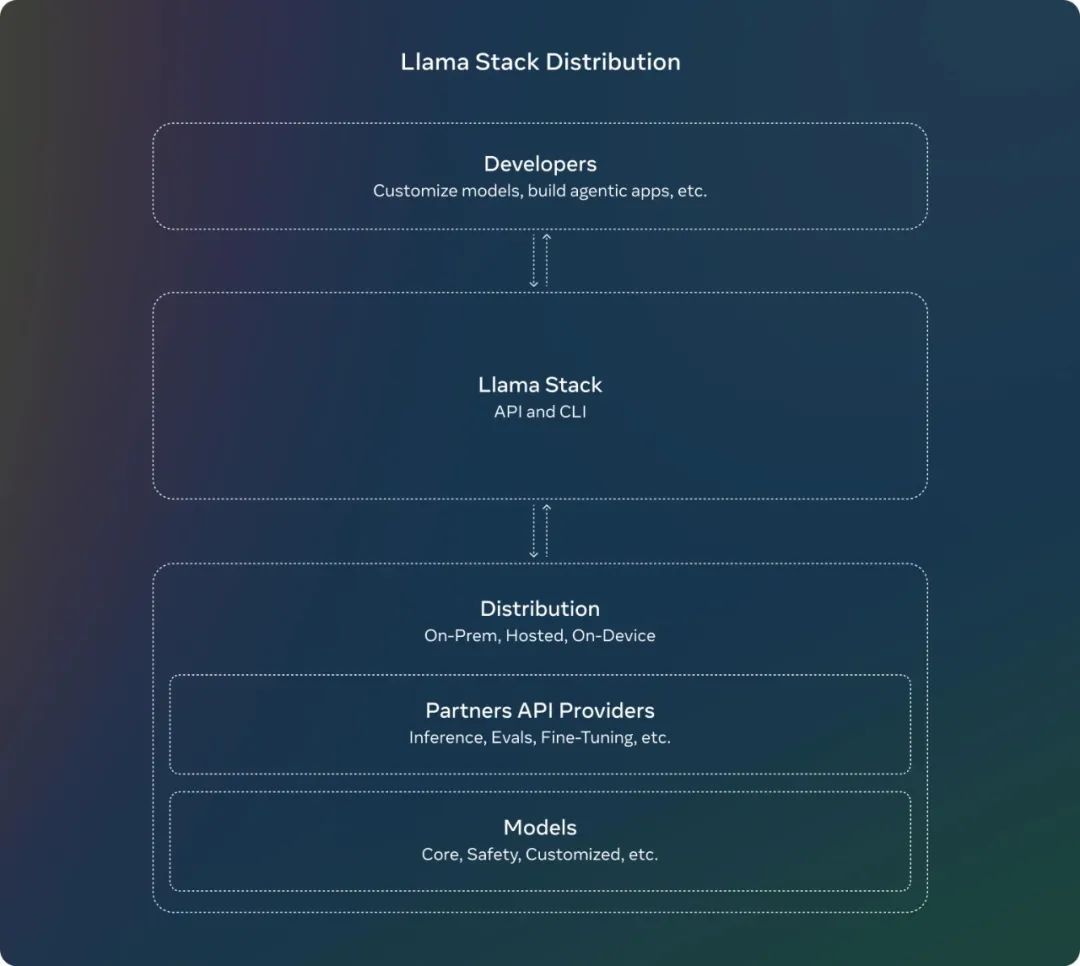

Meta also shared its first official Llama Stack distributions at Connect. With them, developers can work with Llama models in a range of environments, including on-premises, on-device, cloud, and single-node deployments.

Zuckerberg added, "Open-source is now the most cost-effective option for customization, trust, and high performance. We've reached an industry inflection point where Llama3.2 is becoming the industry standard, akin to Linux for AI."

01. Competing with Claude and GPT-4o

Meta released Llama3.1 just over two months ago and says its usage has grown tenfold since then.

Zuckerberg also stated, "Llama continues to improve rapidly, gaining more and more capabilities."

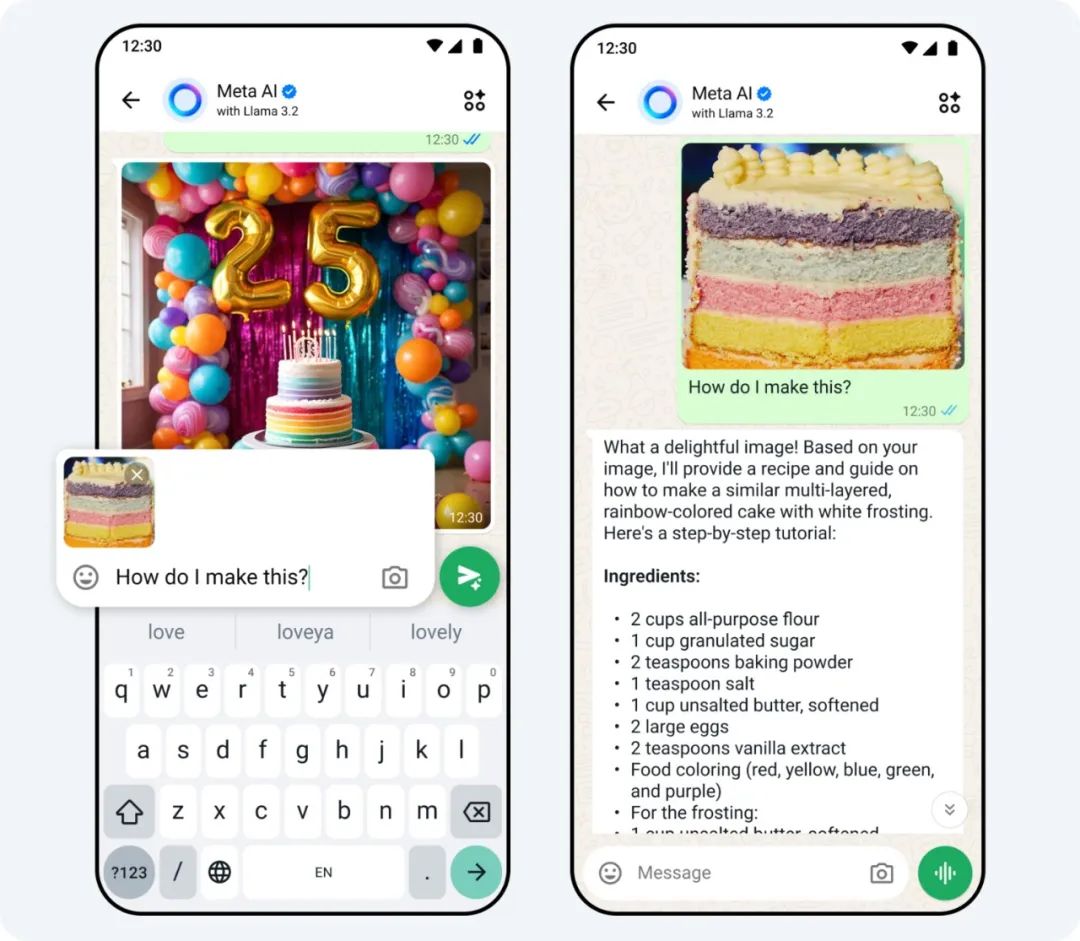

Now, the two largest Llama3.2 models (11B and 90B) support image use cases: they can understand charts and graphs, caption images, and accurately locate objects in an image from a natural-language description.

For example, users can ask which month their company had the highest sales, and the model will reason over the chart provided to find the answer. The larger models can also extract details from images and write captions for them.

Meanwhile, the lightweight models help developers build personalized agent applications that run in a private environment, for example summarizing recent messages or sending calendar invitations for follow-up meetings.

Meta claims that Llama3.2 is competitive with Anthropic's Claude3 Haiku and OpenAI's GPT-4o mini in image recognition and other visual understanding tasks.

Meta also says the lightweight 3B model outperforms Gemma and Phi 3.5-mini on instruction following, summarization, tool use, and prompt rewriting.

The Llama3.2 models are available for download on llama.com, Hugging Face, and Meta's partner platforms.

While the release of Llama3.2 drew significant attention, Meta's long-time rival OpenAI has run into some setbacks.

Earlier news reports said OpenAI was in talks with potential investors about a new $7 billion funding round. However, sources revealed that Apple, a long-time supporter of OpenAI, recently withdrew from the negotiations and will not participate in the round.

Combined with recent management shake-ups and internal power struggles at OpenAI, this has led some outside investors to question whether the company's development will be affected.

02. Meta Launches New Features

Also on September 25, Meta began rolling out business AIs that companies can use in their ads on WhatsApp and Messenger.

Meta reported that more than one million advertisers use its generative AI tools and that they created 15 million ads with these tools last month. Compared with campaigns that did not use generative AI, those that did saw an 11% higher average click-through rate and a 7.6% higher conversion rate.
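Percentage lifts like these are easy to misread. Assuming, as the phrasing suggests, that the 11% and 7.6% figures are relative lifts rather than percentage-point gains, a short Python sketch shows the arithmetic. The 2.0% baseline and the function names are hypothetical, chosen only for illustration and not taken from Meta's data.

```python
# Sketch: what an "11% higher click-through rate" means as a relative lift.
# ASSUMPTIONS: the reported 11% and 7.6% are relative lifts (not percentage
# points); the 2.0% baseline CTR below is made up, not Meta data.


def apply_relative_lift(baseline_rate: float, lift_pct: float) -> float:
    """Return baseline_rate increased by lift_pct percent (relative)."""
    return baseline_rate * (1 + lift_pct / 100)


def relative_lift_pct(baseline_rate: float, new_rate: float) -> float:
    """Return the relative lift of new_rate over baseline_rate, in percent."""
    return (new_rate / baseline_rate - 1) * 100


if __name__ == "__main__":
    baseline_ctr = 0.020  # hypothetical 2.0% CTR for a campaign without GenAI
    genai_ctr = apply_relative_lift(baseline_ctr, 11)
    print(f"{baseline_ctr:.2%} -> {genai_ctr:.2%}")
```

Under that reading, a hypothetical campaign with a 2.0% click-through rate would rise to about 2.22%, not to 13%.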

Furthermore, Meta AI now has a "voice."

Llama3.2 also powers new multimodal capabilities in Meta AI, notably the ability to respond in celebrity voices, including those of Dame Judi Dench, John Cena, Keegan-Michael Key, Kristen Bell, and Awkwafina.

Zuckerberg mentioned in his keynote speech, "I believe that voice will become a more natural way of interacting with AI than text."

Meta AI will respond to voice or text prompts in these celebrity voices on WhatsApp, Messenger, Facebook, and Instagram.

Meta AI can also reply to photos shared in chats and edit them, adding, removing, or changing elements and applying new backgrounds. Meta said it is also testing new translation, video dubbing, and lip-sync tools for Meta AI.

Zuckerberg expressed confidence, stating, "Meta AI has the potential to become the world's most widely used AI assistant."