Who owns the most Nvidia H100 GPUs?

10/23 2024

Written by Semiconductor Industry Insight (ID: ICVIEWS)

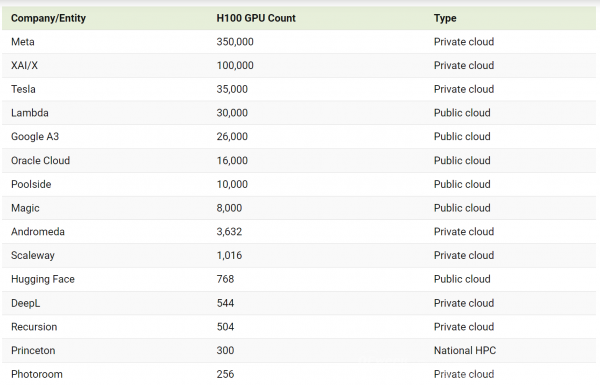

This article visually presents the companies and organizations that own the most Nvidia H100 GPUs.

As demand for artificial intelligence surges, companies across various industries are competing to expand their computing capabilities and investing billions of dollars in upgrading the infrastructure required to support AI models.

Nvidia's H100 Tensor Core GPU is a top-tier graphics processing unit designed specifically for artificial intelligence (AI) and high-performance computing (HPC) workloads.

The chart in this article uses data from the 2024 State of AI Report (updated as of October 2024) to visually show the companies and organizations that own the most Nvidia H100 GPUs.

Meta leads in H100 GPU procurement

The chart shows the number of Nvidia H100 GPUs owned by each of these companies and organizations as of October 2024.

Companies like Meta and Tesla have been purchasing thousands of H100 GPUs to support their ambitious AI initiatives. While Nvidia has not officially disclosed the pricing of its H100 products, estimates suggest that the 80GB version costs between $30,000 and $40,000 per unit.

Nvidia's high-performance H100 GPU has significantly contributed to the company's revenue growth, particularly in the datacenter sector.

In January 2024, Mark Zuckerberg announced that Meta plans to acquire 350,000 Nvidia H100 GPUs by the end of the year, significantly boosting the company's AI computing capabilities.

This massive investment in hardware aims to support Meta's development of advanced AI models, including Artificial General Intelligence (AGI), a theoretical branch of AI where machines achieve "human-level learning, perception, and cognitive flexibility."

During Tesla's Q1 2024 earnings call, CEO Elon Musk revealed that Tesla has 35,000 H100 chips and plans to use them for various AI projects, including Robotaxi and a supercomputer called Dojo.
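To put these fleet sizes in perspective, the $30,000 to $40,000 per-unit estimate for the 80GB H100 cited earlier can be multiplied out. The sketch below is a back-of-envelope calculation only: it assumes every GPU is an 80GB unit bought at that estimated price, and it ignores servers, networking, and any volume discounts, none of which are public.

```python
# Rough GPU-only spend estimates from the article's per-unit price range.
# Assumption: every unit is an 80GB H100 at the $30,000-$40,000 estimate;
# actual contract prices are not disclosed and will differ.
PRICE_LOW_USD = 30_000
PRICE_HIGH_USD = 40_000

fleets = {
    "Meta (end-of-2024 target)": 350_000,
    "Tesla (as of Q1 2024)": 35_000,
}


def spend_range(units: int) -> tuple[int, int]:
    """Return (low, high) estimated GPU-only spend in US dollars."""
    return units * PRICE_LOW_USD, units * PRICE_HIGH_USD


for owner, units in fleets.items():
    low, high = spend_range(units)
    print(f"{owner}: {units:,} GPUs -> ${low / 1e9:.2f}B to ${high / 1e9:.2f}B")
```

At those prices, Meta's 350,000-GPU target works out to roughly $10.5 billion to $14 billion in GPUs alone, and Tesla's 35,000 GPUs to roughly $1.05 billion to $1.4 billion.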

Even as they buy more H100s, most companies in the chart are also developing their own chips to reduce their reliance on Nvidia.

For instance, in April 2024, Meta unveiled the latest version of its in-house chip, MTIA. MTIA is a custom chip series designed specifically for AI training and inference tasks. Compared with Meta's first-generation AI inference accelerator, MTIA v1, the latest version offers significant performance improvements and is tailored to the ranking and recommendation systems in Meta's social apps. Analysts note that Meta aims to reduce its dependence on chip vendors such as Nvidia.

MTIA stands for "Meta Training and Inference Accelerator." Despite the name, the chip is not optimized for AI training; it focuses on inference, the task of running AI models in production environments.

Meta wrote in a blog post that MTIA is a "critical component of our long-term plan" to build infrastructure for using AI in Meta's services. "Meeting our ambitions for custom silicon means investing not only in compute chips but also in memory bandwidth, networking, and capacity, as well as other next-generation hardware systems," the company stated.

The new MTIA chip is "fundamentally focused on providing the right balance of compute, memory bandwidth, and memory capacity," according to Meta. While the first-generation MTIA v1 uses TSMC's 7nm process, the new chip is built on TSMC's 5nm process and has more processing cores. It features 256MB of on-chip memory and runs at 1.35GHz, compared with 128MB and 800MHz for MTIA v1. In Meta's early tests on "four key models," the new chip delivered three times the performance of its predecessor.

To support the next-generation chip, Meta developed a large rack-mounted system that holds up to 72 accelerators: three chassis, each containing 12 boards with two accelerators per board. This design lets the chip run at 1.35GHz, up from 800MHz in the first generation, at 90 watts compared with the first-generation design's 25 watts.
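The hardware figures reported for the two MTIA generations can be cross-checked with simple arithmetic. The sketch below uses only numbers given in the article (on-chip memory, clock, per-chip power, and the three-chassis, 12-board, two-accelerator rack layout); the class and constant names are illustrative, not Meta's. The rack power figure counts accelerator power only and is not a Meta-published number.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MtiaGen:
    """Headline specs for one MTIA generation, as reported in the article."""
    name: str
    on_chip_mb: int  # on-chip memory, MB
    clock_mhz: int   # chip clock, MHz
    power_w: int     # per-chip power, watts


V1 = MtiaGen("MTIA v1", on_chip_mb=128, clock_mhz=800, power_w=25)
NEXT = MtiaGen("next-gen MTIA", on_chip_mb=256, clock_mhz=1350, power_w=90)

# Rack layout as described: 3 chassis x 12 boards x 2 accelerators per board.
CHASSIS_PER_RACK = 3
BOARDS_PER_CHASSIS = 12
ACCELERATORS_PER_BOARD = 2


def accelerators_per_rack() -> int:
    return CHASSIS_PER_RACK * BOARDS_PER_CHASSIS * ACCELERATORS_PER_BOARD


def ratio(new: float, old: float) -> float:
    """Generation-over-generation multiplier."""
    return new / old


n = accelerators_per_rack()
print(f"Accelerators per rack: {n}")
print(f"On-chip memory: {ratio(NEXT.on_chip_mb, V1.on_chip_mb):.2f}x")
print(f"Clock: {ratio(NEXT.clock_mhz, V1.clock_mhz):.2f}x")
print(f"Per-chip power: {ratio(NEXT.power_w, V1.power_w):.2f}x")
# Accelerators only: excludes host CPUs, networking, cooling and PSU losses.
print(f"Accelerator power per full rack: {n * NEXT.power_w / 1000:.2f} kW")
```

Running it prints 72 accelerators per rack, a 2x increase in on-chip memory, about a 1.69x clock increase, a 3.6x increase in per-chip power, and about 6.48 kW of accelerator power for a fully populated rack.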

On the software side, Meta says the software stack running on the new chip system is similar to the one used for MTIA v1, which sped up deployment, and the new MTIA is compatible with code developed for MTIA v1. Because Meta had already integrated the full software stack with the chip, it was running its traffic on the new chip within days, which allowed it to deploy the chip for production models in 16 regions within nine months.

According to Meta, test results to date indicate that the MTIA chip can handle low-complexity (LC) and high-complexity (HC) ranking and recommendation models that are components of Meta's products: "Because we control the entire stack, we can achieve higher efficiency compared to commercial GPUs."

Currently, the new MTIA chip has been deployed in Meta's datacenters and has shown positive results: "The company is able to commit and invest more compute power for denser AI workloads. The chip has proven to be highly complementary to commercial GPUs in providing the best combination of performance and efficiency for Meta-specific workloads."

Tesla also relies on Nvidia to power its current Dojo training computer, but it does not want to put all its eggs in one basket, especially since Nvidia chips are expensive. Tesla also wants better hardware that increases bandwidth and reduces latency. That is why the automaker's AI division launched its own custom hardware program, designed to train AI models more efficiently than traditional systems.

At the heart of this program is Tesla's proprietary D1 chip, which the company claims is optimized for AI workloads.

Tesla unveiled the D1, a palm-sized piece of silicon, at its 2021 AI Day, and the chip is now in production. TSMC manufactures it on a 7nm process node. Tesla says the D1 packs 50 billion transistors onto a large 645 square millimeter die, which suggests it should be powerful and efficient enough to process complex tasks quickly.

However, the D1 still lags behind Nvidia's A100 chip, which is also manufactured by TSMC using a 7nm process. The A100 contains 54 billion transistors and has a chip size of 826 square millimeters, providing slightly better performance than Tesla's D1.
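Using only the transistor counts and die areas given here, the two 7nm chips can also be compared by transistor density. This is a quick illustrative calculation, not a performance comparison: on these figures the D1 is the denser design, while the A100 is the larger die with more transistors overall.

```python
# Transistor density from the transistor counts and die areas quoted above.
# Density is only a rough proxy; it says nothing direct about throughput,
# memory bandwidth, interconnect, or software maturity.
CHIPS = {
    # name: (transistor count, die area in mm^2)
    "Tesla D1": (50e9, 645),
    "Nvidia A100": (54e9, 826),
}


def density_mtr_per_mm2(transistors: float, area_mm2: float) -> float:
    """Return millions of transistors per square millimetre."""
    return transistors / area_mm2 / 1e6


for name, (transistors, area) in CHIPS.items():
    print(f"{name}: {density_mtr_per_mm2(transistors, area):.1f} MTr/mm^2")
```

This gives roughly 77.5 million transistors per mm² for the D1 and 65.4 million per mm² for the A100.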

To achieve higher bandwidth and computing power, Tesla's AI team combines 25 D1 chips into a single "training tile" that functions as one unified computer system. Each tile delivers about 9 petaFLOPS of compute and 36 terabytes per second of bandwidth, and it integrates all the hardware needed for power, cooling, and data transfer.

Tesla is also developing the next-generation D2 chip to address data-flow bottlenecks. Rather than connecting individual chips, the D2 will put an entire Dojo tile onto a single silicon wafer.

*Disclaimer: This article is written by the original author. The content reflects their personal views, and our sharing of this article is solely for discussion purposes and does not necessarily imply our endorsement. For any objections, please contact us through the back-end system.