NVIDIA Q1 FY2026 Earnings Report: Revenue Soars 69% Year-over-Year

05/30 2025

Produced by Zhineng Zhixin

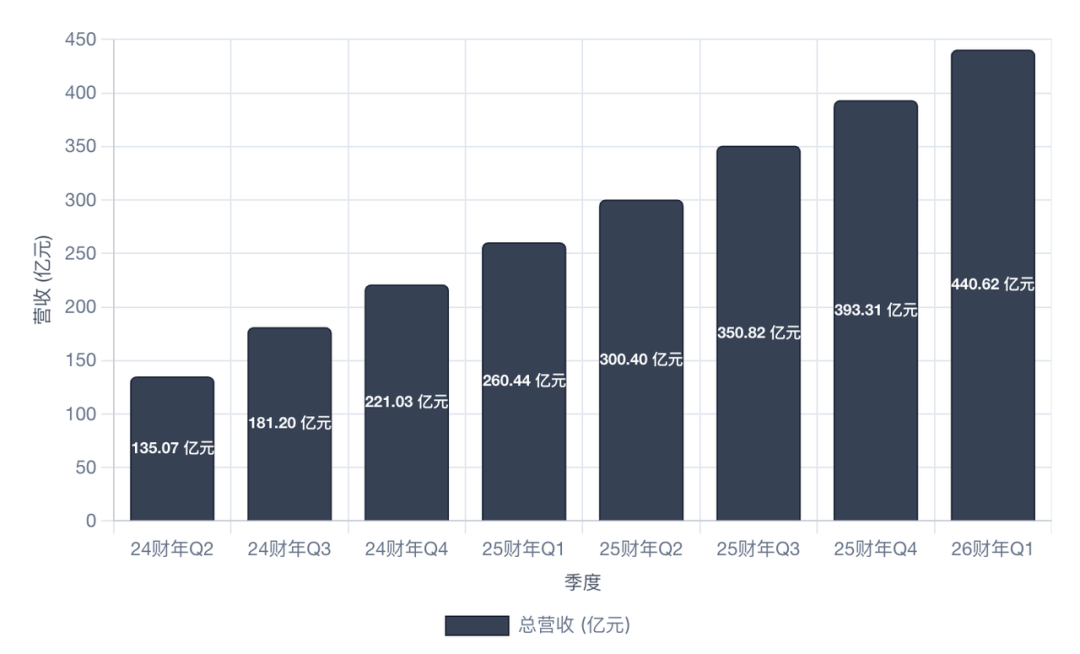

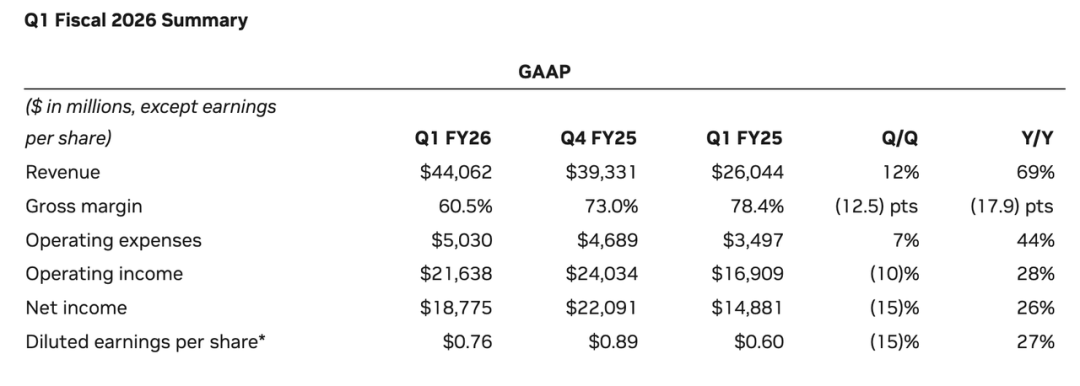

NVIDIA has released its earnings report for the first quarter of FY2026, posting record revenue of $44.1 billion, up 69% year-over-year, and net income of $18.775 billion.

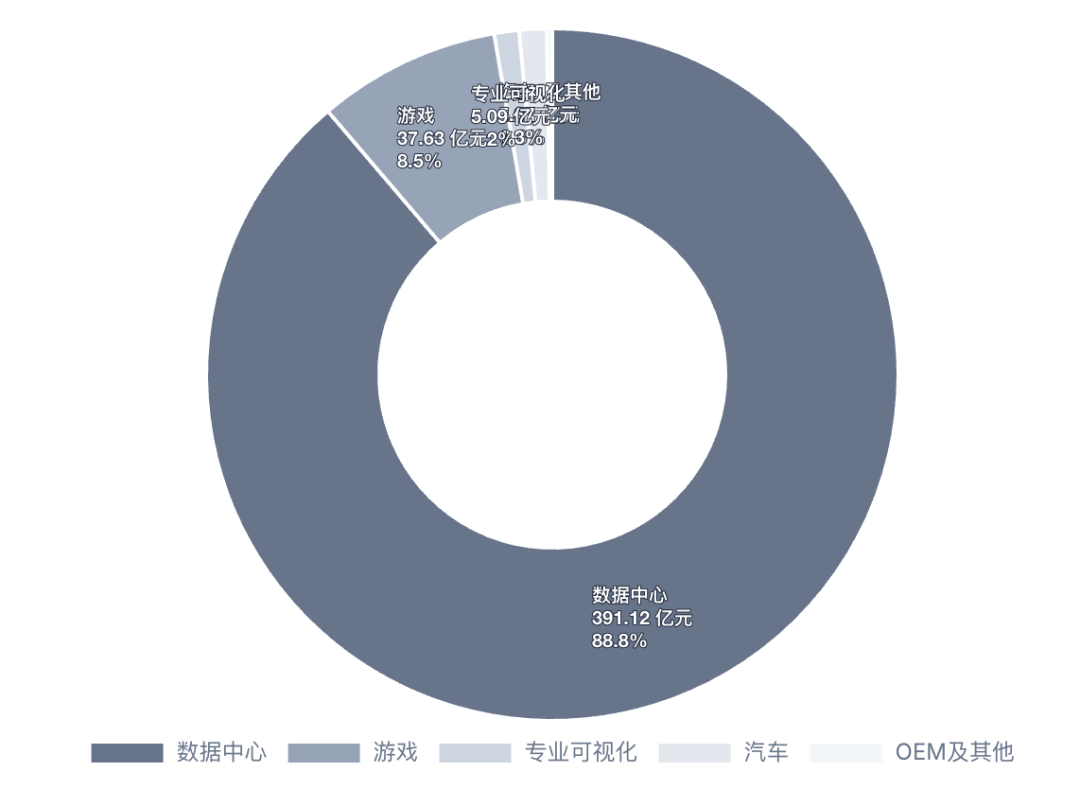

The data center business remains the linchpin, contributing nearly 90% of total revenue, with growth fueled in particular by surging demand for AI inference. The introduction of the Blackwell architecture has further cemented NVIDIA's market-leading position.

Despite setbacks in H20 chip sales and inventory write-downs caused by export restrictions, NVIDIA is actively pursuing new growth paths, including collaborations with Elon Musk's xAI and the "AI Awakening" initiative to bolster its presence in the European market.

Part 1

A Record-Breaking Quarter: AI Inference Propels Revenue Growth

NVIDIA has once again stunned Wall Street. For the first quarter of FY2026:

◎ Revenue reached $44.062 billion, a 12% quarter-over-quarter increase and a 69% year-over-year jump, surpassing market expectations by $800 million;

◎ Net income hit $18.775 billion, a 26% year-over-year rise.

This remarkable report underscores the robust growth fueled by the AI inference boom.

◎ The main driver remains the data center business: quarterly revenue reached $39.1 billion, or 89% of NVIDIA's total, up 73% year-over-year and 10% quarter-over-quarter. This sustained growth is driven primarily by AI inference emerging as the "main event."

As CEO Jensen Huang noted, "AI inference is swiftly becoming the predominant AI workload." From ChatGPT and Gemini to Grok and various agent services, cloud providers and tech giants are deploying large-scale inference platforms at an unprecedented pace.

Breaking it down further:

◎ Compute revenue in the data center amounted to $34.155 billion, a 76% year-over-year increase;

◎ Networking revenue also reached $4.957 billion, a 56% year-over-year jump.

These figures indicate that generative AI has transitioned from the training phase to the inference phase, sparking a surge in demand for inference-related GPUs and high-speed networking equipment.

◎ While the gaming business is no longer the primary growth driver, it still contributed $3.763 billion in revenue, a 42% year-over-year increase;

◎ Professional visualization and automotive businesses recorded $509 million and $567 million, respectively, up 19% and 72% year-over-year;

◎ OEM and other business revenue stood at $111 million, up 42% year-over-year.
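The segment figures quoted above can be cross-checked against the headline revenue with a few lines of arithmetic. The sketch below is only an illustration: the dictionary name and helper function are hypothetical, and the values are the article's own numbers expressed in millions of US dollars.

```python
# Q1 FY2026 segment revenue in millions of USD, as quoted in this article.
SEGMENTS_USD_M = {
    "Data center compute": 34_155,
    "Data center networking": 4_957,
    "Gaming": 3_763,
    "Professional visualization": 509,
    "Automotive": 567,
    "OEM and other": 111,
}


def revenue_shares(segments):
    """Return total revenue and each segment's share of that total."""
    total = sum(segments.values())
    shares = {name: value / total for name, value in segments.items()}
    return total, shares


total, shares = revenue_shares(SEGMENTS_USD_M)
data_center_share = (
    shares["Data center compute"] + shares["Data center networking"]
)

print(f"Total revenue: ${total / 1000:.3f}B")
print(f"Data center share: {data_center_share:.1%}")
for name, share in shares.items():
    print(f"  {name}: {share:.1%}")
```

Summing the six lines reproduces the $44.062 billion total, and the two data center lines together come to roughly 89% of it, consistent with the figures cited earlier.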

Amidst the bright spots, there are also concerns.

NVIDIA's cost of revenue soared 209% year-over-year to $17.394 billion, primarily because H20 chips could not be shipped and inventory piled up, resulting in a $4.5 billion charge in the quarter. Consequently, the gross margin plummeted from 78.4% to 60.5%. Export restrictions on H20 chips have therefore not only imposed opportunity costs but also tangibly affected the company's financial structure.
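The 60.5% gross margin follows directly from the revenue and cost figures. The sketch below reproduces it and, as a rough what-if, removes the $4.5 billion charge. It assumes the $17.394 billion is cost of revenue and that the entire charge was booked there; both are simplifying assumptions for illustration, so the second number is not a company-reported figure.

```python
def gross_margin(revenue, cost_of_revenue):
    """Gross margin as a fraction of revenue."""
    return (revenue - cost_of_revenue) / revenue


# Figures in millions of USD, taken from this article.
REVENUE_USD_M = 44_062
COST_USD_M = 17_394
H20_CHARGE_USD_M = 4_500  # assumed to sit entirely in cost of revenue

reported = gross_margin(REVENUE_USD_M, COST_USD_M)
# Hypothetical scenario: the same quarter with the charge stripped out.
without_charge = gross_margin(REVENUE_USD_M, COST_USD_M - H20_CHARGE_USD_M)

print(f"Gross margin as reported: {reported:.1%}")
print(f"Gross margin without the charge (illustrative): {without_charge:.1%}")
```

The gap between the two results gives a sense of how many margin points the H20 charge alone may have cost in the quarter.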

However, NVIDIA maintains robust profitability.

◎ Its operating profit was $21.638 billion, with an operating profit margin of 49%;

◎ Non-GAAP adjusted operating profit reached $23.275 billion;

◎ Adjusted net income amounted to $19.9 billion.

For the next quarter, NVIDIA expects revenue of $45 billion, plus or minus 2%, slightly below Wall Street expectations. However, given that this outlook already reflects an $8 billion revenue loss from H20, the projection remains robust.
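To make the guidance concrete, the sketch below converts the $45 billion midpoint and 2% tolerance into a dollar range, then adds back the $8 billion H20 loss as a simple what-if. The function name is hypothetical, and the add-back is a naive illustration rather than anything NVIDIA has published.

```python
def guidance_range(midpoint, tolerance):
    """Return the (low, high) bounds for a midpoint and fractional tolerance."""
    return midpoint * (1 - tolerance), midpoint * (1 + tolerance)


# Figures in billions of USD, taken from this article.
Q1_REVENUE = 44.062
GUIDANCE_MIDPOINT = 45.0
GUIDANCE_TOLERANCE = 0.02
H20_REVENUE_LOSS = 8.0

low, high = guidance_range(GUIDANCE_MIDPOINT, GUIDANCE_TOLERANCE)
implied_growth = GUIDANCE_MIDPOINT / Q1_REVENUE - 1
# Naive add-back: what the midpoint would be without the H20 loss.
midpoint_without_loss = GUIDANCE_MIDPOINT + H20_REVENUE_LOSS

print(f"Guided range: ${low:.1f}B to ${high:.1f}B")
print(f"Implied quarter-over-quarter growth at midpoint: {implied_growth:.1%}")
print(f"Midpoint with H20 added back (illustrative): ${midpoint_without_loss:.1f}B")
```

Under these assumptions the guided range spans about $44.1 billion to $45.9 billion, so even the low end sits roughly level with the quarter just reported.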

Part 2

Navigating Export Restrictions and the Rise of AI Inference

NVIDIA is not sailing in smooth waters. The earnings report highlights the disruption in H20 chip shipments due to US export controls, resulting in an inability to deliver approximately $2.5 billion in related products in the first quarter and incurring a $4.5 billion charge for inventory backlog and procurement obligations. Policy uncertainty has emerged as the paramount risk facing NVIDIA in the short to medium term.

Despite these obstacles, NVIDIA has continued to grow. By pushing AI inference to the forefront, it is turning its dominance in the era of training GPUs into a core competency for the inference era.

The linchpin is the successful launch of the Blackwell platform. This architecture, hailed by Jensen Huang as a "home run," not only achieves a 30-fold leap in inference performance but also introduces innovative system-integration designs such as NVLink 72, making the Grace Blackwell superchip the most competitive AI computing solution on the market.

With 208 billion transistors, Blackwell forms the bedrock of a genuine "thinking machine."

Supply chain capabilities are also rapidly expanding. NVIDIA is collaborating with partners like TSMC to jointly enhance capacity to meet the surging demand for Blackwell orders. Although the H20 chip has lost ground in the Chinese market, the Blackwell chip has gained widespread acceptance among global customers.

As NVIDIA CFO Colette Kress revealed, hyperscale customers (such as Amazon, Google, and Microsoft) account for nearly half of data center revenue, and these customers are the primary force accelerating the deployment of inference services.

Emerging growth opportunities stem from Musk's xAI projects and the Tesla ecosystem. NVIDIA has deepened its collaboration with them in projects like the Grok language model, autonomous driving AI, and the autonomous robot Optimus.

This not only ties NVIDIA to prominent customers at both the training and inference ends but also opens the door to the potential trillion-dollar market of "AI robots."

The European market is also awakening. Jensen Huang plans to visit the UK, France, Germany, Belgium, and other countries to discuss the construction of AI infrastructure with government leaders.

He emphasized that "AI has become the core infrastructure after electricity and the internet" and renamed NVIDIA's AI data centers "AI Factories," underscoring their ability to produce high-value "tokens," or AI-generated content.

Summary

NVIDIA's performance at the onset of FY2026 is nearly impeccable: from revenue and profit to customer structure and technological evolution, it showcases hallmarks of high-quality growth. NVIDIA's true strength lies in its ability to harmonize software, hardware, systems, and ecosystems, fostering an unprecedented AI infrastructure platform.

As the inference era dawns, the Blackwell platform and the concept of AI Factories will sustain its leadership. Deepened collaboration with the Musk ecosystem and the European AI industry has also given the company new growth engines for the coming years.