From Perception to Prediction: How World Models Break Through the Bottleneck of 'Experienced Drivers' in Autonomous Driving

07/15 2025

When Waymo's driverless cars complete an average of 14,000 rides per day on the streets of San Francisco, the human drivers who share the road with them still judge them with a hint of mockery: 'This car is a bit slow-witted.' It stops precisely at a red light but cannot read the intention of a delivery rider who suddenly changes lanes; it recognizes lane lines in heavy rain but cannot grasp the emergency behind the flashing hazard lights of the car ahead. Autonomous driving seems to be nearing the threshold of practicality, yet a thin paper screen of 'common sense' still separates it from that threshold. Behind that screen lies the evolutionary path of AI models from 'seeing' to 'understanding' to 'imagining'. The emergence of world models is accelerating autonomous driving's progress toward the intuitive thinking of 'experienced drivers'.

From 'Modular Assembly Line' to 'Cognitive Closed Loop'

The mainstream architecture of today's mass-produced autonomous driving systems resembles a precisely run 'modular assembly line.' Cameras and LiDAR break the real world down into 3D point clouds and 2D semantic labels. The prediction module then estimates each tracked object's next move from its historical trajectory. Finally, the planner computes the steering wheel angle and throttle input. This fragmented 'perception - prediction - planning' design is like giving a machine high-precision eyes and limbs but forgetting to give it a thinking brain.
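The sketch below makes the assembly-line structure concrete by showing the three hand-off stages. Everything in it is hypothetical and was chosen only for illustration: the `Track` type, the constant-velocity predictor, and the gap-keeping planner are simple stand-ins, not the modules of any production stack.

```python
from dataclasses import dataclass


@dataclass
class Track:
    """Perception output: one object's recent positions along the lane, in meters."""
    track_id: int
    history: list  # positions sampled every dt seconds, oldest first


def perceive(raw_detections):
    """Perception stage (stub): wrap each object's raw position list as a Track."""
    return [Track(i, list(positions)) for i, positions in enumerate(raw_detections)]


def predict(track, dt, horizon_s):
    """Prediction stage: constant-velocity extrapolation from the last two samples."""
    velocity = (track.history[-1] - track.history[-2]) / dt
    steps = int(round(horizon_s / dt))
    return [track.history[-1] + velocity * dt * k for k in range(1, steps + 1)]


def plan(ego_position, predicted_paths, desired_gap_m, cruise_speed):
    """Planning stage: stop if any predicted position ahead comes within the gap."""
    for path in predicted_paths:
        gaps_ahead = [p - ego_position for p in path if p >= ego_position]
        if gaps_ahead and min(gaps_ahead) < desired_gap_m:
            return 0.0  # hypothetical policy: command a stop
    return cruise_speed


def modular_pipeline(raw_detections, ego_position, dt=0.1, horizon_s=2.0):
    tracks = perceive(raw_detections)                    # stage 1: perception
    paths = [predict(t, dt, horizon_s) for t in tracks]  # stage 2: prediction
    return plan(ego_position, paths, desired_gap_m=10.0, cruise_speed=15.0)  # stage 3


# A lead car 30 m ahead moving at 10 m/s vs. a stopped object 8 m ahead.
print(modular_pipeline([[29.0, 30.0]], ego_position=0.0))  # 15.0
print(modular_pipeline([[8.0, 8.0]], ego_position=0.0))    # 0.0
```

Each stage consumes only the previous stage's output. Anything the track format cannot express, such as a box lifted by the wind or a child's intent, therefore never reaches the planner.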

In complex traffic scenarios, the shortcomings of this system are exposed. When a gust of wind lifts a cardboard box into the air, the system cannot predict where it will land. When children chase a ball at the roadside, it struggles to imagine that they might run into the road. The root of the problem is that machines lack the human brain's ability to go from 'limited observation → complete modeling → future deduction'. Human drivers who see a puddle slow down automatically. They do so not because they recognize a 'puddle' label, but because of the physical common sense that a film of water lowers the friction coefficient between tire and road. This intrinsic understanding of the laws governing the world is precisely what current AI lacks most.

The groundbreaking significance of world models lies in building a 'digital twin brain' that can simulate dynamically. Traditional models handle only single-frame perception and decision-making. A world model, by contrast, can simulate a miniature world internally. Given the current road conditions and a hypothetical action, it can generate the camera video, the changes in the LiDAR point cloud, and even fluctuations in the tire-road friction coefficient over the next 3-5 seconds. This ability to 'rehearse in the mind' gives machines a human-like 'predictive intuition' for the first time. For example, Mushroom Auto's MogoMind large model, billed as the first physical-world cognitive AI model, has shown this characteristic in intelligent connected vehicle projects in multiple Chinese cities. By sensing real-time changes in citywide traffic flow and predicting intersection conflict risks 3 seconds in advance, it is reported to improve traffic efficiency by 35%.

Evolution Tree of AI Models

Pure Vision Model: 'Primitive Intuition' through Brute Force Fitting

The arrival of NVIDIA's DAVE-2 in 2016 ushered in the era of pure-vision autonomous driving. This model uses a CNN to map camera pixels directly to steering wheel angles. It is like a baby just learning to walk: it imitates human operation through 'muscle memory' learned from recorded human driving. Its advantage is a simple structure that needs only cameras and low-cost chips. Its fatal flaw is that 'it knows what it has seen but is confused by what it hasn't.' In scenarios outside the training data, such as an overturned truck or a motorcycle driving against traffic, the system can fail outright. This data dependency keeps pure-vision models stuck at the 'conditioned reflex' stage.
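DAVE-2 itself learned a convolutional network over raw pixels. The sketch below swaps that network for a one-feature linear regressor so the imitation principle (behavior cloning) fits in a few lines. The `lane_offset` feature and the demonstration data are hypothetical stand-ins for what a camera pipeline would provide.

```python
def fit_behavior_clone(observations, steering):
    """Least-squares fit of steering = w * observation + b to human demonstrations."""
    n = len(observations)
    mean_x = sum(observations) / n
    mean_y = sum(steering) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(observations, steering))
    var = sum((x - mean_x) ** 2 for x in observations)
    w = cov / var
    b = mean_y - w * mean_x
    return lambda lane_offset: w * lane_offset + b


# Hypothetical demonstrations: the human steers against the lane offset
# (offset in meters, steering normalized to [-1, 1]).
demo_offsets = [-1.0, -0.5, 0.0, 0.5, 1.0]
demo_steering = [0.5, 0.25, 0.0, -0.25, -0.5]

policy = fit_behavior_clone(demo_offsets, demo_steering)
print(policy(0.4))  # inside the demonstrated range: close to -0.2
print(policy(6.0))  # -3.0: blind extrapolation outside the valid [-1, 1] steering range
```

The fitted policy reproduces the demonstrated mapping inside the range it was trained on. Far outside that range it simply extrapolates, which is the "confused by what it hasn't seen" failure in miniature.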

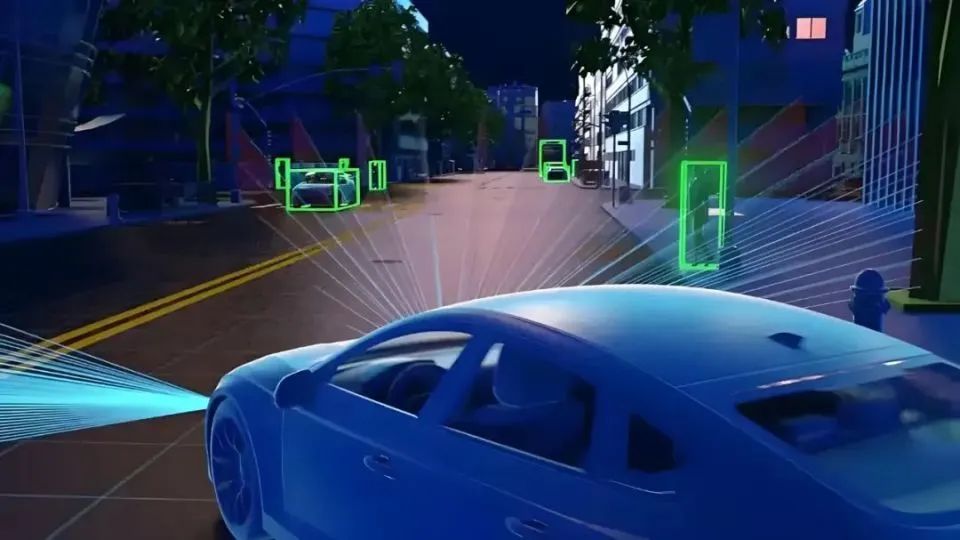

Multi-modal Fusion: 'Wide-angle Lens' for Enhanced Perception

After 2019, BEV (Bird's Eye View) technology became the industry's new darling. Camera features, LiDAR point clouds, millimeter-wave radar signals, and high-precision map data are projected onto a common top-down view and then fused across modalities with Transformers. This removes the physical limitation of camera blind spots and lets the system accurately locate 'a pedestrian 30 meters ahead on the left.' But it is essentially still 'perception enhancement' rather than a 'cognitive upgrade.' It is like equipping the machine with a 360-degree surveillance camera that has no blind spots, without teaching it to reason that 'a pedestrian carrying a bulging plastic bag might block the line of sight in a moment.'
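BEV fusion starts by putting every sensor's output into one shared top-down grid. The sketch below shows only that geometric step, for LiDAR points already in the ego frame. The 0.5 m cell size, the ±50 m range, and the function names are assumptions. Real systems add calibration transforms, height features, and learned camera-to-BEV lifting.

```python
import math


def to_bev_cell(x_forward, y_left, cell_size_m=0.5, range_m=50.0):
    """Map an ego-frame point (x forward, y left, in meters) to (row, col) in a square
    BEV grid covering (-range_m, range_m] on both axes; None if the point is outside.
    Row 0 is the farthest-ahead strip, column 0 the farthest-left strip."""
    if not (-range_m < x_forward <= range_m and -range_m < y_left <= range_m):
        return None
    row = int((range_m - x_forward) // cell_size_m)
    col = int((range_m - y_left) // cell_size_m)
    return row, col


def rasterize(points, cell_size_m=0.5, range_m=50.0):
    """Count LiDAR returns per BEV cell (an occupancy-style feature map)."""
    n = int(round(2 * range_m / cell_size_m))
    grid = [[0] * n for _ in range(n)]
    for x, y, _z in points:
        cell = to_bev_cell(x, y, cell_size_m, range_m)
        if cell is not None:
            row, col = cell
            grid[row][col] += 1
    return grid


# A pedestrian 30 m away, 30 degrees left of straight ahead (hypothetical placement).
bearing = math.radians(30.0)
pedestrian = (30.0 * math.cos(bearing), 30.0 * math.sin(bearing), 1.0)
print(to_bev_cell(pedestrian[0], pedestrian[1]))
```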

Vision-Language Model: A Perceptor that Can 'Talk'

The rise of large vision-language models (VLMs) such as GPT-4V and LLaVA-1.5 enabled AI to 'describe images' in fluent, open-ended language. When it sees the car ahead brake suddenly, it can explain 'because a cat darted out.' When it recognizes road construction, it will suggest 'take the left lane as a detour.' This ability to turn visual signals into language seems to give machines 'understanding', but it still has limitations in autonomous driving.

Because language serves as an intermediate carrier, physical details are inevitably lost. Internet image-text data does not record specialized parameters such as 'the friction coefficient of a wet manhole cover drops by 18%.' More crucially, a VLM's reasoning rests on textual correlation rather than physical law. It may reach a correct decision because 'heavy rain' and 'slow down' are highly correlated in its corpus, yet fail to understand the underlying fluid mechanics. This 'knowing how but not why' makes it hard for the model to cope with extreme scenarios.

Vision-Language-Action Model: The Leap from 'Talking' to 'Doing'

The VLA (Vision-Language-Action) model, which rose to prominence around 2024, took a crucial step forward. NVIDIA's VIMA and Google's RT-2 (2023) can convert language instructions such as 'pass me the cup' directly into robot-arm motion commands. In driving scenarios, the same idea generates steering actions from visual input and voice navigation. This 'end-to-end' mapping skips complex intermediate logic, allowing AI to evolve from 'saying' to 'doing.'
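One way VLA models "do" rather than "talk" is to emit actions as ordinary output tokens. RT-2, for example, discretizes each action dimension into bins and treats the bin ids as tokens. The sketch below assumes a uniform 256-bin scheme for normalized driving commands. The value ranges, the driving action dictionary, and the function names are illustrative assumptions.

```python
def action_to_token(value, low=-1.0, high=1.0, num_bins=256):
    """Uniformly discretize a continuous action (e.g. normalized steering) into a bin id."""
    clipped = min(max(value, low), high)
    frac = (clipped - low) / (high - low)
    return min(int(frac * num_bins), num_bins - 1)  # value == high lands in the top bin


def token_to_action(token, low=-1.0, high=1.0, num_bins=256):
    """Decode a bin id back to the center of its bin."""
    width = (high - low) / num_bins
    return low + (token + 0.5) * width


# Hypothetical driving action: normalized steering and acceleration, one token each.
action = {"steering": -0.25, "acceleration": 0.6}
tokens = [action_to_token(action["steering"]), action_to_token(action["acceleration"])]
decoded = [token_to_action(t) for t in tokens]
print(tokens, decoded)
```

Decoding to bin centers bounds the round-trip error by half a bin width, here 1/256 on a [-1, 1] range.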

However, VLA's shortcomings are still evident: it relies on internet-scale image-text-video data and lacks a quantitative understanding of the physical world. Consider a relationship such as 'braking distance triples on icy roads.' A model built on data statistics cannot derive the precise physical law behind it and can only transfer experience from similar scenarios. In an ever-changing traffic environment, this empiricism is prone to failure.
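In its simplest idealized form, the relationship that statistics-based models struggle to derive is one line of physics. With friction-limited braking, stopping distance is v²/(2μg), so it scales inversely with the friction coefficient μ. The coefficients below are illustrative assumptions, chosen so that the icy value is one third of the dry one. Reaction time is ignored.

```python
G = 9.81  # gravitational acceleration, m/s^2


def braking_distance(speed_kmh, mu):
    """Idealized friction-limited stopping distance d = v^2 / (2 * mu * g), in meters.
    Ignores reaction time, ABS behavior, slope, and load transfer."""
    v = speed_kmh / 3.6  # km/h -> m/s
    return v * v / (2.0 * mu * G)


MU_DRY = 0.6  # illustrative friction coefficient, not a measured value
MU_ICY = 0.2  # illustrative, chosen as one third of MU_DRY

dry = braking_distance(50.0, MU_DRY)
icy = braking_distance(50.0, MU_ICY)
ratio = icy / dry  # equals MU_DRY / MU_ICY at any speed
print(f"dry {dry:.1f} m, icy {icy:.1f} m, ratio {ratio:.2f}")
```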

World Model: A Digital Brain that Can 'Imagine'

The essential difference between world models and all the models above is that world models close the loop between prediction and decision. A classic world-model architecture, V-M-C (Vision-Memory-Controller), introduced in Ha and Schmidhuber's 2018 'World Models' paper, forms a cognitive chain similar to that of the human brain:

The Vision module uses a VQ-VAE to compress 256×512 camera frames into 32×32×8 latent codes, extracting key features much as the human visual cortex does. The Memory module carries historical information in a GRU and uses a Mixture Density Network (MDN) to predict the distribution of the next frame's latent codes, similar to the way the hippocampus processes temporal memory. The Controller module generates actions from the current features and memory state, akin to the decision-making role of the prefrontal cortex.
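The Memory module's distinctive piece is the MDN head. Instead of predicting a single next latent value, it outputs mixture weights, means, and standard deviations, so it can represent several plausible futures at once. Below is a minimal pure-Python sketch for one latent dimension. In a real model these parameters would come from the GRU's hidden state. The temperature knob and the example numbers are illustrative assumptions.

```python
import math
import random


def log_softmax(logits):
    m = max(logits)
    log_z = m + math.log(sum(math.exp(l - m) for l in logits))
    return [l - log_z for l in logits]


def mdn_nll(target, logits, means, log_sigmas):
    """Negative log-likelihood of one latent value under a 1-D Gaussian mixture:
    the training loss of an MDN head, computed with log-sum-exp for stability."""
    log_pis = log_softmax(logits)
    comps = []
    for log_pi, mu, log_sigma in zip(log_pis, means, log_sigmas):
        z = (target - mu) / math.exp(log_sigma)
        comps.append(log_pi - 0.5 * z * z - log_sigma - 0.5 * math.log(2 * math.pi))
    m = max(comps)
    return -(m + math.log(sum(math.exp(c - m) for c in comps)))


def mdn_sample(logits, means, log_sigmas, temperature=1.0, rng=random):
    """Sample one latent value: pick a mixture component, then draw from its Gaussian.
    A higher temperature flattens the weights and widens each Gaussian."""
    weights = [math.exp(lp) for lp in log_softmax([l / temperature for l in logits])]
    k = rng.choices(range(len(weights)), weights=weights)[0]
    sigma = math.exp(log_sigmas[k]) * math.sqrt(temperature)
    return rng.gauss(means[k], sigma)


# Two hypothetical futures for one latent dimension, e.g. "car ahead brakes" vs "keeps going".
rng = random.Random(0)
logits, means, log_sigmas = [0.0, 0.0], [-1.0, 1.0], [math.log(0.2), math.log(0.2)]
samples = [mdn_sample(logits, means, log_sigmas, rng=rng) for _ in range(5)]
print(samples, mdn_nll(0.9, logits, means, log_sigmas))
```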

The most ingenious aspect of this system is its 'dream training' mechanism. Once the V and M modules are trained, the system no longer needs the real car. M can roll out imagined driving in the cloud at 1000 times real-time speed, and the Controller can be trained inside those rollouts. One day at that speed yields 24,000 hours of simulated driving. At an average of roughly 42 km/h, that is equivalent to the AI 'racing' 1 million kilometers per day in a virtual world, accumulating experience of extreme scenarios at almost no marginal cost. When it meets similar situations in the real world, the machine can draw on these rehearsals in its 'dreams' to make better decisions.
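In the original World Models work, the controller was trained with an evolution strategy (CMA-ES), and in one experiment it was trained entirely inside the learned model's dream. The sketch below replaces both pieces with toys. A hand-written two-variable kinematic model stands in for the trained V and M modules, and plain random-search hill climbing stands in for CMA-ES. All names and constants are hypothetical.

```python
import random


def dream_step(state, steer, dt=0.1):
    """Hypothetical stand-in for a trained M model: lateral offset/heading kinematics."""
    offset, heading = state
    return (offset + heading * dt, heading + steer * dt)


def controller(params, state):
    """Linear controller C: steer against lateral offset and heading error."""
    k_offset, k_heading = params
    offset, heading = state
    return -(k_offset * offset + k_heading * heading)


def dream_return(params, start=(1.0, 0.0), horizon=50):
    """Score a controller purely inside the imagined rollout (no real car involved)."""
    state, total = start, 0.0
    for _ in range(horizon):
        state = dream_step(state, controller(params, state))
        total -= state[0] ** 2  # penalize squared lateral offset at every step
    return total


def train_in_dream(iterations=200, sigma=0.5, seed=0):
    """Random-search hill climbing over controller gains, using only dream rollouts."""
    rng = random.Random(seed)
    best = (0.0, 0.0)
    best_return = dream_return(best)
    for _ in range(iterations):
        candidate = tuple(p + rng.gauss(0.0, sigma) for p in best)
        candidate_return = dream_return(candidate)
        if candidate_return > best_return:
            best, best_return = candidate, candidate_return
    return best, best_return


gains, score = train_in_dream()
print(gains, score, dream_return((0.0, 0.0)))
```

Because every evaluation happens inside `dream_return`, the cost of training scales with compute rather than with real driving time.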

Equipping World Models with a 'Newton's Laws Engine'

For world models to be truly competent at autonomous driving, they must solve a core problem: how to make 'imagination' obey physical laws. NVIDIA's concept of 'Physical AI' injects a 'Newton's Laws Engine' into world models. With it, virtual rollouts move beyond idle speculation and offer practical guidance.

The neural PDE hybrid architecture is a key technology. By using Fourier Neural Operators (FNO) to approximate the equations of fluid mechanics, the model can compute physical phenomena in real time, such as 'tire splash trajectories on rainy days' and 'the effect of crosswinds on vehicle attitude.' In test scenarios, systems equipped with this technology have reportedly cut the prediction error for braking distance on wet roads from 30% to within 5%.
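A Fourier Neural Operator layer works in four steps:

1. It transforms its input to the frequency domain.
2. It keeps only a limited number of low-frequency modes and multiplies each by a learned complex weight.
3. It transforms the result back.
4. It adds a pointwise linear path before the nonlinearity.

The single-channel, pure-Python sketch below shows that core. Real FNOs use FFTs, many channels, and trained weights; the weight values here are placeholders.

```python
import cmath
import math


def spectral_conv_1d(u, weights):
    """Keep the lowest len(weights) Fourier modes of real signal u, scale each by a
    complex weight, and return the real inverse transform (an irfft-style rebuild)."""
    n = len(u)
    modes = len(weights)
    if modes > n // 2 + 1:
        raise ValueError("cannot keep more than n // 2 + 1 modes of a real signal")
    scaled = []
    for k in range(modes):
        coeff = sum(u[x] * cmath.exp(-2j * math.pi * k * x / n) for x in range(n))
        scaled.append(coeff * weights[k])
    out = []
    for x in range(n):
        total = scaled[0].real
        for k in range(1, modes):
            term = (scaled[k] * cmath.exp(2j * math.pi * k * x / n)).real
            # Non-Nyquist modes stand for a conjugate pair, so they count twice.
            total += term if 2 * k == n else 2.0 * term
        out.append(total / n)
    return out


def gelu(x):
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def fourier_layer(u, spectral_weights, local_weight, bias):
    """One FNO-style layer: GELU(spectral path + pointwise linear path + bias)."""
    spectral = spectral_conv_1d(u, spectral_weights)
    return [gelu(s + local_weight * v + bias) for s, v in zip(spectral, u)]


# Placeholder weights: pass the two lowest modes through, damp the third.
signal = [math.sin(2 * math.pi * x / 16) + 0.2 * math.sin(2 * math.pi * 6 * x / 16)
          for x in range(16)]
print(fourier_layer(signal, [1.0, 1.0, 0.5j], local_weight=0.1, bias=0.0))
```

Truncating to low modes is what lets the layer learn smooth, resolution-independent operators rather than pixel-level filters.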

The physical consistency loss function acts like a strict physics teacher. When the model 'fantasizes' a scenario that violates the law of inertia, such as 'a 2-ton SUV translating 5 meters sideways in 0.2 seconds,' it incurs a heavy penalty. Through millions of such corrections, world models gradually learn to 'keep their feet on the ground' and automatically obey physical laws in their imaginations.
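A physics-consistency term can be as simple as penalizing predicted motion whose implied acceleration exceeds what tires can deliver. For the SUV example, moving 5 m sideways from rest in 0.2 s needs a = 2d/t² = 250 m/s². That is about 25 g, far beyond what tire grip allows. The sketch assumes a 1-D lateral trajectory, a 0.9 g limit, and a squared-hinge penalty. All three are illustrative choices, not the specific loss of any named system.

```python
G = 9.81
A_MAX = 0.9 * G  # assumed tire-road limit of about 0.9 g


def required_accel(distance_m, time_s):
    """Constant acceleration needed to cover distance_m from rest in time_s: a = 2d / t^2."""
    return 2.0 * distance_m / time_s ** 2


def physics_consistency_penalty(positions, dt, a_max=A_MAX):
    """Squared-hinge penalty on finite-difference accelerations
    a_i = (p[i+1] - 2 p[i] + p[i-1]) / dt^2 that exceed a_max along a 1-D trajectory."""
    penalty = 0.0
    for i in range(1, len(positions) - 1):
        accel = (positions[i + 1] - 2.0 * positions[i] + positions[i - 1]) / dt ** 2
        excess = abs(accel) - a_max
        if excess > 0.0:
            penalty += excess ** 2
    return penalty


print(required_accel(5.0, 0.2) / G)  # about 25 g for the "sliding SUV"

steady_drift = [0.0, 0.25, 0.5, 0.75]  # constant lateral velocity, dt = 0.1 s
teleport = [0.0, 0.0, 1.25, 5.0]       # 5 m sideways within 0.2 s
print(physics_consistency_penalty(steady_drift, 0.1), physics_consistency_penalty(teleport, 0.1))
```

Added to the usual prediction loss, this term is zero for physically plausible trajectories and grows quickly for impossible ones.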

The multi-granularity token physical engine goes a step further. It decomposes the world into tokens with different physical properties, such as rigid bodies, deformable bodies, and fluids. When simulating 'a mattress falling off the car ahead,' the model computes both the mattress's rigid-body trajectory and the aerodynamic force of the surrounding airflow, then combines them into a wind-driven drift path. This fine-grained modeling is reported to improve prediction accuracy by more than 40%.
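Two token types are enough to illustrate the idea. A rigid-body token carries mass and velocity, and a fluid token supplies the surrounding air velocity; a quadratic drag force couples them. Everything here is a hypothetical toy: the crosswind is uniform, the motion is 2-D, the car's own forward motion is ignored, and the mattress's mass, area, and drag coefficient are made up. It is not the cited engine's design.

```python
import math
from dataclasses import dataclass

RHO_AIR = 1.225  # air density at sea level, kg/m^3


@dataclass
class RigidBodyToken:
    """Rigid-body token: lateral position x and height z (m), velocity (m/s), properties."""
    x: float
    z: float
    vx: float
    vz: float
    mass_kg: float
    area_m2: float
    drag_coeff: float


@dataclass
class FluidToken:
    """Fluid token, simplified to a uniform crosswind along x (m/s)."""
    wind_x: float


def step(body, air, dt=0.01, g=9.81):
    """Advance the rigid body one explicit-Euler step under gravity plus quadratic drag
    F = 0.5 * rho * Cd * A * |v_rel| * v_rel, where v_rel is velocity relative to the air."""
    rel_x = body.vx - air.wind_x
    rel_z = body.vz
    speed = math.hypot(rel_x, rel_z)
    k = 0.5 * RHO_AIR * body.drag_coeff * body.area_m2 * speed / body.mass_kg
    body.vx += -k * rel_x * dt
    body.vz += (-g - k * rel_z) * dt
    body.x += body.vx * dt
    body.z += body.vz * dt


def landing_offset(wind_x, height_m=1.5):
    """Lateral drift (m) of a falling mattress by the time it reaches the road."""
    mattress = RigidBodyToken(x=0.0, z=height_m, vx=0.0, vz=0.0,
                              mass_kg=20.0, area_m2=3.0, drag_coeff=1.2)
    air = FluidToken(wind_x=wind_x)
    while mattress.z > 0.0:
        step(mattress, air)
    return mattress.x


print(landing_offset(0.0), landing_offset(10.0))
```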

Together, these technologies give autonomous driving the capacity for 'counterfactual reasoning', which is precisely the core competence of experienced human drivers. In an emergency, the system can simulate several possibilities within milliseconds, such as 'if I don't slow down, there will be a collision' and 'if I swerve abruptly, the car may roll over.' It then chooses the best option. Traditional systems can only react after the fact, whereas world models can 'foresee the future.' Mushroom Auto's MogoMind applies this in practice. During rainy weather, its real-time road risk warning alerts drivers to standing water on the road 500 meters ahead. This is a typical case of combining physical-law modeling with real-time reasoning.
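Counterfactual reasoning amounts to rolling each candidate action forward in an internal model and comparing the imagined outcomes. In the sketch below, a one-dimensional stopping model stands in for the learned world model. The candidate actions, the rollover threshold, and the cost weights are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    decel: float          # longitudinal deceleration, m/s^2
    lateral_accel: float  # peak lateral acceleration, m/s^2
    leaves_lane: bool     # True if the maneuver takes the car out of the obstacle's lane


ROLLOVER_LIMIT = 6.0  # hypothetical lateral-acceleration threshold for rollover risk


def imagined_collision(speed, gap, decel, dt=0.05, horizon=3.0):
    """Roll the car forward for `horizon` seconds: does it reach a stopped obstacle?"""
    t = 0.0
    while t < horizon and speed > 0.0:
        gap -= speed * dt
        if gap <= 0.0:
            return True
        speed = max(0.0, speed - decel * dt)
        t += dt
    return False


def imagined_cost(action, speed, gap):
    crash = not action.leaves_lane and imagined_collision(speed, gap, action.decel)
    rollover = action.lateral_accel > ROLLOVER_LIMIT
    # Heavy penalties for imagined crashes or rollovers, a small one for discomfort.
    return ((1000.0 if crash else 0.0) + (1000.0 if rollover else 0.0)
            + action.decel + action.lateral_accel)


def choose_action(actions, speed, gap):
    """Counterfactual choice: imagine every candidate, keep the cheapest outcome."""
    return min(actions, key=lambda a: imagined_cost(a, speed, gap))


CANDIDATES = [
    Action("keep_speed", decel=0.0, lateral_accel=0.0, leaves_lane=False),
    Action("gentle_brake", decel=3.0, lateral_accel=0.0, leaves_lane=False),
    Action("hard_brake", decel=7.0, lateral_accel=0.0, leaves_lane=False),
    Action("swerve_hard", decel=0.0, lateral_accel=9.0, leaves_lane=True),
]

# 15 m/s (54 km/h) with a stopped obstacle 25 m ahead.
print(choose_action(CANDIDATES, speed=15.0, gap=25.0).name)  # hard_brake
```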

Three-Step Leap of World Models to Market Implementation

For world models to transition from theory to mass production, they must overcome the three major hurdles of 'data, computing power, and safety.' The industry has formed a clear roadmap for implementation and is steadily advancing along the path of 'offline enhancement - online learning - end-to-end control.'

The 'offline data augmentation' stage, which began in the second half of 2024, has already shown practical value. Leading Chinese automakers use world models to generate videos of extreme scenarios such as 'pedestrians crossing in heavy rain' and 'debris falling from trucks', and use them to train their existing perception systems. Test data show that the false alarm rate for such corner cases has dropped by 27%, the equivalent of giving the autonomous driving system a 'vaccine.'

In 2025, the industry is entering the 'closed-loop shadow mode' stage. Lightweight Memory modules embedded in mass-produced vehicles 'imagine' road conditions for the next 2 seconds, 5 times per second. When the 'imagination' deviates from the actual driving plan, the data is sent back to the cloud. This crowdsourced 'dreaming while driving' mode lets world models keep accumulating experience through daily commutes, as human drivers do. Mushroom Auto's holographic digital twin intersection in Tongxiang collects traffic dynamics within a 300-meter radius of the intersection in real time. This provides a real-data foundation for the online learning of world models.
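The shadow-mode trigger can be sketched as a distance check between the imagined trajectory and what the car actually planned or did. The 5 Hz rate and the 2 s horizon come from the text. The 0.2 s waypoint spacing, the 1 m threshold, and the function names are assumptions.

```python
import math

IMAGINE_HZ = 5    # imaginations per second, from the text
HORIZON_S = 2.0   # imagined horizon in seconds, from the text
STEP_S = 0.2      # hypothetical spacing of predicted waypoints


def max_deviation(predicted, actual):
    """Largest Euclidean distance (m) between matched predicted and actual waypoints."""
    return max(math.dist(p, a) for p, a in zip(predicted, actual))


def should_upload(predicted, actual, threshold_m=1.0):
    """Flag a shadow-mode sample for cloud upload when imagination and reality diverge."""
    return max_deviation(predicted, actual) > threshold_m


imaginations_per_hour = IMAGINE_HZ * 3600          # 18000 rollouts per driving hour
waypoints_per_rollout = round(HORIZON_S / STEP_S)  # 10
print(imaginations_per_hour, waypoints_per_rollout)
```

Only the flagged disagreements leave the car, which keeps upload volume far below the 18,000 rollouts each vehicle imagines per hour.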

The 'end-to-end physical VLA' stage, expected in 2026-2027, would bring a qualitative leap. Once onboard computing power exceeds 500 TOPS and algorithm latency drops below 10 milliseconds, the full V-M-C chain could take over driving decisions directly. Vehicles would then no longer separate 'perception, prediction, planning.' Like experienced drivers, they would 'take in the whole picture at a glance', slowing down automatically when they see children leaving school and changing lanes early when they detect something abnormal on the road. On the hardware side, NVIDIA's Thor chip is positioned for this workload. Its shared memory, cited at 200 GB/s, can serve the key-value (KV) cache that a Transformer-style Memory module uses to store and retrieve historical trajectory data. This 'software-hardware co-design' moves onboard deployment of world models from 'impossible' to 'achievable.'
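Sizing the KV cache of a Transformer-style Memory module shows why memory bandwidth matters: each layer stores one key and one value vector per head for every cached token. The configuration below (layers, heads, head dimension, cached steps, fp16 values) is entirely hypothetical. The 200 GB/s figure is taken from the text as an assumed effective bandwidth.

```python
def kv_cache_bytes(layers, heads, head_dim, cached_tokens, bytes_per_value):
    """Size of a Transformer KV cache: keys and values (factor 2) for every layer,
    head, and cached token."""
    return 2 * layers * heads * head_dim * cached_tokens * bytes_per_value


def full_read_time_ms(num_bytes, bandwidth_gb_per_s):
    """Time to stream the whole cache once at a given bandwidth (1 GB = 1e9 bytes)."""
    return num_bytes / (bandwidth_gb_per_s * 1e9) * 1e3


cache = kv_cache_bytes(layers=24, heads=16, head_dim=64, cached_tokens=512, bytes_per_value=2)
read_ms = full_read_time_ms(cache, bandwidth_gb_per_s=200.0)
print(cache, read_ms)
```

Under these assumptions the cache is about 50 MB, and one full pass over it takes roughly a quarter of a millisecond, a small slice of a 10 ms latency budget. Larger caches or multiple passes per decision scale that cost linearly.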

'Growing Pains' of World Models

The development of world models has not been smooth sailing, facing multiple challenges such as 'data hunger,' 'computing power black holes,' and 'safety ethics.' The solutions to these 'growing pains' will determine the speed and depth of technology implementation.

The data bottleneck is the most pressing issue. Training physical-level world models requires video annotated with quantities such as speed, mass, and friction coefficient, and at present only giants like Waymo and Tesla hold such data at scale. The open-source community is attempting to recreate an 'ImageNet moment.' Tsinghua University's MARS dataset has released 2000 hours of driving clips with 6D poses, giving small and medium-sized enterprises a ticket in.

The high cost of computing power is also daunting. Training a world model with 1 billion parameters requires 1000 A100 GPUs running for 3 weeks, at a cost of more than one million dollars. However, techniques such as mixed-precision training and Mixture-of-Experts (MoE) architectures have cut compute requirements by a factor of four. In addition, 8-bit quantized inference keeps onboard power consumption to about 25 watts, paving the way for mass production.
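Two pieces of this paragraph can be checked or illustrated directly. First, 1000 GPUs for 3 weeks is 504,000 GPU-hours, so "more than one million dollars" implicitly assumes roughly $2 per A100-hour. Second, 8-bit inference typically starts from a quantization step like the symmetric per-tensor scheme below. The weights are hypothetical, and production toolchains add per-channel scales, calibration, and int8 kernels.

```python
def gpu_hours(num_gpus, weeks):
    """Total accelerator time for a training run."""
    return num_gpus * weeks * 7 * 24


def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: q = round(w / scale), scale = max|w| / 127."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0.0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q, scale):
    """Recover approximate float weights; the error per weight is at most scale / 2."""
    return [v * scale for v in q]


print(gpu_hours(1000, 3))  # 504000 GPU-hours for 1000 GPUs over 3 weeks

weights = [0.5, -1.27, 0.003, 1.0]  # hypothetical layer weights
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
print(q, scale, restored)
```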

The debate over safety and interpretability touches on deeper questions of trust. When the model's 'imagination' does not match reality, who is responsible? The industry consensus is a 'conservative strategy + human-machine co-driving' approach. When the predicted collision probability exceeds 3%, the system automatically degrades to assisted driving and prompts the human to take over. This margin-leaving design builds a line of safety defense before the technology is perfected.
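The degrade-to-assisted rule can be sketched as a Monte Carlo estimate of collision probability followed by a threshold check. The sketch treats the obstacle's distance as Gaussian-uncertain and assumes ideal braking. The uncertainty model, sample count, and mode names are hypothetical; only the 3% threshold comes from the text.

```python
import random

TAKEOVER_THRESHOLD = 0.03  # 3% predicted collision probability, from the text


def collision_probability(speed_ms, decel_ms2, obstacle_mean_m, obstacle_std_m,
                          samples=10_000, seed=0):
    """Monte Carlo estimate: sample uncertain obstacle distances and count how often
    the ideal braking distance v^2 / (2a) reaches the obstacle."""
    rng = random.Random(seed)
    stop_distance = speed_ms ** 2 / (2.0 * decel_ms2)
    hits = sum(rng.gauss(obstacle_mean_m, obstacle_std_m) <= stop_distance
               for _ in range(samples))
    return hits / samples


def driving_mode(p_collision):
    """Degrade to assisted driving and request a takeover above the threshold."""
    if p_collision > TAKEOVER_THRESHOLD:
        return "assisted_driving_request_takeover"
    return "autonomous"


p_far = collision_probability(10.0, 5.0, obstacle_mean_m=100.0, obstacle_std_m=1.0)
p_near = collision_probability(10.0, 5.0, obstacle_mean_m=10.0, obstacle_std_m=1.0)
print(p_far, driving_mode(p_far), p_near, driving_mode(p_near))
```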

The discussion of ethical boundaries is more philosophical. If the model "runs over" digital pedestrians during virtual training, will it develop a preference for violence? The "Digital Twin Sandbox" developed by MIT is trying to address this problem. It rehearses extreme scenarios such as the "Trolley Problem" in a simulated environment and safeguards the model's moral baseline through value-alignment algorithms.

Redefining Intelligence Through World Models

Autonomous driving is just the first battleground for world models. When AI can accurately simulate physical laws and deduce causal chains in the virtual world, its impact will radiate to multiple fields such as robotics, the metaverse, and smart cities.

In home service scenarios, robots equipped with world models can predict that "knocking over a vase will cause it to break", thereby adjusting the amplitude of their movements; in industrial production, the system can simulate in advance "thermal deformation of a robotic arm grasping high-temperature parts" to avoid accidents. The essence of these abilities is the evolution of AI from a "tool executor" to a "scene understander".

A more profound impact lies in the reconstruction of the definition of "intelligence". From the "recognition" of CNN to the "association" of Transformer, and then to the "imagination" of world models, AI is continuously breaking through along the evolutionary path of human cognition. When machines can "rehearse the future in their minds" like humans, the boundaries of intelligence will be completely rewritten.

Perhaps within five years, your car will plan a "zero red light" route three intersections in advance, and a robot will reach out to steady a coffee cup that is about to tip over. Then we will suddenly realize that the world model brings not only technological progress but also a cognitive revolution in "how machines understand the world".