Apple Unveils Its Big AI Move: Can the Next-Generation iPhone Catch Up with Android AI Phones?

06/12 2024

The tech world's annual "Spring Festival Gala" has begun, and AI has unsurprisingly become the focus of this year's event.

In the early hours of June 11, Beijing time, Apple delivered its keynote at WWDC (Worldwide Developers Conference). It ran 1 hour and 43 minutes, with the latter half devoted to "Apple Intelligence" (AI). In roughly 40 minutes, Apple mentioned Apple Intelligence more than 60 times:

Truly AI.

Apple's marketing team clearly racked its brains here. Rather than using terms like "artificial intelligence" or "machine intelligence" as other manufacturers do, it cleverly coined "Apple Intelligence," which tells users this is AI while setting it apart from everyone else's AI. After all, it is Apple's AI.

However, this is a surface-level effort that makes the name easier to remember. Whether it can "retain" people depends on actual functionality and performance.

Fortunately... compared to the many "AI phones" that have already been launched, it seems like Apple hasn't brought much of a surprise?

iOS 18: A Steady Upgrade, with Large Models as the Grand Finale

As the most important platform at WWDC, iOS affects countless developers and iPhone users with every iteration. With iOS 18, Apple has made what could be called a "decision that defies its ancestors," a break with long-standing tradition: users can now place apps freely on the home screen and move them in batches. Chinese Android systems have been ahead here by several versions.

In addition, iOS 18 also supports the Material You color scheme. All right, that one is Google's (introduced with Android 12), but in short, iOS 18 can "redraw" all app icons in a unified color, which is either extracted intelligently from the wallpaper or chosen by the user.

Of course, iOS 18 also significantly overhauls the Photos app, opens up more widget entry points, and adds a game mode, offline maps, and more. For more details, see "iOS 18 is Released, Is That It?" by LeiTech.

In fact, more than any of these routine updates, what the outside world most anticipated from iOS 18, and from this WWDC as a whole, was AI.

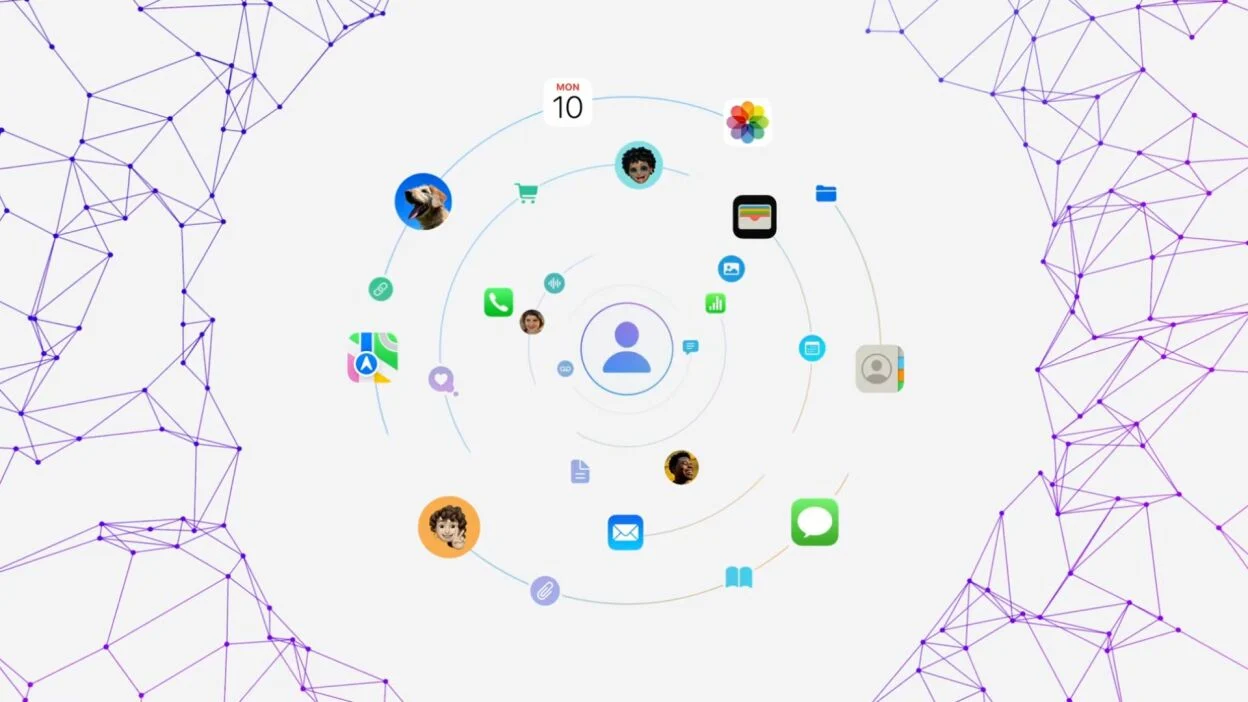

Image/Apple

As the audience expected, Apple did serve up an AI feast in the latter half of the keynote, showing how Apple Intelligence changes the experience on iPhone, iPad, and Mac, as well as its potential for cross-platform and cross-app operations.

One of the most typical changes is happening to Siri.

Like other manufacturers' AI assistants driven by large models, Apple claims that Apple Intelligence has made Siri smarter - able to more accurately understand users' questions and needs, deeply integrate information from emails, photos, texts, maps, calendars, and the current screen on iPhone, iPad, and Mac, and more intelligently perform operations and answer questions.

By the way, Siri has also integrated OpenAI's GPT-4o model. When it encounters a complex problem, Siri asks whether to use ChatGPT for the answer. If the user agrees, the answer is generated by GPT-4o at no charge.

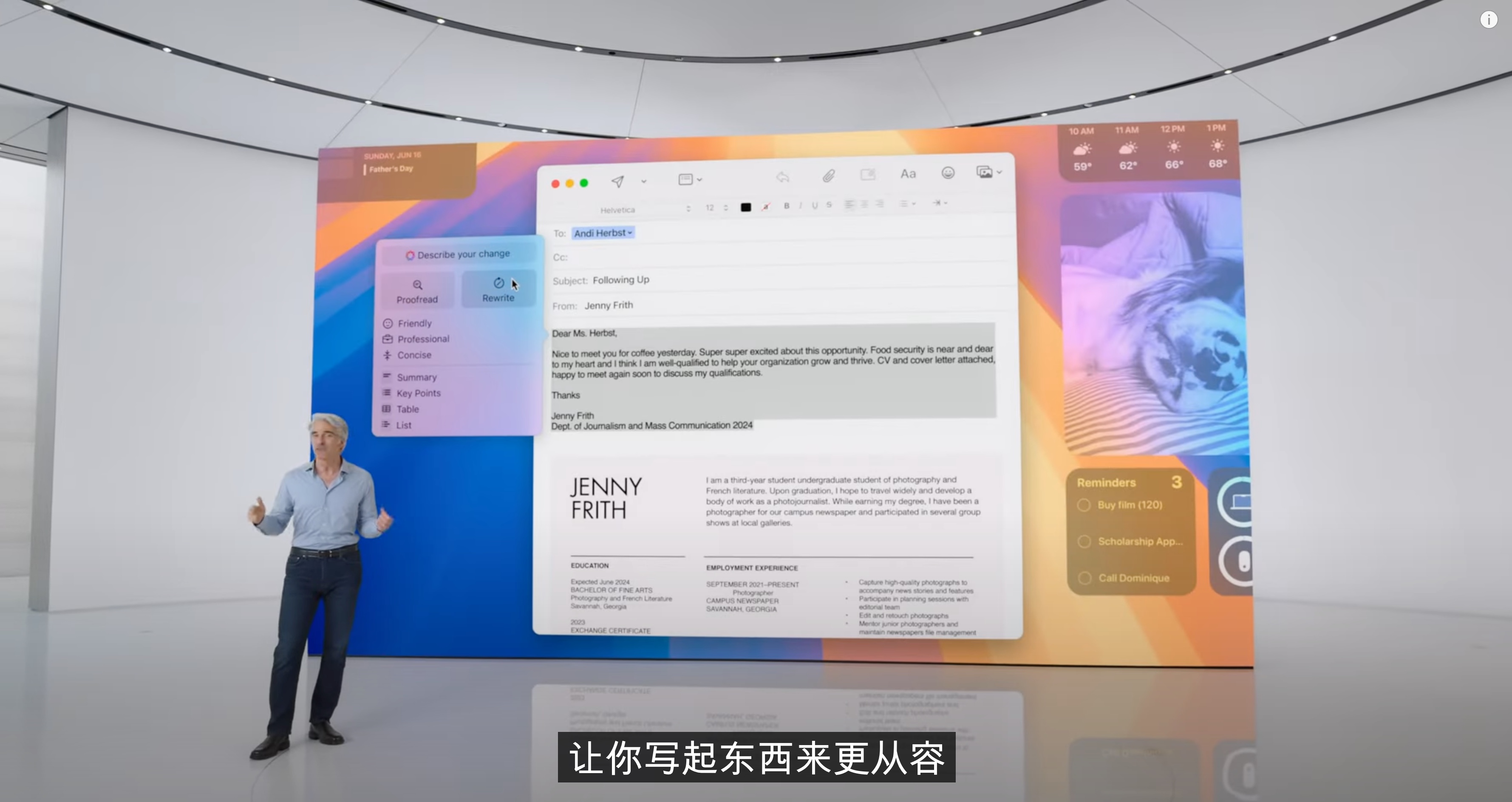

An AI writing assistant driven by Apple Intelligence has also arrived, and it works in almost all native and third-party apps that involve text input. In theory, users can use it in the WeChat input box as well. Its capabilities are somewhat limited, though: it can rewrite, polish, and proofread, but it cannot generate text from scratch.

Image/Apple

There are also AI image generation and Genmoji features, which likewise cover almost all native and third-party apps. In addition, Apple has implemented AI notification organization, AI call transcription, and summarization on iPhone, iPad, and Mac.

However, it should be noted that few devices support Apple Intelligence. iPad and Mac products with M-series chips are almost all supported, but among iPhones only the latest iPhone 15 Pro series, equipped with the A17 Pro chip, supports it:

iPhone users are not AI.

Apple Intelligence and GPT-4o: Apple Chooses a Different AI Path

Strictly speaking, Apple Intelligence is a personal intelligence system deeply integrated into iOS 18, iPadOS 18, and macOS Sequoia, made up of multiple generative models. Broadly speaking, these models can be divided into device-side (on-device) and cloud-side (server-side) models.

In other words, after considering cost, energy consumption, performance, privacy, security, and personalization advantages, Apple has also chosen hybrid AI. This is not surprising as it is almost a consensus among global personal device manufacturers, from overseas to domestic, from top giants to mainstream manufacturers.

But Apple is still Apple:

Image/Apple

Under the Apple Intelligence architecture, an on-device semantic index organizes and refines information from various apps, and user requests are first routed through this index. Most models that touch personal data run locally on the iPhone, iPad, or Mac, while larger and more powerful models run in Apple's self-built data centers using its "Private Cloud Compute" technology.

Yes, even when running in the cloud, Apple emphasizes privacy. Apple even published a separate article on its official blog detailing Private Cloud Compute. Simply put, Private Cloud Compute does not store user data, Apple itself cannot access that data on the server side, and users retain control over their interactions with the server.

In addition, Apple has one more layer of cloud-side model in the broader sense: GPT-4o.

Although there are many large models today, GPT-4o is still the top model and represents the smartest AI available. According to the demonstration, GPT-4o is integrated into at least Siri and the AI writing functions, where it can produce better answers and generate text and images from scratch. Whether GPT-4o will be integrated in more places is something we may only find out at the official launch in the fall.
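The split between local and cloud models described above can be pictured with a small sketch. This is only an illustration under stated assumptions, not Apple's implementation: the contents of `SEMANTIC_INDEX`, the `needs_large_model` flag, and all function names are hypothetical, and the article does not describe the real routing criteria.

```python
from dataclasses import dataclass, field
from enum import Enum


class Target(Enum):
    ON_DEVICE = "on-device model"
    PRIVATE_CLOUD = "private cloud model"


# Toy stand-in for the on-device semantic index: app name -> text snippets.
# (Hypothetical data, for illustration only.)
SEMANTIC_INDEX = {
    "calendar": ["Team meeting moved to 5 pm"],
    "messages": ["School play starts at 6 pm"],
}


@dataclass
class Plan:
    target: Target
    context: list = field(default_factory=list)


def lookup_context(query: str) -> list:
    """Return indexed snippets that share at least one word with the query."""
    words = set(query.lower().split())
    return [
        snippet
        for snippets in SEMANTIC_INDEX.values()
        for snippet in snippets
        if words & set(snippet.lower().split())
    ]


def plan_request(query: str, needs_large_model: bool) -> Plan:
    """Gather local context first, then choose where the request runs."""
    context = lookup_context(query)  # always happens on the device
    # Assumption: a simple flag decides the tier; the real criteria are unknown.
    target = Target.PRIVATE_CLOUD if needs_large_model else Target.ON_DEVICE
    return Plan(target=target, context=context)
```

Calling `plan_request("meeting time", needs_large_model=False)` keeps the request on the device and attaches the matching calendar snippet as context. The point of the design is that context gathering always happens locally, and only the model tier changes.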

In contrast, other phone manufacturers have adopted different strategies. Honor's approach is to build a large model platform that accommodates as many general and vertical large models as possible; in more scenarios, device-side models analyze the user's intent and then invoke third-party large models to fulfill it.

OPPO takes the opposite approach. Its self-trained Andes large model comes in three tiers: the Tiny version on the device side, and the Turbo and Titan versions on the server side. OPPO is also building its own data center and focusing more on vertical capabilities, opening up to third parties only at the agent level. Vivo generally follows this approach as well.

OPPO Andes Large Model, Image/LeiTech

Of course, it's still too early to say which route will go the farthest, but Apple has indeed chosen the most suitable strategy for its own situation.

At the same time, in terms of experience, Apple's main focus is to provide a more comprehensive AI experience at the system level, breaking down the capabilities of large models into individual APIs (Application Programming Interfaces) and functional modules, allowing users to access global AI functions while also enabling developers to utilize Apple Intelligence's capabilities.

The Next-Generation iPhone: A iPhone? Ai Phone?

Will AI change phones? For many people, the answer is yes. But how will AI change phones? I'm afraid no person or manufacturer can say for sure.

During the WWDC keynote, Apple demonstrated how AI changes the user experience on iPhone, iPad, and Mac, with particular emphasis on the fact that Apple Intelligence works from users' personal information and context. Simple examples include "retrieve the file sent to me by xx last week," "see all photos of my mother and me," and "play the podcast my wife sent me a few days ago."

Craig Federighi, Apple's senior vice president of software engineering, also cited a relatively complex scenario: asking whether he could still make it to his daughter's performance if a meeting were postponed. Here, Apple Intelligence finds the relevant details of the daughter's performance (time, location) on the device, along with the time and location of the meeting, and then gives a final answer based on common transportation methods and estimated travel time.

Image/Apple
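Once the relevant facts have been pulled from the device, the reasoning in Federighi's scenario comes down to simple time arithmetic. A minimal sketch, assuming made-up times and a hypothetical `can_make_it` helper (none of these values come from the keynote):

```python
from datetime import datetime, timedelta


def can_make_it(meeting_end: datetime, travel_minutes: int,
                event_start: datetime) -> bool:
    """True if leaving when the meeting ends still reaches the event on time."""
    arrival = meeting_end + timedelta(minutes=travel_minutes)
    return arrival <= event_start


# Hypothetical values: meeting pushed back to end at 17:30, play starts at 18:00.
meeting_end = datetime(2024, 6, 11, 17, 30)
play_start = datetime(2024, 6, 11, 18, 0)

on_time = can_make_it(meeting_end, 25, play_start)   # 25-minute drive
too_late = can_make_it(meeting_end, 40, play_start)  # 40-minute drive
```

With a 25-minute trip the arrival time is 17:55, so `on_time` is `True`; with a 40-minute trip it is 18:10, so `too_late` is `False`. The hard part for an assistant is not this comparison but finding the right meeting, event, and travel estimate in the user's data.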

This idea is not new. Many manufacturers have talked up the beautiful vision of a "personal digital assistant" when making big promises about AI phones, but none has actually achieved it.

If Apple can truly deliver personalized intelligence, providing genuinely valuable answers based on information and services scattered across devices, it will undoubtedly be a significant turning point for AI phones and will largely determine whether the next-generation iPhone is:

A iPhone? Or Ai Phone?

In terms of timing, Apple Intelligence is not yet available. According to Apple's plan, testing of the U.S. English version will begin this summer, with an official launch in the fall. In other words, the iPhone 16 should ship with Apple Intelligence preinstalled, while the iPhone 15 Pro series and the iPad and Mac models with M-series chips may gain support gradually.

However, this applies only to the U.S. English version. Support for Chinese and other languages is not expected until next year, and there is no more specific timeline yet. Moreover, for users in China, Apple Intelligence is bound to be significantly watered down, and that includes the free access to GPT-4o.

Additionally, Apple's rollout is indeed slow, which once again suggests that the announcement itself was rushed. Even in the keynote, Apple could only repeat that Siri and Apple Intelligence will gain more and better features in the future, which rings somewhat hollow and comes across as making empty promises.

Overall, I tend to think that Apple may not yet have figured out the roadmap for Apple Intelligence.

Will Apple Redefine AI Phones?

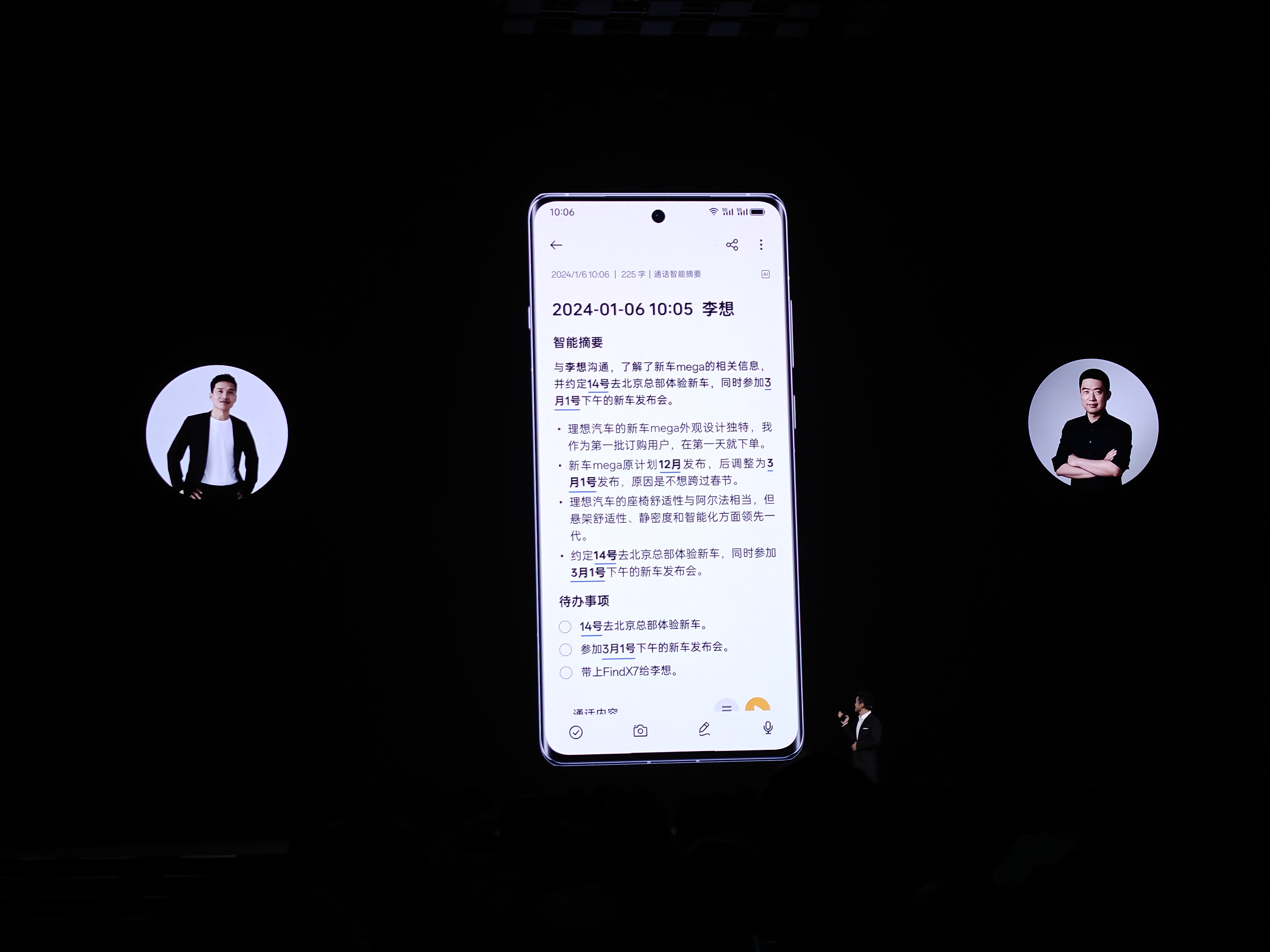

Setting aside the "AI phones" that manufacturers promoted in the past, phones with on-device generative AI can probably be traced back to OPPO's Find X7 series, released in January this year. In addition to a new AI assistant and AI-generated images, the Find X7 series also includes more practical AI functions such as AI object removal and AI call and article summaries, which are gradually spreading across various systems and reaching mass consumers.

AI Call Summary, Image/LeiTech

In terms of functionality alone, Apple has brought hardly any brand-new AI features. AI writing assistance, image generation, transcription, and summaries have all appeared on various flagship phones already. However, compared with other phone manufacturers, Apple has indeed chosen a different route for bringing AI to devices, both in system architecture and in implementation across multiple devices and applications.

From a developer's perspective, only Apple is truly ready to open up AI capabilities to third-party apps, having started down this path with the Core ML framework in 2017.

From this perspective, Apple Intelligence may completely change the current scattered and disorderly development ecosystem of third-party AI applications, and even influence the development ecosystems of Android and Windows through iPhone, iPad, and Mac.

But the question is whether the era of generative AI will still be application-centric. We don't know, but generative AI, as represented by ChatGPT, has already spawned a completely different interaction method and ecosystem, one that may change at any time as large models progress.

From this perspective, how Apple will redefine AI phones and AI PCs may not be important. What's important is how OpenAI and GPT large models will define the interaction between humans and machines.

Source: LeiTech