NVIDIA GTC 2025: Rubin Chip Makes Its Debut, Marking a Breakthrough in AI Full-Stack Technology

10/30 2025

Authored by Zhineng Zhixin

At the 2025 GTC Conference held in Washington, Jensen Huang introduced Vera Rubin, a key driver behind NVIDIA's push toward a $5 trillion market valuation. What are its defining characteristics?

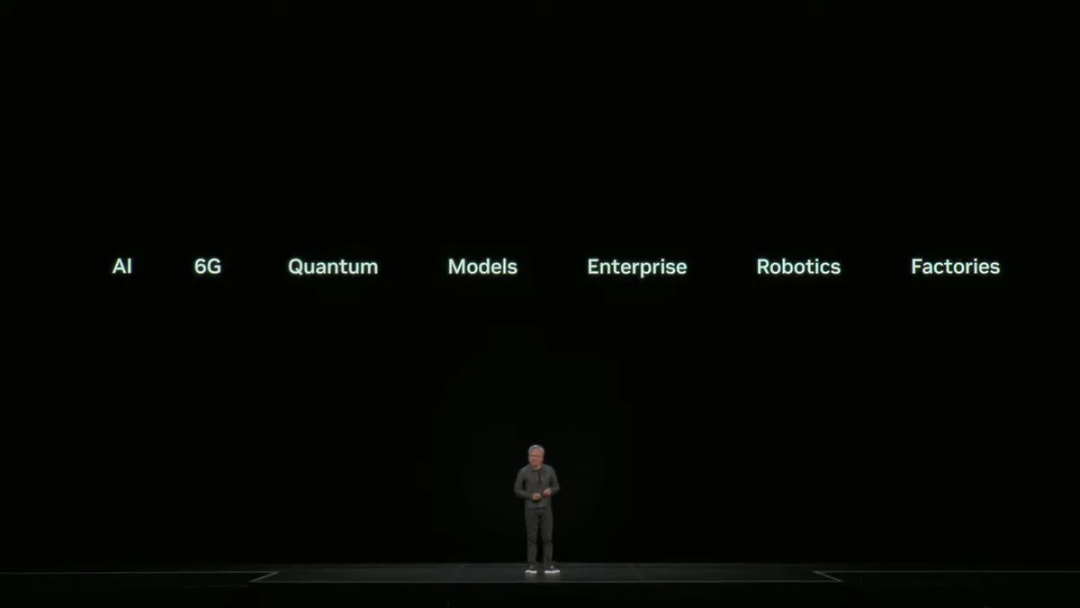

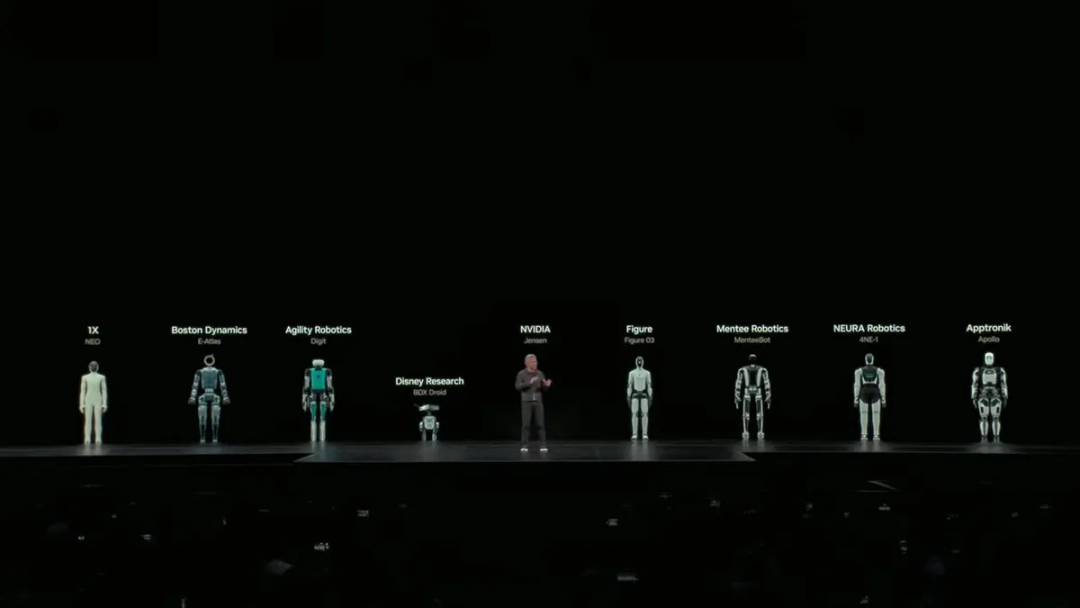

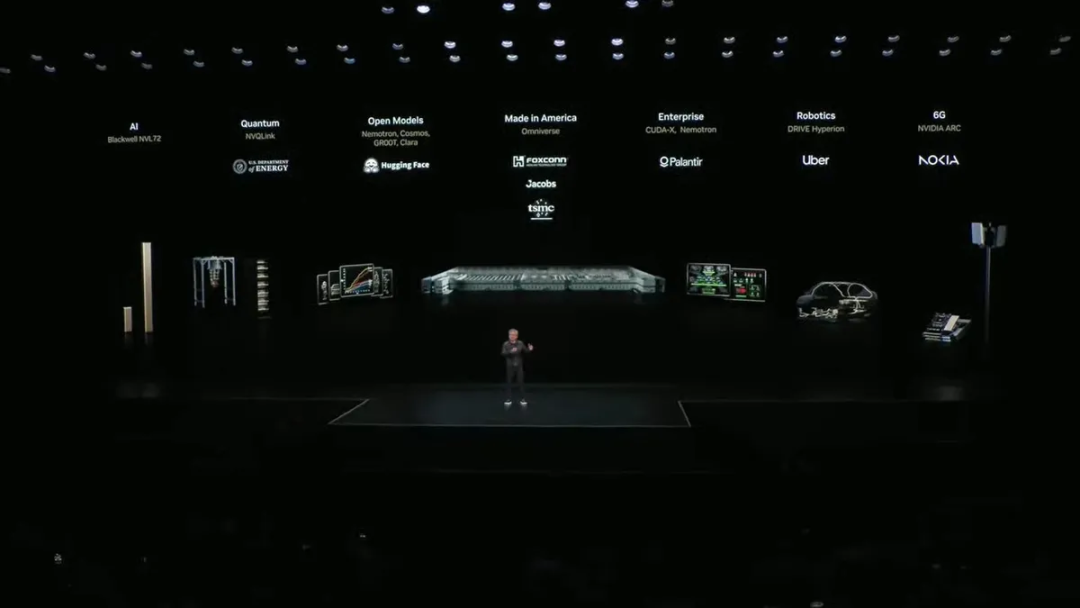

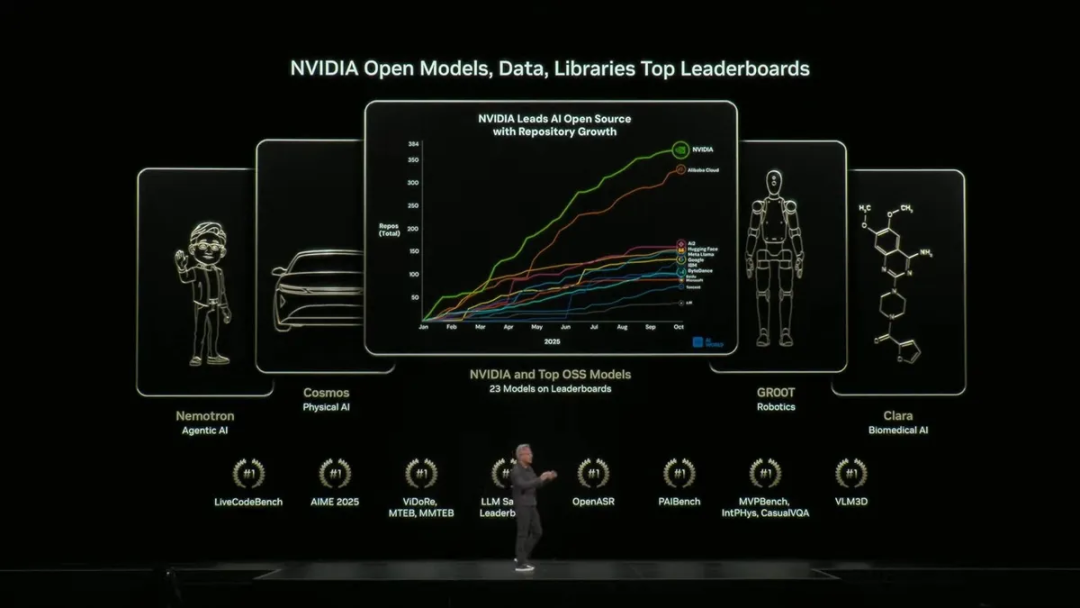

◎ Rubin Single-GPU Computing Power: At 50 PFLOPS (three times the previous generation), it cuts electricity costs by millions of dollars and reduces the training time for large models from three months to two weeks. Mass production is slated for 2026.

◎ Robotics/Edge Computing: IGX Thor delivers an eightfold increase in computing power, while BlueField-4 supports the construction of large AI factories.

◎ Autonomous Driving: The Hyperion platform, in collaboration with Uber, aims to launch 100,000 Robotaxis by 2027.

◎ Open Source & Collaboration: 650 AI models are made freely available; $1 billion is invested in Nokia for 6G development; seven supercomputers are being built in partnership with the U.S. Department of Energy; and the NVQLink quantum interconnect features ultra-fast error correction.

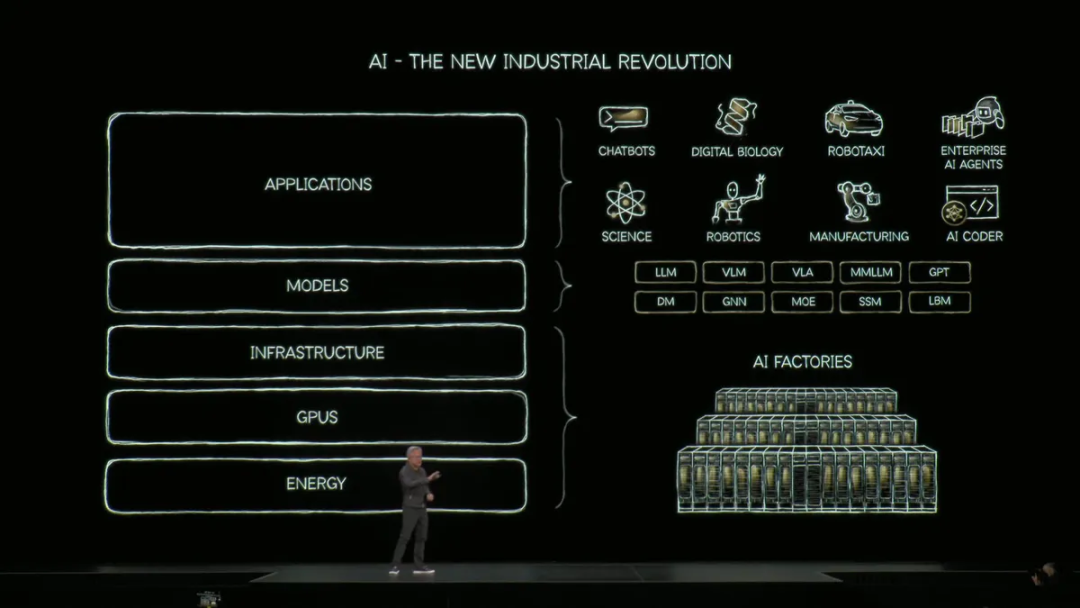

The unveiling of a suite of core technologies, including the Vera Rubin super chip, BlueField-4 DPU, IGX Thor robotics platform, and the Omniverse DSX AI factory blueprint, lays the foundation for a computing power ecosystem that spans AI, autonomous driving, quantum computing, 6G communication, and robotics.

Part 1: Rubin Super Chip

The highlight of the GTC Conference was undoubtedly NVIDIA's unveiling of the new-generation Vera Rubin super chip. Codenamed Rubin, this GPU epitomizes the pinnacle of human achievement in microfabrication and system architecture integration.

The Rubin chip integrates over 20 billion transistors onto a silicon die no larger than a fingernail. It is tightly coupled with the Vera CPU through the NVLink-C2C interconnect, forming a unified computing engine.

The Vera CPU, built on a custom Arm architecture, boasts 88 cores and 176 threads. It enables a staggering 1.8 TB/s data exchange bandwidth with two Rubin GPUs, marking a transition from mere 'CPU+GPU' collaboration to true fusion.

The Rubin GPU achieves 50 PFLOPS in FP4 precision computing. Meanwhile, the NVL144 platform (comprising 144 GPUs) delivers 3.6 ExaFLOPS in FP4 inference and 1.2 ExaFLOPS in FP8 training, more than three times the performance of the GB300 NVL72.
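
The per-GPU and rack-level FP4 figures can be cross-checked with simple arithmetic. The sketch below assumes that "144" counts GPU dies, that each Rubin package holds two dies, and that the 50 PFLOPS figure applies to one package. The article states none of this, and the variable names are illustrative only.

```python
# Figures from the article
PFLOPS_PER_PACKAGE_FP4 = 50   # "Rubin GPU achieves 50 PFLOPS in FP4"
DIES_PER_RACK = 144           # "NVL144 platform (comprising 144 GPUs)"

# Assumption (not stated in the article): two GPU dies per package,
# and the 50 PFLOPS figure is quoted per package.
DIES_PER_PACKAGE = 2


def rack_exaflops(dies: int, dies_per_package: int, pflops_per_package: float) -> float:
    """Aggregate rack throughput in ExaFLOPS (1 ExaFLOPS = 1000 PFLOPS)."""
    packages = dies // dies_per_package
    return packages * pflops_per_package / 1000


nvl144_fp4 = rack_exaflops(DIES_PER_RACK, DIES_PER_PACKAGE, PFLOPS_PER_PACKAGE_FP4)
naive_fp4 = rack_exaflops(DIES_PER_RACK, 1, PFLOPS_PER_PACKAGE_FP4)

print(f"Two dies per package: {nvl144_fp4:.1f} ExaFLOPS")
print(f"One die per package:  {naive_fp4:.1f} ExaFLOPS")
```

Under the two-die assumption the result matches the quoted 3.6 ExaFLOPS. Treating all 144 units as separate 50 PFLOPS GPUs would give twice that figure.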

This translates to a drastic reduction in the training time for GPT-5-level ultra-large language models, from three months to just two weeks, while also cutting energy consumption by over 40%.

The chip is manufactured on TSMC's N3P process, which raises transistor density to 180 million/mm² and improves energy efficiency by 3.5 times compared to the previous generation. For data centers, this improvement is revolutionary: a single 100-megawatt AI center could save over $200 million in annual electricity costs.
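
One way to see where a savings figure of this size could come from is to compare the electricity bill of a 100-megawatt facility with the bill for doing the same work at the previous generation's efficiency. The sketch below is a rough estimate only. The electricity price of $0.10 per kWh is an assumption not taken from the article, the load is assumed constant at full power, and cooling overhead is ignored.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years

# Figures from the article
FACILITY_MW = 100          # size of the AI center
EFFICIENCY_GAIN = 3.5      # energy efficiency vs. the previous generation

# Assumption (not from the article): a flat electricity price
USD_PER_KWH = 0.10


def annual_cost_usd(power_mw: float, usd_per_kwh: float) -> float:
    """Yearly electricity cost of a constant load, in US dollars."""
    kwh_per_year = power_mw * 1000 * HOURS_PER_YEAR
    return kwh_per_year * usd_per_kwh


# Power the previous generation would need to deliver the same work
equivalent_old_mw = FACILITY_MW * EFFICIENCY_GAIN

new_cost = annual_cost_usd(FACILITY_MW, USD_PER_KWH)
old_cost = annual_cost_usd(equivalent_old_mw, USD_PER_KWH)
savings = old_cost - new_cost

print(f"New-generation bill: ${new_cost / 1e6:,.1f}M per year")
print(f"Same work, old gen:  ${old_cost / 1e6:,.1f}M per year")
print(f"Implied savings:     ${savings / 1e6:,.1f}M per year")
```

With these assumptions the implied savings come to about $219 million per year, in line with the quoted figure. A different price or utilization level would shift the result proportionally.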

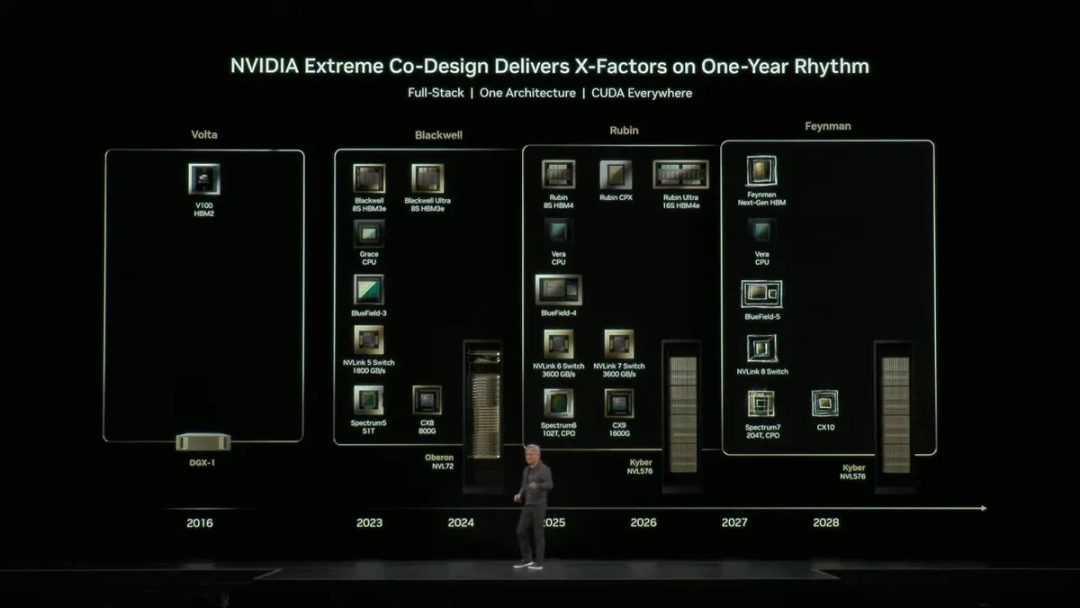

The Rubin Ultra NVL576 platform, planned for 2027, is set to further elevate FP4 inference performance to 15 ExaFLOPS, nearly 14 times that of the GB300.
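
The multipliers quoted for the two platforms can be checked against each other. The sketch below derives the GB300 baseline implied by the "nearly 14 times" claim and then recomputes the NVL144 ratio from it. Every input is a figure quoted in the article, the derived baseline is an estimate rather than an official specification, and the variable names are illustrative.

```python
# Figures quoted in the article
RUBIN_ULTRA_NVL576_FP4_EF = 15.0   # ExaFLOPS, FP4 inference
RUBIN_ULTRA_VS_GB300 = 14.0        # "nearly 14 times that of the GB300"
VERA_RUBIN_NVL144_FP4_EF = 3.6     # ExaFLOPS, FP4 inference

# GB300 baseline implied by the Rubin Ultra claim (an estimate, not a spec)
implied_gb300_ef = RUBIN_ULTRA_NVL576_FP4_EF / RUBIN_ULTRA_VS_GB300

# Ratio of NVL144 to that implied baseline
nvl144_vs_gb300 = VERA_RUBIN_NVL144_FP4_EF / implied_gb300_ef

print(f"Implied GB300 FP4 throughput: {implied_gb300_ef:.2f} ExaFLOPS")
print(f"NVL144 vs GB300:              {nvl144_vs_gb300:.2f}x")
```

The implied baseline is a little over 1 ExaFLOPS, which puts the NVL144 at roughly 3.4 times the GB300. That agrees with the "more than threefold" claim made for the NVL144.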

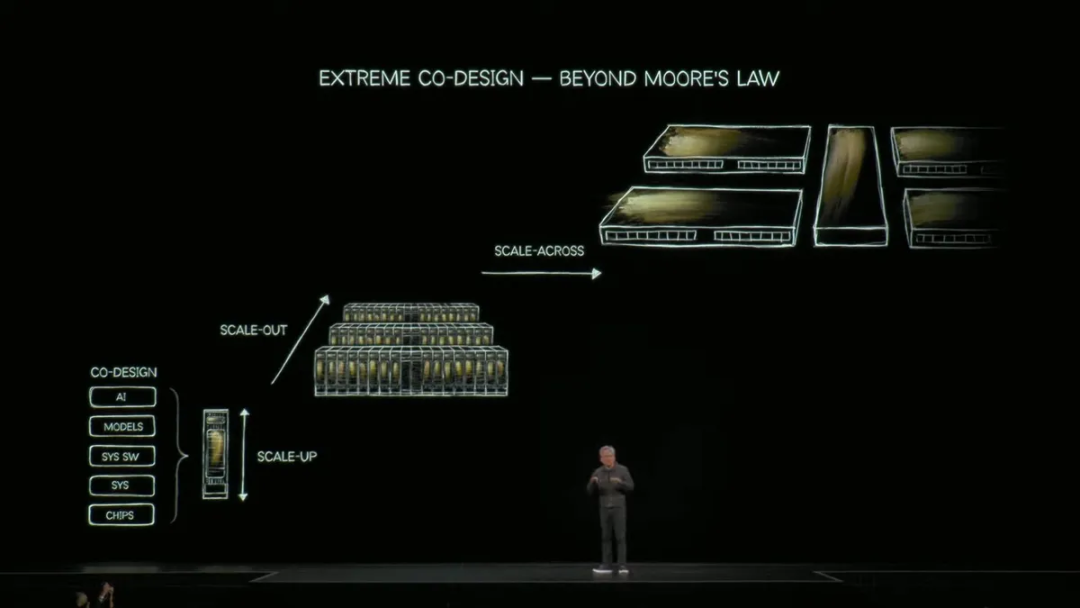

NVIDIA emphasizes a 'Holistic Co-Design' approach, integrating chips, networks, software, and cooling systems into a unified physical and logical framework.

This philosophy is extended in the Omniverse DSX AI factory blueprint, where the data center transforms into a programmable 'AI manufacturing site,' optimized for token generation, model training, and inference tasks.

The Rubin GPU's interconnect design introduces the NVQLink quantum interconnect architecture, facilitating high-speed communication between GPUs and quantum processors (QPUs). This enables AI and quantum computing to collaborate seamlessly within the same computing system.

Part 2: From AI Factories to the Intelligent World

At the GTC Conference, Jensen Huang unveiled a comprehensive five-year full-stack roadmap covering key areas such as GPUs, CPUs, DPUs, networks, 6G, and robotics.

This roadmap showcases not just the evolution of individual products but a self-reinforcing ecological cycle.

● In the realm of data centers and AI computing, NVIDIA disclosed that it holds $500 billion in AI chip orders, primarily from the world's top six cloud computing giants—Amazon, Google, Meta, Microsoft, Oracle, and CoreWeave.

The driving force behind these orders is the efficient token generation capability of the Blackwell and Rubin platforms, which offer superior unit TCO (Total Cost of Ownership).

Jensen Huang stated, 'While the world's second-best GPU is NVIDIA's H200, the GB200's performance is ten times that.'

NVIDIA also introduced the BlueField-4 DPU for AI storage and network security scenarios, supporting 800 Gb/s throughput. Additionally, the IGX Thor robotics computing platform, based on the Blackwell architecture, delivers 5,581 FP4 TFLOPS of AI computing power, providing a unified platform for smart manufacturing and autonomous driving.

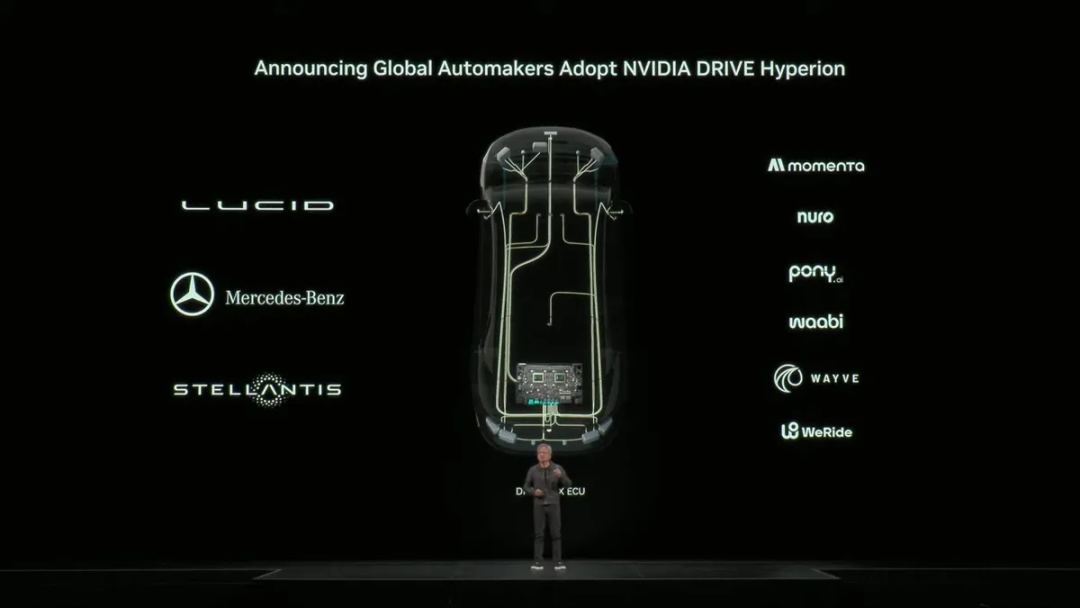

● In the automotive sector, NVIDIA announced the launch of the Drive Hyperion platform and a partnership with Uber for autonomous taxi services.

● In the telecommunications sector, NVIDIA introduced the Aerial ARC 6G platform and invested $1 billion in Nokia, acquiring a 2.9% stake, to jointly advance AI-RAN and AI-native 6G network research and development.

NVIDIA proposed the concept of the 'AI Factory,' treating AI as a new 'manufactured product,' where computing power, energy consumption, and cooling become 'production factors.'

The Omniverse DSX platform enables collaborative design of architecture, power, and AI stacks, allowing enterprises to build AI production lines akin to operating factories. This model signifies that computing power is emerging as a new strategic resource, following in the footsteps of land and capital.

To mitigate policy and supply risks, NVIDIA emphasized its 'Made in America' strategy at GTC, announcing that all future AI factories will be constructed domestically. It also collaborated with the U.S. Department of Energy to build seven AI supercomputers, with the largest housing 100,000 Blackwell GPUs for nuclear safety and scientific research tasks. NVIDIA has evolved from a technology company into a key pillar of America's AI infrastructure.

Summary

At NVIDIA's GTC 2025 Conference, the launch of the Vera Rubin super chip reaffirmed NVIDIA's leadership in AI computing performance. The strategic layout of AI factories, 6G communication, quantum interconnects, and robotics platforms creates a 'smart ecological closed loop' that encompasses hardware, software, algorithms, energy, and manufacturing.