Is There Hope for the Relationship-Inexperienced? Enter AI: Your Dating Guru!

09/26 2025

On the international crowdfunding platform Kickstarter, an intriguing AI project named Red Flag Scanner Ai has surfaced. It promises that once you upload a screenshot of your chat history, the AI can swiftly pinpoint potential 'red flags' in your romantic life.

Is this a revolutionary breakthrough set to rescue countless love-struck souls, or merely another 'IQ tax' (an overhyped product that profits from buyers' credulity) exploiting our anxieties?

Layout: Yanzi

What Exactly Is Red Flag Scanner Ai?

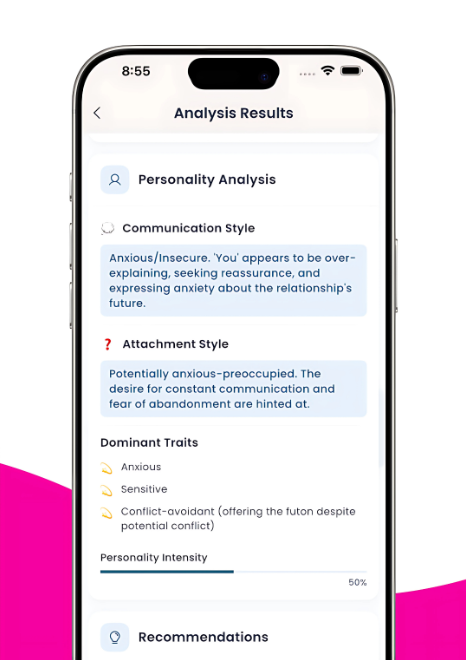

In essence, it's a mobile app currently in the works. According to its Kickstarter project description, its main function is simple: users can grant the app access to their chat exchanges or upload screenshots of them. The built-in AI model then scrutinizes the text and produces a relationship health report that flags potential 'red flags' (warning signs) and 'green flags' (positive indicators).

The project says it draws on natural language processing (NLP). Rather than merely matching keywords, the AI is meant to analyze the conversation's context, tone, emotional undertones, and communication patterns. For instance, it is described as able to detect emotional manipulation tactics such as 'gaslighting,' assess whether one party is consistently dodging communication, or judge from response frequency and word count whether both sides are investing equally.
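To make the last of those ideas concrete, here is a minimal sketch of how a balance check based on response frequency and word count could work. It is not the app's actual method, which the project has not published; the function names `investment_stats` and `balance_ratio`, the `(sender, text)` message format, and the sample chat are all hypothetical, chosen only for illustration.

```python
from collections import defaultdict


def investment_stats(messages):
    """Count messages and words per sender.

    `messages` is a list of (sender, text) tuples. This format is an
    assumption for the sketch, not something the app documents.
    """
    stats = defaultdict(lambda: {"messages": 0, "words": 0})
    for sender, text in messages:
        stats[sender]["messages"] += 1
        # Whitespace splitting is a crude word count; a real tool would
        # need proper tokenization, especially for non-English text.
        stats[sender]["words"] += len(text.split())
    return dict(stats)


def balance_ratio(stats, key):
    """Return min/max of a metric across senders (1.0 means perfectly even)."""
    values = [s[key] for s in stats.values()]
    if len(values) < 2 or max(values) == 0:
        return 1.0  # nothing to compare
    return min(values) / max(values)


# Hypothetical sample conversation.
chat = [
    ("A", "Hey, how was your day? I was thinking about our trip."),
    ("B", "fine"),
    ("A", "Want to grab dinner on Friday?"),
    ("B", "maybe"),
    ("A", "Okay, let me know!"),
]

stats = investment_stats(chat)
print("messages ratio:", round(balance_ratio(stats, "messages"), 2))
print("words ratio:", round(balance_ratio(stats, "words"), 2))
```

A ratio close to 1.0 would suggest that both sides contribute about equally, while a ratio near 0 would suggest that one side is doing most of the talking. Numbers like these say nothing about tone or intent, which is why the article's later cautions still apply.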

By precisely targeting the pain point of 'emotional insecurity,' Red Flag Scanner Ai has successfully garnered numerous backers and smoothly met its crowdfunding target. This at least indicates that the quest for a touch of emotional certainty in the digital age has found initial resonance and support among a significant number of people.

Tempting, isn't it? An always-available, utterly rational 'emotional relationship analyst' seems to offer a shortcut through the labyrinth of relationships. Yet when we hand our most intimate conversations and delicate emotions to an algorithm for judgment, several potential issues emerge. Here is what to keep in mind:

First and foremost, there is the privacy risk.

Your chat records hold some of your most personal and private information. When we upload these screenshots to a third-party app, we can't be sure whether the data will be securely protected, whether it will be reused to train models, or whether it might leak. At a time when data security matters so much, this is akin to dancing on the edge of a precipice.

Secondly, there's the risk of over-reliance and the erosion of skills.

If we rely on AI for everything, will we gradually lose our ability to think independently and perceive emotions? Human relationships are rife with ambiguity, exploration, and irrationality, which are precisely what make them captivating. Fully entrusting judgment to a cold algorithm might render us lazy, causing us to distrust our intuition and feelings, and ultimately making us more inept in real-life relationships.

Finally, we must be wary of algorithmic bias.

The AI's 'judgment criteria' are shaped by its developers and training data. Can this set of standards be applied universally across all cultural backgrounds, personality types, and gender combinations? An expression considered 'enthusiastic' in Culture A might be flagged as 'overly clingy' by an algorithm built on the norms of Culture B. A 'standard answer' defined by a select few could easily become a new, subtle form of bias that misguides our judgment.

Is an AI tool like Red Flag Scanner Ai truly feasible?

The answer may not be so straightforward. We needn't view it as a monster, nor should we treat it as gospel. The wisest approach is to use it as an 'auxiliary mirror' rather than the ultimate 'arbiter.'

When you're feeling lost in a relationship and can't see the situation clearly, the 'second opinion' provided by AI might help you step out of the emotional maelstrom and examine your communication patterns from a fresh, purely rational vantage point. This has its own merit.

However, algorithms can only analyze what has already happened; they cannot predict the future. They can interpret cold text, but they cannot feel genuine warmth.

Ultimately, no healthy relationship can bypass sincere communication, courageous exploration, and heartfelt emotion. AI can help you identify a 'gaslighting' phrase in your conversation, but it cannot replace the empowerment you feel when you muster the courage to say, 'I don't like the way you talk to me.'

In the end, the most potent and reliable 'Red Flag Scanner' has always resided within ourselves: our intuition, our feelings, and our genuine sense of whether we are thriving or wilting in a relationship.

So, here's the question:

Are you willing to seek AI's assistance in matters of the heart?