The Dilemma of Humanoid Robot Movement: Not About Being Soft or Hard, But About 'Collaborative Aphasia'

09/26 2025

There's a well-known saying in the industry: "A company focused on embodied intelligence that doesn't delve into hardware isn't truly competitive." The underlying logic is straightforward. To excel in software development, one must possess an in-depth understanding of hardware characteristics. Conversely, to push hardware to its limits, software must seamlessly integrate at the architectural and scheduling levels. This integration should be ingrained from the design phase. However, in reality, manufacturers frequently lament that "algorithm developers lack hardware insight, while hardware developers are oblivious to algorithms," leading to nearly independent operations between the two groups.

Editor: Lv Xinyi

What's impeding the movement of humanoid robots? Is it a hardware limitation or a software capability gap? This question has ignited debates within the industry.

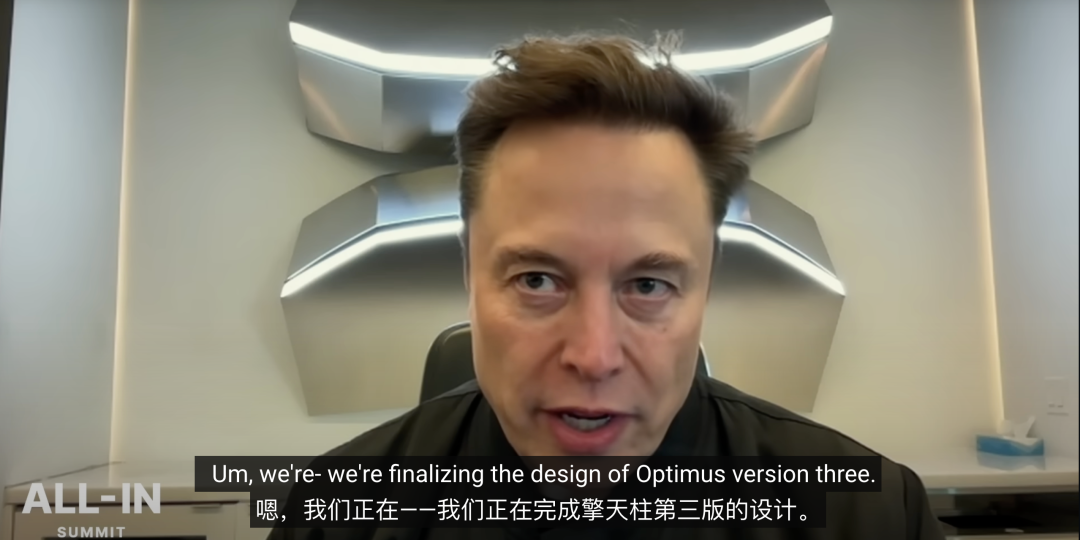

At the recent ALL-IN SUMMIT, Elon Musk was asked whether hardware or software posed the greater challenge for Optimus. He candidly admitted, "We're still grappling with the final hardware design." The host then asked whether, once the hardware challenges are resolved, advances in large language models (LLMs) would be enough to support natural human-robot interaction, with robots understanding instructions and carrying out tasks. Musk responded with confidence: "No problem."

Image Source: ALL-IN SUMMIT

In stark contrast to Musk's hardware anxieties, Wang Xingxing of Unitree Technology has repeatedly stated that "the hardware is adequate." Instead, he contends that the AI field resembles a "desert," where "effectively harnessing AI remains a formidable challenge." Wang argues that data and models are the current bottlenecks for robots: today's AI models are not yet capable enough to make robots truly useful and struggle, for example, to control dexterous hands with precision.

These seemingly contradictory viewpoints actually reflect the same underlying dilemma. The core issue may not stem from a lag in either "software" or "hardware" alone but rather from the absence of effective collaboration and integration between the two.

The solution should come not only from robot manufacturers but also from upstream suppliers.

Recently, the Embodied Intelligence Research Society engaged in discussions with Analog Devices, Inc. (ADI). As an upstream core hardware and solution provider, ADI's perspective transcends the binary opposition of software versus hardware, adopting a "collaborative" approach instead.

Chen Baoxing, an ADI Fellow and VP of Technology, stated that the crux lies in the deep integration of AI and hardware. "For instance, grasping an egg or another object necessitates optimization. The robot must understand the object's properties, apply the appropriate force, and prevent slipping. All these require seamless integration between hardware, software, AI, and control. I believe there's still a significant amount of work to be done."

This may explain why we rarely see agile, intelligent, and purposeful robots. The challenge extends beyond what software or hardware alone can resolve. Systematic collaboration between hardware and software may be the primary focus for future breakthroughs.

Is Hardware Truly Adequate?

Wang Xingxing's declaration at WRC that "current hardware is, in a sense, entirely sufficient" sparked significant industry debate. However, Wang immediately highlighted a common hardware challenge: "The bigger issue is mass-producing it (hardware)." Similarly, Elon Musk bluntly stated that humanoid robots lack a supply chain and must be designed from scratch, a major reason for his delayed production plans.

Thus, the first apparent hardware challenge is the "lack of standards." While thousands of reusable components from industrial and automotive fields exist, few are tailored specifically for robots. Simply put, hardware is usable but not optimized, leading to the industry perception that "hardware constrains software," hindering model deployment.

The root cause lies in two factors. Firstly, humanoid robots are in their infancy, and large suppliers are reluctant to invest in a product line with thin margins that does little to boost revenue. As a result, component mismatches, limited mass-production capacity, and yield rates become barriers. These problems are technically solvable, but the engineering work remains unfinished and slows robot deployment.

Secondly, differing technical approaches among humanoid robot manufacturers—whether in hardware design or "brain" models—have yet to converge. Mismatches between AI algorithms and hardware platforms create another obstacle: hardware "lacks AI capability."

In essence, hardware and software are overly decoupled, akin to a puppet with severed strings. The real challenge is the absence of "AI-native design," as emphasized by Chen Baoxing: "To accelerate innovation and deployment, AI and physical intelligence must integrate deeply."

Chen compares "AI and physical intelligence" to the "brain and body." AI serves as the "brain" for learning, reasoning, and decision-making, while physical intelligence acts as the "body" for perception, movement, and environmental interaction. Only through deep integration can machines become as agile, intelligent, and reliable as humans.

The core of physical intelligence lies in high-performance sensors. ADI's robotics team is integrating sensor and actuator models into NVIDIA's Isaac Sim platform so that simulation reflects real-world physical feedback. Control strategies trained this way are meant to be deployable on real hardware, which ADI presents as a Sim2Real breakthrough.
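To make the idea of an "actuator model" in simulation concrete, here is a minimal Python sketch. It is not ADI's model and does not use the Isaac Sim API; the class `DelayedActuator`, the helper `sample_actuator`, and all numeric ranges are hypothetical, chosen only for illustration. It shows two effects a simulated actuator might capture (command latency and torque saturation) and how their parameters could be randomized per training episode so a learned controller does not overfit to one idealized motor.

```python
import random
from collections import deque


class DelayedActuator:
    """Toy actuator: commands take effect after a fixed delay and are torque-limited."""

    def __init__(self, delay_steps, torque_limit):
        self.torque_limit = torque_limit
        # Pre-fill with zeros so the first outputs reflect the latency.
        self.queue = deque([0.0] * delay_steps)

    def step(self, commanded_torque):
        """Accept a new command and return the torque actually applied this step."""
        self.queue.append(commanded_torque)
        delayed = self.queue.popleft()
        return max(-self.torque_limit, min(self.torque_limit, delayed))


def sample_actuator(rng):
    """Domain randomization: draw fresh actuator parameters for each episode."""
    return DelayedActuator(
        delay_steps=rng.randint(1, 5),         # assumed 1 to 5 control steps of latency
        torque_limit=rng.uniform(20.0, 30.0),  # assumed spread, in N*m
    )


actuator = DelayedActuator(delay_steps=2, torque_limit=25.0)
outputs = [actuator.step(t) for t in (10.0, 40.0, 0.0, 0.0)]
print(outputs)  # [0.0, 0.0, 10.0, 25.0]
```

The 40 N·m command arrives two steps late and is clipped to 25 N·m. A policy trained against an ideal, instantaneous motor would never see either effect.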

ADI identifies two key points for physical intelligence, which is its current focus: first, compatibility with the "brain" (the central processing unit); second, tight integration with the "cerebellum" (functions related to spinal reflexes), including neuron-level features such as sensory and motor neurons and dexterity.

For instance, can motors and drivers execute AI's "non-standard" instructions swiftly and precisely? How can a joint instantly generate explosive force (e.g., jumping) while achieving fine force control (e.g., holding an egg)? This necessitates low-latency, high-bandwidth, high-precision hardware capable of multidimensional sensor data transmission (including touch) for edge-cloud communication and computation, all deeply adapted to AI algorithms.
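One common way to let a single joint be both forceful and gentle is impedance control, where a higher-level controller changes the joint's stiffness and damping rather than commanding torque directly. The sketch below is a generic illustration under that assumption, not a description of any vendor's drive. The names `ImpedanceGains` and `joint_torque` and the gain values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ImpedanceGains:
    stiffness: float  # N*m/rad, chosen by a higher-level policy (assumed)
    damping: float    # N*m*s/rad


def joint_torque(gains, q_des, q, dq_des, dq, torque_limit):
    """PD-style impedance law, clamped to the actuator's torque limit."""
    raw = gains.stiffness * (q_des - q) + gains.damping * (dq_des - dq)
    return max(-torque_limit, min(torque_limit, raw))


# Illustrative gain sets (assumed numbers, not taken from any datasheet).
JUMP = ImpedanceGains(stiffness=400.0, damping=8.0)  # stiff: large torque for small error
EGG = ImpedanceGains(stiffness=5.0, damping=0.5)     # compliant: gentle contact

error = 0.1  # rad of position error, joint at rest
jump_torque = joint_torque(JUMP, error, 0.0, 0.0, 0.0, torque_limit=30.0)
egg_torque = joint_torque(EGG, error, 0.0, 0.0, 0.0, torque_limit=30.0)
print(jump_torque, egg_torque)
```

With the same 0.1 rad error, the stiff setting saturates the actuator while the compliant setting asks for roughly 0.5 N·m. Switching between the two quickly and accurately is where sensing precision and loop latency in the hardware start to matter.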

Image Source: Tesla

Thus, the hardware issue extends beyond the "lack of industry standards" or specific metrics like "strength," "cost," "size," and "reliability." It centers on how AI can drive and control hardware efficiently, precisely, and with low latency, which is fundamentally a challenge of hardware-software co-design.

From "Full-Stack Hardware-Software" to "Hardware-Software Fusion"

For a long time, companies pursuing both hardware and software have been favored by capital markets. However, a misconception persists: "full-stack hardware-software" should be more than a public relations label. It should mean genuine "collaboration" and "fusion," akin to "AI-native hardware." Robots, as large pieces of AI hardware, must be designed and built around AI from the planning stage.

The industry adage "A company focused on embodied intelligence that doesn't delve into hardware isn't truly competitive" cuts both ways. To excel in software, one must possess an in-depth understanding of hardware, and to push hardware to its limits, software must integrate seamlessly at the architectural level. This fusion must be ingrained from the design phase. Yet, in reality, manufacturers frequently lament that "algorithm developers lack hardware insight, while hardware developers are oblivious to algorithms," so the two groups operate almost independently.

Hardware-software integration strategies have already yielded numerous successes in the smartphone sector, exemplified by Apple, Xiaomi, and Huawei. Even model vendors in the narrow sense are following the trend: OpenAI acquired io to build AI-native hardware, Meta is developing AI glasses, ByteDance is creating AI earphones, and DingTalk has launched AI recording hardware.

Currently, some embodied intelligence companies recognize the importance of fusion. These firms fall into two groups. The first group is aware of hardware-software collaboration and designs hardware with clear interfaces and divisions from the outset, which is the mainstream approach. These companies reserve development interfaces, design hardware configurations and sizes for specific scenarios, and install customized components and functional modules.

Of course, this is common practice. The second group aims at a higher-order state, "fusion," which breaks traditional hardware-software boundaries. Hardware is designed for software algorithms, and software is written for hardware characteristics.

Examples include designing dedicated computing chips and sensors for specific reinforcement learning algorithms; modeling hardware physical responses (e.g., elasticity, friction) in AI training; and optimizing hardware design to match the AI's decision-making frequency, such as enabling end-side chips to autonomously adjust tactile reflexes in the gaps between model inferences.
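The idea of a reflex that acts in the gaps between model inferences can be sketched as a two-rate loop: a slow policy refreshes a grip-force target, while a fast local loop reacts to slip signals in between. The Python below is a toy simulation under assumed rates (a policy update every 50 steps, one step per millisecond). `policy_target_force`, `run_grasp`, and every constant are hypothetical stand-ins, not any product's firmware.

```python
def policy_target_force(step):
    """Stand-in for a slow AI policy: returns a grip-force target in newtons."""
    return 1.0  # hypothetical constant target for a fragile object


def run_grasp(slip_events, policy_period=50, total_steps=200,
              reflex_boost=0.2, max_force=2.0):
    """Simulate a fast reflex loop running between slow policy updates.

    slip_events: set of step indices at which a tactile sensor reports slip.
    Returns the commanded grip force at every step.
    """
    force = 0.0
    history = []
    for step in range(total_steps):
        if step % policy_period == 0:
            # Slow path: the policy only refreshes its target every policy_period steps.
            force = policy_target_force(step)
        if step in slip_events:
            # Fast path: tighten the grip right away, capped to avoid crushing the object.
            force = min(max_force, force + reflex_boost)
        history.append(force)
    return history


forces = run_grasp(slip_events={60, 61})
print(forces[59], forces[60], forces[61], forces[100])
```

In this run the slip at steps 60 and 61 is handled within one step each, whereas the policy would not have reacted until step 100. In the sketch the policy simply overwrites the reflex's adjustment at its next update. A real system would more likely feed the tactile state back into the policy.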

Currently, only a few industry leaders have begun exploring "fusion," with most companies still not reaching the "collaboration" stage.

Thus, hardware-software fusion will be a competitive edge and new opportunity for embodied intelligence companies, driving further industry adoption. However, this responsibility doesn't solely rest on robot manufacturers; upstream suppliers must also contribute.

For instance, ADI, as an upstream vendor, focuses on four key areas to give machines thought, touch, and action: sensing, connectivity, interpretation, and control. These areas tie together hardware-software collaboration in robots.

In sensing, robots require visual and tactile capabilities, leveraging "multimodal perception fusion" to judge object shapes and enhance dexterity. In connectivity, robots need high-speed, stable "neural network" links. Interpretation involves analyzing raw sensor data and component dynamics. Control, acting as the robot's "cerebral cortex," handles motion planning and execution, with AI-driven algorithms enabling multi-joint coordination and complex movements.

We can observe significant gaps—and opportunities—at the "interface" between hardware and software.

The development of humanoid robots will undoubtedly be a journey of hardware-software co-evolution. It's not about hardware catching up to software or vice versa but about the two shaping each other, coupling, and becoming inseparable, like the brain and body in biology. Future breakthroughs will depend on our ability to accelerate this "co-evolution" through technological innovation and engineering wisdom.