The Tripartite Struggle in Computing Power: NVIDIA, Oracle, and OpenAI's Trillion-Dollar Chess Game

09/26 2025

When NVIDIA announced its $100 billion investment in OpenAI in Silicon Valley, the entire tech community sought to understand the logic behind such an astronomical figure. This sum, equivalent to the global chip industry's annual R&D investment, is poised to reshape the power dynamics of the AI industry while also igniting a multi-trillion-dollar infrastructure arms race. In this contest, NVIDIA, Oracle, and OpenAI have formed a 'Tripartite Struggle in Computing Power' defined by both cooperation and mutual restraint, with each move redrawing the future boundaries of artificial intelligence. What lies behind this massive funding?

NVIDIA's Closed-Loop Empire: A $100 Billion 'Self-Investment' Game

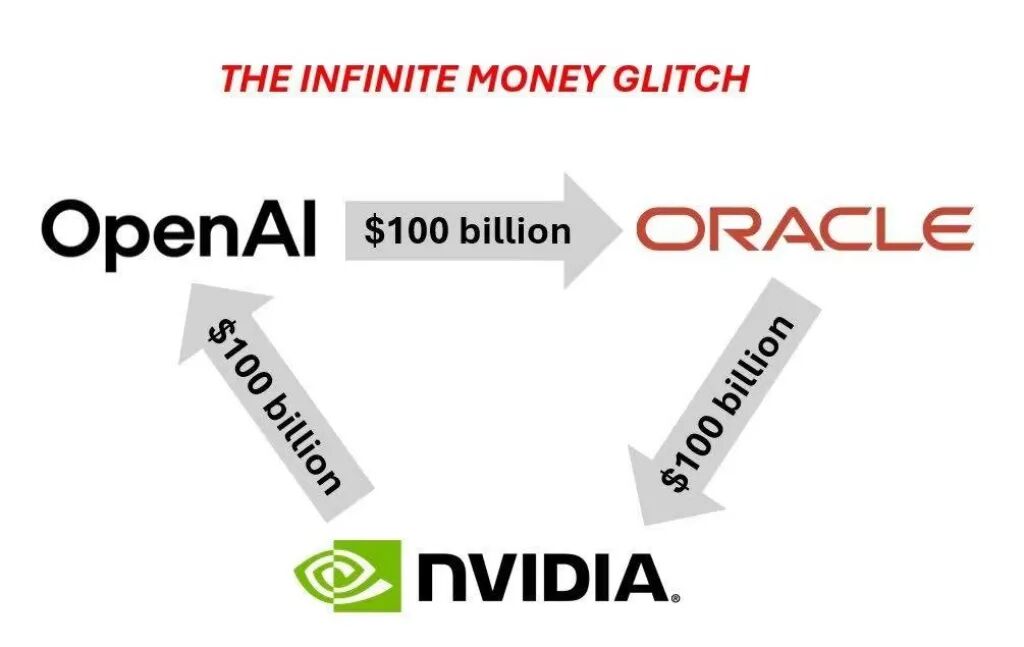

'NVIDIA invests $100 billion in OpenAI, which then returns the money to NVIDIA.' This comment from Requisite Capital Management partner Bryn Talkington cuts to the heart of the matter. This seemingly paradoxical capital maneuver is, in fact, Jensen Huang's masterstroke in building a computing power empire. According to the agreement, the investment will be disbursed in stages as each gigawatt of computing infrastructure is completed. Each gigawatt represents the deployment of approximately 400,000–500,000 GPUs, so the full 10-gigawatt build-out comes to 4–5 million GPUs, which, as Huang puts it, equals the company's entire chip shipment volume for this year and is double last year's.
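As a rough back-of-the-envelope check on these figures, the sketch below splits the $100 billion evenly across the 10-gigawatt project size cited later in this article and scales the per-gigawatt GPU range to the whole project. The even split and all function names are assumptions made for illustration; the actual disbursement schedule is not given here.

```python
# Figures quoted in the article.
TOTAL_INVESTMENT_USD = 100e9        # NVIDIA's announced investment
PROJECT_GIGAWATTS = 10              # OpenAI project size cited in the article
GPUS_PER_GW = (400_000, 500_000)    # approximate GPU range per gigawatt


def tranche_per_gigawatt(total_usd, gigawatts):
    """Assumption: the investment is released in equal parts per gigawatt."""
    return total_usd / gigawatts


def total_gpus(gigawatts, per_gw=GPUS_PER_GW):
    """Scale the per-gigawatt GPU range to the whole project."""
    low, high = per_gw
    return gigawatts * low, gigawatts * high


def watts_per_gpu(per_gw=GPUS_PER_GW):
    """Facility power per GPU implied by 1 GW (1e9 W) per batch of GPUs."""
    low, high = per_gw
    # More GPUs per gigawatt means less power available per GPU.
    return 1e9 / high, 1e9 / low


if __name__ == "__main__":
    tranche = tranche_per_gigawatt(TOTAL_INVESTMENT_USD, PROJECT_GIGAWATTS)
    gpus_low, gpus_high = total_gpus(PROJECT_GIGAWATTS)
    w_low, w_high = watts_per_gpu()
    print(f"Tranche per gigawatt: ${tranche / 1e9:.0f} billion")
    print(f"GPUs for the full project: {gpus_low:,} to {gpus_high:,}")
    print(f"Facility power per GPU: {w_low:.0f} W to {w_high:.0f} W")
```

Under these assumptions, each completed gigawatt would release about $10 billion, the full project would need 4 to 5 million GPUs, and each GPU would account for roughly 2 to 2.5 kW of facility power, a figure that includes cooling and networking overhead rather than the chip alone.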

This 'mutual dependency' cooperation model forms a neat commercial closed loop: NVIDIA secures long-term orders from its largest customer, OpenAI, through the investment, while OpenAI gains critical funding and technical support for building next-generation AI infrastructure. More significantly, the two sides will jointly optimize their hardware and software roadmaps, meaning future OpenAI large models will be deeply integrated with NVIDIA's chip architectures, creating a technical barrier that competitors will find difficult to breach.

NVIDIA's confidence in this bet stems from its upcoming Vera Rubin platform. Dubbed by Huang as 'another leap forward at the frontier of AI computing,' the system delivers 8 exaFLOPS of AI computing power per rack—7.5 times that of the previous generation. Its new Rubin CPX GPU, designed for long-context reasoning, supports processing millions of tokens of knowledge at once, a core capability needed for training next-generation Artificial General Intelligence (AGI). When the first Vera Rubin-based systems deploy in the second half of 2026, they will power OpenAI's model evolution.

But NVIDIA's ambitions extend further. A week before announcing the OpenAI investment, the chip giant also spent $5 billion acquiring a stake in Intel, with the two companies collaborating on data center and PC products. This 'dual-play' strategy aims to build a more complete ecosystem—by integrating Intel's x86 CPU advantages, NVIDIA is addressing its weaknesses in general-purpose computing, offering customers like OpenAI a one-stop solution from chips to systems. Facing challenges from AMD and cloud vendors' in-house chips, NVIDIA is using capital and technical ties to solidify its dominance in AI computing power.

Oracle's Comeback: The Cloud Infrastructure Ambitions Behind a $300 Billion Contract

When Larry Ellison briefly became the world's richest person amid Oracle's soaring stock price, few realized the traditional software giant had quietly secured a pivotal position in the AI infrastructure race. Oracle's five-year, $300 billion cloud services contract with OpenAI, starting in 2027, not only pushed the company's remaining performance obligations (RPO) to $455 billion but also established it as a force to be reckoned with in AI infrastructure.

Oracle's comeback is no accident. After OpenAI's exclusive partnership with Microsoft loosened, the database and enterprise software giant seized the opportunity. The initial $3 billion cloud services contract was a trial run, while the subsequent $300 billion mega-order cemented its status as OpenAI's core partner. Unlike NVIDIA's chip focus, Oracle provides full-stack services from data center construction to cloud platform operations, a differentiated positioning that complements rather than competes with NVIDIA.

More noteworthy is Oracle's role in the 'Stargate' project. Backed by the Trump administration and planned as a $500 billion AI infrastructure initiative, the project was initially seen as a U.S. strategy to counter global AI competition. Progress has been slower than expected: the near-term goal was scaled back from an immediate $100 billion investment pledge to just one data center by late 2025. Even so, Oracle remains responsible for eight data centers in Abilene, Texas, expected to be fully completed by late 2026. These facilities will operate alongside OpenAI's other infrastructure projects, forming crucial nodes in its computing network.

However, Oracle's high-stakes gamble carries risks. The company reported negative free cash flow of $394 million in fiscal 2025, yet it has projected that cloud infrastructure revenue will climb from $18 billion to $144 billion in the coming years. This aggressive growth forecast relies heavily on business expansion from clients like OpenAI, leaving Oracle exposed to significant performance pressure if the AI industry undergoes a cyclical downturn. But for Ellison, this is a must-win bet: having missed opportunities in the cloud era, Oracle is attempting a comeback via AI infrastructure.
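To put the scale of this forecast in perspective, the following sketch computes the implied revenue multiple and compound annual growth rate, and compares the average annual value of the OpenAI contract with current cloud infrastructure revenue. The four-year horizon is a hypothetical stand-in for 'the coming years', and spreading the $300 billion contract evenly over its five years is likewise an assumption.

```python
# Figures quoted in the article (billions of USD).
CURRENT_CLOUD_REVENUE_BN = 18
TARGET_CLOUD_REVENUE_BN = 144
OPENAI_CONTRACT_BN = 300
CONTRACT_YEARS = 5

# Hypothetical: the article only says "in the coming years".
ASSUMED_HORIZON_YEARS = 4


def growth_multiple(start, end):
    """How many times larger the target is than the starting value."""
    return end / start


def cagr(start, end, years):
    """Compound annual growth rate needed to go from start to end."""
    return (end / start) ** (1 / years) - 1


if __name__ == "__main__":
    multiple = growth_multiple(CURRENT_CLOUD_REVENUE_BN, TARGET_CLOUD_REVENUE_BN)
    rate = cagr(CURRENT_CLOUD_REVENUE_BN, TARGET_CLOUD_REVENUE_BN,
                ASSUMED_HORIZON_YEARS)
    # Assumption: contract value is recognized evenly across its term.
    annual_contract_bn = OPENAI_CONTRACT_BN / CONTRACT_YEARS
    print(f"Revenue multiple: {multiple:.0f}x")
    print(f"Implied CAGR over {ASSUMED_HORIZON_YEARS} years: {rate:.1%}")
    print(f"Average annual OpenAI contract value: ${annual_contract_bn:.0f} billion")
    print(f"Contract value vs. current revenue: "
          f"{annual_contract_bn / CURRENT_CLOUD_REVENUE_BN:.1f}x")
```

The target is an eightfold increase, which over an assumed four years means growing by roughly two thirds every year. On an even spread, the OpenAI contract alone would average $60 billion a year, more than three times the current $18 billion revenue base, which illustrates how concentrated the forecast is on a single client.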

OpenAI's Balancing Act: Building Computing Autonomy Amid Tech Giants

'We must excel at three things: outstanding AI research, products people need, and solving unprecedented infrastructure challenges.' Sam Altman's words encapsulate OpenAI's current core strategy. Facing computing demands from 700 million weekly active users and the long road to AGI, the AI pioneer is carefully assembling a computing network among competing tech giants, aiming to secure sufficient capacity without sacrificing technological autonomy.

OpenAI's strategy centers on 'multi-vendor checks and balances.' From initially relying solely on Microsoft Azure's cloud services to now establishing deep collaborations with NVIDIA, Oracle, Microsoft, and others, its approach reflects profound considerations for computing security. The $100 billion partnership with NVIDIA secures chip supply, Oracle's $300 billion contract provides cloud infrastructure support, and the non-binding memorandum with Microsoft maintains strategic flexibility. This 'not putting all eggs in one basket' tactic gives OpenAI greater leverage in negotiations.

This balancing act is most evident in OpenAI's insistence on AGI control. Reports indicate that negotiations with Microsoft centered on 'AGI clauses'—whether Microsoft could continue sharing profits upon achieving AI surpassing human intelligence. Through its unique nonprofit parent company structure, OpenAI ensures all safety-related decisions adhere to the principle of 'benefiting humanity,' a governance model that allows it to maintain control over core technological directions while accepting massive investments.

However, the enormous infrastructure investments have also burdened OpenAI. The company is projected to burn $115 billion in cash by 2029, with server leasing costs alone reaching $100 billion in 2030. This 'burn rate' demands that OpenAI balance technological breakthroughs with commercial viability. Fortunately, its growing user base provides a foundation for monetization, while spreading its computing across several suppliers reduces the risk of any single partner overcharging.

The Energy Crisis and Industry Reshuffling Amid the Infrastructure Boom

When Jensen Huang predicted global AI infrastructure spending would reach $3–4 trillion by the end of the decade, he not only revealed a massive market opportunity but also highlighted severe challenges facing the industry. This unprecedented infrastructure boom is encountering constraints from energy supply, environmental costs, and geopolitics—factors that may determine the AI race's final outcome.

Energy consumption has become the most urgent bottleneck. International Energy Agency data shows global data center electricity consumption reached 415 terawatt-hours in 2024, accounting for 1.5% of global power use, and is projected to surge to 945 terawatt-hours by 2030—exceeding Japan's current annual consumption. A typical AI data center consumes as much electricity as 100,000 households, while OpenAI's 10-gigawatt project alone has power demands sufficient to supply millions of households. This level of energy need is reshaping global energy dynamics—BloombergNEF predicts renewable energy generation will spike 84% over the next five years due to AI demand.
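The energy figures above can be cross-checked with simple unit conversions. The sketch below is illustrative only: it assumes the 10-gigawatt load runs continuously all year, and the 10,000 kWh per household per year figure is a hypothetical round number, not one taken from the article.

```python
HOURS_PER_YEAR = 8760  # 365 days * 24 hours

# Figures quoted in the article.
DATA_CENTER_TWH_2024 = 415
DATA_CENTER_TWH_2030 = 945
DATA_CENTER_SHARE_2024 = 0.015   # 1.5% of global electricity use
OPENAI_PROJECT_GW = 10

# Hypothetical round figure for one household's annual consumption.
ASSUMED_KWH_PER_HOUSEHOLD = 10_000


def annual_twh(gigawatts, utilization=1.0):
    """Yearly energy in TWh for a constant load given in gigawatts."""
    return gigawatts * HOURS_PER_YEAR * utilization / 1000  # GWh -> TWh


def households_supplied(twh, kwh_per_household):
    """Number of households that a yearly energy budget could supply."""
    return twh * 1e9 / kwh_per_household  # 1 TWh = 1e9 kWh


def cagr(start, end, years):
    """Compound annual growth rate needed to go from start to end."""
    return (end / start) ** (1 / years) - 1


if __name__ == "__main__":
    project_twh = annual_twh(OPENAI_PROJECT_GW)
    homes = households_supplied(project_twh, ASSUMED_KWH_PER_HOUSEHOLD)
    growth = cagr(DATA_CENTER_TWH_2024, DATA_CENTER_TWH_2030, 2030 - 2024)
    implied_global_twh = DATA_CENTER_TWH_2024 / DATA_CENTER_SHARE_2024
    print(f"10 GW running all year: {project_twh:.1f} TWh")
    print(f"Households supplied: {homes / 1e6:.2f} million")
    print(f"Implied data center growth rate: {growth:.1%} per year")
    print(f"Implied global consumption in 2024: {implied_global_twh:,.0f} TWh")
```

With continuous operation and the assumed household figure, 10 GW works out to about 87.6 TWh a year, enough for roughly 8.8 million households, which is consistent with the 'millions of households' claim. The rise from 415 to 945 terawatt-hours corresponds to growth of a little under 15% per year.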

Companies are adopting vastly different energy strategies. Meta's Hyperion data center in Louisiana partners with nuclear plants to provide 5 gigawatts of computing power, while Musk's xAI data center in Tennessee, using natural gas turbines, has become the region's largest polluter, allegedly violating the Clean Air Act. This contrast underscores AI infrastructure's environmental ethical dilemma: how to balance growth with sustainability amid explosive computing demand remains unresolved.

Geopolitical factors are also profoundly influencing where infrastructure is built. The U.S. accounts for 45% of global data center electricity consumption, and data centers are expected to contribute nearly 50% of the growth in national electricity demand. This makes AI infrastructure a national security issue: the political backing of the 'Stargate' project and NVIDIA's strategic investment in Intel both reflect efforts to maintain U.S. dominance in AI chips and data centers. Globally, data centers have become new battlegrounds for technological sovereignty, potentially exacerbating supply chain fragmentation.

Industry reshuffling is inevitable. As infrastructure thresholds rise dramatically, smaller AI firms will increasingly struggle to afford model training and deployment costs, concentrating resources further among giants like OpenAI and Meta. Meanwhile, traditional tech companies' fates are being rewritten—Oracle is leveraging AI infrastructure for a comeback, Intel is seeking revival through NVIDIA partnerships, while those unable to keep pace face elimination. This computing power arms race is reshaping the entire tech industry's power structure.

Computing Power as the New Civilization Pact

Examining the intricate relationships among NVIDIA, Oracle, and OpenAI, along with the global AI infrastructure boom, reveals not just commercial rivalries but a reconstruction of future civilization's foundations. From Huang's 'computing power as the new oil' to Altman's 'infrastructure will become the economy's foundation,' tech leaders increasingly agree: whoever controls AI infrastructure holds the key to the future.

This multi-trillion-dollar gamble is essentially laying the physical groundwork for the AI era. Every data center completion, every chip performance breakthrough, and every giant partnership agreement is defining the basic rules of AI's interaction with human society. Like railways and power grids during the Industrial Revolution, today's AI infrastructure will shape technological paths and economic landscapes for decades.

Yet, rationality must underpin technological acceleration. When data center electricity consumption surpasses traditional high-energy industries like steel and cement, sustainable development models become imperative. As a few tech giants concentrate increasingly powerful computing resources, ensuring technological inclusivity rather than exacerbating inequality warrants vigilance. When AI infrastructure becomes a geopolitical tool, international collaboration and regulatory frameworks grow urgent.

The 'Tripartite Struggle in Computing Power' among NVIDIA, Oracle, and OpenAI continues, with each move scripting a new civilization pact. The ultimate winners of this game will need not just capital and technological hard power but also wisdom to balance commercial interests, social values, and environmental sustainability. After all, a true AI revolution requires not only formidable computing power but also a people-centric development philosophy—a principle all participants should remember.