Why Does Uncertainty Exist in End-to-End Large Models for Autonomous Driving?

09/28 2025

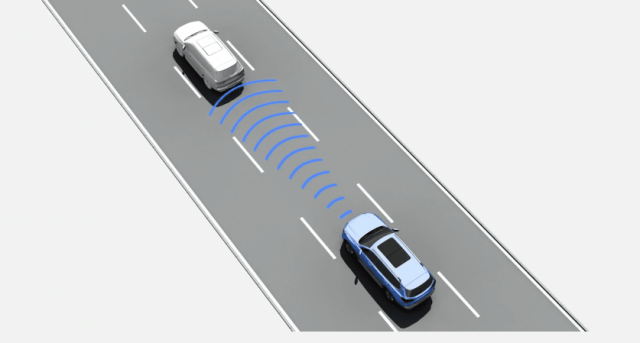

When the topic of autonomous driving comes up, the first thing that often springs to mind is whether the system can "keep the vehicle running smoothly." While this may seem like a straightforward objective, it actually places stringent demands on the system's capacity to make accurate and predictable decisions in a variety of real-world situations. To equip autonomous vehicles with the ability to perform driving actions that are correct, safe, and logical, end-to-end large models have been introduced.

End-to-end large models handle the entire pipeline from sensor input to control output with a single large network. Their main advantage is that they learn complex mappings directly and do away with cumbersome intermediate modules. However, this comes at the expense of making the system's behavior harder to predict and verify, which gives rise to what is known as "uncertainty." Here, "uncertainty" refers to situations where, given identical or similar inputs, the model might generate ambiguous, inconsistent, or incorrect outputs, while the likelihood and root cause of such errors remain hard to quantify. So, why does uncertainty arise in end-to-end large models for autonomous driving?

What Exactly Constitutes Uncertainty in End-to-End Large Models?

The term "uncertainty" may sound abstract, but it becomes easier to grasp once it is broken down. For end-to-end large models, part of the uncertainty originates from the data itself. Some scenarios are inherently difficult to assess. For instance, distant objects may appear blurry during heavy rain, or strong backlighting at night might cause pedestrians to blend into the background. Even humans would hesitate in such situations. This unavoidable noise is referred to as statistical noise or "irreducible noise." Another source of uncertainty stems from the model's lack of knowledge, such as when it encounters extreme or rare scenarios not covered in the training data. Models often respond confidently but incorrectly to inputs they have "never seen before," which exposes their "cognitive blind spots." In technical terms, these two types are commonly referred to as "aleatoric uncertainty" (randomness inherent in the data) and "epistemic uncertainty" (uncertainty due to limited model knowledge). The "uncertainty" in end-to-end large models encompasses both aspects and can be further exacerbated by factors such as model architecture, sample bias, training objectives, and optimization instability.
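
One common way to make the two types concrete is to look at an ensemble of models that each predict a mean and a variance for the same input. The sketch below is illustrative only and not taken from any particular system: the function name `decompose_uncertainty` and the steering-angle numbers are assumptions. It treats the average predicted variance as the aleatoric part and the spread of the predicted means as the epistemic part.

```python
from statistics import fmean, pvariance


def decompose_uncertainty(means, variances):
    """Split an ensemble's predictive variance into two parts.

    means[i] and variances[i] are the mean and variance predicted by
    ensemble member i for the same input (hypothetical steering-angle
    regressors, values invented for illustration).
    """
    aleatoric = fmean(variances)   # average noise the members attribute to the data
    epistemic = pvariance(means)   # disagreement between the members
    return aleatoric, epistemic, aleatoric + epistemic


# Familiar scene: the members agree with each other.
familiar = decompose_uncertainty([0.10, 0.11, 0.09], [0.01, 0.01, 0.01])

# Unfamiliar scene: same per-member noise, but the members disagree strongly.
unfamiliar = decompose_uncertainty([-0.30, 0.10, 0.45], [0.01, 0.01, 0.01])

print("familiar  :", familiar)
print("unfamiliar:", unfamiliar)
```

In this toy setup the aleatoric term is identical for both scenes, while the epistemic term is far larger for the unfamiliar one, which is the signature of a "cognitive blind spot."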

The output of end-to-end models is often not "a single, clear action" but rather a probability distribution or a continuous value mapped directly to control variables. If the model's probability estimates are unreliable (a phenomenon known as "miscalibration"), the system may report high confidence in decisions that are in fact unreliable and dangerous. Another crucial factor is the closed temporal loop. Autonomous driving is not a static process of classifying a single image frame and then stopping; rather, it involves continuous decision-making, where vehicle actions feed back into the environment to generate new inputs. Errors can accumulate and amplify, causing small-probability deviations to evolve into severe consequences. Therefore, the uncertainty in end-to-end models is not a single-frame issue but a closed-loop, system-level source of risk.
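
The accumulation effect can be illustrated with a deliberately simple toy model. The sketch below assumes a vehicle moving at constant speed whose policy has a small, constant yaw-rate bias that nothing corrects; `simulate_drift`, the speed, the time step, and the bias value are all hypothetical choices made for illustration, not parameters of a real system.

```python
import math


def simulate_drift(steps, yaw_rate_bias, speed=15.0, dt=0.1):
    """Integrate a constant, uncorrected yaw-rate bias (rad/s) into lateral offset (m)."""
    heading, lateral = 0.0, 0.0
    offsets = []
    for _ in range(steps):
        heading += yaw_rate_bias * dt              # each step's error changes the next state
        lateral += speed * dt * math.sin(heading)  # heading error turns into lateral drift
        offsets.append(lateral)
    return offsets


# 50 steps of 0.1 s = 5 s of driving at 15 m/s with a 0.01 rad/s bias.
offsets = simulate_drift(steps=50, yaw_rate_bias=0.01)
print(f"offset after first step: {offsets[0]:.4f} m")
print(f"offset after 5 seconds : {offsets[-1]:.4f} m")
```

Under these assumptions, a per-step error that looks negligible in a single frame grows into a lateral offset of roughly 1.9 m after five seconds, because each small deviation becomes the starting point for the next step.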

Why Are End-to-End Architectures More Susceptible to Exposing or Amplifying Uncertainty?

Combining perception, prediction, planning, and control into a single large network may seem to enhance efficiency, but it introduces several challenges that amplify uncertainty. The first challenge is poor interpretability. In modular systems, when a vehicle deviates from its trajectory, you can investigate whether the cause was a missed detection, a trajectory prediction error, or a control execution delay. The internal representations of end-to-end models, by contrast, are high-dimensional and difficult to interpret intuitively. When issues arise, engineers struggle to pinpoint the root cause and therefore find it hard to implement targeted fixes. The second challenge is increased verification difficulty. Traditional modules have clearly defined roles and metrics (e.g., detection recall rate, trajectory prediction error), allowing for itemized verification. The overall performance of end-to-end models, however, relies heavily on "end-to-end scenario coverage," and statistical metrics alone make it difficult to prove safety across all long-tail scenarios. The third challenge is the enormous data demand and strong dependence on data distribution. End-to-end models require massive, diverse samples to learn the mapping from "pixels to actions." If the training set deviates from the actual operating environment (distribution shift), model performance may plummet. The fourth challenge is the risk of overconfidence. Without proper uncertainty estimation, deep models often assign high confidence to incorrect outputs, which is highly dangerous in safety-critical systems.

End-to-end models often optimize for empirical risk (e.g., average loss), causing them to perform exceptionally well in common scenarios. However, their performance may degrade significantly in rare but high-stakes scenarios where errors have severe consequences. The risks in autonomous driving are not uniformly distributed across all scenarios; long-tail scenarios (complex intersections, extreme weather, abnormal road infrastructure, etc.) are rare but more dangerous. Therefore, simply optimizing for average metrics underestimates real-world safety risks.
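
To see why an average can hide tail risk, the sketch below compares the mean loss with a tail-focused statistic, the conditional value at risk (CVaR), on two invented loss profiles. CVaR is swapped in here purely as an illustration of a tail metric; the article does not prescribe it, and the loss values and the names `model_a` and `model_b` are assumptions.

```python
from statistics import fmean


def cvar(losses, alpha=0.95):
    """Mean of the worst (1 - alpha) fraction of losses (at least one sample)."""
    ordered = sorted(losses, reverse=True)
    k = max(1, round(len(ordered) * (1 - alpha)))
    return fmean(ordered[:k])


# Hypothetical per-scenario losses.
# Model A: excellent on 98 routine scenarios, fails badly on 2 long-tail ones.
model_a = [0.05] * 98 + [5.0] * 2
# Model B: slightly worse on routine scenarios, no catastrophic failures.
model_b = [0.18] * 100

for name, losses in (("A", model_a), ("B", model_b)):
    print(f"model {name}: mean={fmean(losses):.3f}  CVaR95={cvar(losses):.3f}")
```

With these numbers, model A wins on average loss while model B is far better on the worst 5% of scenarios, which is the gap that average-only optimization fails to expose.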

What Specific Impacts Does Uncertainty Have on Autonomous Driving?

Uncertainty in end-to-end large models affects the safety, user experience, and deployment costs of autonomous driving systems at multiple levels. In unseen or ambiguous scenarios, end-to-end models may make incorrect steering or acceleration decisions. Without reliable confidence estimates and safety fallback strategies, such errors can directly lead to collisions or accidents.

Unpredictable behavior also erodes trust from the public and regulatory agencies. Even if the average accident rate is low, unexplainable incidents can lead regulators, vehicle owners, and the public to question system safety, hindering road approval and commercialization. Additionally, operational and maintenance costs increase. Uncertainty necessitates extensive real-world data collection, replay analysis, data annotation, and more frequent software updates and regression testing, all of which significantly raise long-term investments.

For the user experience, uncertainty often manifests as inconsistent or overly conservative vehicle behavior. To avoid accidents, the system may tend to brake or reduce speed when uncertain, making passengers feel that the autonomous vehicle drives "indecisively," which affects comfort and traffic efficiency. In other uncertain situations, the system may become overly confident and fail to decelerate or avoid obstacles appropriately. Such "confident but incorrect" behavior is even more dangerous. For commercial products, striking a balance between excessive conservatism (which impairs usability) and recklessness (which impairs safety) requires fine-grained quantification and engineering control of uncertainty.

What Methods Exist to Measure and Estimate Uncertainty?

Given that uncertainty in end-to-end large models is inevitable, are there methods to quantify and monitor it? Multiple approaches exist. The first checks the calibration of the model's probabilistic outputs, for example by using Expected Calibration Error (ECE) to assess whether the model's confidence aligns with its actual accuracy. Misalignment indicates unreliable output probabilities. The second approach uses ensemble or Bayesian methods to estimate epistemic uncertainty. A simple method is model ensembling: multiple models are trained with different random seeds or data subsets, and their outputs are compared. Greater disagreement indicates higher model uncertainty. More formal methods include Bayesian neural networks and Bayesian approximations (e.g., MC Dropout), which provide posterior uncertainty estimates. The third approach develops specialized anomaly detection or out-of-distribution detection modules to identify inputs that differ significantly from the training distribution. These methods can issue warnings like "I do not recognize this scenario" during online operation. The fourth approach trains the model to output its own uncertainty estimate, such as through evidential deep learning or by predicting distribution parameters (e.g., simultaneously predicting mean and variance during regression), embedding uncertainty into the model's outputs.
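
As a concrete reference for the first approach, the following is a minimal sketch of ECE with equal-width confidence bins. The function name, the bin count, and the two toy prediction sets are assumptions made for illustration; production code would typically operate on arrays of real validation predictions.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """ECE: bin-size-weighted mean of |accuracy - mean confidence| over equal-width bins."""
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)  # confidence 1.0 falls into the last bin
        bins[idx].append((conf, ok))

    total = len(confidences)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        ece += len(members) / total * abs(accuracy - avg_conf)
    return ece


# Overconfident toy model: claims 95% confidence but is right 6 times out of 10.
overconfident = expected_calibration_error([0.95] * 10, [True] * 6 + [False] * 4)

# Calibrated toy model: claims 80% confidence and is right 8 times out of 10.
calibrated = expected_calibration_error([0.8] * 10, [True] * 8 + [False] * 2)

print(f"overconfident ECE: {overconfident:.2f}")
print(f"calibrated ECE   : {calibrated:.2f}")
```

The overconfident case yields an ECE of about 0.35 (the gap between 0.95 and 0.6), while the calibrated case is close to zero.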

However, it is important to note that measurement does not equate to usability. Many uncertainty estimation methods perform well on academic datasets but lose accuracy under the dynamic closed-loop conditions of real vehicles. Therefore, in addition to these estimation techniques, system-level evaluations are necessary to verify their reliability in real-world operations and their effectiveness in aiding safety decisions.

How Do Decision-Making and Control Systems Handle Uncertainty Information?

Even if uncertainty can be estimated, the core challenge for autonomous driving remains how to effectively transmit this information to decision-making and control systems. A straightforward approach is for the planner to treat uncertainty as an additional "cost" or risk factor. When perception or prediction confidence decreases, the planner executes more conservative trajectories, reduces speed, or increases lateral spacing. While this reduces accident probabilities in many cases, it may also lead to decreased efficiency. In a more systematic framework, driving can be viewed as a Partially Observable Markov Decision Process (POMDP), where a Bayesian filter maintains a "belief state," and planning considers both state uncertainty and goal-related reward/risk trade-offs. Although theoretically complete POMDP solutions can balance uncertainty and decision-making, they are computationally intensive and complex to implement in real vehicles. Therefore, approximate methods or Model Predictive Control (MPC) combined with uncertainty bounds are commonly used for risk control.
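
The "uncertainty as an additional cost" idea can be sketched as a trajectory selector whose risk weight grows with the reported uncertainty. Everything in the example is hypothetical: the `Candidate` fields, the weighting formula, and the numbers were chosen only to show the mechanism, and a real planner would use far richer cost terms.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    travel_cost: float     # e.g. time to goal; lower is better
    collision_risk: float  # estimated probability of a conflict on this trajectory


def select_trajectory(candidates, uncertainty, base_weight=10.0):
    """Pick the candidate minimizing travel cost plus an uncertainty-scaled risk penalty."""
    # Assumed weighting rule: the risk term counts more as uncertainty rises.
    risk_weight = base_weight * (1.0 + 10.0 * uncertainty)
    return min(candidates, key=lambda c: c.travel_cost + risk_weight * c.collision_risk)


candidates = [
    Candidate("keep_speed", travel_cost=1.0, collision_risk=0.05),
    Candidate("slow_down", travel_cost=2.0, collision_risk=0.01),
]

confident_choice = select_trajectory(candidates, uncertainty=0.0)
uncertain_choice = select_trajectory(candidates, uncertainty=0.5)
print(confident_choice.name, uncertain_choice.name)
```

With low uncertainty the efficient trajectory wins; once uncertainty rises, the same candidates are re-ranked in favor of the conservative one. This also makes the efficiency trade-off explicit, since the weighting rule decides how early the planner gives up speed.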

Regardless of decision complexity, a set of hard safety constraints (such as limiting the maximum yaw rate or defining minimum safe distances for emergency stops) should be built in. Even if the primary model makes errors, these rules can take over in extreme cases, forming what is known as runtime assurance. In hybrid architectures, a common practice is to use the end-to-end model as a suggester while employing traditional control or rule-based modules as the final safety filter.
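
A runtime-assurance filter of this kind might look like the sketch below, which clamps the proposed yaw rate and overrides acceleration with full braking when the gap to the obstacle ahead is shorter than a simple stopping distance plus a margin. The `Command` type, the stopping-distance formula, and all limits (`max_yaw_rate`, `max_decel`, `reaction_time`, `margin`) are illustrative assumptions, not values from any standard.

```python
from dataclasses import dataclass


@dataclass
class Command:
    yaw_rate: float      # rad/s
    acceleration: float  # m/s^2, negative values brake


def stopping_distance(speed, max_decel, reaction_time):
    """Distance covered during the reaction delay plus braking at constant deceleration."""
    return speed * reaction_time + speed ** 2 / (2.0 * max_decel)


def safety_filter(proposed, speed, gap, max_yaw_rate=0.5, max_decel=6.0,
                  reaction_time=0.3, margin=2.0):
    """Pass the model's command through unless a hard constraint is violated."""
    # Hard constraint 1: never exceed the yaw-rate limit.
    yaw = max(-max_yaw_rate, min(max_yaw_rate, proposed.yaw_rate))
    # Hard constraint 2: brake fully if the gap is below the safe stopping distance.
    if gap < stopping_distance(speed, max_decel, reaction_time) + margin:
        return Command(yaw_rate=yaw, acceleration=-max_decel)
    return Command(yaw_rate=yaw, acceleration=proposed.acceleration)


model_output = Command(yaw_rate=0.9, acceleration=1.0)  # suggestion from the learned model
safe_far = safety_filter(model_output, speed=20.0, gap=60.0)
safe_near = safety_filter(model_output, speed=20.0, gap=30.0)
print(safe_far)
print(safe_near)
```

The learned model remains the suggester in both calls; the filter only intervenes where a rule is violated, so its behavior can be verified independently of the network.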

How Can We Mitigate Uncertainty in End-to-End Large Models?

Mitigating uncertainty in end-to-end large models requires a multifaceted approach involving data, training, architecture, and operation. At the data level, it is essential to systematically construct datasets covering long-tail scenarios. This involves not only passive collection but also active learning strategies to selectively gather high-risk or highly uncertain scenarios. Synthetic data and domain randomization can supplement real-world data by generating rare extreme conditions in simulated environments, addressing coverage gaps. At the training level, uncertainty-aware loss functions, evidential learning, or multi-task learning can be introduced to enable the model to output uncertainty information and to improve robustness in rare scenarios.
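
One widely used uncertainty-aware loss is the Gaussian negative log-likelihood, where the model predicts a mean and a log-variance for each regression target. The sketch below is a plain-Python illustration of that loss for a single sample; the function name and the example numbers are assumptions, and a training framework would apply the same formula to batched tensors.

```python
import math


def gaussian_nll(target, mean, log_var):
    """Negative log-likelihood of target under a normal N(mean, exp(log_var))."""
    squared_error = (target - mean) ** 2
    return 0.5 * (log_var + squared_error / math.exp(log_var) + math.log(2 * math.pi))


# Large error (1.0): claiming low variance is penalized heavily.
wrong_confident = gaussian_nll(target=0.0, mean=1.0, log_var=-2.0)
wrong_cautious = gaussian_nll(target=0.0, mean=1.0, log_var=0.0)

# Small error (0.1): claiming low variance is rewarded.
right_confident = gaussian_nll(target=0.0, mean=0.1, log_var=-2.0)
right_cautious = gaussian_nll(target=0.0, mean=0.1, log_var=0.0)

print(wrong_confident, wrong_cautious)
print(right_confident, right_cautious)
```

The loss penalizes confident mistakes more than cautious ones, and rewards confidence only when the prediction is accurate, which is what pushes the predicted variance to track the actual error.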

Architecturally, hybrid approaches can be explored, combining end-to-end networks with modular subsystems or adopting parallel "end-to-end + modular" schemes. This preserves the learning advantages of end-to-end models while retaining traditional perception/localization/planning modules as validation or redundancy mechanisms. Lightweight uncertainty detectors and rule-based safety nets can also be deployed on the vehicle to immediately switch to conservative strategies or request remote assistance (if vehicle-cloud collaboration is feasible) when high uncertainty is detected. During operation, a comprehensive monitoring and replay system should record all high-uncertainty and anomalous events for offline analysis and targeted data supplementation.
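
A lightweight on-vehicle detector with a fallback and an event log could be sketched as follows. The example flags inputs whose features lie many standard deviations away from the training statistics. The class name `NoveltyMonitor`, the two-dimensional feature vectors, and the threshold are hypothetical, and a per-feature z-score is used only as a simple stand-in for a real out-of-distribution detector.

```python
from statistics import fmean, pstdev


class NoveltyMonitor:
    """Flags inputs whose features sit far from the training statistics."""

    def __init__(self, training_features, threshold=4.0):
        columns = list(zip(*training_features))
        self.means = [fmean(col) for col in columns]
        self.stds = [pstdev(col) or 1e-6 for col in columns]  # guard against zero spread
        self.threshold = threshold
        self.event_log = []  # kept for offline replay and targeted data collection

    def score(self, features):
        """Largest absolute z-score across the feature dimensions."""
        return max(abs(x - m) / s for x, m, s in zip(features, self.means, self.stds))

    def check(self, features):
        """Return the driving mode and record the event if the input looks unfamiliar."""
        value = self.score(features)
        if value > self.threshold:
            self.event_log.append({"features": list(features), "score": value})
            return "conservative_fallback"
        return "nominal"


# Hypothetical summary features of training scenes, e.g. (brightness, object count).
monitor = NoveltyMonitor([[0.5, 10.0], [0.6, 12.0], [0.4, 11.0], [0.5, 9.0]])

familiar_mode = monitor.check([0.55, 10.5])
novel_mode = monitor.check([0.5, 30.0])
print(familiar_mode, novel_mode, len(monitor.event_log))
```

The logged events are the natural input for the offline analysis and data supplementation described above, since they point directly at the scenarios the model is least familiar with.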

Final Thoughts

Uncertainty is not a "fatal flaw" of end-to-end models but a reality that must be confronted on the path to mature autonomous driving. The key lies in not treating the model as a black box but instead viewing uncertainty as a design variable. By deliberately measuring uncertainty, passing it on to the decision level, and making reasonable risk trade-offs there, and by combining data strategies, hybrid architectures, real-time monitoring, and formalized safety constraints, "unknown risks" can gradually be transformed into "manageable risks."
