In 2026, Security Emerges as the 'New Benchmark' for AI Adoption

![]() 01/14 2026

01/14 2026

![]() 540

540

The crux of whether AI can truly penetrate core business operations on a grand scale doesn't hinge on the extent of model capability enhancements. Rather, it depends on the system's ability to be swiftly halted, processes traced, and responsibilities clearly delineated when issues arise. Until these challenges are addressed, security will remain the most pragmatic yet formidable obstacle in the AI deployment journey.

Author | Doudou

Editor | Piye

Produced by | Industry Pioneer

Once AI transforms attacks into a form of 'productive force', the dynamic between attack and defense undergoes a fundamental structural imbalance.

This paradigm shift is already evident in the real world.

At 10 PM on December 22, Kuaishou was hit by a large-scale assault from illegal and gray-market industries. Monitoring data revealed that during peak hours, approximately 17,000 controlled zombie accounts simultaneously went live, broadcasting pornographic and vulgar content. The attack methods weren't novel; what truly altered the landscape was the 'amplification effect' introduced by AI. With AI augmentation, attack costs plummeted to unprecedentedly low levels, while efficiency soared, and for the first time, the defender's response capabilities were outpaced by the attacker's.

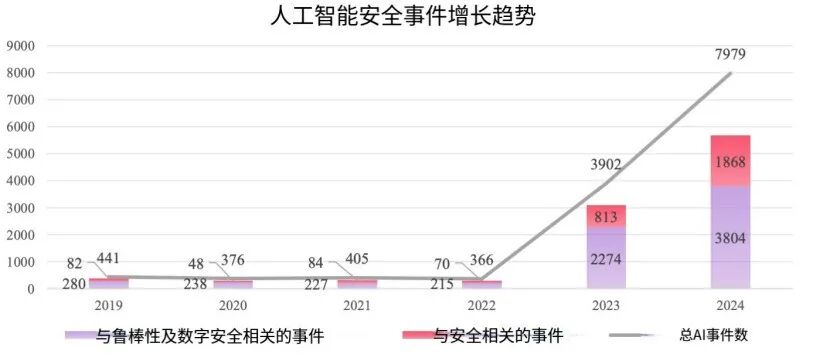

This is not an isolated case. Data from the OECD's AI Incident Monitoring indicates that the total number of AI risk incidents in 2024 was approximately 21.8 times that of 2022. Around 74% of incidents recorded between 2019 and 2024 were related to AI security, with security and reliability incidents surging by 83.7% compared to 2023.

Against this backdrop, the security paradigm is being rewritten.

Traditionally, when selecting large-scale model or Agent service providers, performance, price, and ecosystem naturally took precedence, with security viewed as a remedial measure. However, after rounds of practical engagement, more and more enterprises are realizing that once business processes, user interactions, and even decision-making power are entrusted to AI, security is no longer a retrospective consideration but must be prioritized during the selection phase.

This subtle yet profound shift is compelling enterprises to reevaluate the hierarchy of decisions related to AI. An AI security report jointly released by Alibaba Cloud and Omdia reveals that the proportion of enterprises viewing security and data privacy as major obstacles to AI adoption has surged from 11% in 2023 to 43% in 2024.

For the first time, security has transitioned from an 'optional' feature to a prerequisite for AI deployment.

1. From Exploration to Deep Integration:

AI Security Becomes a Prerequisite for Deployment

In 2025, enterprises' sensitivity to 'security' is rapidly escalating.

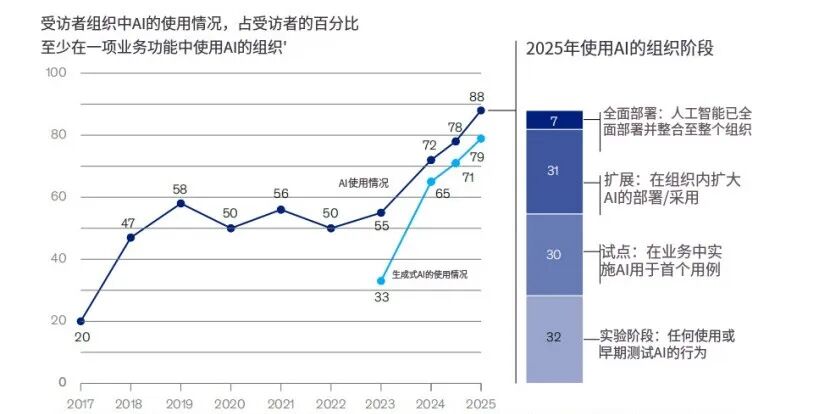

As 2025 unfolds, AI has transitioned from technological exploration to deep integration within enterprise operations. According to McKinsey's 'The State of AI in 2025' report, 88% of surveyed enterprises stated they were already leveraging AI technology in at least one business function, a full 10 percentage points higher than the previous year.

As the scope and capabilities of AI usage expand, enterprises' security concerns are intensifying. Early AI applications were primarily confined to auxiliary tasks such as copywriting, content generation, and basic data analysis. Even if misjudgments or deviations occurred, the impact on the business was limited. However, as AI accelerates its integration, it is being granted stronger permissions, including reading business data, accessing internal systems, and participating in process decision-making.

Gartner predicts that by 2028, 33% of enterprise software will incorporate AI agent functionality, capable of autonomously completing 15% of daily human work decisions, with the risk exposure from expanded permissions continuing to grow.

Against this backdrop, if AI malfunctions, it is no longer just a matter of outputting errors but could directly compromise sensitive data or disrupt production and transaction processes.

These concerns are not unfounded. An analysis by Harmonic Security reveals that in the second quarter of 2025, over 4% of dialogues and more than 20% of uploaded files on various GenAI platforms used by enterprises contained sensitive corporate data. This indicates that inadequate control could continuously amplify risks during daily use.

As a result, security is no longer an afterthought. According to the '2025 Global Trustworthy AI Governance and Data Security Report' released by the Cyber Research Institute, model accuracy and stability are the factors most valued by enterprises, followed closely by compliance and privacy protection in data usage, accounting for 79%, and total cost of ownership and return on investment, accounting for 54%. Security is being proactively integrated into the project initiation and technology selection phases, becoming a key prerequisite for enterprise AI deployment.

This has become a consensus among leading enterprises.

In the 'Frontier AI Safety Commitment' signed by 16 global leading AI enterprises, it is explicitly stated that 'when developing and deploying frontier AI models and systems, risks must be effectively identified, assessed, and managed, with thresholds set for intolerable risks,' confirming that security has become a core indicator of industry consensus.

This risk perception is also reflected in the actual processes by which enterprises select partners and advance projects.

For example, the IT head of a large manufacturing enterprise revealed to Industry Pioneer that in the past, as long as the model successfully navigated business scenarios in the pilot phase, it could proceed to the next assessment stage. However, in the latest round of Agent capability testing, the enterprise required testing to include negative test cases such as prompt injection, jailbreak risks, and unauthorized calls; otherwise, the solution would not advance to the review stage.

Another example is a CIO in the securities industry who explicitly required in an internal email that the AI platform must support enterprise private deployment or VPC isolation and prohibit the use of any business data for third-party training; otherwise, it would not proceed to the second round of assessment. The emergence of such clauses reflects that enterprises are making data security and access control core conditions for selecting suppliers from the outset.

It is evident that security is no longer an isolated cost center but a core condition determining whether AI can be widely adopted and trusted for operation. More importantly, security is becoming the most crucial currency of trust in the enterprise ecosystem. In partner selection, industry collaboration frameworks, and client contract negotiations, AI security guarantees have become one of the core clauses at the negotiating table, directly influencing contract signings and the success or failure of commercial collaborations.

Among these, only those who can strike a more stable balance between efficiency gains and security red lines will truly lead in the next phase of AI competition.

2. The 'Forward Shift' of Security in AI Selection:

Transforming the Security Competition Landscape

In 2025, China conducted its first real-world public testing of AI large-scale models, uncovering 281 security vulnerabilities, including 177 unique to large-scale models, accounting for 63%. These vulnerabilities encompass traditional security threats not covered by existing systems, such as prompt injection, jailbreak attacks, and adversarial examples.

Traditional security vendors' strategies can no longer withstand the new attack methods of the AI era.

The widespread adoption of AI technology has fundamentally altered the underlying logic of cyberattacks. Security vendors face increasing responsibilities, yet their value becomes harder to quantify. However, this is not a problem of vendor capability but a structural shift toward shared responsibility in the industry. The impact on the industry is profound. On one hand, security capabilities will inevitably become 'embedded,' integrated into cloud platforms, model foundations, and business systems, with the space for independent delivery continuously shrinking. On the other hand, security vendors that fail to provide governance-level value will be marginalized into replaceable capability modules.

To avoid being internalized, vendors must establish themselves as an indispensable part of the system's operation.

Traditional cybersecurity vendors, represented by 360, Qi'anxin, Sangfor, and NSFOCUS, have not completely overhauled their strategies but instead view AI as a capability enhancer, choosing to embed large-scale model capabilities into their existing security products and platforms.

Take 360 as an example; in 2023, it officially released the 'AI-Native Security Large Model,' claiming to be based on over 40PB of security sample data and decades of attack-defense experience for APT attack detection, automated threat intelligence mining, and security incident analysis. According to its disclosure, in terms of APT alert deduplication and false positive compression, model-assisted analysis can reduce manual analysis costs by over 50%.

The advantages of this path are evident. According to CCID Consulting data, in China's government and enterprise cybersecurity market, leading vendors generally have a coverage rate exceeding 60% in industries such as government, finance, and energy, meaning traditional vendors firmly control the entry points for government and enterprise security.

Unlike traditional vendors, cloud service providers such as Alibaba Cloud, Tencent Cloud, and Baidu Intelligent Cloud have chosen to start from the infrastructure and platform layers, directly 'building in' security capabilities throughout the AI lifecycle.

In practical implementation, these vendors commonly enable security control strategies by default in model hosting, inference calls, plugin access, and Agent orchestration, strongly binding identity, permissions, data, and model versions with the AI usage process. For example, Alibaba Cloud incorporates API call authentication, prompt auditing, and RAG data access permissions as default capabilities in its large-scale model service platform; Tencent Cloud integrates model calls with enterprise IAM, log auditing, and data classification in its enterprise large-scale model platform; Baidu Intelligent Cloud restricts external tool call permissions in its Agent construction framework to reduce the risk of model 'unauthorized execution.'

For these vendors, since security capabilities are embedded in the main path, their marginal costs are almost zero. Especially in addressing new attack surfaces such as prompt injection, RAG retrieval pollution, and Agent tool abuse, platform-level constraints are significantly more scalable than post-event detection.

Another important group of players is vertical security vendors focusing on specific scenarios. Take Shumei Technology as an example; it has long specialized in content security, anti-fraud, and gray industry behavior modeling. In the context of generative AI, Shumei has migrated its existing risk control models to AI abuse governance, identifying malicious prompts, automated fraud script generation, and false content batch generation. According to public cases, its AI abuse detection hit rate exceeds 90% on some social and content platforms.

The advantage of these vendors lies in their focused expertise and deep model confrontation (antagonistic) experience, providing irreplaceable value in high-risk scenarios.

In recent years, a group of native AI security vendors has also rapidly emerged. These companies did not evolve from traditional security systems but directly focus on the model itself and the agent layer, reducing risks from the design stage. These vendors typically iterate quickly, are highly sensitive to new antagonistic attacks, and possess a first-mover advantage in model-level security.

Overall, under the sustained pressure of generative AI, the division of labor in the security industry is being reshuffled. Vendors at different positions are starting from their respective entry points to collectively support a 'new security system' that urgently needs reconstruction and has not yet taken shape.

3. The Capability Boundaries of AI Security:

Unable to 'Eliminate,' Only Able to 'Mitigate Damage'

As security is pushed to the forefront of AI selection, an unavoidable question arises: Is stronger security always better? Does sufficient security exist?

The answer is not optimistic. OpenAI has provided a set of judgments in public research: In scenarios such as AI browsers and Agents, prompt injection poses a structural risk. Even with continuous reinforcement, the security system cannot achieve 100% interception; the optimal state can only reduce the attack success rate to 5%-10%.

This is equally clear in the judgment of Liang Kun, CTO of Shumei Technology: 'Currently, achieving 100% blockade of gray industry activities is not realistic.'

However, it must be clarified that not all AI scenarios prioritize security first.

In many exploratory and peripheral businesses, enterprises still prioritize effectiveness. For example, internal knowledge assistants, marketing content generation, and data analysis Copilots either do not access sensitive data or lack execution permissions. Even if model deviations occur, the risks are relatively controllable. In these scenarios, enterprises are more concerned with return on investment than the completeness of security governance.

The real turning point occurs when AI begins to enter core business processes. In scenarios involving customer data, transaction decisions, production scheduling, and risk control audits, enterprises often rapidly tighten their strategies, making security a hard constraint.

This cognitive shift has directly led to three noticeable changes in usage patterns.

The first shift is from 'functional' to 'controllable'.

Once security is prioritized, enterprises typically start to restrict the range of data that models can access. Data that was once fully accessible is now segmented for hierarchical, domain-specific, and scenario-based utilization. RAG retrieval no longer "recalls from the entire library" but is limited to audited knowledge collections. This may lead to a decrease in model accuracy for certain tasks and impact recall rates, necessitating the business side to invest more effort in data governance and structuring.

However, this is not a technological setback. For enterprises, they prefer to sacrifice some effectiveness rather than endure uncontrollable risks.

The second transition is from "usable" to "auditable."

As AI is integrated into formal production environments, the capability to audit and trace becomes a crucial threshold. Can prompts be traced? What retrieval content did the model reference? Which tools did the Agent invoke, at what time, and with what permissions? These questions, once considered engineering details, are now included in acceptance checklists.

This directly increases system complexity and costs, such as longer call chains, increased latency, and higher computational resource consumption. Nevertheless, in ToB scenarios, explainability often surpasses extreme efficiency. Being able to clarify what transpired has become more important than how quickly it runs.

The third shift is from "automated" to "semi-automated."

In numerous core business scenarios, enterprises have opted not for fully automated Agent execution but instead adopted a "suggestion + human review + execution isolation" model. AI offers decision-making suggestions, humans complete the final confirmation, and critical operations are isolated from production systems. This mode clearly extends processes and restricts scheduling scale, but it aligns with the current risk tolerance of enterprises.

At this stage, the role of security is no longer to enhance interception rates but to prevent system失控 (loss of control). Through these practices, enterprises have gradually established a clear internal stratification. Peripheral businesses can tolerate more uncertainty, with security requirements taking a backseat; core businesses must prioritize security, with significantly lower allowed automation levels.

It is worth noting that this stratification is not static. As security capabilities mature and governance experience accumulates, some processes that initially required human intervention may gradually be entrusted to AI.

From this perspective, security in the AI era is no longer a matter of "existence" but "degree." By restricting data, tightening permissions, and introducing auditing, inevitable risks are controlled within an acceptable range for enterprises. The deepening of these security practices not only modifies enterprises' paths for AI governance but also fundamentally changes the industry's perception of AI security.

Epilogue:

It is foreseeable that for a considerable period, AI security cannot be completely resolved through a one-time solution.

During this process, the utilization of AI by enterprises will continue to diverge, with edge businesses prioritizing efficiency and output, while core businesses will maintain long-term caution. Automation will not simply "achieve success in one step" but will gradually advance around permission control, audit mechanisms, and responsibility boundaries. The capabilities of AI will continue to enhance, but the scope for its autonomous decision-making will not necessarily expand in tandem.

For the security industry, this also signifies a long-term adjustment. The value of relying solely on post-event detection will continue to diminish, while the ability to participate in system design, permission governance, and operational constraints will become increasingly critical. Security is no longer just a standalone product but more akin to a prerequisite for the operation of AI systems.

From this perspective, the key to whether AI truly enters core businesses on a large scale does not lie in how much the model capabilities have improved, but rather in whether, once problems arise, the system can be promptly halted, the process can be traced back, and responsibilities can be clearly defined.

Until these issues are resolved, security will remain the most practical and challenging hurdle to overcome in the implementation of AI.