Global Computing Power Industry: Treading a Fine Line with OpenAI

01/21 2026

In the third week of January 2026, OpenAI made two announcements that rocked the global tech community. Though seemingly unrelated, they are two sides of the same coin, and together they accurately reflect the sharply divided condition the industry now finds itself in.

The first side is 'extreme expansion.' On January 14, OpenAI announced a strategic partnership with chip unicorn Cerebras worth more than $10 billion, under which Cerebras will deliver 750 megawatts of computing power dedicated to AI inference by 2028. The deal is OpenAI's largest compute investment outside the Nvidia ecosystem, and it shows that OpenAI's demand for computing resources has outgrown what any single supplier can provide. Shortly afterward, OpenAI revealed on its official blog that its annualized revenue had surpassed $20 billion in 2025, a tenfold increase from $2 billion in 2023. That trajectory closely tracks the growth of its computing capacity, which rose 9.5-fold over the same three years. For OpenAI, computing power translates directly into revenue, and expansion is a matter of survival.
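The claim that revenue growth "aligns almost perfectly" with compute growth can be checked with simple arithmetic. A minimal sketch in Python, using only the two figures quoted above (revenue rising from $2 billion to $20 billion, and compute capacity growing 9.5-fold over the same period); the helper name `growth_multiple` is illustrative and not from any source.

```python
def growth_multiple(start: float, end: float) -> float:
    """Return how many times larger `end` is than `start`."""
    return end / start


# Figures quoted in the article: annualized revenue in USD billions,
# and the reported growth multiple of compute capacity over the same period.
revenue_2023 = 2.0
revenue_2025 = 20.0
compute_multiple = 9.5

revenue_multiple = growth_multiple(revenue_2023, revenue_2025)

# A ratio of 1.0 would mean revenue grew exactly in step with compute;
# above 1.0 means each unit of compute is tied to slightly more revenue.
revenue_vs_compute = revenue_multiple / compute_multiple

print(f"Revenue multiple: {revenue_multiple:.1f}x")
print(f"Compute multiple: {compute_multiple:.1f}x")
print(f"Revenue multiple / compute multiple: {revenue_vs_compute:.3f}")
```

The ratio comes out at about 1.05, meaning revenue grew roughly 5% faster than compute. Note that this says nothing about profitability, since costs are not part of the calculation.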

The second side is 'anxiety over reality.' Just two days later, on January 16, OpenAI announced plans to test advertising in ChatGPT in the United States. The move shows a company once seen as a beacon of 'Silicon Valley idealism' bowing to commercial reality under immense financial pressure. OpenAI's CFO described the initiative as part of a diversified revenue model aimed at 'making intelligence more accessible,' but leaked financial data tells a grimmer story: OpenAI's inference costs have exceeded its revenue, so higher user engagement only accelerates its losses. Advertising is a desperate measure to ease cash-flow pressure as infrastructure commitments climb toward a possible $1.4 trillion.

OpenAI's contradictory position mirrors that of the entire AI industry. On one hand, genuine demand and technological breakthroughs have produced an industry consensus and a capital frenzy; on the other, the business model remains precarious and the financial risks are systemic. Where will this contradiction lead the global computing power industry?

01

OpenAI's Strategic Gambit

For a long time, Nvidia has dominated the high-end AI training and inference market with its H100 and B200 series GPUs. However, as computing demand from AI giants like OpenAI grows exponentially and the pressure to control costs intensifies, this near-monopoly is beginning to erode.

The core rationale behind OpenAI's $10 billion-plus partnership with Cerebras is Cerebras's wafer-scale system technology. Traditional GPU clusters rely on complex interconnects to link thousands of separate chips; Cerebras instead builds one massive processor from an entire wafer, integrating up to 4 trillion transistors. Keeping computation on a single piece of silicon avoids much of the chip-to-chip communication overhead, which lets it significantly outperform traditional GPU systems on large-scale AI inference workloads.

For OpenAI, which is racing to commercialize products like ChatGPT, the strategic significance of this order goes far beyond enlarging its pool of computing power. It targets the core challenge of commercializing large models: delivering low-latency, low-cost inference under heavy concurrent load. As Sachin Katti, OpenAI's head of infrastructure, put it, 'Computing power is the most critical factor in determining OpenAI's revenue potential.'

In effect, OpenAI is building a 'dumbbell-shaped' chip procurement structure. At the training end, where peak performance matters most, it continues to rely on Nvidia's top-tier GPUs to keep pushing the frontier of model capability. At the inference end, which operates at far larger scale and is more sensitive to cost and latency, it is diversifying aggressively with Cerebras, Google's TPUs, and in-house ASICs. As early as June 2025, OpenAI broke with convention and began leasing Google's TPU chips. Industry data suggests that Google's TPUs are more than twice as energy-efficient as GPUs for inference, and that training on them costs only 20% as much as on Nvidia's solution. Meanwhile, OpenAI's in-house AI inference chip, developed with Broadcom on TSMC's advanced 3-nanometer process, is progressing steadily and is expected to enter production in 2026.

Taken together, these moves show that the world's leading AI companies are adopting a dual-engine strategy of 'Nvidia chips + alternative chips.' Morgan Stanley predicts that, as a result, Nvidia's share of the AI chip market may fall from its current high of around 80% to 60%, which would double the share held by everyone else from about 20% to 40%. This shift is not merely a market fluctuation but a clear signal that the semiconductor industry is moving away from general-purpose GPU dominance toward an architecture of 'general-purpose chips for training, specialized chips for inference.' The enormous inference market is rapidly being divided among Cerebras, Google's TPUs, OpenAI's in-house chips, and AMD.

Beyond diversifying its chip procurement, OpenAI is also making massive investments in data centers and other infrastructure, drawing the semiconductor and energy sectors into deep integration.

To date, OpenAI has committed to acquiring more than 26 gigawatts (GW) of computing capacity from suppliers including Oracle, Crusoe, CoreWeave, Nvidia, AMD, and Broadcom. That is comparable to the power demand of a medium-sized country, and it makes electricity an even scarcer strategic resource than chips. Microsoft CEO Satya Nadella has admitted, 'We're not short of AI chips, but we're short of electricity,' noting that many GPUs sit idle because there is not enough powered data center capacity to plug them into. This infrastructure war is, at bottom, an energy war.

Crusoe's partnership with OpenAI stands out in this round of construction. Its data center project in Abilene, Texas, has expanded to 1.2 GW of capacity across eight buildings constructed in phases, each able to support 50,000 Nvidia GB200 NVL72 chips. The project's key innovation is its attempt to break the power bottleneck holding back AI development. Working with energy technology company Lancium, Crusoe combines large-scale battery storage, solar power, and backup natural gas turbines to build an energy-self-sufficient computing base. This design makes Crusoe a key infrastructure provider for OpenAI's power problem, turning GPUs that would otherwise sit idle into usable computing power.

Compute-leasing company CoreWeave is also expanding aggressively. It has deployed more than 250,000 Nvidia GPUs across 32 data centers worldwide and has secured 1.3 GW of power capacity. CoreWeave has signed agreements with OpenAI worth up to $22.4 billion, including a $6.5 billion agreement added in September 2025. Contracts of this size not only underpin CoreWeave's valuation of more than $10 billion but also entrench the asset-heavy 'chips, data centers, cloud services' investment model.

Microsoft and Oracle are moving on an even larger scale. As OpenAI's most important partner, Microsoft has deployed 400,000 GB200 GPUs for it, and OpenAI's total cloud service commitments to Microsoft have reportedly reached $250 billion. Oracle has signed a data center agreement worth $30 billion a year and plans to build an additional 4.5 GW of power capacity across several U.S. states to meet demand.

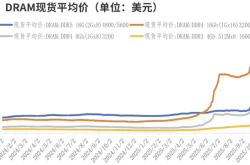

These astonishing figures show that value in the semiconductor industry chain is shifting beyond chip design and manufacturing toward full-stack infrastructure, including power management, liquid cooling, and scheduling for ultra-large clusters. Future competition will turn not only on chip performance but on a broader contest over energy, land, and integrated engineering capability.

02

The Financial Reality of the AI Industry

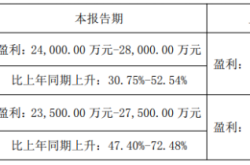

Behind the headline procurement and infrastructure numbers, the financial statements of OpenAI, and of the AI industry as a whole, point to systemic risks across the industry chain. According to leaked Microsoft data, OpenAI's revenue in the first half of 2025 was only $4.3 billion, while its inference costs over the same period exceeded that revenue. This is not merely a matter of losses but a fundamental inversion of the business model: the more users engage, the faster the company loses money.

HSBC projects that OpenAI's cash burn will reach $17 billion in 2026, nearly double the $9 billion of 2025. On its current trajectory, OpenAI's cumulative leasing costs will reach as much as $792 billion by 2030 and an astonishing $1.4 trillion by 2033. This means that even if OpenAI reaches $130 billion in revenue in 2030, it will still need to raise substantial additional funding to cover the shortfall.
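The HSBC figures above imply two derived quantities: how fast cash burn is growing, and roughly how much leasing cost is added between the 2030 and 2033 cumulative totals. A minimal sketch in Python; spreading the 2031-2033 increment evenly across three years is a simplifying assumption of this sketch, not something the projection states.

```python
# Projections attributed to HSBC in the article, in USD billions.
cash_burn_2025 = 9.0
cash_burn_2026 = 17.0
cumulative_lease_by_2030 = 792.0
cumulative_lease_by_2033 = 1400.0

# How much larger the 2026 cash burn is than the 2025 figure.
burn_growth = cash_burn_2026 / cash_burn_2025

# Leasing cost added between the two cumulative totals (years 2031-2033).
# Dividing evenly by three years is an assumption made for illustration.
added_lease_2031_2033 = cumulative_lease_by_2033 - cumulative_lease_by_2030
avg_annual_lease = added_lease_2031_2033 / 3

print(f"Cash burn growth 2025 -> 2026: {burn_growth:.2f}x")
print(f"Lease cost added 2031-2033: ${added_lease_2031_2033:.0f}B")
print(f"Average per year (even-spread assumption): ${avg_annual_lease:.0f}B")
```

The 1.89x multiple is the "nearly double" in the text. The implied average of roughly $203 billion a year in leasing costs for 2031-2033 sits well above the $130 billion revenue figure cited for 2030, which illustrates why outside funding would still be needed, although the two figures refer to different years.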

This inversion of inference costs goes a long way toward explaining why OpenAI is eager to bring in alternatives like Cerebras and TPUs and has moved quickly to launch advertising. To keep operating, OpenAI has adopted an aggressive 'supplier financing' model: it secures resources up front by signing massive long-term procurement commitments, such as its $250 billion commitment to Microsoft and its $300 billion commitment to Oracle. This model ties the semiconductor supply chain tightly to OpenAI's commercial prospects. Oracle's $30 billion agreement will not begin to be recognized as revenue until fiscal 2028, which means suppliers are not just selling products but also making risky bets on OpenAI's future ability to pay.

More worryingly, this risk is being amplified and spread through financial instruments. Morgan Stanley estimates that global data center construction will cost $2.9 trillion through 2028, with half of it requiring external financing. This has fueled explosive growth in shadow-banking channels such as private credit and in high-yield 'junk bonds.' Meta, for example, has borrowed $29 billion from the private credit market to build data centers. According to JPMorgan Chase, AI-related companies already account for 15% of U.S. investment-grade debt, more than the banking sector. A dangerous 'debt pyramid' is taking shape: if leading AI companies were to default because commercialization fails, the risk could spread rapidly through these complex instruments to the entire credit market and trigger a chain reaction.

03

The Debate Over the AI Bubble

OpenAI's current predicament has become the epicenter of a fierce global debate over the 'AI bubble.' The core of this debate is the clash between unwavering confidence on the supply side and deep-seated concerns on the demand side.

Supply-side confidence rests on what the entire industry chain is doing. First and foremost is the 'ultimate vote of confidence' from foundry giant TSMC, at the very top of the industry chain. On its January 15, 2026 earnings call, TSMC announced record capital expenditure guidance of $52-56 billion for 2026, up 27-37% from $40.9 billion in 2025. The figure far exceeded market expectations, but the more important detail is how the money will be allocated: 70-80% will go to the most advanced process technologies, such as 3-nanometer and 2-nanometer, which directly serve the next-generation products of chip designers like Nvidia, AMD, and Apple.
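The TSMC percentages and the dollar amount headed for advanced nodes can both be recomputed from the figures given. A minimal sketch in Python; the helper `pct_increase` is illustrative, and taking the widest possible range (smallest share of the low budget, largest share of the high budget) is a choice made here, not TSMC's own breakdown.

```python
def pct_increase(old: float, new: float) -> float:
    """Percentage change from `old` to `new`."""
    return (new / old - 1.0) * 100.0


# TSMC figures as quoted in the article, in USD billions.
capex_2025 = 40.9
capex_2026_low, capex_2026_high = 52.0, 56.0
advanced_share_low, advanced_share_high = 0.70, 0.80  # share for 3nm/2nm-class nodes

growth_low = pct_increase(capex_2025, capex_2026_low)
growth_high = pct_increase(capex_2025, capex_2026_high)

# Widest dollar range for advanced nodes: the smallest share of the lowest
# budget up to the largest share of the highest budget.
advanced_low = advanced_share_low * capex_2026_low
advanced_high = advanced_share_high * capex_2026_high

print(f"Capex growth: {growth_low:.1f}% to {growth_high:.1f}%")
print(f"Advanced-node spend: ${advanced_low:.1f}B to ${advanced_high:.1f}B")
```

The computed growth of 27.1% to 36.9% matches the 27-37% range in the text, and it puts roughly $36 billion to $45 billion of 2026 spending on the most advanced processes.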

The results of leading cloud providers also point to real demand. Microsoft's report for the first quarter of fiscal 2026 showed Azure revenue up 40% year over year, with the growth explicitly attributed to 'AI workloads.' Similarly, Gemini API calls on Google Cloud doubled in just five months to 85 billion. These are no longer distant forecasts but real revenue already showing up in financial statements.

Meanwhile, the capital-market frenzy continues. Beyond OpenAI's staggering $800 billion valuation, Elon Musk's xAI completed a $20 billion Series E round in January 2026 at a valuation of $250 billion. OpenAI's main competitor, Anthropic, doubled its valuation in just four months and is seeking more than $25 billion in a new round at a $350 billion valuation. This flood of capital across multiple companies reflects global investors' broad optimism about the long-term value of AI.

Demand-side concerns stem from systemic, structural risks. OpenAI's cash-burning model is not an isolated case. Industry data shows that the median Series A AI company burns as much as $5 in cash for every $1 of new revenue it generates. More alarmingly, an MIT report found that as many as 95% of organizations implementing generative AI tools have seen zero return on investment. This points to a fundamental problem: for now, the value AI creates falls far short of covering its enormous operating costs.
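The "$5 of cash for every $1 of new revenue" figure is a ratio investors commonly call the burn multiple: net cash burn divided by net new revenue. A minimal sketch in Python; the function name and the company numbers are hypothetical, chosen only to reproduce the $5-per-$1 level, and the dataset behind the article's figure is not shown here.

```python
def burn_multiple(net_cash_burn: float, net_new_revenue: float) -> float:
    """Dollars of cash burned per dollar of net new revenue added."""
    if net_new_revenue <= 0:
        # With no new revenue the ratio is undefined (effectively infinite).
        raise ValueError("net_new_revenue must be positive")
    return net_cash_burn / net_new_revenue


# Hypothetical Series A company: burns $10M of cash over a year
# while adding $2M of new annualized revenue.
example = burn_multiple(10_000_000, 2_000_000)
print(f"Burn multiple: {example:.1f}")
```

A multiple of 1.0 would mean each dollar burned buys a dollar of new revenue. At 5.0, a company has to raise five times as much capital to add the same revenue, which is the gap this paragraph describes.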

Bubble concerns are no longer just the worries of outside observers; they are now widely shared within the industry itself. Several tech leaders, including Google CEO Sundar Pichai and Amazon founder Jeff Bezos, have publicly acknowledged 'irrational' elements and a 'bubble' in the AI industry. Yoshua Bengio, one of the 'godfathers of AI,' stated bluntly, 'We're likely to hit a wall... that could be a real financial collapse.' Microsoft CEO Satya Nadella has also voiced unease about a possible AI bubble.

04

The Decisive 24 Months

The fates of OpenAI and the global semiconductor industry are now tightly intertwined. Based on current financial models and capacity plans, the outcome is likely to be decided within the next 24 months, with two extreme scenarios:

Firstly, if leading AI firms cannot demonstrate the long-term viability of their business models, counterparties could default on infrastructure contracts worth hundreds of billions of dollars. That would set off a cascading crisis in the credit market and leave TSMC and other foundries with severe overcapacity. Global semiconductor revenue could then plunge from a projected $700 billion in 2025 to below $400 billion, a drop of more than 40%.

Secondly, if AI companies manage a 'soft landing' by leveraging advertising revenue, enterprise services, and more efficient chip configurations, the semiconductor industry could enter a golden era. The widespread adoption of ASICs (Application-Specific Integrated Circuits) and custom chips would become a reality. Energy efficiency and infrastructure would emerge as new core competencies. Moreover, AI would transition from a resource-intensive 'money-burning' tool to a pivotal engine driving the global economy.

For now, the entire AI industry is walking a tightrope. Over the next two years, whether OpenAI can turn ChatGPT's nearly 1 billion users into sustainable revenue and bring inference costs down to manageable levels will be pivotal. The answer will determine not only OpenAI's own survival but also the trajectory of the global tech industry for the next five years. The countdown has begun, and every strategic move in this high-stakes game carries enormous weight.