ByteDance launches SeedEdit: is AI photo editing the new focus of big tech's AI applications?

11/14 2024

First off, I don't know a thing about design; after all, I'm not in the design field.

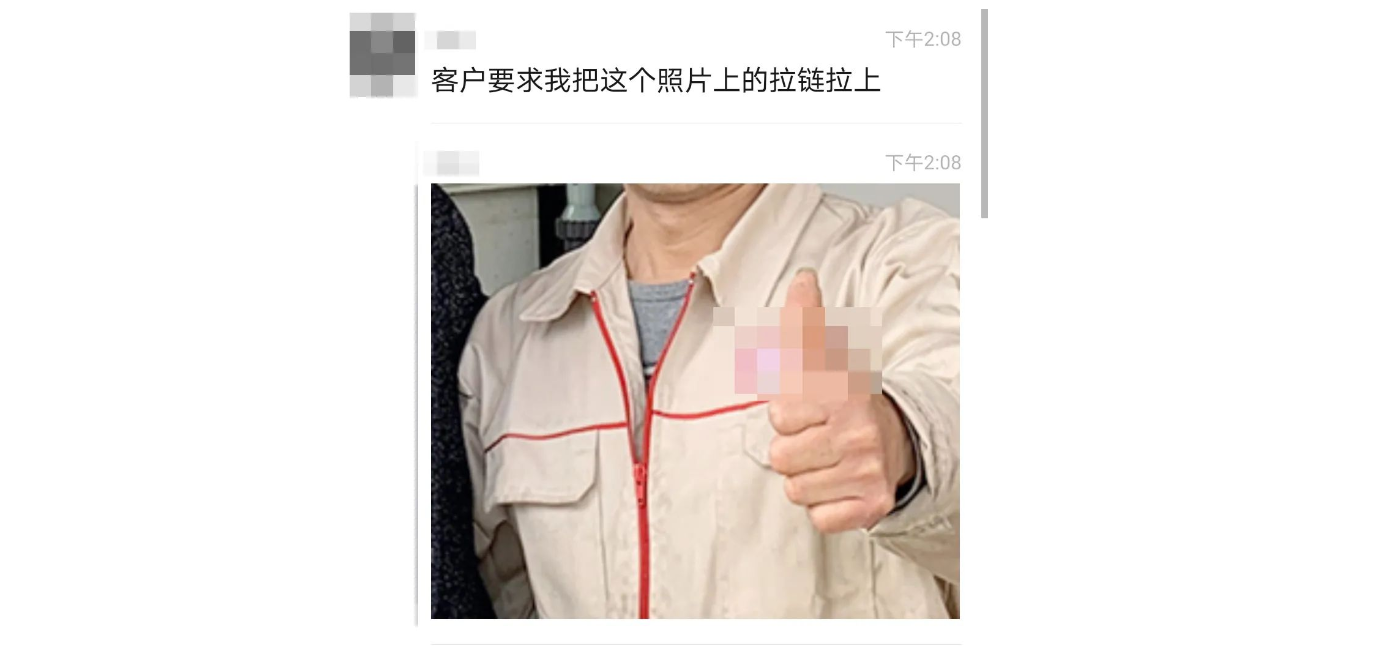

But if you spend any time online, you've probably heard about those legendary, once-in-a-lifetime design challenges and the bizarre requests only a client could come up with.

They say, 'Your image is great, so turning the elephant in the picture around shouldn't be a problem, right?'

They say, 'Your black is a bit monotonous; I'd like to see a colorful black.'

Never mind how designers feel when they see these; even as someone who writes for a living, I find requests like these tough to handle.

The thing is, you can't really say much. You know the other end of the line is the client, and they genuinely don't understand these things.

In the end, it's just work. No matter how outrageous the client's requests are, you have to do them. Even if they ask you to zip up their photo, all you can do is take a screenshot, post it on social media for a laugh, and then do your best to solve the problem, because you have to make a living.

(Image source: Sina Weibo)

However, every problem eventually has a solution, though this one might be a bit special.

Yesterday, ByteDance's Doubao large model team showcased its latest general-purpose image editing model, SeedEdit, on its official account.

The team said the model aims to 'make one-sentence photo editing a reality.' Users only need to type a simple natural-language instruction to perform a range of image edits, including photo retouching, outfit changes, beautification, style transformations, and adding or removing elements in specified areas.

Sounds incredible? I think so too.

Turn the elephant around

Trying this feature is actually quite simple.

According to the team, the model is currently available for testing on the Doubao PC client and the Jimeng web client, but not yet on the Doubao mobile app.

Next, simply click on 'Image Generation' in the sidebar, and you should see an option to upload a reference image. This is the entry point for the SeedEdit model.

The task is simple: upload an image and input what you want to change.

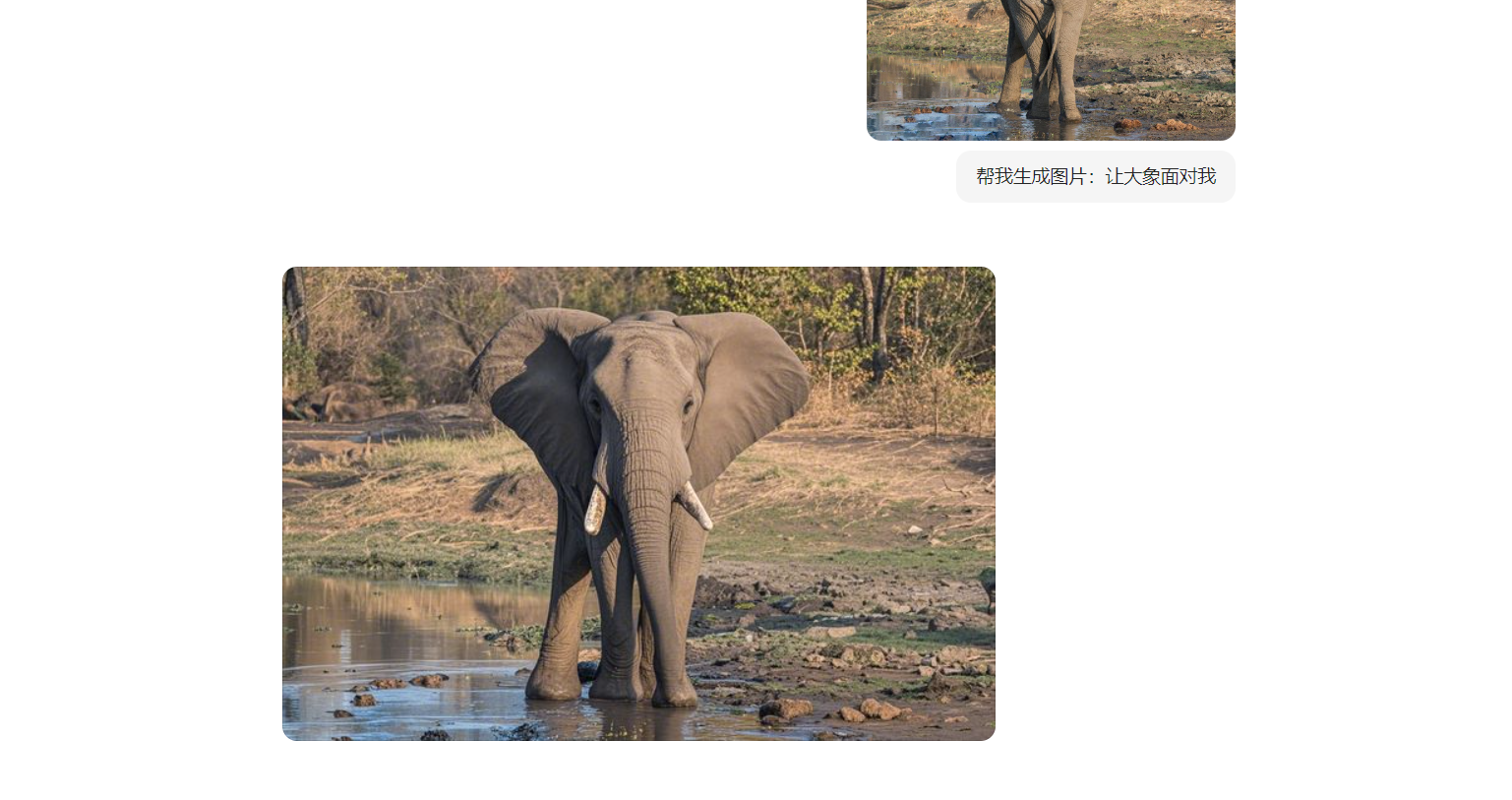

For example, with a photo of an elephant drinking water with its back to us, what should I do if I want it to turn around?

The answer is to input, 'Make the elephant face me.'

(Image source: Lei Tech)

Compare the two images.

As you can see, the front view of the elephant generated by SeedEdit is quite plausible: the ear shapes, foot positions, and body colors are well done, and the surrounding environment stays highly consistent. That said, some differences in the shapes of the rocks are noticeable if you look closely.

(Image source: Lei Tech)

The generated image can be edited again, which is really great.

(Image source: Lei Tech)

However, more demanding edits seem out of reach.

Starting from an image I had already modified with Doubao, I kept making editing requests, but whether it was 'Make the elephant run,' 'Make the elephant spray water with its trunk,' or 'Make the elephant turn to the side,' it was hard to get satisfactory results.

When I asked it to spray water, the water did come out, but not from its trunk; it came out of its tusks.

Teaching a large model common sense isn't easy.

(Image source: Lei Tech)

Next, let's try a portrait or a model-kit photo.

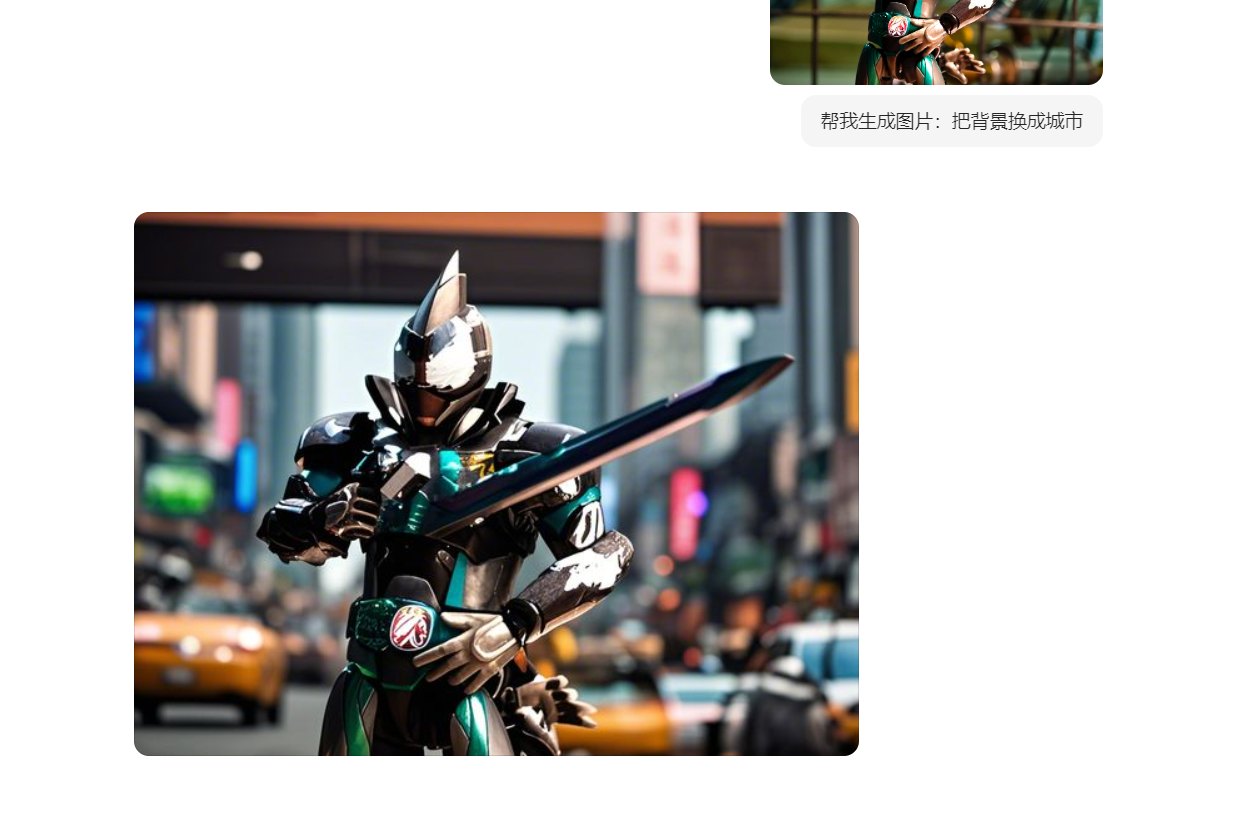

Because space at home is limited, I usually settle for makeshift backgrounds when photographing figurines; I don't have the time or energy to build scenery.

This time, I simply asked it to 'Change the background to a city.'

(Image source: Lei Tech)

Does the result look a bit flat? Then ask for 'Sunset lighting texture.'

You know what, it really hits the spot. Throughout the process, I only gave Doubao two simple requests, and the experience was really smooth.

For Gunpla builders on a budget, the tedious work of setting up scenery and lighting might actually become optional.

(Image source: Lei Tech)

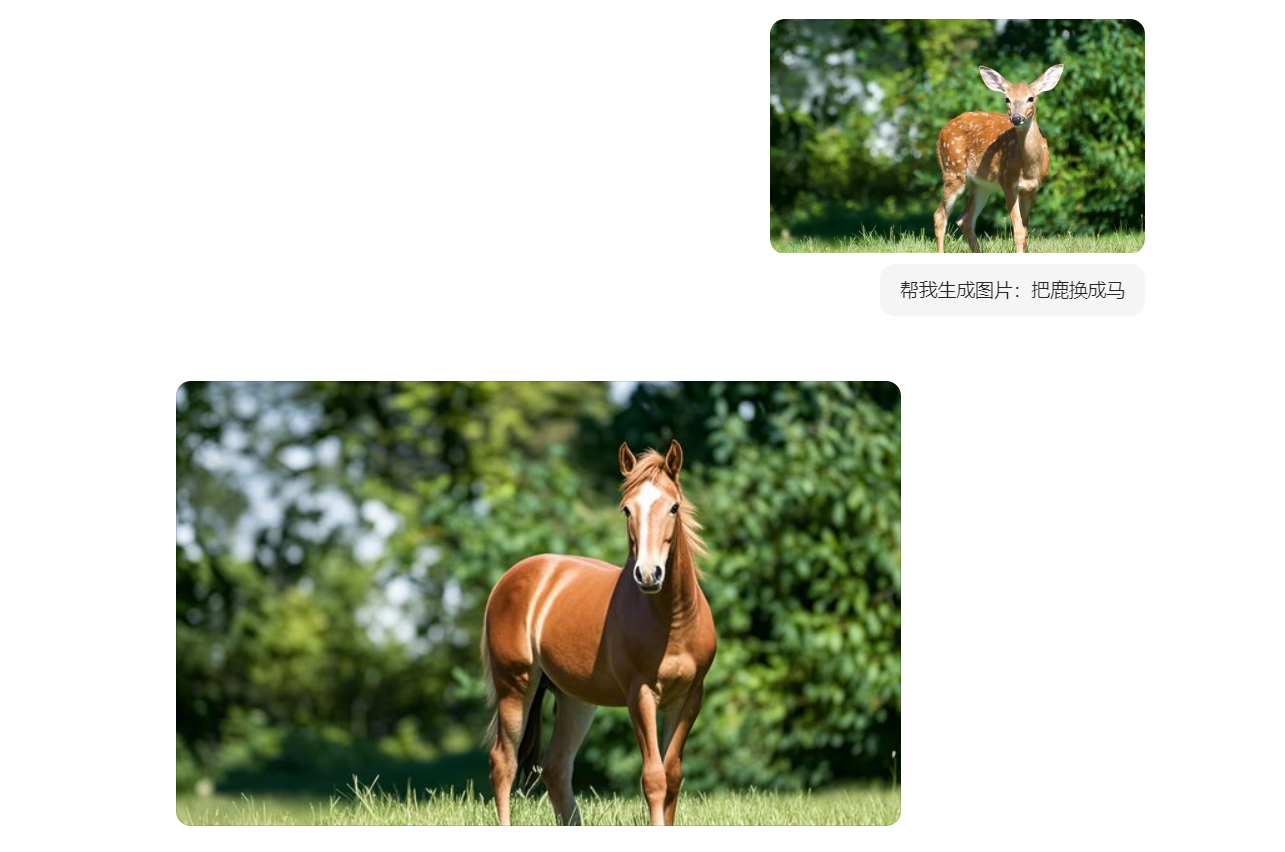

Of course, these are just minor tweaks to the original image. What if I want to replace the main subject directly?

For example, turning a deer into a horse, a literal take on the Chinese idiom 'calling a deer a horse.'

(Image source: Lei Tech)

The generated result is really good. Not only is the grass background well preserved, but even the textures on the animal's body have been replaced with a horse's.

If you don't look at the original image, it's hard to notice any proportion issues.

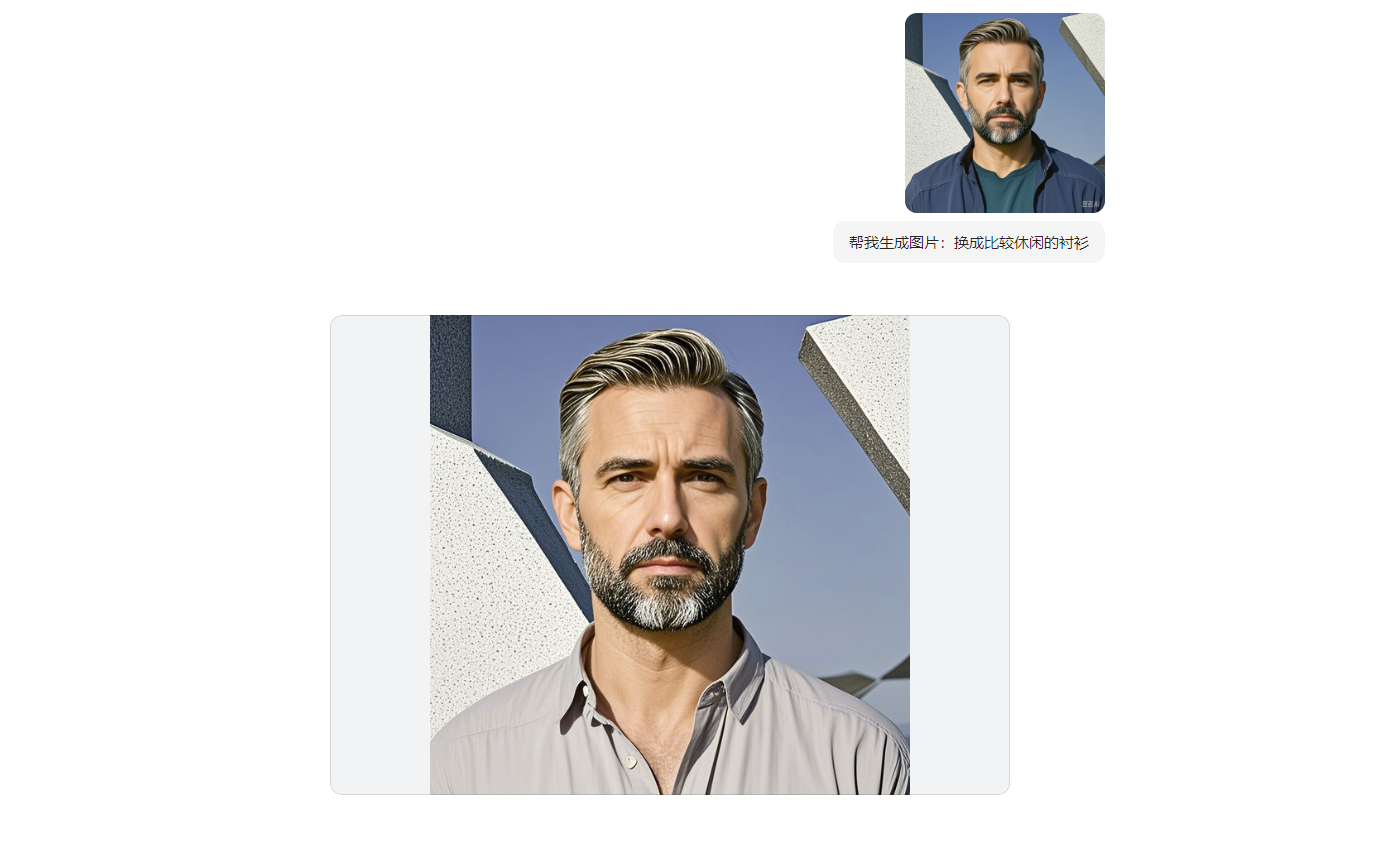

Outfit changes also work well, with shadows and wrinkles adjusted convincingly.

(Image source: Lei Tech)

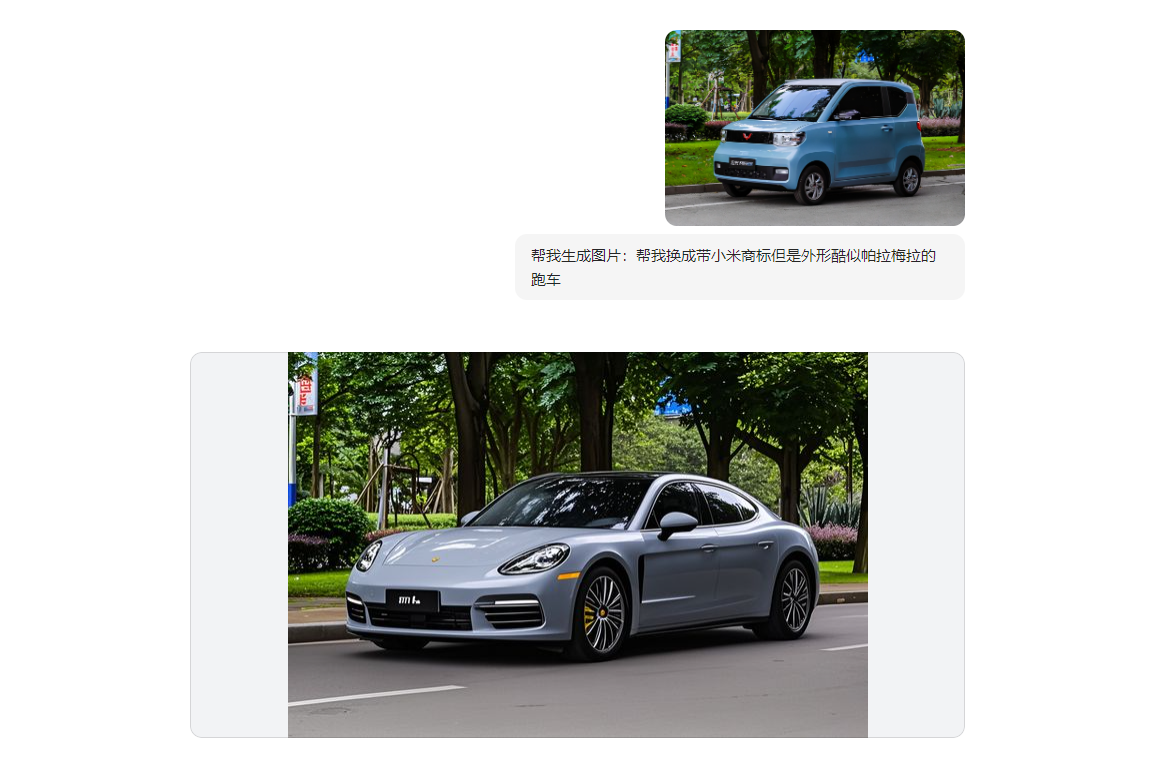

I also tried a car. Currently, SeedEdit doesn't recognize the Xiaomi SU7.

So I uploaded a random photo of a Wuling Hongguang Mini EV and entered an exceptionally complex editing command.

(Image source: Lei Tech)

The final generated car, while not a Maserati, at least has the shape of a sports car.

AI photo editing, about to explode

In fact, AI has already amazed us in the field of image generation.

But in image editing, AI technology lags behind, and the inability to make precise edits has long been a persistent problem in the industry.

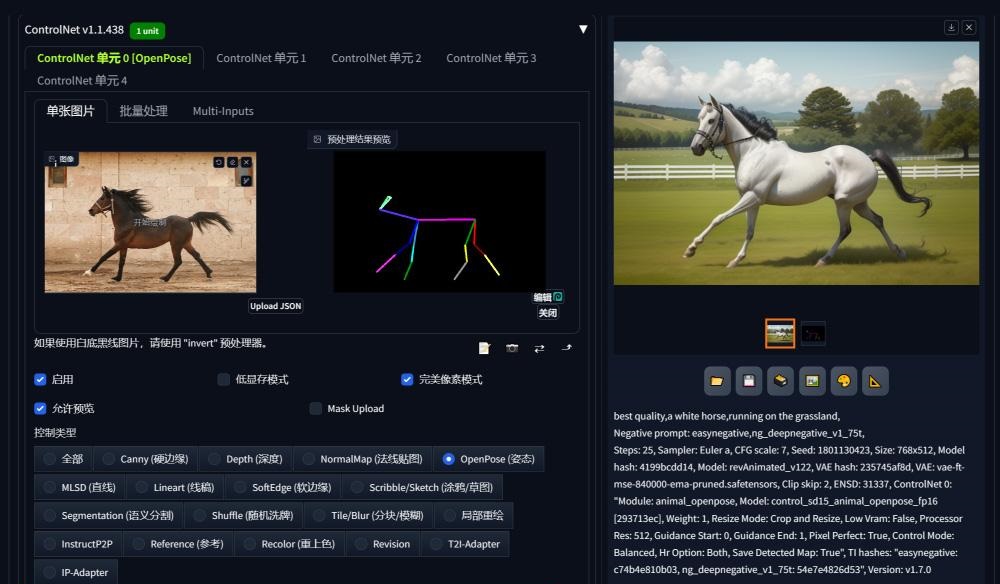

Before this year, such needs were generally met through the ControlNet plugin for Stable Diffusion.

It takes an additional input image and converts it into a control map through one of several preprocessors; the control map then serves as an extra condition for Stable Diffusion's diffusion process. With only a text prompt, image details can then be modified freely while the main features of the image are preserved.

(Image source: Sina Weibo, identifying features and redrawing them)
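
To make the 'control map' idea above a little more concrete, here is a minimal sketch of what a preprocessor does. It is not ControlNet's actual code: the function name `edge_control_map`, the threshold value, and the simple gradient test are all assumptions made for illustration, standing in for real detectors such as Canny edge detection.

```python
from typing import List

Image = List[List[int]]  # grayscale pixels (0-255), stored row by row


def edge_control_map(image: Image, threshold: int = 64) -> Image:
    """Toy preprocessor (hypothetical): mark pixels with a strong local gradient.

    The result is a black-and-white outline of the input, the kind of
    structural hint a control map passes on to the diffusion process.
    """
    height = len(image)
    width = len(image[0]) if height else 0
    control = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # Compare with the right and lower neighbors, clamped at the border.
            right = image[y][min(x + 1, width - 1)]
            below = image[min(y + 1, height - 1)][x]
            gradient = abs(right - image[y][x]) + abs(below - image[y][x])
            control[y][x] = 255 if gradient >= threshold else 0
    return control


# A 4x4 test image: dark left half, bright right half.
sample = [[0, 0, 200, 200] for _ in range(4)]
control = edge_control_map(sample)
for row in control:
    print(row)  # each row prints [0, 255, 0, 0]
```

Only the boundary between the dark and bright halves survives in the output, which is the point: the control map keeps the structure and throws away everything else, so the text prompt is free to change the details.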

But deploying AI applications locally is out of reach for most beginners.

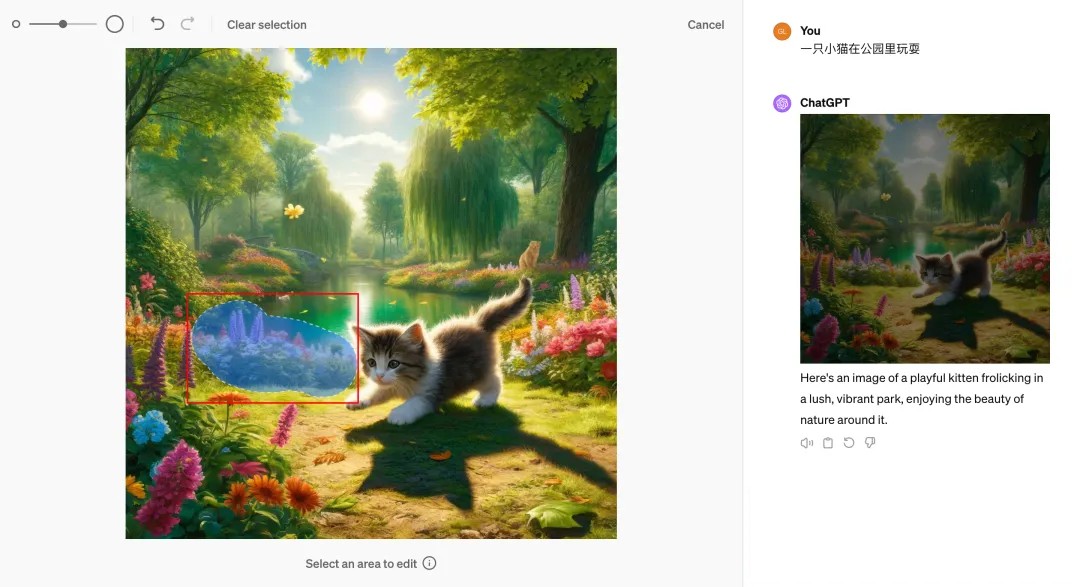

So this year, platforms such as ChatGPT/DALL·E 3, Midjourney, and Baidu Super Canvas have all launched partial-redraw features in an attempt to work as online image editors.

However, most of these features require you to brush over the object you want to modify by hand and then enter various prompts to make the change.

(Image source: Lei Tech)
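
The brush-then-prompt workflow described above comes down to a mask: only the brushed pixels are allowed to change. The sketch below shows that general idea only; the article does not describe how any of these products implement it, and the name `composite_with_mask` and the tiny sample images are hypothetical.

```python
from typing import List

Image = List[List[int]]  # grayscale pixels (0-255), stored row by row
Mask = List[List[bool]]  # True where the user brushed


def composite_with_mask(original: Image, generated: Image, mask: Mask) -> Image:
    """Keep original pixels outside the brushed area; use generated pixels inside it."""
    return [
        [gen if brushed else orig for orig, gen, brushed in zip(o_row, g_row, m_row)]
        for o_row, g_row, m_row in zip(original, generated, mask)
    ]


original = [[10, 10, 10], [10, 10, 10]]
generated = [[99, 99, 99], [99, 99, 99]]
mask = [[False, True, False], [False, True, True]]

result = composite_with_mask(original, generated, mask)
print(result)  # [[10, 99, 10], [10, 99, 99]]
```

In this workflow the user supplies both the mask and the prompt. An instruction-only editor has to work out for itself which region the sentence refers to.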

To get good results, you have to master the right prompt vocabulary, which sets a high barrier.

What if we only had to provide the input image and a text description of what the model should do, and the model then edited the image accordingly? Wouldn't that be much easier?

ByteDance's SeedEdit is indeed moving in this direction.

However, the more images I edited, the more issues surfaced. The model still has several problems.

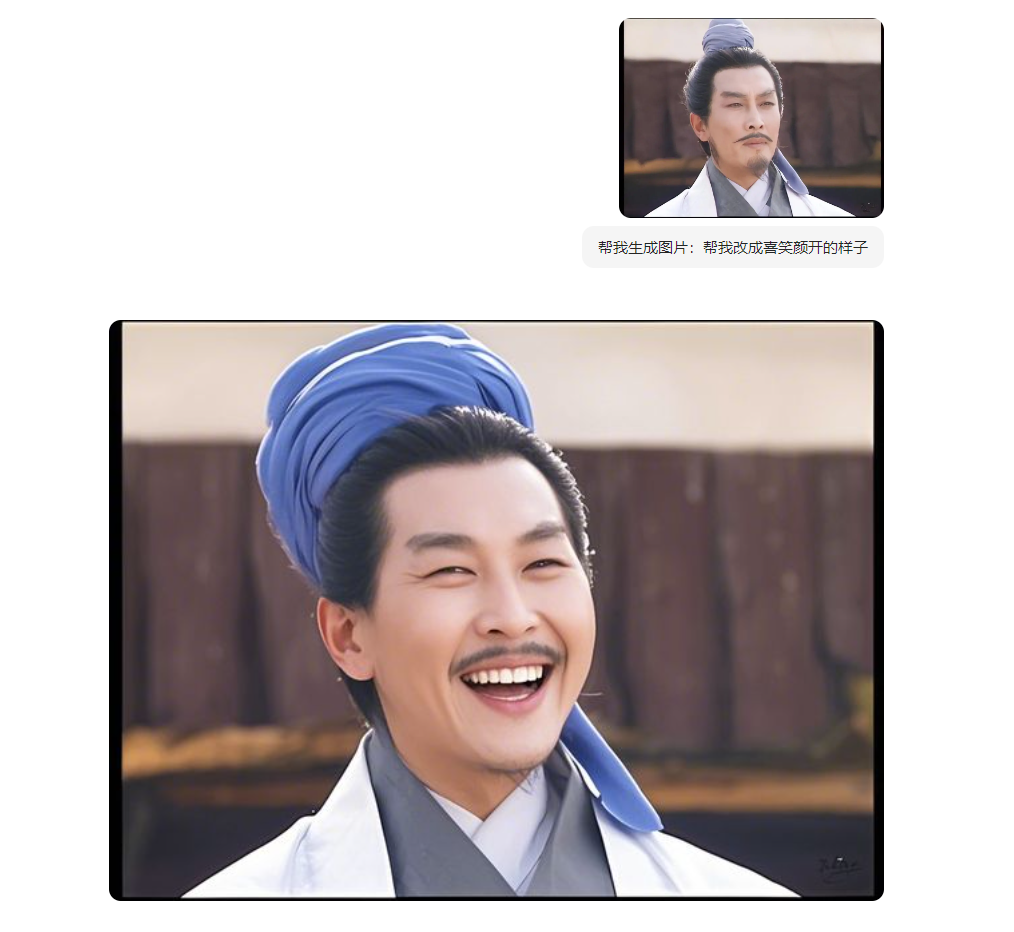

First, portraits are not consistent before and after editing.

Whenever facial retouching is involved, the final image differs drastically from the original, to the point where the person is almost unrecognizable.

(Image source: Lei Tech)

Second, edits are poorly targeted.

For images with many elements, SeedEdit currently has trouble working out which element you want to modify. Even when it does pick the correct one, the resulting image can be badly distorted.

(Image source: Lei Tech)

Finally, its text handling is still inadequate.

Just like early AI image generators, SeedEdit currently fabricates text. The three lines of small text below look plausible at a glance, but after staring at them for a while, I still can't make out what they say.

(Image source: Lei Tech)

In my opinion, SeedEdit fills a gap among Chinese large models in semantic AI photo editing.

It's foreseeable that as AI image editing continues to develop, future smartphones and computers may build this functionality in, just as they did with AI object removal and AI image expansion, making it accessible to everyday people. Beginner or pro, everyone will be able to use it easily and express their own sense of beauty visually.

Photo editing with nothing more than a sentence? Maybe it's not a dream after all.

Source: Lei Tech