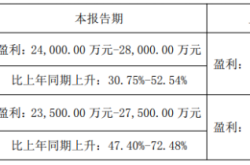

Doubao Mobile Assistant Stirs Up the Tech World! Permission Dispute Arises as AI Phones Seek Perfect Solution

12/05 2025

There's no stopping the march of technological progress.

Almost overnight, the talk around the Doubao Mobile Assistant turned into "Doubao Mobile, Hold On!"

Some time back, the Doubao team from ByteDance teamed up with ZTE to launch the Nubia M153. This phone came pre-installed with a technical preview version of the Doubao Mobile Assistant. On the M153, the Doubao team leveraged its GUI-Agent capabilities to craft a series of features that essentially "take over phone operations for the user." This offers a preview of what an AI phone can look like in 2025.

Image Source: Doubao Mobile Assistant

But trouble wasn't far behind. Some users reported that the Doubao Mobile Assistant triggered risk alerts in certain financial apps. These alerts pointed out that features like "screen sharing" and "accessibility" were active on the phone and cautioned users to be careful with their funds. Some apps even went as far as disabling relevant services due to these triggered risk controls.

Shortly after, WeChat clarified its stance, stating that it hadn't implemented any specific blocking of Doubao. The issues users faced were more likely a result of existing general risk control measures. Doubao promptly disabled the relevant operational features in those scenarios and initiated a process to unblock affected users. At the same time, they further elaborated on their permission invocation methods, data handling practices, and security boundaries, reiterating that there was no hacking or privacy breach.

When it came to the most contentious INJECT_EVENTS permission, Doubao didn't shy away from providing a direct response:

INJECT_EVENTS is indeed a system-level permission, and its technical implementation hinges on Android system-level permissions, which come with stricter usage constraints. With this permission, related products can simulate click events across different screens and applications to fulfill user tasks involving phone operations.

The Doubao Mobile Assistant requires users to actively grant this permission before it can be invoked to enable phone operation features. The use of this permission has also been clearly stated in the permission list. To the best of our knowledge, current AI assistants in the industry all need this permission (or a similar accessibility permission) to offer phone operation services.

From Leitech's vantage point, Doubao's explanation is both reasonable and well-founded. Their willingness to address key controversies head-on is also commendable. However, in Leitech's view, while this debate over AI phone assistant permissions was sparked by a risk control misstep, it highlights an inevitable challenge for the AI phone industry's development. Doubao, at the center of this storm, has simply brought to light a detail that requires industry-wide refinement ahead of schedule.

The Trilogy of AI Agents

To grasp the industry context behind this controversy, we must first understand how AI "interacts" with mobile apps. From a technical standpoint, "AI operation of apps" can be broken down into two key steps:

1. Enable AI to comprehend apps;

2. Enable AI to manipulate apps.

However, the Android system wasn't originally designed to allow "one intelligent agent to control another app." To enable AI to control other apps from a system level (rather than an app level), the mobile phone industry has proposed three distinct "AI operation" approaches.

The first approach relies on app accessibility labels and Android system accessibility services, creating a "simulated user" operation route. We know that modern smartphones all come equipped with accessibility services, such as text label services for the visually impaired. When developers create apps, they add "accessibility labels" to each button. When the phone's accessibility screen reading function is activated, the phone system reads these labels and announces the corresponding content, enabling visually impaired users to understand the function of the currently selected button.

Image Source: smartisan.com

By analyzing the internal label structure of an app, an AI Agent can decipher the software interface elements and understand the function of each button. Once it comprehends the app, the AI Agent can then use the simulated touch functionality of accessibility services (the same principle behind auto-clicker macro tools such as Anjian Jingling, or "Button Wizard") to operate the app autonomously.

However, we know that the domestic mobile internet landscape is in a constant state of flux, with mainstream apps introducing new features every few weeks. Accessibility labels are often the most neglected aspect of the development process. The harsh reality is that the visually impaired community has little say on the internet. This results in certain pages or buttons lacking labels entirely or having only meaningless labels like "button" or "window." Faced with such labels, even the most intelligent AI is at a loss.

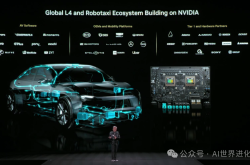

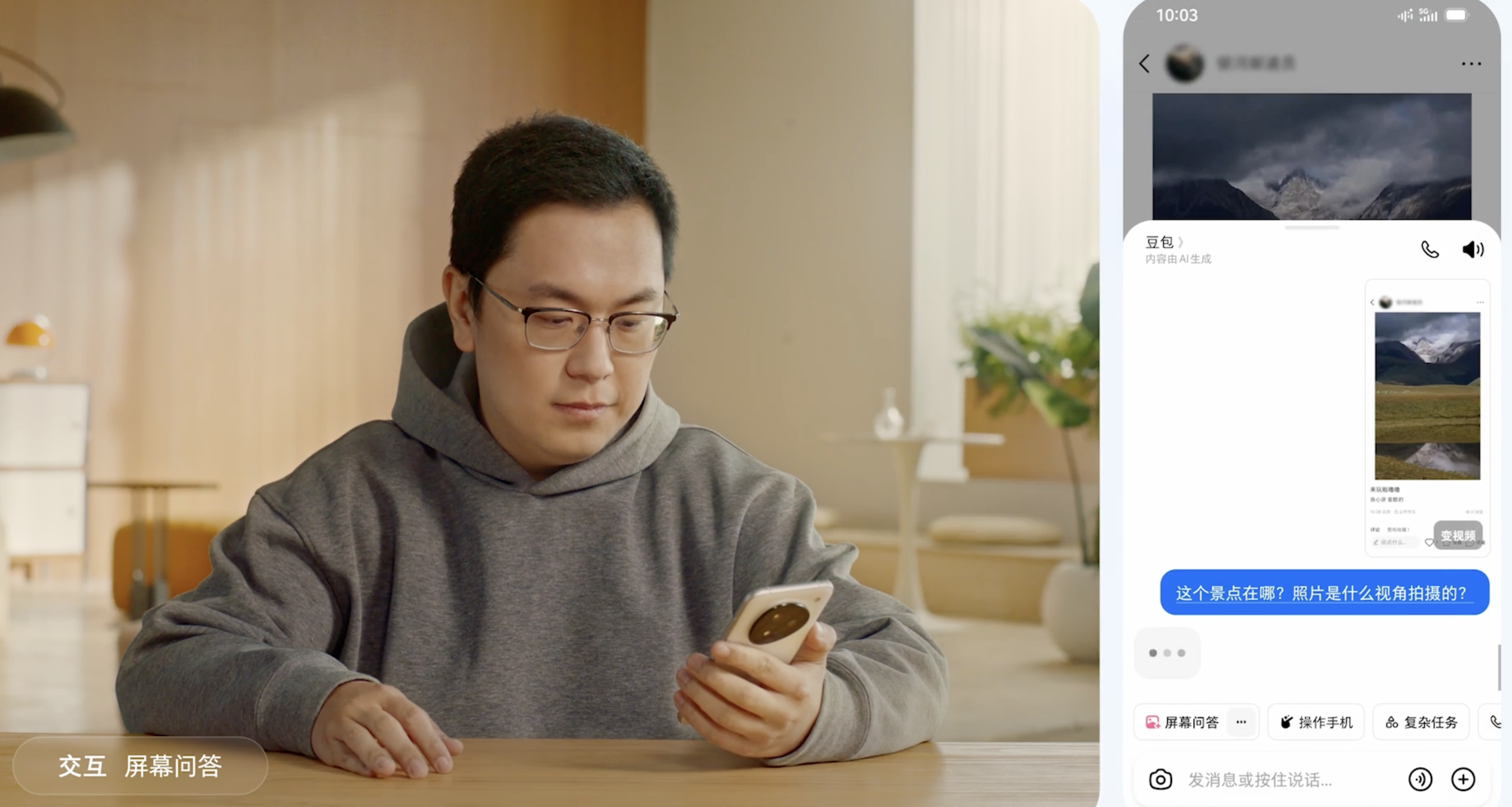
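The label-reading route described above, and the way it breaks down when labels are missing, can be sketched in a few lines. This is a minimal illustration, not Android's accessibility API: `UiNode`, `find_clickable`, and `unlabeled_ratio` are hypothetical names, and the two sample trees are made up.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Labels that carry no functional meaning (examples taken from the article text).
MEANINGLESS_LABELS = {"", "button", "window"}


@dataclass
class UiNode:
    """Hypothetical stand-in for one node in an app's accessibility tree."""
    label: str
    clickable: bool = False
    children: List["UiNode"] = field(default_factory=list)


def find_clickable(root: UiNode, keyword: str) -> Optional[UiNode]:
    """Depth-first search for a clickable node whose label mentions the keyword."""
    stack = [root]
    while stack:
        node = stack.pop()
        label = node.label.strip().lower()
        if node.clickable and label not in MEANINGLESS_LABELS and keyword.lower() in label:
            return node
        # Reverse so that children are visited in their on-screen order.
        stack.extend(reversed(node.children))
    return None


def unlabeled_ratio(root: UiNode) -> float:
    """Share of clickable nodes whose label is missing or meaningless."""
    total = bad = 0
    stack = [root]
    while stack:
        node = stack.pop()
        if node.clickable:
            total += 1
            if node.label.strip().lower() in MEANINGLESS_LABELS:
                bad += 1
        stack.extend(node.children)
    return bad / total if total else 0.0


# Made-up sample trees: one app with useful labels, one without.
well_labeled = UiNode("checkout page", children=[
    UiNode("Add to cart", clickable=True),
    UiNode("Pay now", clickable=True),
])
poorly_labeled = UiNode("window", children=[
    UiNode("button", clickable=True),
    UiNode("", clickable=True),
])
```

On the well-labeled tree, searching for "pay" finds the "Pay now" button. On the poorly labeled tree the same search returns nothing, which is the situation where a label-only agent has nothing to work with.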

Since poor accessibility support hinders AI from understanding the app's structure, why not let AI "directly view the screen" like humans do? This leads to the second approach of AI interaction: AI utilizes the screen capture capability provided by the system to obtain the current screen image in real-time and then employs a visual large model to decipher the functional meaning of each element in the image.

After comprehending the current screen content, the AI Agent uses either accessibility services (simulated clicks) or INJECT_EVENTS (system-level input event injection) to manipulate the app, completing the AI workflow. The controversy surrounding the Doubao Mobile Assistant centers on this INJECT_EVENTS permission.

Image Source: Doubao Mobile Assistant

As mentioned earlier, accessibility-based clicks are essentially "AI-assisted clicks," but accessibility cannot reliably cover all interaction methods, and many interfaces still require lower-level event injection. In this scenario, INJECT_EVENTS isn't "cracking the app" but rather employing a more fundamental, native interaction simulation method to enable AI to perform more comprehensive operations on any app. Given the current state of the Android system, the "Doubao approach" is also the only viable route in the Android ecosystem that allows AI to truly operate apps at this stage.
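The screen-capture route can be pictured as a loop: capture the screen, ask a vision model for the next action, then perform it. The sketch below is a simplified illustration under stated assumptions. None of the names come from Doubao or the Android SDK, the capture, model, and tap functions are stubs, and trying accessibility first with injection as a fallback is an assumption made for this example, not a documented design.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Action:
    """A tap at screen coordinates; a real agent would also need swipes and text input."""
    x: int
    y: int
    note: str


# Hypothetical interfaces, stubbed out in demo() below.
CaptureFn = Callable[[], bytes]                      # returns the current screenshot
DecideFn = Callable[[bytes, str], Optional[Action]]  # vision model: next action, or None when done
TapFn = Callable[[int, int], bool]                   # returns True if the tap was delivered


def run_agent(goal: str, capture: CaptureFn, decide: DecideFn,
              tap_accessibility: TapFn, tap_injected: TapFn,
              max_steps: int = 10) -> List[str]:
    """Observe, decide, act; fall back to event injection when accessibility fails."""
    log: List[str] = []
    for _ in range(max_steps):
        action = decide(capture(), goal)
        if action is None:
            log.append("done")
            break
        if tap_accessibility(action.x, action.y):
            log.append(f"accessibility: {action.note}")
        elif tap_injected(action.x, action.y):
            log.append(f"injected: {action.note}")
        else:
            log.append(f"failed: {action.note}")
            break
    return log


def demo() -> List[str]:
    """Run the loop against fake screens and a scripted 'model'."""
    screens = iter([b"home", b"menu", b"cart"])
    plan = {
        b"home": Action(100, 200, "open food app"),
        b"menu": Action(50, 400, "pick dish"),
        b"cart": None,
    }
    # Pretend the accessibility route cannot reach the menu screen.
    return run_agent(
        "order lunch",
        capture=lambda: next(screens),
        decide=lambda shot, goal: plan[shot],
        tap_accessibility=lambda x, y: (x, y) != (50, 400),
        tap_injected=lambda x, y: True,
    )
```

In the demo, the first tap goes through the accessibility stub and the second falls back to the injection stub, mirroring the point that accessibility alone does not cover every interface.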

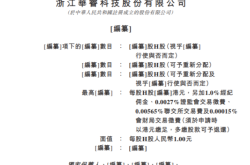

Ultimately, however, the two technical approaches mentioned earlier still essentially involve AI simulating human operations. A true AI phone should eliminate the inefficient graphical user interface (GUI) middle layer and allow AI to directly invoke the functional components of apps. Under this philosophy, the third approach—the MCP route—was born.

Readers who do not follow AI closely may not have encountered MCP (Model Context Protocol). Simply put, MCP is a standardized capability protocol that "aligns" functions between apps, turning app functionalities (components) into modules that an AI can invoke across apps.

For instance, if we encapsulate the food ordering function as a "capability component," when ordering food, the AI no longer needs to rely on graphics or text to understand the options in a merchant's menu. Instead, it can directly locate the "Longjiang Pork Knuckle Rice" option from the component backend and add it to the shopping cart, then invoke the MCP module for payment to complete the transaction seamlessly.
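The food-ordering example can be made concrete with a small in-process sketch. MCP messages are JSON-RPC 2.0, and a tool is invoked with the `tools/call` method carrying a tool `name` and `arguments`. Everything else here is an assumption for illustration: the tool names `add_to_cart` and `checkout`, the in-memory cart, and the `handle` dispatcher, which stands in for a real MCP server and skips transport, initialization, and tool listing.

```python
import json
from typing import Any, Callable, Dict, List

# Hypothetical capability components; a real app would expose its own tools.
CART: List[Dict[str, Any]] = []


def add_to_cart(item: str, quantity: int = 1) -> str:
    CART.append({"item": item, "quantity": quantity})
    return f"added {quantity} x {item}"


def checkout() -> str:
    count = sum(entry["quantity"] for entry in CART)
    return f"paid for {count} item(s)"


TOOLS: Dict[str, Callable[..., str]] = {
    "add_to_cart": add_to_cart,
    "checkout": checkout,
}


def handle(request_json: str) -> str:
    """Dispatch one JSON-RPC 2.0 request; only 'tools/call' is supported."""
    request = json.loads(request_json)
    base = {"jsonrpc": "2.0", "id": request.get("id")}
    params = request.get("params", {})
    if request.get("method") != "tools/call":
        base["error"] = {"code": -32601, "message": "Method not found"}
    elif params.get("name") not in TOOLS:
        base["error"] = {"code": -32602, "message": "Unknown tool"}
    else:
        text = TOOLS[params["name"]](**params.get("arguments", {}))
        base["result"] = {"content": [{"type": "text", "text": text}], "isError": False}
    return json.dumps(base, ensure_ascii=False)


def call(request_id: int, name: str, arguments: Dict[str, Any]) -> Dict[str, Any]:
    """Client-side helper: build a tools/call request and parse the response."""
    request = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }
    return json.loads(handle(json.dumps(request, ensure_ascii=False)))
```

The agent never looks at a menu screen here: it calls `add_to_cart` with the dish name and then `checkout`, and receives structured results instead of pixels.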

Why is the "Doubao Approach" More Advanced?

In Leitech's view, the "GUI-Agent + INJECT_EVENTS" solution adopted by Doubao is the most practical and comprehensive technical path for AI Agents at this stage. Unlike the first approach, which relies on reading accessibility labels, GUI-Agent can fully leverage the multimodal strengths of large models and benefits from the rapid iteration of domestic AI models.

Compared to the MCP route, the "Doubao Approach" doesn't require waiting for third-party apps to support MCP. After all, MCP allows AI to bypass the app's graphical interface, which is equivalent to asking apps to abandon their own traffic entry points. Even though we all recognize that the MCP solution will inevitably become mainstream, the transition from GUI to MCP is destined to be a lengthy process. It's certain that a significant number of apps will maintain their traditional forms for a considerable period, and GUI-Agent will remain indispensable.

Furthermore, although Doubao's GUI-Agent is seen as a "transitional solution," it has also laid the groundwork for the MCP era in advance. No matter how mature standard protocols become in the future, for AI to reliably complete tasks, it must first learn to operate in real app environments. The operational paths and data transfer algorithms can only be refined through GUI operations, not gleaned from API documentation.

It can be said that the extensive experience accumulated by Doubao through GUI-Agent will undoubtedly position it as a leader in the MCP era.

Is MCP the Optimal Solution for AI Phones?

Of course, as noted earlier, the MCP ecosystem is still in its infancy, and GUI-Agent remains the mainstream solution for AI phones. But just as touchscreen phones replaced button phones with richer interaction and USB-C unified a tangle of connectors, the MCP solution, with its superior experience and greater potential, will eventually supplant the GUI-Agent solution and become the "default route" of the AI era.

With the arrival of the "MCP era," the linear relationship between AI phones and apps will also undergo a transformation: Apps will directly expose structured capability components to AI, and the system can perform unified permission management for each invocation, actually enhancing security compared to the current "screen capture + GUI Agent + simulated clicks" approach.

Image Source: Doubao Mobile Assistant

At the same time, the openness of MCP also enables cross-app AI collaboration. Currently, interactions between different apps still rely on workarounds such as link jumps and passing data through the clipboard. In the MCP era, however, AI can invoke the capabilities of different apps within the same context window, achieving true "workflow integration."
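A rough way to picture "unified permission management for each invocation" together with cross-app collaboration is a system-side broker that checks a user grant before every capability call and passes one shared context between apps. The sketch below is purely illustrative: `CapabilityBroker`, the `maps` and `rides` apps, and their capabilities are hypothetical and do not describe any shipping system.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set, Tuple

Key = Tuple[str, str]  # (app, capability)


@dataclass
class CapabilityBroker:
    """Hypothetical broker: every capability call passes one permission check."""
    granted: Set[Key] = field(default_factory=set)
    capabilities: Dict[Key, Callable[[dict], dict]] = field(default_factory=dict)
    audit_log: List[str] = field(default_factory=list)

    def register(self, app: str, capability: str, fn: Callable[[dict], dict]) -> None:
        self.capabilities[(app, capability)] = fn

    def grant(self, app: str, capability: str) -> None:
        self.granted.add((app, capability))

    def invoke(self, app: str, capability: str, context: dict) -> dict:
        key = (app, capability)
        if key not in self.capabilities:
            raise KeyError(f"unknown capability {app}.{capability}")
        if key not in self.granted:
            self.audit_log.append(f"DENIED {app}.{capability}")
            raise PermissionError(f"user has not granted {app}.{capability}")
        self.audit_log.append(f"ALLOWED {app}.{capability}")
        return self.capabilities[key](context)


def build_demo_broker() -> CapabilityBroker:
    """Two made-up apps that share data through the context, not the clipboard."""
    broker = CapabilityBroker()
    broker.register("maps", "find_place",
                    lambda ctx: {**ctx, "address": "1 Example Road"})
    broker.register("rides", "book_ride",
                    lambda ctx: {**ctx, "ride": f"car to {ctx['address']}"})
    return broker
```

With only `maps.find_place` granted, a call to `rides.book_ride` raises `PermissionError` and leaves a `DENIED` entry in the audit log. Once the user grants it, the ride app reads the address that the maps app wrote into the shared context.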

On December 4th, Luo Yonghao took to Weibo to state that "there's no stopping the march of technological progress" and also lauded ByteDance's stride forward in the GUI-Agent route: "AI assistants will undoubtedly become ubiquitous, and our lives will become completely inseparable from them. Future generations will remember this historic day."

Image Source: Weibo

Given the current landscape, the Doubao Mobile Assistant has already given us a "preview" of what future AI phones will look like. As we approach 2026, the AI phone industry will undoubtedly ramp up its investment in the GUI-Agent track, using real market demand to drive the MCP transformation process of the app ecosystem.

From this perspective, the Doubao Mobile Assistant is the key to unlocking the golden age of AI phones.


Source: Leitech

Images in this article are from the 123RF Authentic Library.