DeepSeek's Latest Experimental Model V3.2-Exp: Introducing Sparse Attention Mechanism for Enhanced Inference Efficiency

11/17 2025

DeepSeek has open-sourced its latest experimental model, V3.2-Exp.

Building upon the V3.1-Terminus model, V3.2-Exp incorporates the DeepSeek sparse attention mechanism, thereby enhancing both training and inference efficiency in scenarios involving long contexts.

The team remains dedicated to researching the Transformer architecture, with a particular emphasis on boosting computational efficiency when handling lengthy text sequences.

To that end, DeepSeek introduces DeepSeek Sparse Attention (DSA), which the team says achieves fine-grained sparse attention for the first time, improving training and inference efficiency in long-context scenarios while preserving the quality of model outputs.

To assess the impact of DSA, the team deliberately configured the training of DeepSeek-V3.2-Exp to mirror that of V3.1-Terminus. The findings reveal that DeepSeek-V3.2-Exp performs on par with V3.1-Terminus.

DeepSeek has also released the technical report for V3.2-Exp. Let's explore the details further.

Continued pre-training begins with a dense warm-up phase: the model retains its dense attention mechanism, and all parameters are frozen except those of the lightning indexer.

The indexer undergoes 1,000 training steps, with each step involving 16 sequences of 128K tokens, culminating in a total of 2.1 billion tokens processed.
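The token total above can be checked with a few lines of arithmetic. This is a minimal sketch that assumes "128K" means 128 × 1024 = 131,072 tokens per sequence; the article does not spell that out, and the function name is illustrative only.

```python
# Sanity check of the warm-up token budget quoted above.
# Assumption: "128K" is read as 128 * 1024 tokens per sequence.
STEPS = 1_000
SEQUENCES_PER_STEP = 16
TOKENS_PER_SEQUENCE = 128 * 1024


def warmup_tokens(steps=STEPS, seqs=SEQUENCES_PER_STEP, seq_len=TOKENS_PER_SEQUENCE):
    """Total number of tokens processed during the indexer warm-up."""
    return steps * seqs * seq_len


total = warmup_tokens()
print(f"{total:,} tokens (~{total / 1e9:.1f} billion)")
# prints: 2,097,152,000 tokens (~2.1 billion)
```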

Following the indexer warm-up, a fine-grained token selection mechanism is introduced, and all model parameters are fine-tuned to align the model with the sparse pattern of DSA.
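The idea of selecting tokens from indexer scores and attending only to the selected ones can be sketched in plain Python. This is an illustration under stated assumptions, not DeepSeek's implementation: the index scores are passed in as ready-made numbers (the article does not describe how the lightning indexer computes them), the attention itself is ordinary scaled dot-product attention, and all function names are hypothetical.

```python
import math


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]


def select_top_k(index_scores, k):
    """Positions of the k highest index scores, returned in ascending order."""
    ranked = sorted(range(len(index_scores)),
                    key=lambda i: index_scores[i], reverse=True)
    return sorted(ranked[:k])


def sparse_attention(query, keys, values, index_scores, k):
    """Attend only over the k keys picked by the (hypothetical) index scores."""
    chosen = select_top_k(index_scores, k)
    scale = 1.0 / math.sqrt(len(query))
    weights = softmax([dot(query, keys[i]) * scale for i in chosen])
    dim = len(values[0])
    output = [sum(w * values[i][d] for w, i in zip(weights, chosen))
              for d in range(dim)]
    return chosen, output


# Toy example: four cached tokens, of which only two are kept per query.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]]
values = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0], [3.0, 3.0]]
query = [1.0, 0.0]
index_scores = [0.9, 0.1, 0.8, 0.2]  # made-up scores standing in for the indexer

chosen, out = sparse_attention(query, keys, values, index_scores, k=2)
print(chosen, out)
```

In this toy setup, setting `k` to the number of cached tokens recovers ordinary dense attention, which is one way to read the move from the dense warm-up to the sparse stage.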

Notably, the team detaches the indexer's input from the computational graph so that the indexer can be optimized separately from the main model.

The post-training phase uses the same sparse attention mechanism as the sparse continued pre-training phase. To rigorously evaluate the impact of DSA, DeepSeek-V3.2-Exp adheres to the same post-training procedures, algorithms, and data as DeepSeek-V3.1-Terminus.

For each task, a specialized model tailored to the specific domain is developed, with all expert models fine-tuned from the same pre-trained DeepSeek-V3.2 base checkpoint.

In addition to writing tasks and general question answering, these specialized models cover five domains: mathematics, competitive programming, general logical reasoning, agentic coding, and agentic search.

Each specialized model undergoes extensive reinforcement learning (RL) training, and different models are used to generate training data for long chain-of-thought reasoning and for direct response generation. Experimental results show that models trained on the distilled data perform only marginally worse than the domain-specific expert models, and that this gap can be closed through subsequent RL training.

DeepSeek-V3.2-Exp continues to utilize GRPO as its reinforcement learning training algorithm. Unlike previous DeepSeek models that underwent multi-stage reinforcement learning, V3.2-Exp consolidates reasoning, agent, and human alignment training into a single reinforcement learning phase. This strategy effectively balances performance across various domains while mitigating the catastrophic forgetting issues often associated with multi-stage training.
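GRPO scores each sampled response relative to the other responses drawn for the same prompt, rather than relying on a separate value model. The sketch below covers only that group-relative advantage step; it omits the clipped policy objective and the KL term, uses the population standard deviation as an assumption, and is not DeepSeek's training code. The function name and the `eps` constant are illustrative.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each reward against the mean and spread of its own group.

    `rewards` holds the scalar rewards of all responses sampled for one prompt.
    Assumption: population standard deviation; `eps` avoids division by zero
    when every response in the group receives the same reward.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Four sampled answers to one prompt: two judged correct, two incorrect.
advantages = group_relative_advantages([1.0, 0.0, 0.0, 1.0])
print(advantages)  # correct answers get positive advantages, incorrect ones negative
```

When every response in a group earns the same reward, all advantages are zero, so that prompt contributes no learning signal in this simplified form.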

Rewards fall into two categories: agent tasks use rule-based outcome rewards, length penalties, and language consistency rewards, while general tasks use a generative reward model in which each prompt has its own evaluation criteria. The reward design balances two trade-offs:

Length versus accuracy;

Language consistency versus accuracy.
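To make the two trade-offs concrete, the sketch below combines an outcome reward, a length penalty, and a language consistency term into one scalar. Everything about it is hypothetical: the article does not give functional forms or weights, so the linear penalty, the token budget, the weights, and all function names are assumptions chosen only for illustration.

```python
def length_penalty(num_tokens, budget, weight):
    """Hypothetical penalty that grows linearly once the token budget is exceeded."""
    overflow = max(0, num_tokens - budget)
    return weight * overflow / budget


def language_consistency(segment_langs, target_lang):
    """Fraction of response segments written in the target language."""
    if not segment_langs:
        return 0.0
    matches = sum(1 for lang in segment_langs if lang == target_lang)
    return matches / len(segment_langs)


def combined_reward(correct, num_tokens, segment_langs, target_lang,
                    budget=1000, length_weight=0.5, language_weight=0.2):
    """Rule-based outcome reward adjusted by length and language terms.

    All weights and the budget are made-up values, not figures from the report.
    """
    outcome = 1.0 if correct else 0.0
    return (outcome
            - length_penalty(num_tokens, budget, length_weight)
            + language_weight * language_consistency(segment_langs, target_lang))


concise = combined_reward(True, 800, ["en", "en"], "en")
rambling = combined_reward(True, 1500, ["en", "zh"], "en")
print(concise, rambling)
```

With these made-up weights, a correct but over-long, mixed-language answer scores lower than a correct, concise, single-language one. Raising either weight pushes the policy toward shorter or more consistent outputs at a possible cost in accuracy, which is the balance the reward design has to strike.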

In benchmark tests, DeepSeek-V3.2-Exp shows significantly improved computational efficiency on long sequences while exhibiting no notable decline in performance relative to DeepSeek-V3.1-Terminus on either short-context or long-context tasks.

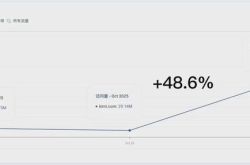

Over the course of training, the performance of both V3.2-Exp and V3.1-Terminus on BrowseComp and SWE-bench Verified improves steadily, and the two curves track each other closely, which reflects the training stability of DSA.

DSA achieves substantial end-to-end acceleration in long-context scenarios, requiring significantly less computation than MLA in DeepSeek-V3.1-Terminus.
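A rough way to see where the savings come from is to count the query-key pairs that the main attention has to process. The sketch below compares causal dense attention, where each query sees every earlier token, with a top-k scheme where each query sees at most `k` tokens. The value `k = 2048` is used purely as an illustrative setting, and the count ignores the indexer's own cost and everything outside attention, so it is not a prediction of wall-clock speedup.

```python
def dense_pairs(seq_len):
    """Query-key pairs under causal dense attention: 1 + 2 + ... + seq_len."""
    return seq_len * (seq_len + 1) // 2


def sparse_pairs(seq_len, k):
    """Query-key pairs when each query attends to at most k earlier tokens."""
    full = min(seq_len, k)
    # The first `full` queries still see their whole prefix;
    # every later query is capped at exactly k selected tokens.
    return full * (full + 1) // 2 + (seq_len - full) * k


SEQ_LEN = 128 * 1024  # assumption: "128K" read as 131,072 tokens
TOP_K = 2048          # illustrative selection size, treated here as an assumption

dense = dense_pairs(SEQ_LEN)
sparse = sparse_pairs(SEQ_LEN, TOP_K)
print(f"dense:  {dense:,} pairs")
print(f"sparse: {sparse:,} pairs")
print(f"ratio:  {dense / sparse:.1f}x fewer pairs in the main attention")
```

The dense count grows quadratically with sequence length while the capped count grows linearly once the sequence is longer than `k`, which is why the gap widens as contexts get longer.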

The DeepSeek team reports that they are actively conducting further large-scale testing in real-world scenarios to identify any potential limitations of the sparse attention architecture.

For more in-depth technical details, please refer to the report at: https://github.com/deepseek-ai/DeepSeek-V3.2-Exp/blob/main/DeepSeek_V3_2.pdf