H200 Approval: A ‘Piercing Arrow’ for NVIDIA on the Path to a $6 Trillion Market Cap?

12/10 2025

After a period of rumors (including media reports that NVIDIA CEO Jensen Huang recently debated White House conservatives and stated that China might not allow the sale of the H200), U.S. President Trump has made a high-profile announcement: the U.S. government will permit NVIDIA to sell H200 chips to China, and similarly, NVIDIA's competitors can also sell chips with specifications equivalent to the H200 to China.

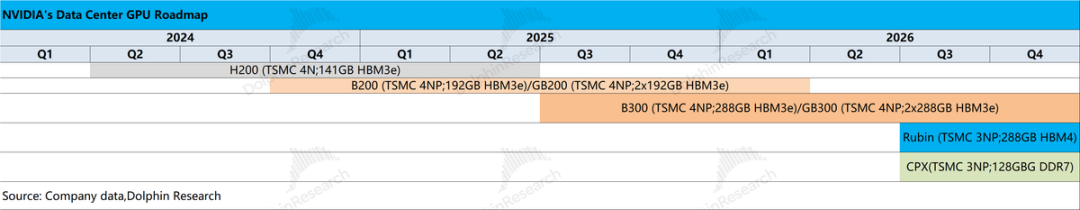

Of course, the latest architecture, Blackwell, and the next-generation Rubin are not included on the list. Moreover, the U.S. government will take a 25% cut from each sale.

NVIDIA's stock has stalled for much of the fourth quarter amid concerns over supply chain financing, the rise of ASICs, the collapse of its China business, and an AI investment bubble.

In its recent analysis of power dynamics in the AI supply chain, "AI Bubble 'Original Sin': Is NVIDIA the Addictive 'Golden Pill' of AI?", Dolphin Research noted that:

Under the weight of a $4-5 trillion market cap, NVIDIA keeps shipping chips, propping up half of the U.S. stock market and selling itself as the 'black gold' of the global AI infrastructure era.

A key part of this mission is spreading NVIDIA's technology worldwide; Trump, for example, brought GPU vendors along to sign deals in the Middle East and elsewhere. Meanwhile, however, NVIDIA's market share in China plunged from 95% to zero, prompting Jensen Huang to remark pessimistically that the U.S. could lose the AI war.

Six months ago, the U.S. government was reluctant to approve sales of even the H20; now it is allowing sales of the H200 and chips of the same class. This raises three questions:

1) What changed in the past six months?

2) Will the approval of the H200 lead to unimpeded sales?

3) How much elasticity can regaining the Chinese market bring to NVIDIA?

Here is a detailed analysis.

I. What Changed in the Past Six Months?

As is well known, while U.S. companies were using the H200, China received a cut-down version, the H20. As negotiations progressed and U.S. companies moved on to the new Blackwell series, Chinese companies lost access even to the H20. Although the U.S. government later lifted those restrictions unilaterally, Chinese domestic policy then blocked the sales again.

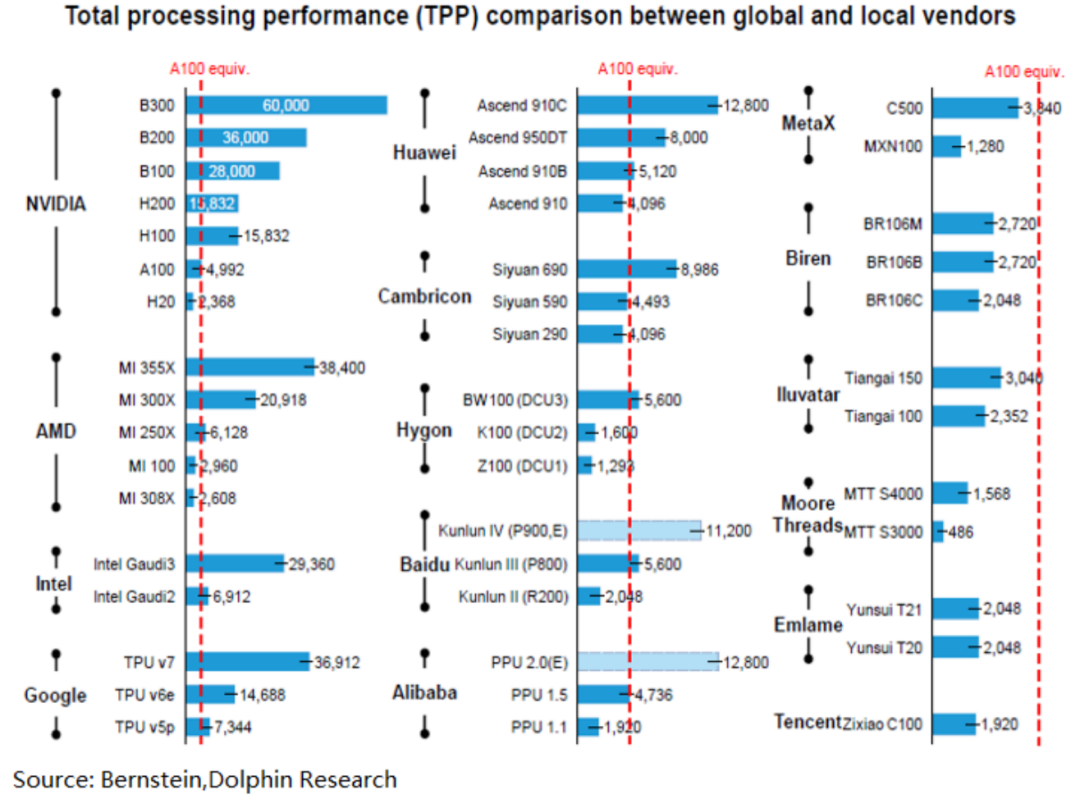

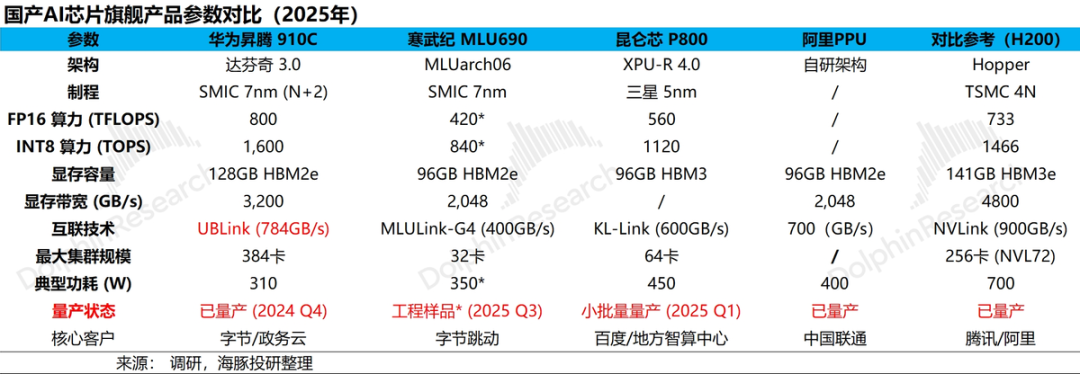

Behind China's decision lies the fact that the H20 offers no significant edge over domestic alternatives in 'hard' specifications. Setting aside the CUDA ecosystem and large-scale supply, it falls short of domestic players such as Huawei in computing power, memory, interconnect bandwidth, and clustering capability.

The U.S. has traditionally restricted high-end products in negotiations and, once Chinese domestic production ramps up, released the previously restricted products at bargain prices. This latest move appears to follow the same pattern, reflecting both the rapid progress of China's AI industry and a negotiating stance that adjusts to industrial developments.

II. Will the H200 Be a 'Competent Competitor' in 2026?

Although the latest Blackwell series was not approved this time, the H200 still offers six times the performance of the previous H20 and is currently in service at major overseas companies.

Less than two years have passed since the H200 entered mass production in Q2 2024. With major companies typically depreciating such hardware over 4-6 years, H200s remain in regular service.

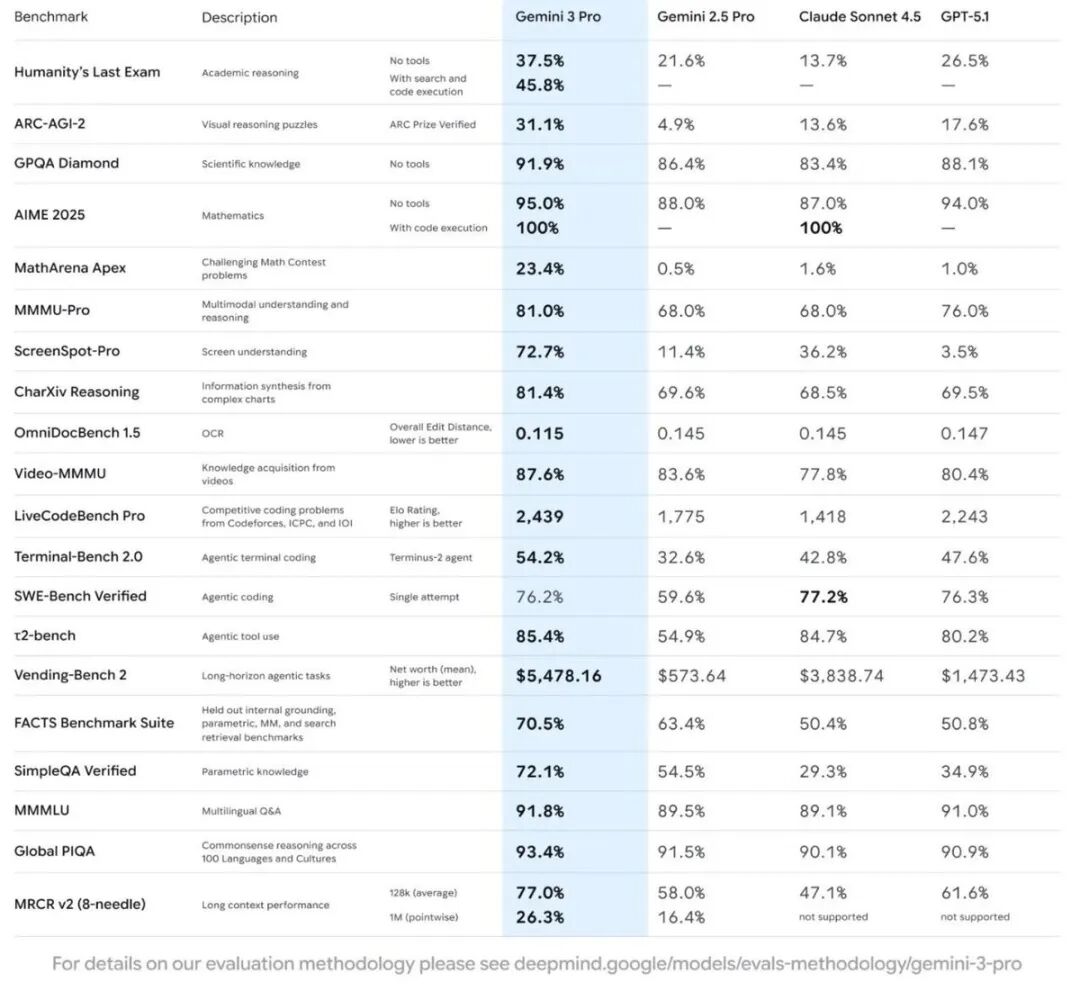

Leading overseas large models are currently trained primarily on H100s (the H200 differs from the H100 mainly in its upgraded HBM), and no models have yet been pre-trained on Blackwell. Deployed Blackwell chips are used mainly for inference and to accelerate fine-tuning, while the next generation of overseas large models is expected to be trained on Blackwell-based systems.

In other words, the H200 now cleared for export sits a full generation behind Blackwell, the training chip for upcoming U.S. models (NVIDIA typically releases a new generation every two years, and Blackwell needed a longer ramp-up because it is delivered as a system-level solution).

Compared to current Chinese counterparts, the H200 still holds a significant computing power advantage. Apart from Huawei, most other domestic players have not yet resolved mass production issues.

The core weakness of domestic accelerators remains training: there is still no truly usable domestic training card. The H200, by contrast, can be used to build FP8 training supercomputers on par with those of U.S. counterparts, accelerating large model development (e.g., GPT-4-level MoE models) and industrial applications (e.g., intelligent agents, cloud services).

FP8 is currently the mainstream training/inference format, with FP4 being explored by only a few companies (e.g., OpenAI). The H200 does not natively support FP4, but it can run FP4 models through 'FP4 storage + FP8 computation,' narrowing the gap with Blackwell.
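The 'FP4 storage + FP8 computation' idea can be illustrated with a toy Python sketch: weights are stored as 4-bit FP4 (E2M1) codes with one shared scale per block, then expanded back to higher precision before the multiply-accumulate. This is a simplified illustration, not NVIDIA's implementation. The per-block scale rule is an assumption, the function names are hypothetical, and ordinary Python floats stand in for the FP8 compute step, so FP8 rounding is not modeled.

```python
# FP4 E2M1 magnitudes, ordered so that the list index equals the 3-bit E2M1 encoding.
FP4_E2M1_MAGNITUDES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]


def quantize_fp4_block(weights):
    """Store a block of weights as 4-bit codes (1 sign bit + 3 magnitude bits) plus one scale."""
    max_abs = max(abs(w) for w in weights)
    # Hypothetical scale rule: map the largest weight onto FP4's largest magnitude (6.0).
    scale = max_abs / 6.0 if max_abs > 0 else 1.0
    codes = []
    for w in weights:
        target = abs(w) / scale
        # Round to the nearest representable FP4 magnitude.
        idx = min(range(len(FP4_E2M1_MAGNITUDES)),
                  key=lambda i: abs(FP4_E2M1_MAGNITUDES[i] - target))
        sign_bit = 1 if w < 0 else 0
        codes.append((sign_bit << 3) | idx)
    return codes, scale


def dequantize_fp4_block(codes, scale):
    """Expand 4-bit codes back to ordinary floats before computing."""
    out = []
    for c in codes:
        mag = FP4_E2M1_MAGNITUDES[c & 0b0111] * scale
        out.append(-mag if c & 0b1000 else mag)
    return out


def fp4_weight_dot(codes, scale, activations):
    """'FP4 storage + higher-precision compute': dequantize, then multiply-accumulate.
    Python floats stand in for FP8 here; FP8 rounding is not modeled."""
    weights = dequantize_fp4_block(codes, scale)
    return sum(w * a for w, a in zip(weights, activations))


codes, scale = quantize_fp4_block([0.4, -1.2, 2.4, 0.0])
print(codes)  # [2, 13, 7, 0]
print(round(fp4_weight_dot(codes, scale, [1.0, 1.0, 1.0, 1.0]), 6))  # 1.6
```

Only the 4-bit codes and one scale per block need to sit in memory, which is where the storage saving comes from; the arithmetic itself still runs at whatever higher precision the hardware natively supports.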

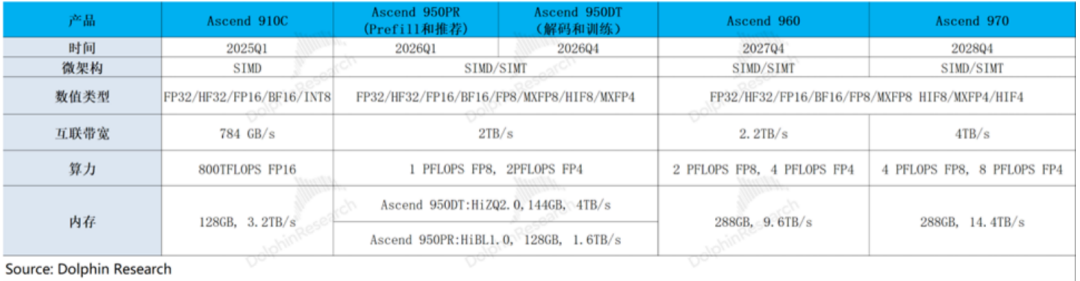

In terms of roadmap evolution, Huawei's next-generation 950 chip (suitable for FP8) will be the first domestic chip usable for training, with strong memory configurations, inter-card interconnectivity, and clustering capabilities.

Even if the 950 matches the H200 in total processing performance (TPP) a year from now, a domestic chip that fully surpasses the H200 will likely have to wait for Huawei's Ascend 960 series in Q4 2027.
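For reference, TPP is the metric used in U.S. export-control rules, defined as 2 × MacTOPS × the bit length of the operation. Because one multiply-accumulate counts as two operations, this reduces to peak dense TOPS × bit length. The sketch below computes it. The H200 inputs (about 1,979 dense FP8 TFLOPS and 989.5 dense BF16 TFLOPS) are assumed figures, taken as half of NVIDIA's with-sparsity datasheet numbers, and should be checked against the official spec; taking the maximum across precisions is likewise an assumption about how the rating is applied. Function names are hypothetical.

```python
def tpp(dense_tops: float, bit_length: int) -> float:
    """Total processing performance: 2 x MacTOPS x bit length of the operation."""
    mac_tops = dense_tops / 2  # one multiply-accumulate = two operations
    return 2 * mac_tops * bit_length


def chip_tpp(peaks: dict[str, tuple[float, int]]) -> float:
    """Highest TPP across the precisions a chip supports (assumed rating rule)."""
    return max(tpp(tops, bits) for tops, bits in peaks.values())


# Assumed dense (non-sparse) peak figures for the H200; verify against NVIDIA's datasheet.
H200_PEAKS = {"fp8": (1979.0, 8), "bf16": (989.5, 16)}

print(chip_tpp(H200_PEAKS))  # 15832.0
```

The same function could score a domestic chip once its dense peak figures are published; no such figures are assumed here.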

In other words, if Huawei sticks to its roadmap, NVIDIA's H200 will lead domestic AI chips by about two years. Were the gap a year or less, the H200 could meet the same 'warm welcome, cold shoulder' fate as the H20 after the U.S. approved its sale.

A two-year hardware gap is significant given that downstream models now iterate roughly every six months; it has already led some leading Chinese model developers to train overseas and then bring the models back.

III. H200: How Long Will It Sell in China, and Where Will It Be Used?

At the current pace, the H200 appears to have a sales window of one and a half to two years in China. But can NVIDIA rely on it to regain a 95-100% share of the Chinese market?

Dolphin Research is deeply skeptical about this.

First, inference workloads have less stringent performance requirements, and Chinese buyers are highly cost-sensitive given pressures on the end-user side. In training, by contrast, viable domestic alternatives are still relatively 'scarce.'

Second, from a policy perspective, Chinese cloud vendors must devote a significant portion of their capital expenditure to domestic suppliers, so that the domestic chip industry has enough momentum to fund upstream foundry capex and chip R&D.

Third, AI software and hardware are iterating rapidly, and the pace of iteration will become a new bargaining chip at the negotiating table. Given the uncertainty over future technical standards, and the back-and-forth from the H20 to the H200, computing power autonomy and full-stack in-house development will remain a standing priority for domestic cloud giants.

Taking these three points together, Dolphin Research estimates that NVIDIA's H200 is positioned for a 1-2 year sales window aimed at 'training' in China's domestic computing power build-out, while inference, by choice or by necessity, will likely run on domestic chips.

In practice, H200 purchases in China are likely to go through an approval system, under which Chinese cloud vendors must state their intended use cases and explain why the H200 is essential.

IV. Why Is NVIDIA 'Dependent' on China?

Despite its immense success, NVIDIA remains anxious as it enters 2026:

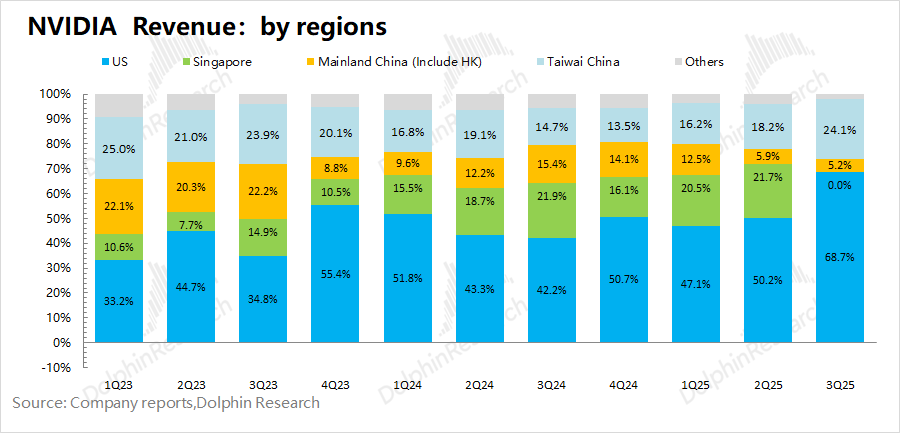

a) The Chinese market has been largely lost: NVIDIA could originally sell H20 chips in China, but since the ban, China's share of NVIDIA's revenue, once about 20%, has fallen to around 5% in recent financials.

Given NVIDIA's disclosure on its post-earnings call that H20 revenue was only about $50 million, most of last quarter's China revenue came from non-AI products such as PC and gaming chips.

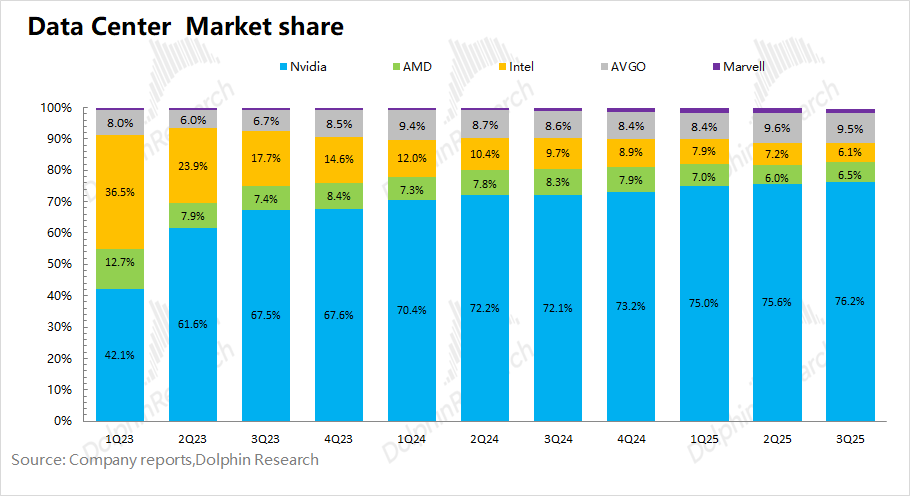

b) Potential threats from the 'Google Gemini + Broadcom' alliance:

Faced with NVIDIA's steep monopoly premium, large CSPs such as Google and Amazon have started developing their own chips. Driven in particular by Google's continued TPU procurement, Broadcom has become the 'number two' in the AI chip market, with around a 10% share.

Meanwhile, Gemini and Anthropic's models have shown strong performance; the former does not use NVIDIA products at all, and the latter uses them only in part. This means NVIDIA hardware is not a 'must-have' for large models, and the 'Google Gemini + Broadcom' alliance offers an alternative path.

Although Google's TPUs are currently mainly for internal use, recent market reports suggest Google is negotiating an external supply deal with Meta. If TPUs become available externally, they will pose a more direct threat to NVIDIA's competitiveness.

For NVIDIA, AI chip revenue from China has collapsed to nearly zero since the H20 ban, and Google's TPUs now threaten its gross margin and orders. NVIDIA trades at only about 22x next fiscal year's net profit, well below Broadcom's 40x, reflecting the market's view that NVIDIA's scarcity value is fading as the era of cost-effective computing power arrives.

Hence Jensen Huang's eagerness to re-enter the Chinese market, even though NVIDIA must hand 25% of H200 sales to the U.S. government, which will dilute its overall gross margin of about 75%: assuming an original H200 gross margin of 65%, the margin after the 25% share would be around 40%.
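The 40% figure follows from treating the 25% share as an additional cost on each dollar of H200 sales: 1 - 0.35 (cost of goods) - 0.25 (government share) = 0.40. A minimal sketch with hypothetical function names; the second function shows how the reported margin would differ if the share were instead netted out of revenue, an accounting treatment the article does not specify.

```python
def margin_on_gross_price(gross_margin: float, revenue_share: float) -> float:
    """Margin measured against the full sale price, treating the share as an extra cost."""
    cost = 1.0 - gross_margin          # cost of goods as a fraction of the sale price
    return 1.0 - cost - revenue_share  # what is left after cost and the government share


def margin_on_net_revenue(gross_margin: float, revenue_share: float) -> float:
    """Margin if the share is instead netted out of revenue before computing the margin."""
    return margin_on_gross_price(gross_margin, revenue_share) / (1.0 - revenue_share)


print(round(margin_on_gross_price(0.65, 0.25), 3))  # 0.4
print(round(margin_on_net_revenue(0.65, 0.25), 3))  # 0.533
```

The article's 40% corresponds to the first treatment; under the second, the same economics would show up as roughly 53% on a smaller revenue base.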

Since the company's previous outlook did not consider AI chip revenue from the Chinese market, if the Chinese government approves the sale, the H200 will contribute incremental revenue in the short term.

V. Is Regaining the Chinese Market NVIDIA's Trump Card for a $6 Trillion Market Cap?

In conclusion, regaining market access will bring incremental revenue, but because the H200 is aimed squarely at China's 'two-year training computing power gap,' both the scale and the durability of that revenue will be limited.

Here is Dolphin Research's analysis of the incremental revenue NVIDIA can regain by re-entering the Chinese market and how it will affect valuations. The answer is clear: regaining the Chinese market is not NVIDIA's trump card for a $6 trillion market cap.

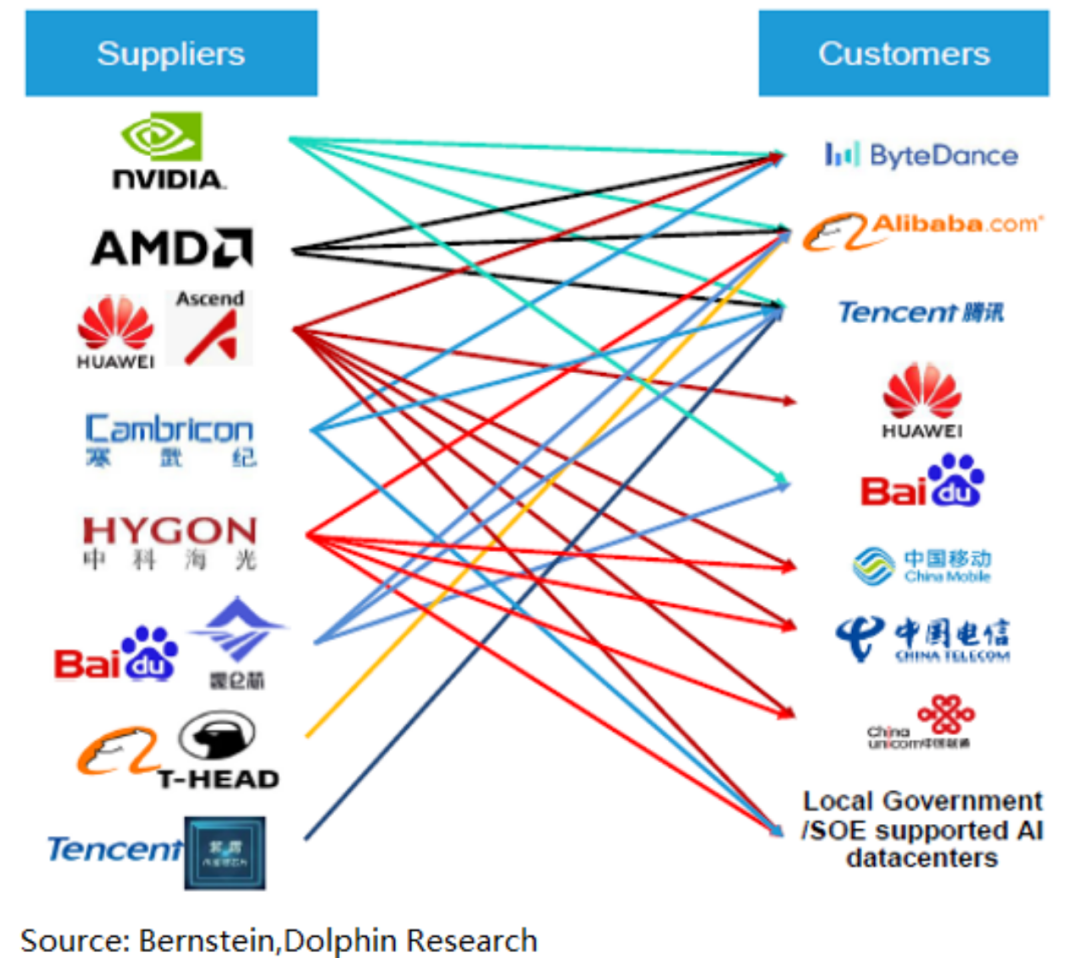

First, Dolphin Research categorizes direct customers needing AI chips in China into three groups: internet giants (ByteDance, Tencent, Alibaba, Baidu), Huawei Cloud, and operators/government-enterprise clouds (China Mobile, China Unicom, China Telecom, etc.).

a) Internet giants: Primarily adopt a 'NVIDIA/AMD + domestic computing power + self-developed computing power' approach and will be the main potential buyers if the H200 is approved.

b) Huawei Cloud: Relies entirely on its own 'Huawei computing power' ecosystem, a self-sufficient model.

c) Operators/government-enterprise clouds: Entirely rely on domestic computing power from Huawei and others and will not adopt overseas computing power chips. Approval of the H200 will not drive procurement demand.

Moreover, the potential demand unlocked by the H200's approval stems mainly from model training: the cloud service providers likely to buy (ByteDance, Alibaba, Tencent, Baidu) would do so primarily for training rather than inference. Yet in current computing power usage, the token consumption ratio of inference to training is already 35:65 and is shifting steadily toward inference.

H200 sales will therefore depend on NVIDIA's share of the cloud giants' capex over the next two years. Dolphin Research's reasoning is as follows: based on the capital expenditure plans and market conditions of domestic cloud service providers, it expects total capex by Chinese cloud vendors to reach $123 billion in 2026, up 40% year over year.

Around 30% of China's AI capital expenditures are allocated to the purchase of AI chips, leading to an estimated AI chip market size of approximately USD 38.1 billion next year. In the 2025 Chinese AI chip market, NVIDIA is projected to hold around a 40% share (covering the majority of training demands and some inference demands).

Because domestic training chips still lag behind the H200, if NVIDIA secures a 35% share of China's 2026 market (i.e., 35% of major firms' AI chip procurement spending), the H200's approval could contribute approximately USD 13.3 billion in incremental revenue in NVIDIA's next fiscal year. Since NVIDIA's previous guidance excluded revenue from China, this would be a pure addition to the company's AI revenue.
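The sizing chain can be reproduced in a few lines. Inputs are Dolphin Research's estimates; the function names are hypothetical. One detail worth noting: exactly 30% of $123 billion is about $36.9 billion, so the $38.1 billion market size implies a chip share of roughly 31%, consistent with 'around 30%'.

```python
def china_ai_chip_market(capex_bn: float, chip_share: float) -> float:
    """AI chip spend = cloud capex x share of capex going to AI chips (USD billions)."""
    return capex_bn * chip_share


def nvidia_china_revenue(market_bn: float, nvidia_share: float) -> float:
    """NVIDIA's slice of that spend at an assumed market share (USD billions)."""
    return market_bn * nvidia_share


capex_2026 = 123.0  # Dolphin Research's 2026 capex estimate for Chinese cloud vendors

print(round(capex_2026 / 1.4, 1))                        # implied 2025 capex at +40% YoY: 87.9
print(round(china_ai_chip_market(capex_2026, 0.30), 1))  # market at exactly 30%: 36.9
print(round(38.1 / capex_2026, 3))                       # chip share implied by $38.1B: 0.31
print(round(nvidia_china_revenue(38.1, 0.35), 1))        # NVIDIA at a 35% share: 13.3
```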

Assuming a 65% gross margin for the H200, the U.S. government's 25% share of sales leaves an effective gross margin of about 40%. After NVIDIA's operating expense ratio of around 10%, the USD 13.3 billion in H200 revenue would contribute only about USD 4 billion in incremental operating profit.
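The $4 billion figure is the incremental revenue multiplied by the gap between the after-share gross margin and the operating expense ratio. A minimal sketch with a hypothetical function name; like the article, it assumes the ~10% opex ratio applies to the new revenue, and the result is pre-tax.

```python
def incremental_operating_profit(revenue_bn: float, gross_margin: float, opex_ratio: float) -> float:
    """Pre-tax profit from extra revenue, assuming operating expenses scale with revenue."""
    return revenue_bn * (gross_margin - opex_ratio)


print(round(incremental_operating_profit(13.3, 0.40, 0.10), 2))  # 3.99
```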

As the company's previous outlook did not account for AI computing revenue from the Chinese market, Dolphin Research estimates the company's net profit for the 2027 fiscal year to be approximately USD 192.3 billion (assuming a 57% year-over-year revenue increase, a 75% gross profit margin, and a 15.9% tax rate). With a current market capitalization of USD 4.5 trillion, this corresponds to a PE ratio of approximately 22 times for the company's 2027 fiscal year.
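As a quick check of the valuation multiple, forward PE is market cap divided by forecast net profit. With the rounded inputs quoted here the ratio works out closer to 23x than 22x; the difference presumably comes from rounding or from the exact market-cap snapshot used, which the article does not state. Function name hypothetical.

```python
def forward_pe(market_cap_bn: float, net_profit_bn: float) -> float:
    """Price-to-earnings on forecast net profit (both in USD billions)."""
    return market_cap_bn / net_profit_bn


print(round(forward_pe(4500.0, 192.3), 1))  # 23.4
```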

Even with the H200 approved, China's AI computing chips add only about USD 4 billion to the company's profit (roughly a 2% increase), a negligible impact on its valuation.
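The roughly 2% uplift compares the $4 billion increment with the FY2027 net profit estimate of $192.3 billion. Because the $4 billion is pre-tax while the base is after tax, the sketch below also applies the article's 15.9% tax rate to the increment; either way the effect stays around 2%. Function name hypothetical.

```python
def profit_uplift(increment_bn: float, base_net_profit_bn: float, tax_rate: float = 0.0) -> float:
    """Incremental profit as a share of the base net profit, optionally taxed first."""
    return increment_bn * (1.0 - tax_rate) / base_net_profit_bn


print(f"{profit_uplift(4.0, 192.3):.1%}")         # 2.1% on a pre-tax basis
print(f"{profit_uplift(4.0, 192.3, 0.159):.1%}")  # 1.7% after the 15.9% tax rate
```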

Compared with the H200's approval for China, the market remains more concerned about the competitive threat from 'Google Gemini + Broadcom,' which is also a major factor weighing on the company's valuation, mainly because of doubts about NVIDIA's competitiveness in 2027 and beyond. If TPUs become available for external supply, they will directly hit the company's gross margin and market share.

The H200's approval will give the company's results a short-term boost, but as analyzed above, the impact is modest (only about a 2% increase in profit), and the stock's reaction after the announcement was similarly muted (up about 1%). Future approvals of computing chips will remain a bargaining chip.

Relatively speaking, NVIDIA should focus on strengthening its cooperative relationships with downstream clients and enhancing its own competitiveness to address competitive threats posed by custom ASIC chips such as TPUs.

- END -

// Reprint Authorization

This article is an original piece from Dolphin Research. If you wish to reprint it, please obtain authorization.

// Disclaimer and General Disclosure Notice

This report is intended solely for general comprehensive data purposes, aimed at users of Dolphin Research and its affiliated institutions for general reading and data reference. It does not take into account the specific investment objectives, investment product preferences, risk tolerance, financial situation, or special needs of any individual receiving this report. Investors must consult with independent professional advisors before making investment decisions based on this report. Any person making investment decisions using or referring to the content or information mentioned in this report does so at their own risk. Dolphin Research shall not be held responsible for any direct or indirect liabilities or losses that may arise from the use of the data contained in this report. The information and data contained in this report are based on publicly available sources and are intended for reference purposes only. Dolphin Research strives for, but does not guarantee, the reliability, accuracy, and completeness of the relevant information and data.

The information or views mentioned in this report shall not, under any jurisdiction, be considered or construed as an offer to sell securities or an invitation to buy or sell securities, nor shall they constitute advice, inquiries, or recommendations regarding relevant securities or related financial instruments. The information, tools, and data contained in this report are not intended for, nor are they intended to be distributed to, jurisdictions where the distribution, publication, provision, or use of such information, tools, and data would contravene applicable laws or regulations, or would require Dolphin Research and/or its subsidiaries or affiliated companies to comply with any registration or licensing requirements in such jurisdictions, nor are they intended for citizens or residents of such jurisdictions.

This report merely reflects the personal views, insights, and analytical methods of the relevant authors and does not represent the stance of Dolphin Research and/or its affiliated institutions.

This report is produced by Dolphin Research, and the copyright is solely owned by Dolphin Research. Without the prior written consent of Dolphin Research, no institution or individual shall (i) make, copy, reproduce, duplicate, forward, or distribute in any form whatsoever any copies or reproductions, and/or (ii) directly or indirectly redistribute or transfer to any other unauthorized persons. Dolphin Research reserves all related rights.