Hot Spot丨Google and Meta Team Up, Leveraging TPU+PyTorch to Target NVIDIA's Vulnerabilities

12/23 2025

Preface:

Google and Meta have unveiled a deeper collaboration aimed at bolstering native support for Google's TPU within the PyTorch framework.

This strategic move is designed to circumvent NVIDIA's CUDA ecosystem and directly challenge the core of its competitive advantage.

This isn't merely a case of forming an "Anti-NVIDIA Alliance"; rather, it represents an effort by cloud service providers and super-app developers to redistribute control over computing resources.


NVIDIA's CUDA Stronghold and Industry-Wide Concerns

The market generally perceives NVIDIA's strengths as lying in its leading GPU performance, advanced manufacturing processes, and robust HBM and NVLink technologies. However, these are merely surface-level advantages.

NVIDIA's truly irreplaceable asset is its entrenched CUDA ecosystem.

CUDA has achieved three key feats: it has shaped developer mindsets, integrated software toolchains, and secured model and code assets.

The outcome is clear: opting for NVIDIA means the lowest development costs, while switching platforms entails rewriting code and facing uncertain risks.

This constitutes an ecosystem tax rather than a hardware premium.

Since PyTorch's introduction in 2016, this open-source framework, spearheaded by Meta, has swiftly become the lingua franca for AI developers. Meanwhile, NVIDIA's engineers have consistently ensured that models developed with PyTorch deliver optimal performance on their GPUs.

A substantial amount of performance optimization, operator implementation, and engineering expertise has been accumulated within the CUDA+PyTorch combination, resulting in prohibitively high migration costs.

In Silicon Valley, few developers engage in low-level coding for specific chips; frameworks like PyTorch have emerged as the default abstraction layer connecting developers to hardware, with CUDA serving as the technological bedrock of this abstraction.

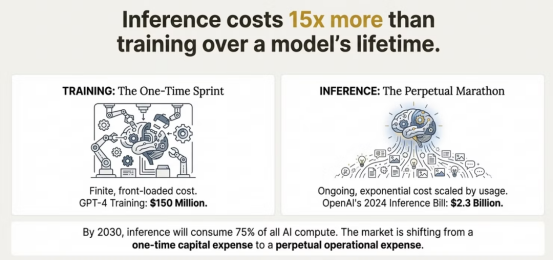

This monopolistic landscape has induced collective anxiety across the industry. Even giants like Meta, whose capital expenditure is projected to reach as much as $72 billion in 2025, much of it for AI infrastructure, face escalating bidding wars for GPUs and soaring inference costs.

The industry's appetite for alternative solutions is growing as AI inference costs continue to climb. OpenAI's inference spending reportedly surged to $2.3 billion in 2024, about 15 times the cost of training GPT-4.
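As a rough sanity check, the sketch below backs out the GPT-4 training cost that these two figures imply. The variable names are illustrative, and the result is only what the quoted numbers imply, not an independently reported figure.

```python
# Figures as quoted in the article; the derived value is an implication, not a reported number.
inference_spend_2024_usd = 2.3e9    # OpenAI inference spending in 2024
inference_to_training_ratio = 15    # "15 times the cost of training GPT-4"

# Dividing the inference bill by the ratio gives the training cost the article implies.
implied_training_cost_usd = inference_spend_2024_usd / inference_to_training_ratio

print(f"Implied GPT-4 training cost: ${implied_training_cost_usd / 1e6:.0f} million")
```

On these numbers, the implied training cost is roughly $153 million, which shows how quickly recurring inference spending can dwarf a one-off training run.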

The collaboration between Google and Meta has emerged precisely in response to these industry pain points.

Technological Breakthroughs and Strategic Implications of the TorchTPU Initiative

Meta is not an adversary of NVIDIA; in fact, it ranks among NVIDIA's largest customers, with its Llama models, recommendation systems, and advertising models heavily reliant on GPUs.

So, why is Meta championing PyTorch×TPU? The rationale is straightforward: computing power sovereignty.

At Meta's scale, even a 10% swing in costs amounts to an astronomical sum, and any shortage of computing power can disrupt product timelines.

Through PyTorch, Meta can lower the barriers to using "non-CUDA platforms", preserving more computing power options for itself and compelling NVIDIA to relinquish its absolute negotiation advantage. This is a long-term bargaining power strategy.

Recently, Google officially advanced its strategic initiative codenamed "TorchTPU", with the primary objective of achieving seamless compatibility between TPU and PyTorch, enabling the world's most mainstream AI framework to operate efficiently on Google's self-developed chips.

Unlike past sporadic support for PyTorch, Google has invested unprecedented organizational focus and strategic resources this time, even contemplating open-sourcing parts of its software to expedite customer migration.

Because Meta is the creator and steward of PyTorch, its deep involvement makes this technological breakthrough even more disruptive.

The essence of the TorchTPU initiative is to dismantle the technological barriers between TPU hardware and the PyTorch ecosystem.

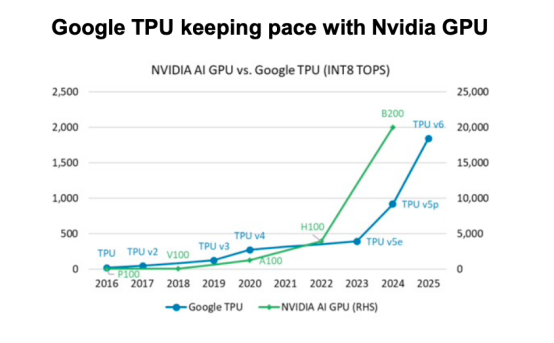

Google's seventh-generation TPU, Ironwood, already boasts formidable hardware competitiveness, with a peak performance of 4,614 TFLOPS at FP8 precision, 192 GB of high-bandwidth memory, and significantly better energy efficiency than NVIDIA's B200.

However, for an extended period, the absence of a robust software ecosystem has hindered the realization of these hardware advantages.

Through collaboration with Meta, Google is revamping TPU's software stack. The goal is for developers to migrate PyTorch models to TPU without extensive code rewrites, with Google's TPU Command Center further simplifying deployment.
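What "without extensive code rewrites" could look like in practice is sketched below: the program asks which backend is present instead of hard-coding a vendor. This is a minimal sketch and not TorchTPU itself, whose interface is not described here. It assumes the existing `torch_xla` package as the route from PyTorch to a TPU and the `"xla"` device string associated with it; `select_device_string` is a hypothetical helper name.

```python
import importlib.util


def select_device_string() -> str:
    """Pick a PyTorch device string without hard-coding a hardware vendor.

    Assumption: an installed torch_xla package signals that an XLA device
    (such as a TPU) is the intended target. This probes packages only; it
    does not verify that a TPU is actually attached.
    """
    # Prefer the XLA backend when its package is installed.
    if importlib.util.find_spec("torch_xla") is not None:
        return "xla"
    # Otherwise use CUDA if PyTorch is installed and reports a usable GPU.
    if importlib.util.find_spec("torch") is not None:
        import torch
        if torch.cuda.is_available():
            return "cuda"
    # Fall back to the CPU, which is always a valid choice.
    return "cpu"


device = select_device_string()
print(f"Selected backend: {device}")
```

The rest of a training or inference script would then refer only to `device`, so that switching hardware changes one value rather than the model code. That is the kind of portability the initiative is aiming for.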

This fusion of cost-effective hardware and a mainstream ecosystem directly challenges NVIDIA's core strengths.

According to reported tests, in tensor-intensive tasks such as LLM inference and image generation, TPUs deliver four times the cost-effectiveness of NVIDIA's H100, serve BERT workloads 2.8 times faster than A100 GPUs, and cut energy consumption by 60-65%.

This collaboration signifies a precise strategic alignment for Google and Meta.

For Google, TPU is no longer confined to internal use. Since the Google Cloud division took charge of TPU sales in 2022, those sales have become a pivotal revenue growth engine for Google Cloud.

In 2025, Google began selling TPUs directly into customers' data centers and restructured its organization accordingly. Company veteran Amin Vahdat was appointed head of AI infrastructure, reporting directly to CEO Sundar Pichai.

The success of the TorchTPU initiative will fully unlock TPU's commercialization potential. According to Morgan Stanley projections, TPU production will reach 5 million units by 2027 and 7 million units by 2028, with every 500,000 units sold generating $13 billion in revenue for Google.
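The arithmetic behind that projection can be sketched as follows. The sketch assumes, purely for illustration, that every unit produced is sold and that revenue scales linearly with units; neither assumption is stated in the projection itself, and the function name is hypothetical.

```python
# Figures as quoted: every 500,000 units sold generates $13 billion in revenue.
REVENUE_PER_BATCH_USD = 13e9
BATCH_UNITS = 500_000


def projected_revenue_usd(units_sold: int) -> float:
    """Scale the quoted revenue figure linearly with units sold (an assumption)."""
    return units_sold / BATCH_UNITS * REVENUE_PER_BATCH_USD


# Implied revenue per unit under the linear assumption.
revenue_per_unit_usd = REVENUE_PER_BATCH_USD / BATCH_UNITS

# Production projections quoted for 2027 and 2028, treated here as units sold.
projected_units = {2027: 5_000_000, 2028: 7_000_000}

print(f"Implied revenue per unit: ${revenue_per_unit_usd:,.0f}")
for year, units in projected_units.items():
    print(f"{year}: ${projected_revenue_usd(units) / 1e9:.0f} billion")
```

Under those assumptions the quoted figures imply about $26,000 of revenue per unit, and $130 billion and $182 billion if the entire 2027 and 2028 output were sold. Actual revenue would depend on how much of that output goes to external customers.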

For Meta, the strategic value of this collaboration is equally profound. As one of NVIDIA's largest customers, Meta has long been constrained by its over-reliance on GPUs.

By promoting the integration of PyTorch and TPU, Meta can not only secure more affordable inference computing power but also diversify its hardware infrastructure, gaining greater leverage in procurement negotiations with NVIDIA.

It is reported that Meta plans to lease TPUs via Google Cloud in 2026 and to invest billions of dollars in 2027 in TPU hardware for deployment in its own data centers, serving compute-intensive tasks such as fine-tuning Llama models.

The most revolutionary aspect of the TorchTPU initiative lies in its open-source orientation. Google is considering open-sourcing parts of its software stack, creating a stark contrast to NVIDIA's closed CUDA ecosystem.

As the preferred framework for over half of the world's AI developers, PyTorch already enjoys a robust open-source community foundation. Its deep integration with TPU will forge the first genuine challenger driven by an open-source ecosystem, directly impacting NVIDIA's software stronghold.

Historically, NVIDIA has locked in developers and customers through its CUDA ecosystem, fostering a path dependency where increased usage leads to heightened reliance.

The TorchTPU initiative, armed with open-source principles, reduces migration costs for developers, enabling more companies to escape the "NVIDIA tax".

The symbolic significance of TPU+PyTorch lies in the shift of AI computing power from "chip-centric competition" to "ecosystem and system competition".

Entry of Industry Giants May Accelerate Market Transformation

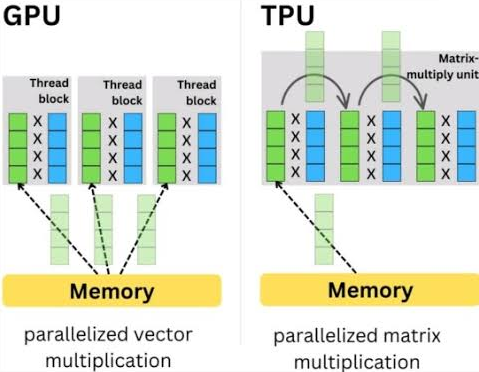

To put it metaphorically, GPUs resemble versatile athletes proficient in multiple sports, while TPUs are akin to Olympic sprint champions, excelling in specific domains.

AI computing power demand is shifting from training to inference: by 2030, inference is projected to consume 75% of AI computing resources, with a market size reaching $255 billion. This contest between technical approaches will directly shape the industry's future trajectory.

In a future where AI inference becomes the predominant computing power demand, "specialization" often proves more competitive than "versatility".

This technological duel has already begun to influence market dynamics. Morgan Stanley predicts that by 2026, ASIC shipments will surpass GPUs for the first time, and the AI data center market will transition from GPU dominance to a multi-polar balance of power.

Currently, several industry giants have commenced migrating to TPU. After image generator Midjourney switched to TPU in 2024, its inference costs plummeted by 65%, from $2 million per month to $700,000, with throughput tripling.
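The Midjourney figures can be checked with a few lines of arithmetic. The sketch below also combines the lower bill with the tripled throughput into a cost per unit of work. That combination rests on the assumption that the two figures describe the same workload, which the report does not make explicit.

```python
# Figures as quoted for Midjourney's move to TPU.
monthly_cost_before_usd = 2_000_000
monthly_cost_after_usd = 700_000
throughput_multiplier = 3  # "throughput tripling"

# Reduction in the monthly bill.
cost_reduction = (monthly_cost_before_usd - monthly_cost_after_usd) / monthly_cost_before_usd

# Cost per unit of work relative to before, assuming the lower bill and the
# higher throughput apply to the same workload (an assumption, not a reported fact).
relative_cost_per_unit = (monthly_cost_after_usd / monthly_cost_before_usd) / throughput_multiplier

print(f"Monthly cost reduction: {cost_reduction:.0%}")
print(f"Cost per unit of work falls by: {1 - relative_cost_per_unit:.0%}")
```

The 65% figure matches the quoted dollar amounts. If the throughput gain applies on top of it, the cost per generated image would fall by roughly 88%.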

Claude's developer, Anthropic, has secured a multi-billion-dollar deal with Google, committing to adopt up to one million TPUs, which is expected to bring more than 1 GW of computing capacity online in 2026.

Apple has also commenced using TPU to train AI models, becoming a significant customer for Google Cloud's TPU.

According to Google Cloud executives, wider TPU adoption alone could capture revenue equivalent to 10% of NVIDIA's, implying a shift of billions of dollars a year.

With the entry of giants like Meta, this market transformation may accelerate.

In addition to Google's TPU, Amazon's Trainium, Microsoft's Maia, and dedicated chips from startups like Cerebras and Groq are also flooding the market, further intensifying its diversification.

Nomura Securities predicts that the potential market size for AI data centers will reach $1.2 trillion by 2030, providing ample space for the co-development of multiple participants.

The collaboration between Google and Meta will not immediately alter the market landscape, but it has already shifted the long-term narrative.

NVIDIA is no longer the sole infrastructure provider for the AI era; computing power is beginning to resemble cloud computing, moving towards multiple suppliers and multiple ecosystems.

Super platforms are reclaiming control over the underlying infrastructure.

Conclusion:

This is not a battle to be won or lost in the short term but a protracted contest for dominance over AI infrastructure in the coming decade.

The truly noteworthy aspect is not whether NVIDIA will face challenges but rather how AI's innovation speed and cost structure will be redefined when computing power is no longer monopolized by a single entity.

And that is the most disruptive potential behind TPU+PyTorch.
