China’s GPU Cloud: Navigating Toward Full-Stack Dominance

01/04 2026

China’s homegrown GPU cloud is undergoing a significant evolution.

On January 4, global consultancy Frost & Sullivan released its 2025 China GPU Cloud Market Research Report. The findings show that Baidu Intelligent Cloud holds a 40.4% share of China’s self-developed GPU cloud segment, ranking first. Around the same time, multiple media outlets reported that Baidu’s chipmaking subsidiary, Kunlunxin, has filed for a listing on the Main Board of the Hong Kong Stock Exchange. With roots dating back to 2011, Kunlunxin has spent more than a decade refining its technology and has emerged as a key player in today’s AI cloud competition.

In the past year, “GPU cloud” has emerged as a recurring theme in industry and investor circles, yet its definition remains unclear to many. Simply put, GPU cloud is the foundational computing layer of today’s AI cloud. As AI deployment scales, the focus of cloud computing competition has shifted from CPU-based general computing to GPU-driven AI infrastructure—a shift that determines whether large-scale models can be trained, deployed, and run efficiently.

Frost & Sullivan’s report sets out three criteria for “China’s GPU cloud”: vendors must develop their own AI acceleration chips, independently build and operate large-scale AIDC (AI data center) clusters, and deliver end-to-end AI computing services through public, private, or hybrid clouds. By this standard, although China has many AI computing players, few have closed-loop capabilities spanning chips, clusters, and cloud services. Baidu Intelligent Cloud’s market leadership stems from deploying these capabilities consistently across the finance, energy, and automotive sectors, where real-world business scenarios have repeatedly validated and refined them.

Looking back on 2025, the AI cloud market was initially defined by price wars and races for raw computing power, with the battle even spilling over into airport advertising. As AI integrates more deeply into industries, however, a consensus has emerged: the future of AI cloud will hinge on chip-level, full-stack competition, and cost-effectiveness will decide who wins customers. Consequently, leading cloud providers worldwide are accelerating vertical integration, fostering deep collaboration among chips, system software, scheduling platforms, and upper-layer model services.

Frost & Sullivan also notes that China’s self-developed GPU cloud market has entered the “10,000-card era,” moving from the “usable” stage to the “reliable and sustainable” stage. However, the report cautions that near-term commercialization still depends on policy support and demand from key industries and has yet to gain fully market-driven momentum. With access to high-end GPUs restricted and domestic solutions still maturing, China’s GPU cloud has taken a path centered on autonomous control, software-hardware synergy, and scenario-specific adaptation, and the market is consolidating around vendors with full-stack capabilities and the capacity for long-term investment.

01

Computing Power: From Resource and Price Battles to Systemic Innovation

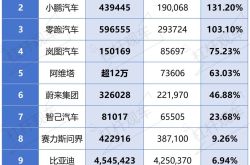

In 2025, China’s computing power landscape changed dramatically as the industry responded to multiple crises. Most notably, the U.S.-China tech rivalry disrupted the global GPU supply chain, leaving domestic firms grappling with shortages and soaring costs. IDC data shows that while AI server shipments grew by just 16.8% in 2025, sales revenue surged by nearly 90%, reflecting a sharp rise in the cost of acquiring advanced computing power. Frost & Sullivan’s report adds that training AI models at scale on Nvidia’s China-specific H20 chips requires 40%-60% more computing time and over 35% higher electricity costs than the H100, significantly inflating operating expenses. Meanwhile, evolving AI workloads have raised the bar for computing scheduling and system stability.
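The IDC figures imply a steep rise in the average price paid per AI server. Because revenue equals units shipped times average price, the implied price change follows directly from the two growth rates. The minimal Python sketch below treats “nearly 90%” as 0.90, so its result is a slight upper bound, and assumes the shipment and revenue figures cover the same product scope; the function name `implied_price_growth` is illustrative only.

```python
def implied_price_growth(revenue_growth: float, unit_growth: float) -> float:
    """Return the implied growth in average selling price per unit.

    Revenue = units x average price, so
    (1 + revenue_growth) = (1 + unit_growth) * (1 + price_growth).
    """
    return (1 + revenue_growth) / (1 + unit_growth) - 1


# IDC's 2025 AI server figures as quoted in the article:
# shipments +16.8%, sales revenue up "nearly 90%" (0.90 is used as an upper bound).
SHIPMENT_GROWTH = 0.168
REVENUE_GROWTH = 0.90

price_growth = implied_price_growth(REVENUE_GROWTH, SHIPMENT_GROWTH)
print(f"Implied change in average price per AI server: {price_growth:+.1%}")
```

With these inputs the average price per server rises by about 63% (slightly less, since revenue growth was just under 90%), which is the cost pressure the paragraph describes.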

Facing these challenges, leading cloud providers in 2025 raised the share of self-developed GPUs in their procurement to 30%-40%, turning homegrown computing power from a strategic alternative into a core pillar of AI cloud. Critically, this round of self-development focused on deep customization for inference, video encoding/decoding, and big data analytics, delivering superior cost-effectiveness in real-world business scenarios through full-stack optimization from chips to system software and upper-layer frameworks.

The technical paths and competitive landscape of China’s self-developed GPU cloud are solidifying: Baidu has created a synergy of “Kunlunxin Chips + Baige AI Heterogeneous Computing Platform + PaddlePaddle Framework”; Huawei Cloud has built a software-hardware ecosystem with “Ascend Chips + CANN + MindSpore”; and Alibaba Cloud is advancing full-stack integration via “Shenlong Computing Architecture + Apsara OS + PAI Platform.” Frost & Sullivan predicts that by 2026, vendors with complete full-stack capabilities will capture over 60% of the high-end market, gaining decisive advantages in emerging fields such as autonomous driving, biopharmaceuticals, and scientific computing.

Baidu Intelligent Cloud’s market progress reflects its long-term, systematic investment in AI infrastructure. According to Shen Dou, Executive Vice President of Baidu Group and President of Baidu Intelligent Cloud Business Group, Kunlunxin has been refined over more than a decade. In the first half of 2025, a 32,000-card Kunlunxin P800 cluster officially went live, marking a milestone for domestic computing. Shen Dou disclosed that Baidu now runs most of its inference workloads on the P800, has built a single 5,000-card cluster for training multimodal models, and operates training clusters of more than 10,000 cards.

In November, Baidu unveiled a “Five-Year, Five-Chip” technology roadmap, featuring the Kunlunxin M100 (optimized for large-scale inference), the Kunlunxin M300 (tailored for ultra-large-scale multimodal training and inference), next-gen P800 super-nodes (Tianchi 256 and Tianchi 512), and thousand-card and four-thousand-card super-nodes based on the Kunlunxin M series.

Chips are the backbone of AI infrastructure, but how computing power is organized and scheduled defines a cloud provider’s core capability. Through its Baige Computing Management Platform, Baidu integrates Kunlunxin chips, GPUs, storage, and networking into a unified computing system. The platform currently supports the stable operation of a 32,000-card self-developed cluster and is evolving toward million-card-level scheduling, with a 2030 roadmap target of a single million-card Kunlunxin cluster. For large model training and inference, Baige dynamically optimizes heterogeneous computing power through “decoupling, adaptation, and intelligent scheduling,” keeping effective training time above 98% in 10,000-card jobs, while intelligent self-healing mechanisms cut fault recovery time from hours to minutes.
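Effective training time is commonly understood as the share of a job’s wall-clock time spent on productive training rather than stalled by faults and restarts. The Python sketch below uses a deliberately simplified model (an assumption made here, not Baidu’s published definition) with a hypothetical job length and fault count, to show why cutting recovery from hours to minutes matters at 10,000-card scale, where faults become routine. The function `effective_training_ratio` and all inputs are illustrative.

```python
def effective_training_ratio(
    wall_clock_hours: float, faults: int, recovery_hours_per_fault: float
) -> float:
    """Fraction of wall-clock time a training job spends doing useful work.

    Simplified model (an assumption, not Baidu's published definition): each fault
    stalls the whole job for a fixed recovery time, and other overheads such as
    checkpointing or recomputing steps lost since the last checkpoint are ignored.
    """
    downtime = faults * recovery_hours_per_fault
    if downtime > wall_clock_hours:
        raise ValueError("downtime cannot exceed total wall-clock time")
    return (wall_clock_hours - downtime) / wall_clock_hours


# Hypothetical job: 30 days (720 h) of wall-clock time with 20 faults.
JOB_HOURS = 720.0
FAULTS = 20

for label, recovery_h in [("hours-scale recovery (2 h)", 2.0),
                          ("minutes-scale recovery (10 min)", 10 / 60)]:
    ratio = effective_training_ratio(JOB_HOURS, FAULTS, recovery_h)
    print(f"{label}: {ratio:.1%} effective training time")
```

With these made-up inputs, hours-scale recovery leaves about 94% effective training time, while minutes-scale recovery lifts it above 99%; real figures also depend on fault frequency and checkpoint overhead, which this sketch ignores.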

Building on its AI Infra, Baidu Intelligent Cloud is extending its capabilities to Agent Infra. Today’s models are highly capable, but their industrial value depends on how systematically they are organized and invoked. Through the Qianfan Platform, Baidu offers its Wenxin Large Model alongside more than 150 mainstream models, together with data services, tool integration, and model customization. It also provides general-purpose products such as digital employees, intelligent customer service, and multimodal vision platforms, and works with clients to build enterprise-level agents for core business services.

This path is a shared strategy among major self-developed GPU cloud vendors, reflecting the consensus that AI cloud competition is deepening into software-hardware integration and systemic capabilities. Leading players are accelerating their pursuit of full-stack expertise and vertical optimization to drive AI cloud services into the heart of industries.

02

Industry: AI’s Evolution from Application Innovation to Systemic Transformation

In 2025, the value of China’s self-developed GPU cloud became increasingly concentrated in key industries. AI deployments in finance, energy, transportation, automotive, and manufacturing prioritize long-term controllability, stable delivery, system reliability, and predictable costs, requirements that general-purpose GPUs or standalone cloud services cannot meet for core business operations. With its full-stack capabilities, self-developed GPU cloud has become the foundation for intelligent upgrades across industries, as large models and intelligent agents move into production systems as essential tools.

Central state-owned enterprises (SOEs) led AI exploration and deployment in 2025. Over 65% of central SOEs, all systemically important banks, 95% of mainstream automakers, over 50% of game developers, and leading embodied intelligence firms chose Baidu Intelligent Cloud as an AI partner.

In telecommunications, the three major carriers stepped up their computing investments. Of the more than 200 billion-yuan-scale data center contracts tracked by Digital Frontier, carriers accounted for nearly 30%, with accelerated construction of inference computing capacity and wider adoption of domestic accelerator chips emerging as key trends. Against this backdrop, Kunlunxin won a billion-yuan inference computing contract from China Mobile in August 2025, sending Baidu’s stock price sharply higher. Baidu Intelligent Cloud also partnered with China Mobile to launch the “One Cloud, Three Intelligences” solution, supporting digital transformation for individual, household, and government and enterprise customers.

In finance, AI applications shifted from sporadic trials to large-scale deployment. Nearly half of financial institutions worldwide have launched large model and intelligent agent projects; ICBC, for example, has launched more than 1,000 intelligent agents. An IDC report shows Baidu Intelligent Cloud leading China’s 2024 generative AI market in finance with a 12.2% share. On the computing side, China Merchants Bank trained a model with hundreds of billions of parameters using just 32 Kunlunxin P800 servers. On the application side, adoption has followed two main lines, serving employees and serving customers: Galaxy Securities worked with Baidu Intelligent Cloud to build an “OTC Trading Agent” that tripled the conversion rate from inquiry to order placement, and Taikang worked with Baidu to develop a training assistant that halved the time for new staff to become certified. In core risk control, CITIC Baixin Bank used intelligent risk control to double its feature mining efficiency and improve the discriminatory power of its risk models by 2.41%.

The automotive industry has been among the most enthusiastic adopters of AI. An Omdia report shows that in the first half of 2025, China’s 23 Fortune 500 automakers each used the services of 3.8 suppliers on average, with Baidu Intelligent Cloud serving 19 leading automakers, achieving an 83.7% market coverage rate and becoming their top choice. By scenario, code generation and intelligent cockpits saw the fastest adoption: nearly 400 NIO employees use coding agents to boost efficiency, and AI-generated code accounts for over 30% of their code. Autonomous driving has moved to the VLA (vision-language-action) paradigm, which requires aligning visual, language, and action modalities in models with hundreds of billions of parameters; platforms such as Baige and one-stop evaluation toolchains support R&D and deployment. More broadly, leading automakers have built systematic AI layouts: Geely Auto, for instance, has built an AIOS system based on Baidu Kunlunxin chips and intelligent agent tools, and its employees have developed more than 3,000 personal agents.

Embodied intelligence is viewed as the next strategic market, on a par with smartphones and automobiles. In 2025, industry-wide shipments of robot bodies and dexterous hands are expected to exceed 10,000 units for the first time. Since entering this market in late 2023, Baidu Intelligent Cloud has supported the “national team” of embodied intelligence and more than 20 key supply chain enterprises, focusing on brain-body coordination, data, and robot body R&D. At the model level, Baige became China’s first cloud service platform to fully support the three mainstream VLA models (RDT, π0, and GR00T N1.5), doubling the R&D efficiency of embodied models at the Beijing Humanoid Robot Innovation Center. At the data level, it has co-developed real-robot and simulation data collection solutions with multiple enterprises. For scenario deployment, it works with partners to migrate and reuse ultra-low-latency teleoperation solutions developed in the intelligent driving era, lowering barriers to commercialization.

In energy and power, leading firms are embracing industry-specific large models. Baidu Intelligent Cloud partnered with State Grid to create the Guangming Power Large Model, which covers more than 100 scenarios and has reduced manual tower inspections by 40%. It also supported China Southern Power Grid’s large model R&D, helping build a new computing cluster in 2025; the “Distribution Network Monitoring Agent” the two developed jointly can complete alarm analysis and notify field sites within one minute.

Additionally, in travel, the digital employee “Dongdong” was launched on the China Eastern Airlines App, covering core processes like ticket booking and check-in.

For demanding problems such as industrial production scheduling, resource allocation, and path planning, Baidu unveiled “Famou,” the world’s first commercially available self-evolving super-intelligent agent, designed to quickly identify globally optimal solutions. Since its launch in November 2025, more than a thousand enterprises have applied for trial access. In automotive R&D, it cut the time for a single aerodynamic verification at Alta, an independent automotive design technology company, from 10 hours to just 1 minute. In scientific research, it helped Beijing University of Technology shorten the exploration cycle for new PEM electrolyzer hydrogen production system models from several weeks to a few hours.

As AI permeates more industries, China’s self-developed GPU cloud is playing an increasingly pivotal supporting role, and full-stack capability has become a fundamental prerequisite for the rapid evolution of industry intelligence. In 2026, competition among cloud service providers is expected to enter a phase of intense full-stack rivalry. Providers that can build, and continually refine, closed loops integrating chips, systems, and industry-specific scenarios will be best positioned to gain the upper hand in the next phase of AI cloud competition.