Is 'Map-Free' Driving in Autonomous Vehicles Really Without Maps?

01/16 2026

In the field of intelligent driving, one of the most discussed topics of the past year or two has undoubtedly been the 'elimination of high-definition maps.' High-definition maps were once regarded as the 'crutch' of autonomous driving: without those centimeter-accurate static data packages, it seemed, vehicles would be like blind people groping in the dark. However, with Tesla's full-scale rollout of intelligent driving software built on an end-to-end architecture, and leading Chinese players such as Huawei, XPeng, and Li Auto promoting slogans like 'operable nationwide, on any road,' the industry trend has reversed dramatically. Almost overnight, high-definition maps seem to have gone from industry darlings to burdens. Public questions have followed: if everyone is advocating 'map-free' driving, does autonomous driving really no longer need maps? And if it doesn't, how do vehicles navigate complex urban roads?

What Does 'Map' in Map-Free Driving Refer To?

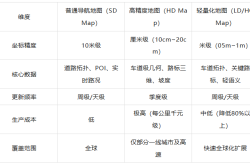

To understand these questions, we must first clarify what exactly is being 'eliminated' in so-called 'map-free' driving. In autonomous driving, the 'map' that automakers and suppliers refer to is specifically the high-definition map (HD Map). HD maps are entirely different from the standard-definition navigation maps (SD Maps) we commonly use on smartphones, such as those from Gaode (Amap) or Baidu.

Navigation maps are designed for humans, with tolerances in the meter range: they only need to tell a driver whether to turn left or right, along with approximate road names and building locations. High-definition maps, by contrast, are designed for machines. They contain detailed road geometry, lane-marking types, curb heights, and precise three-dimensional coordinates of traffic lights, and even record road gradients and cross slopes.

While onboard perception was still immature, high-definition maps served as an 'answer key' handed to vehicles in advance. By scanning their surroundings with sensors and matching the scans against the high-definition map, vehicles could localize themselves, and therefore control their motion, with great precision.
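To make 'matching sensor data against the map' concrete, here is a minimal sketch of one classical technique, a translation-only variant of ICP (iterative closest point). It assumes the vehicle's heading is already known, that landmarks such as poles or sign posts have been detected as (x, y) offsets from the vehicle, and that the HD map stores their surveyed global coordinates. The function names and landmark values are hypothetical, not any vendor's localization stack.

```python
import math


def nearest(point, candidates):
    """Return the candidate (x, y) closest to `point` by Euclidean distance."""
    return min(candidates, key=lambda c: math.dist(point, c))


def refine_position(rough_xy, observed_offsets, map_landmarks, iterations=10):
    """Translation-only ICP.

    rough_xy:         initial (x, y) estimate, e.g. from GNSS.
    observed_offsets: landmark positions relative to the vehicle (heading assumed known).
    map_landmarks:    surveyed landmark coordinates from the HD map.
    """
    x, y = rough_xy
    for _ in range(iterations):
        dx_sum = dy_sum = 0.0
        for ox, oy in observed_offsets:
            px, py = x + ox, y + oy                    # where the landmark sits if our estimate is right
            mx, my = nearest((px, py), map_landmarks)  # associate with the closest map landmark
            dx_sum += mx - px
            dy_sum += my - py
        n = len(observed_offsets)
        x, y = x + dx_sum / n, y + dy_sum / n          # shift the estimate by the mean residual
    return x, y


# Hypothetical example: true position (100, 50), GNSS fix off by 1.7 m.
MAP_LANDMARKS = [(105.0, 52.0), (98.0, 60.0), (110.0, 45.0), (130.0, 80.0)]
OBSERVED = [(5.0, 2.0), (-2.0, 10.0), (10.0, -5.0)]
refined = refine_position((101.5, 49.2), OBSERVED, MAP_LANDMARKS)
print(refined)
```

In this toy case the estimate snaps to the true position after a single association round. A production system would also need to estimate heading and weight each match by its uncertainty.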

Why Has the 'Myth' of High-Definition Maps Been Shattered?

Early intelligent driving research actually reached a consensus: if perception capabilities were insufficient, maps would compensate. This model performed exceptionally well in highly structured and infrequently changing scenarios such as highways. However, once vehicles entered the intricate urban road networks, the drawbacks of high-definition maps began to snowball.

The most direct pain point of high-definition maps is 'freshness.' Roadworks, lane reconfigurations, temporary construction, road closures, and repainted markings are everyday occurrences in cities. Because high-definition maps are difficult and complex to produce, their update cycle is typically measured in quarters or even half-years. When real-world roads have changed substantially but the map still reflects conditions from months ago, intelligent driving systems that depend on it will malfunction in various ways and may even create safety risks.

High-definition maps are also exorbitantly expensive to produce. Traditional collection requires survey vehicles equipped with LiDAR, inertial navigation, and high-precision positioning systems. Mapping at decimeter-level accuracy costs roughly ten yuan per kilometer, while centimeter-level maps with richer semantic information can cost thousands of yuan per kilometer. Covering millions of kilometers of urban roads nationwide and keeping them frequently updated would require astronomical investment. For automakers pursuing profitability and scale, this is an unsustainable long-term burden, especially amid increasingly fierce price wars in which cutting hardware costs and software service fees is crucial for survival.
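The scale problem is plain arithmetic. The sketch below plugs in the per-kilometer figures quoted above, taking 'thousands of yuan' at its lower bound of 1,000 and 'millions of kilometers' as 1,000,000. It also assumes, purely for illustration, a full re-survey every quarter (matching the update cycle mentioned earlier) at the same cost as the first survey.

```python
ROAD_KM = 1_000_000        # assumption: lower end of "millions of kilometers"
COST_PER_KM_YUAN = {       # figures from the text; "thousands" taken as 1,000
    "decimeter-level": 10,
    "centimeter-level": 1_000,
}
SURVEYS_PER_YEAR = 4       # assumption: full re-survey every quarter


def annual_cost_yuan(road_km, cost_per_km, surveys_per_year):
    """Yearly cost if every refresh costs as much as the initial survey."""
    return road_km * cost_per_km * surveys_per_year


for grade, cost in COST_PER_KM_YUAN.items():
    total = annual_cost_yuan(ROAD_KM, cost, SURVEYS_PER_YEAR)
    print(f"{grade}: {total:,} yuan per year")
# decimeter-level: 40,000,000 yuan per year
# centimeter-level: 4,000,000,000 yuan per year
```

Even at these lower-bound inputs, centimeter-level coverage comes to about four billion yuan a year under the stated assumptions.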

Regulations and surveying qualifications also hinder the widespread adoption of high-definition maps. Road data collection involves national security and sensitive geographic information and is strictly regulated by law. Only a handful of enterprises holding Class A qualifications for electronic navigation map production (such as the mapping giants Baidu, Gaode, and NavInfo) can legally conduct high-definition surveys. If automakers want to do the surveying themselves, the barriers are extremely high; if they buy maps from suppliers, they face disconnected data loops and lengthy map review cycles. Huawei's Yu Chengdong once admitted that collecting high-definition map data for just 9,000 kilometers of roads in Shanghai took a year, by which time the information for the sections mapped first was already outdated. This frustration, with collection never able to keep pace with change, pushed intelligent driving research in a new direction. Humans drive by observing and reasoning in real time with their eyes and brains, without a centimeter-accurate map; why can't machines do the same?

The Development of Real-Time Perception Technologies

To discard that expensive and inflexible 'crutch,' intelligent driving systems must achieve a leapfrog upgrade in perception capabilities. The core of the current 'map-free' technical route is to enable vehicles to generate maps in real time during driving. This is akin to a seasoned driver with an exceptional memory and quick reflexes. Although he may not have traveled this route before, he can discern lane markings, signs, and surrounding vehicles with his eyes and swiftly outline a safe and feasible route in his mind. Technically, this relies on the BEV (Bird's Eye View) perception network, Transformer architecture, and the recently popular Occupancy Network.

As a pioneer in this field, Tesla's FSD (Full Self-Driving) system does without LiDAR entirely and takes a pure vision approach. It captures images through eight cameras around the vehicle body and uses BEV technology to transform these 2D images, each shot from a different viewpoint with its own perspective distortion, into a single unified 3D space in real time. In this process, algorithms no longer identify objects in isolation but model the entire environment globally.
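Tesla's BEV network learns the image-to-BEV transformation rather than computing it from geometry, so the sketch below is not its method. It shows the classical geometric baseline, inverse perspective mapping (IPM), to convey what 'projecting pixels from several cameras into one top-down space' means. It assumes a pinhole camera with known intrinsics (fx, fy, cx, cy), zero pitch and roll, a perfectly flat road, and a camera mounted at a known height, position, and yaw; all parameter values are hypothetical.

```python
import math


def pixel_to_ground(u, v, fx, fy, cx, cy, cam_height):
    """Flat-ground IPM for a level pinhole camera.

    Returns (forward, right) in metres in the camera's own ground frame,
    or None if the pixel is at or above the horizon (the ray never hits the road).
    """
    if v <= cy:
        return None
    forward = cam_height * fy / (v - cy)   # similar triangles along the optical axis
    right = forward * (u - cx) / fx
    return forward, right


def camera_to_vehicle(forward, right, mount_x, mount_y, yaw):
    """Rotate and translate a ground point into the shared vehicle frame
    (x forward, y left; yaw measured counter-clockwise from the vehicle's nose)."""
    x_c, y_c = forward, -right             # camera-aligned axes, y pointing left
    x = mount_x + x_c * math.cos(yaw) - y_c * math.sin(yaw)
    y = mount_y + x_c * math.sin(yaw) + y_c * math.cos(yaw)
    return x, y


# Hypothetical intrinsics shared by a front camera and a left-facing camera.
INTR = dict(fx=1000.0, fy=1000.0, cx=640.0, cy=360.0, cam_height=1.5)

front = camera_to_vehicle(*pixel_to_ground(740, 410, **INTR), mount_x=2.0, mount_y=0.0, yaw=0.0)
left = camera_to_vehicle(*pixel_to_ground(640, 460, **INTR), mount_x=1.0, mount_y=0.9, yaw=math.pi / 2)
print(front, left)  # both points now live in the same vehicle-centred top-down frame
```

The flat-road assumption is exactly what breaks on slopes and for anything standing above the road surface, which is one motivation for learned BEV approaches.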

The more advanced Occupancy Network divides the surrounding space into tiny three-dimensional voxels. Rather than first deciding exactly what an obstacle is, it directly predicts whether each voxel is occupied, optionally with a coarse semantic label. This approach largely solves the difficult problem of recognizing 'irregular obstacles': whether it is a strangely shaped dump truck or a construction barrier that has fallen by the roadside, as long as it occupies space, the system can perceive it and take evasive action.
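The data structure behind this idea is a voxel occupancy set. The sketch below voxelizes 3D points (assumed here to come from some depth source; a real occupancy network predicts occupancy directly from camera images) and checks whether a planned path crosses an occupied voxel. No object class is needed: a fallen barrier of any shape blocks the path simply because it occupies space. The voxel size and coordinates are illustrative.

```python
import math


def voxel_index(point, voxel_size):
    """Integer (i, j, k) index of the voxel containing a 3D point (metres)."""
    return tuple(math.floor(c / voxel_size) for c in point)


def voxelize(points, voxel_size):
    """Set of occupied voxels; no object class is stored, only 'something is here'."""
    return {voxel_index(p, voxel_size) for p in points}


def path_is_clear(path_points, occupied, voxel_size):
    """True if none of the sampled path points falls inside an occupied voxel."""
    return all(voxel_index(p, voxel_size) not in occupied for p in path_points)


VOXEL = 0.5  # metres, illustrative
# Hypothetical points on an irregular fallen barrier about 10 m ahead.
barrier = [(10.2, 0.3, 0.1), (10.4, 0.5, 0.2)]
occupied = voxelize(barrier, VOXEL)

# Two candidate paths sampled every 0.25 m, 0.2 m above the road.
ahead = [(i * 0.25, 0.2, 0.2) for i in range(61)]     # straight through the barrier
shifted = [(i * 0.25, 3.0, 0.2) for i in range(61)]   # offset 3 m to the left
print(path_is_clear(ahead, occupied, VOXEL), path_is_clear(shifted, occupied, VOXEL))
```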

Although many Chinese manufacturers retain LiDAR in hardware as safety redundancy, their software architectures have also fully shifted to 'perception-centric' logic. Huawei's ADS 3.0 introduces the GOD (General Obstacle Detection) perception network, which builds a 3D understanding of the full surrounding scene over an area equivalent to 2.5 football fields. Meanwhile, the RCR (Road Topology Reasoning) network 'reasons out' road connectivity much as a human brain does when no high-definition map is available. Facing a complex intersection with faint or even no lane markings, RCR can infer which paths lead to the target direction from the intersection's shape, traffic light indications, and surrounding traffic flow. With this neural-network-based reasoning, vehicles no longer mechanically follow coordinate points on a map; they possess an understanding of traffic logic.
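Huawei has not published RCR's internals, so the following is only a hypothetical illustration of the final step described above. Once a network has inferred which lane segments connect to which at an intersection, deciding which entry lanes lead to the target direction reduces to a reachability query on a directed lane graph, here answered with breadth-first search. The lane names and connections are invented for the example.

```python
from collections import deque


def entry_lanes_reaching(successors, entry_lanes, target_exit):
    """Return the entry lanes from which `target_exit` is reachable.

    successors: directed lane graph {lane_id: [next_lane_id, ...]}, standing in
    for the connections a topology network has inferred at the intersection.
    """
    reachable = []
    for entry in entry_lanes:
        seen, queue = {entry}, deque([entry])
        while queue:
            lane = queue.popleft()
            if lane == target_exit:
                reachable.append(entry)
                break
            for nxt in successors.get(lane, []):
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append(nxt)
    return reachable


# Hypothetical intersection with no painted guide lines inside it.
INFERRED = {
    "in_left": ["turn_left"],
    "in_straight": ["go_straight"],
    "in_right": ["go_straight_r", "turn_right"],
    "turn_left": ["out_west"],
    "go_straight": ["out_north"],
    "go_straight_r": ["out_north"],
    "turn_right": ["out_east"],
}
ENTRIES = ["in_left", "in_straight", "in_right"]
print(entry_lanes_reaching(INFERRED, ENTRIES, "out_north"))
```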

Another key technology for real-time map generation is MapTR (Map Transformer). Traditional perception schemes first detect fragmented lane markings and road signs and then laboriously stitch them into a map in post-processing. MapTR instead models map elements structurally: it represents features such as lane markings and curbs as ordered point sets and predicts the entire road topology end to end. This significantly improves the latency and accuracy of map generation, letting the system refresh the surrounding road structure at high frame rates and thereby supporting smoother decision-making and path planning. Chinese suppliers such as Zvision Technology have built on this approach, adding lane centerline representation and direction learning so the system can distinguish oncoming lanes from same-direction lanes. In complex scenarios with frequent forks in particular, this real-time topology construction performs more stably and reliably than static maps.
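One detail that makes the 'ordered point set' representation trainable is what the MapTR authors call permutation-equivalent modeling: a lane divider traced left-to-right or right-to-left is the same element, and a closed polygon such as a crosswalk can start at any vertex. The sketch below compares a predicted point set against every equivalent ordering of the ground truth and keeps the smallest mean L1 distance. It is a simplified stand-in for the matching cost, not the paper's full loss, and the `directed` flag is an illustrative assumption of how a directed element such as a lane centerline would allow only its forward ordering, which is what lets a model learn oncoming versus same-direction lanes.

```python
def equivalent_orderings(points, closed=False, directed=False):
    """All point orderings that describe the same map element."""
    forward = list(points)
    if directed:
        return [forward]                     # e.g. a centerline: direction is meaningful
    backward = forward[::-1]
    if not closed:
        return [forward, backward]           # open polyline: 2 orderings
    n = len(forward)
    # Closed polygon: any start vertex, either direction, so 2n orderings.
    return [seq[i:] + seq[:i] for seq in (forward, backward) for i in range(n)]


def matched_l1(pred, gt, closed=False, directed=False):
    """Mean point-wise L1 (Manhattan) distance under the best equivalent ordering of gt."""
    def mean_l1(a, b):
        return sum(abs(p[0] - q[0]) + abs(p[1] - q[1]) for p, q in zip(a, b)) / len(a)
    return min(mean_l1(pred, cand) for cand in equivalent_orderings(gt, closed, directed))


divider = [(0, 0), (1, 0), (2, 0)]
pred_reversed = [(2, 0), (1, 0), (0, 0)]
crosswalk = [(0, 0), (4, 0), (4, 3), (0, 3)]
pred_shifted = [(4, 3), (0, 3), (0, 0), (4, 0)]

print(matched_l1(pred_reversed, divider))                 # same divider traced backwards
print(matched_l1(pred_shifted, crosswalk, closed=True))   # same polygon, different start vertex
print(matched_l1(pred_reversed, divider, directed=True))  # directed element: reversal is penalized
```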

Map-Free Does Not Mean Truly Without Maps

We can now answer the question posed at the beginning. At this stage, so-called 'map-free' driving means 'eliminating high-definition maps,' not 'doing away with all maps.' If the vehicle lost its network connection and had to operate in a completely unknown environment with no map data at all, its performance would undoubtedly suffer significantly. No matter how powerful the perception system is, it remains constrained by the physical detection range of its sensors and by line-of-sight obstructions. For instance, cameras cannot see which way an overpass two kilometers ahead leads, or a traffic light hidden behind a large truck. To fill these perception gaps, automakers generally adopt a compromise: 'lightweight maps' or 'standard-definition maps' (SD Maps).

Lightweight maps (LD Maps) can be seen as a streamlined, downgraded version of high-definition maps. They drop the highly volatile, precision-demanding centimeter-level coordinates and retain only basic road topology, lane-level connectivity, speed limits, and traffic light locations. These maps typically have meter-level accuracy, but they cost 80% to 95% less to produce than high-definition maps, and their update frequency can reach weekly or even daily. More importantly, lightweight maps can be maintained through 'crowdsourced updates.' Thousands or even millions of mass-produced vehicles on the road act as mobile probes: when they detect discrepancies between real-world road conditions and the map, they upload the differences to the cloud in real time, where large models automatically annotate new map layers and distribute them back to the fleet. This 'living map', built from the pooled observations of real traffic, stays far more current than static maps collected by a few survey vehicles.
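A crowdsourced pipeline has to separate real road changes from one-off sensor noise. One simple policy, sketched below, accepts a change to a road segment only when enough distinct vehicles report the same new value. The report format, the `min_vehicles` threshold, and the segment IDs are hypothetical, and a production system would also weigh recency, location accuracy, and confidence.

```python
from collections import Counter, defaultdict


def aggregate_reports(current_map, reports, min_vehicles=3):
    """Return {segment_id: new_value} for changes confirmed by enough distinct vehicles.

    reports: iterable of (vehicle_id, segment_id, observed_value). A vehicle's
    later report for a segment replaces its earlier one, so no vehicle votes twice.
    """
    votes = defaultdict(dict)                      # segment -> {vehicle: value}
    for vehicle_id, segment_id, value in reports:
        votes[segment_id][vehicle_id] = value
    updates = {}
    for segment_id, by_vehicle in votes.items():
        value, count = Counter(by_vehicle.values()).most_common(1)[0]
        if count >= min_vehicles and value != current_map.get(segment_id):
            updates[segment_id] = value
    return updates


# Hypothetical lane counts per road segment.
current = {"seg_17": 3, "seg_42": 2}
reports = [
    ("car_a", "seg_17", 2), ("car_b", "seg_17", 2), ("car_c", "seg_17", 2),  # lane closed for works
    ("car_d", "seg_42", 1), ("car_d", "seg_42", 1),                          # one noisy car, twice
]
print(aggregate_reports(current, reports))
```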

Comparative Analysis of Different Map Types Across Various Dimensions

Even in Huawei's ADS 3.0, which is billed as 'truly map-free,' navigation maps still play an indispensable role. They provide macroscopic route planning, telling the system where to exit the highway or at which intersection to make a U-turn. Without this 'global base map,' an autonomous driving system would be like a foreigner in an unfamiliar city without a smartphone map: able to avoid the pothole right in front of it, but struggling to reach the destination.
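Macroscopic route planning on a navigation map is a classic shortest-path problem. The minimal Dijkstra sketch below runs over a hypothetical road graph (node names and lengths in meters are invented) and shows the kind of answer the SD map supplies: the sequence of intersections to pass through, which the perception stack then executes lane by lane.

```python
import heapq


def shortest_route(graph, start, goal):
    """Dijkstra over a road graph {node: [(neighbour, length_m), ...]}.

    Returns (list_of_nodes, total_length_m), or (None, inf) if goal is unreachable.
    """
    dist = {start: 0.0}
    prev = {}
    heap = [(0.0, start)]
    while heap:
        d, node = heapq.heappop(heap)
        if node == goal:
            break
        if d > dist.get(node, float("inf")):
            continue                                  # stale heap entry
        for nxt, length in graph.get(node, []):
            nd = d + length
            if nd < dist.get(nxt, float("inf")):
                dist[nxt] = nd
                prev[nxt] = node
                heapq.heappush(heap, (nd, nxt))
    if goal not in dist:
        return None, float("inf")
    path = [goal]
    while path[-1] != start:                          # walk predecessors back to start
        path.append(prev[path[-1]])
    return path[::-1], dist[goal]


ROADS = {
    "home": [("ring_rd_exit", 500.0), ("market_st", 200.0)],
    "market_st": [("ring_rd_exit", 200.0), ("office", 800.0)],
    "ring_rd_exit": [("office", 300.0)],
}
route, length = shortest_route(ROADS, "home", "office")
print(route, length)
```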

At this stage, maps are no longer the dominant input to autonomous driving perception; instead they serve as a special kind of 'sensor prior.' They give vehicles expectations beyond their line of sight, allowing the system to anticipate a tunnel or complex roundabout ahead and adjust its perception strategy and acceleration/deceleration plans in advance.
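The value of such a prior shows up in simple kinematics. At constant deceleration a, slowing from speed v0 to v takes a distance d = (v0^2 - v^2) / (2a). The sketch below assumes a comfortable deceleration of 1.0 m/s^2 and an effective perception range of 150 m, both illustrative values, and checks whether braking from 80 km/h to 30 km/h for a roundabout must begin before the roundabout is even visible.

```python
def kmh_to_ms(speed_kmh):
    """Convert km/h to m/s."""
    return speed_kmh / 3.6


def braking_distance_m(v0_kmh, v_kmh, decel_ms2):
    """d = (v0^2 - v^2) / (2a): distance to slow from v0 to v at constant deceleration a."""
    v0, v = kmh_to_ms(v0_kmh), kmh_to_ms(v_kmh)
    return (v0 ** 2 - v ** 2) / (2 * decel_ms2)


PERCEPTION_RANGE_M = 150.0   # assumption: illustrative effective sensing range
COMFORT_DECEL_MS2 = 1.0      # assumption: gentle, passenger-friendly deceleration

d = braking_distance_m(80, 30, COMFORT_DECEL_MS2)
needs_map_prior = d > PERCEPTION_RANGE_M
print(f"{d:.1f} m needed; braking must start before the roundabout is visible: {needs_map_prior}")
```

Under these assumptions about 212 m of braking distance is needed, so a smooth slowdown is only possible if the map announces the roundabout before the sensors can see it.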

Why Has 'Map-Free' Become Inevitable?

The inevitability of 'map-free' driving stems from both commercial and technical considerations. Commercially, large-scale adoption of autonomous driving requires shedding the high cost of manual data collection. The industry generally believes that once map-free solutions match the safety of map-based ones, companies will never return to the high-definition map route, because doing so would defy commercial logic. Low-cost pure vision or lightweight-map solutions can significantly reduce the price of intelligent vehicles, bringing advanced intelligent driving to models priced at 150,000 yuan or even 100,000 yuan. That is the key to the industry's explosive growth.

However autonomous driving technology evolves, the industry must keep safety as its 'anchor.' For Level 3 and above, passenger safety leaves no room for error, and high-definition maps are still considered crucial 'insurance' in extreme weather or where sensors reach their limits. Some worry that over-reliance on real-time perception could have catastrophic consequences if perception accuracy degrades in heavy rain, dense fog, or at tunnel entrances with drastic lighting changes. To handle these edge cases, the industry is exploring more sophisticated 'end-to-end' large models. By learning to imitate billions of miles of real-world driving data, vehicles can develop a human-like 'driving intuition' that goes beyond visual cues to an understanding of physical rules and game-theoretic interaction.

In the foreseeable future, autonomous driving will not completely sever ties with maps. Instead, maps will become more 'imperceptible' and 'invisible.' They may no longer exist as standalone software packages but will be deeply integrated into the cloud-based data loop. The vehicle end will be responsible for real-time modeling and decision-making, while the cloud end will provide macroscopic guidance and long-term memory. This triangular structure of 'strong perception, lightweight maps, and heavy computing power' will gradually replace the cumbersome and expensive model that relied on costly survey vehicles.

Final Thoughts

Although autonomous driving no longer requires rigid, slow-to-update, and expensive high-definition maps, it will always need a 'living map' that provides direction and macroscopic prediction. The transition from map-based to map-free driving is not a technological regression but a leap in the evolution of intelligent agents, from 'rote memorization' to 'drawing inferences about new situations from what they have learned.'
