ChatGPT Unveils Age Prediction: AI Learns to Customize Protections Based on User Conduct

01/23 2026

On January 20, 2026, OpenAI formally rolled out the 'Age Prediction' feature in the consumer edition of ChatGPT. Rather than relying on users' self-reported ages, the system estimates whether a user is under 18 from behavioral cues such as account tenure, active hours, and interaction style. When it judges that an account likely belongs to a minor, it applies stricter safety settings, backed by parental controls and a third-party verification service. This marks a shift in how AI platforms protect minors, from 'self-declaration' to 'behavioral identification.'

The Technical Rationale Behind ChatGPT's Age Prediction

For a long time, the protection of minors on AI platforms has relied on a passive model of 'self-reported age + content rating,' in which users simply tick 'over 18' at registration to gain full access. This method is easily bypassed, and it does nothing about minors who use adult accounts.

OpenAI's 'Age Prediction' feature disrupts this conventional logic. It is anchored in a multi-dimensional prediction model that scrutinizes account and behavioral indicators, encompassing:

Account Dimensions: Fundamental details such as registration duration, account activity, and payment status.

Behavioral Dimensions: Daily active periods (e.g., regular late-night usage), interaction frequency, content inclinations in queries, dialogue length, and tone.

Supplementary Dimensions: Age information furnished during registration serves as a secondary reference, not the sole determinant.

The model's primary strength resides in 'dynamic identification.' Unlike one-off age declarations, it perpetually analyzes user behavior and refreshes age predictions. Even adult users who persistently exhibit minor-like behavior (e.g., frequently posing juvenile-themed queries, engaging in late-night exchanges) may be flagged as 'suspected minors' and trigger protective measures. Conversely, minors emulating adult behavior are unlikely to completely elude detection.
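The idea of 'dynamic identification' can be illustrated with a minimal sketch. OpenAI has not published its model, so everything below is an assumption made for illustration: the signal names, the weights, the logistic scoring, the smoothing factor, and the 0.6 threshold are all hypothetical. The sketch only shows how several weak signals might be combined into one score and how that score could be refreshed as new behavior arrives.

```python
import math
from dataclasses import dataclass

# Hypothetical signals and weights, chosen only for illustration.
WEIGHTS = {
    "account_age_days": -0.002,   # older accounts lean adult
    "has_payment_method": -1.5,   # paid accounts lean adult
    "late_night_ratio": 1.0,      # share of sessions in late-night hours
    "juvenile_topic_ratio": 2.5,  # share of queries on school-age topics
    "self_reported_minor": 2.0,   # secondary reference, not decisive
}
BIAS = -1.0


def minor_score(signals: dict[str, float]) -> float:
    """Combine signals into a logistic score in (0, 1); higher means more likely under 18."""
    z = BIAS + sum(weight * signals.get(name, 0.0) for name, weight in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


@dataclass
class AgeEstimate:
    """Running estimate that is refreshed each time new behavior is observed."""
    probability: float = 0.5
    smoothing: float = 0.3  # how strongly the newest observation shifts the estimate

    def update(self, signals: dict[str, float]) -> float:
        latest = minor_score(signals)
        self.probability = (1 - self.smoothing) * self.probability + self.smoothing * latest
        return self.probability

    def protections_on(self, threshold: float = 0.6) -> bool:
        return self.probability >= threshold


teen_like = {"account_age_days": 30, "late_night_ratio": 0.5, "juvenile_topic_ratio": 0.6}
estimate = AgeEstimate()
for _ in range(5):
    estimate.update(teen_like)
print(estimate.protections_on())
```

Because the estimate is an exponential moving average, a single unusual session moves it only slightly, while a sustained pattern eventually crosses the threshold in either direction.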

A Balanced Strategy for Minor Protection

For accounts identified as belonging to minors, ChatGPT restricts five categories of high-risk content:

1. Explicit display of violent or bloody imagery;

2. Hazardous viral challenges that minors might be tempted to imitate (e.g., extreme pranks, perilous experiments);

3. Role-play content involving sex or violence;

4. Descriptions or guidance pertaining to self-harm or suicide;

5. Content promoting extreme aesthetics, unhealthy dieting, or body shaming.

To avert false positives from impacting adult users, OpenAI has integrated Persona, a third-party identity verification service. Users inaccurately classified as minors can swiftly verify their identity via selfie uploads, reinstating full account functionality upon successful verification. This harmonizes security with user experience.
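The interplay between the restricted categories and the verification path can be sketched as a small policy gate. This is an illustrative sketch only: the category labels, the `AgeStatus` states, and both functions are hypothetical names, and the `verified_as_adult` flag merely stands in for the outcome of a third-party check. No real Persona or OpenAI API is modeled here.

```python
from enum import Enum


class AgeStatus(Enum):
    ADULT = "adult"
    SUSPECTED_MINOR = "suspected_minor"
    VERIFIED_ADULT = "verified_adult"


# Hypothetical labels for the five restricted categories listed above.
RESTRICTED_FOR_MINORS = {
    "graphic_violence",
    "dangerous_challenges",
    "sexual_or_violent_roleplay",
    "self_harm",
    "extreme_body_ideals",
}


def is_allowed(status: AgeStatus, category: str) -> bool:
    """Model only the age-based layer: suspected minors lose the restricted categories."""
    if status is AgeStatus.SUSPECTED_MINOR:
        return category not in RESTRICTED_FOR_MINORS
    return True


def apply_verification(status: AgeStatus, verified_as_adult: bool) -> AgeStatus:
    """Lift the restriction only when a suspected minor passes verification."""
    if status is AgeStatus.SUSPECTED_MINOR and verified_as_adult:
        return AgeStatus.VERIFIED_ADULT
    return status


status = AgeStatus.SUSPECTED_MINOR
print(is_allowed(status, "self_harm"))
status = apply_verification(status, verified_as_adult=True)
print(is_allowed(status, "self_harm"))
```

Keeping `VERIFIED_ADULT` as a separate state from `ADULT` is a design choice in this sketch: it would let a system avoid re-flagging an account that has already passed verification.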

Moreover, the system provides customizable parental controls, affording parents adaptable management options. They can set 'quiet hours' (prohibited usage periods, such as during school or bedtime), oversee account memory permissions (to avert repeated access to sensitive content), and even receive alerts if the system detects signs of acute psychological distress (e.g., frequent self-harm-related queries).
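A 'quiet hours' rule is simple to express, with one subtlety: a bedtime window usually crosses midnight. The function below is a hypothetical sketch of such a check, not OpenAI's implementation; the name `in_quiet_hours` and the example windows are assumptions.

```python
from datetime import time


def in_quiet_hours(now: time, start: time, end: time) -> bool:
    """Return True if `now` falls inside the window [start, end).

    Handles windows that wrap past midnight, such as 22:00 to 07:00.
    A window with start == end is treated as empty.
    """
    if start <= end:
        return start <= now < end
    # Window wraps midnight: late evening or early morning both count.
    return now >= start or now < end


bedtime = (time(22, 0), time(7, 0))
print(in_quiet_hours(time(23, 30), *bedtime))
print(in_quiet_hours(time(12, 0), *bedtime))
```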

Why Did OpenAI Introduce Age Prediction Now?

This feature launch is not merely an 'active innovation' by OpenAI but also a reaction to regulatory pressures and industry trends.

On one hand, OpenAI is under scrutiny from the U.S. Federal Trade Commission (FTC) over allegations of 'negative impacts of AI chatbots on adolescents,' along with multiple lawsuits. Parents have previously voiced grievances that ChatGPT failed to obstruct harmful content, exposing minors to violence, pornography, and psychological harm. Launching age prediction is a pivotal move to address regulatory reviews and alleviate legal risks.

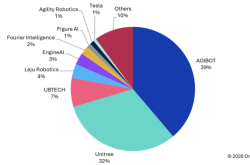

On the other hand, the protection of minors has become a global priority for the AI sector. As AI tools proliferate, more adolescents use ChatGPT for learning and entertainment, yet they are more vulnerable to harmful content than adults. Competitors such as Google Gemini (formerly Bard) and Anthropic's Claude have introduced varying degrees of protection for minors, mostly relying on content ratings and voluntary declarations. OpenAI's 'behavioral identification + dynamic protection' model represents a more sophisticated approach within the industry.

From an industry standpoint, AI platform safety is transitioning from 'content filtering' to a dual model of 'user identification + content rating'—not only evaluating 'whether content is harmful' but also 'whether users should access it.' This is the pivotal direction for future AI safety evolution.

Can Age Prediction Genuinely Safeguard Minors?

Despite its seemingly robust design, the 'Age Prediction' feature confronts several controversies and challenges, primarily centered on three facets:

1. Can behavioral cues fully denote age?

The model's foundation rests on the 'correlation between behavior and age,' yet this correlation is not absolute. For instance, some adult users may frequently use ChatGPT late at night for work or study, or may tend to ask juvenile-themed questions, and so be misclassified as minors. Conversely, some mature minors may evade detection by emulating adult interaction styles. While OpenAI aims to improve the model, 100% accuracy remains improbable in the near term.
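A short calculation shows why even a fairly accurate classifier produces a noticeable number of misclassified adults. The figures used here (10% of users being minors, 95% sensitivity, 95% specificity) are purely illustrative assumptions and are not numbers published by OpenAI.

```python
def flagged_breakdown(minor_share: float, sensitivity: float, specificity: float) -> dict[str, float]:
    """Split a user population into outcomes, expressed as shares of all users."""
    true_pos = minor_share * sensitivity               # minors correctly flagged
    false_pos = (1 - minor_share) * (1 - specificity)  # adults wrongly flagged
    false_neg = minor_share * (1 - sensitivity)        # minors missed
    return {
        "share_of_flags_that_are_minors": true_pos / (true_pos + false_pos),
        "adults_wrongly_flagged": false_pos,
        "minors_missed": false_neg,
    }


result = flagged_breakdown(minor_share=0.10, sensitivity=0.95, specificity=0.95)
for name, value in result.items():
    print(f"{name}: {value:.3f}")
```

Under these assumed figures, about 68% of flagged accounts belong to minors, so roughly one flagged account in three belongs to an adult. This is the kind of gap a verification path for misclassified adults is meant to cover.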

2. Does behavioral analysis encroach upon user privacy?

Age prediction requires collecting and analyzing large quantities of user behavioral data, including active times, interaction content, and usage patterns. This raises privacy concerns: how does OpenAI guarantee that the data is not misused or shared with third parties? OpenAI has not yet spelled out its data usage policies for the feature, and balancing 'behavioral identification' with 'privacy protection' will be critical as global data compliance rules tighten.

3. Can protections encompass all risk scenarios?

The five categories of high-risk content blocked by ChatGPT primarily target 'explicit harmful information,' yet 'implicit risks' (e.g., enticing minors to commit online fraud, disseminating extremist ideologies, or leaking personal information) remain unaddressed. Additionally, parental controls hinge on proactive parental involvement. If parents lack awareness or technical acumen, the feature's efficacy diminishes.

ChatGPT's 'Age Prediction' feature marks a significant step in how the AI industry protects minors. It shows that AI platforms can 'customize protections based on user behavior,' shifting from passive content filtering to proactive user identification and targeted safeguards.

Nevertheless, we must acknowledge that technology alone is not a panacea. Age prediction is merely the 'initial step' in minor protection. Future endeavors necessitate collaboration among platforms, parents, and regulators to continually refine technology, enhance regulations, and bolster guidance. Only then can we forge a safe and healthy AI environment for adolescents, ensuring AI technology empowers rather than endangers minors.

For OpenAI, launching age prediction is a pivotal move to address regulatory pressures and restore its reputation. For the AI sector, it signals a 'safety upgrade'—when technological innovation aligns with robust safety measures, AI can genuinely attain maturity and compliance.