How Did AI Outsmart Humans to Win Championships?

01/23 2026

Can you believe it? An image that was never captured by any camera won first place in a photography competition judged by real people.

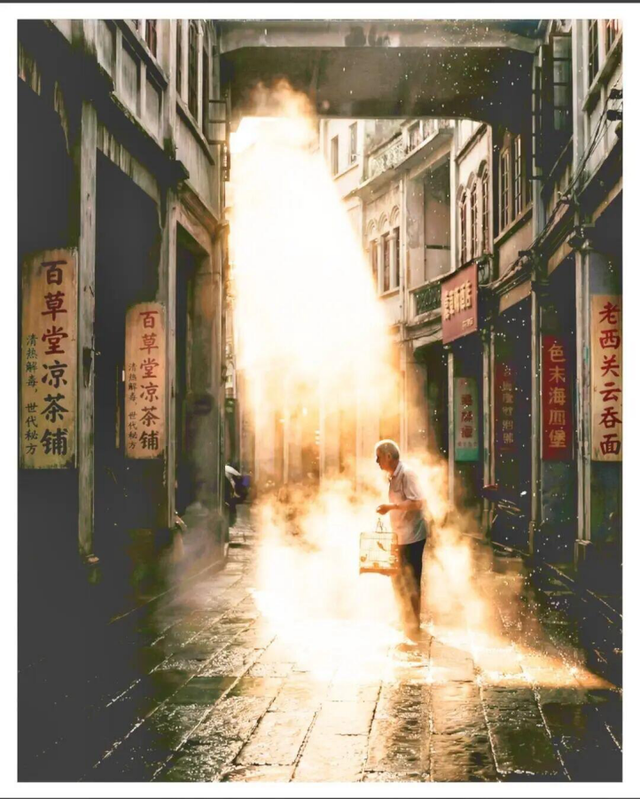

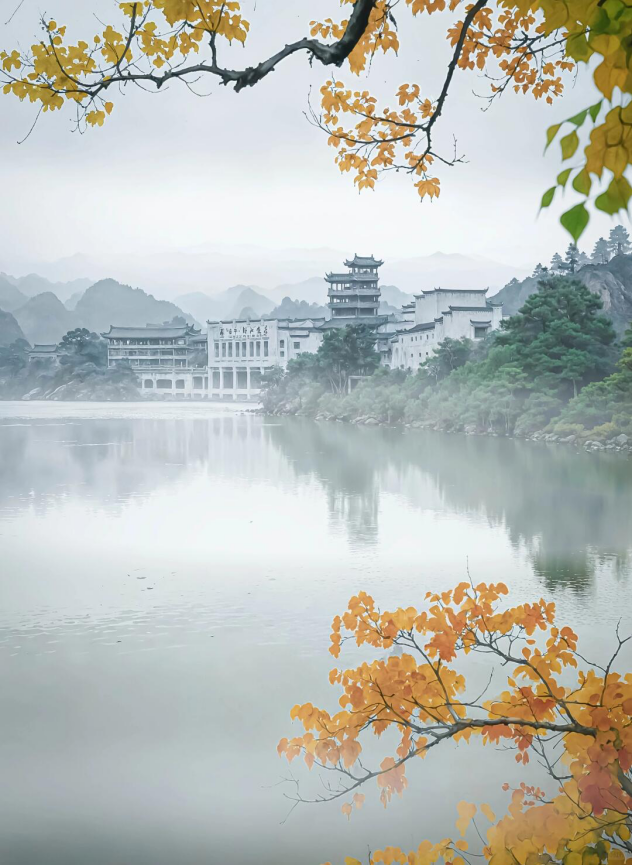

In early 2026, a major controversy erupted in the photography community. 'Old Light of Arcade Houses,' the grand-prize-winning humanistic documentary work in a 'City Memory Photography Competition' hosted by a hotel group, was exposed by netizens as an AI-generated image. On closer inspection, the seemingly nostalgic and warm Lingnan arcade-house street scene revealed numerous telltale signs of AI generation: repeated herbal-tea shop signs, distorted and misaligned fingers, garbled characters on the plaques, and more.

Yet this textbook AI-generated image fooled five professional judges, each with extensive photography credentials, and took the top spot among thousands of entries. Only after a public outcry was the runner-up hastily moved up to first place.

This incident is not an isolated one. Over the past three years, AI has repeatedly outsmarted humans to win creative competitions worldwide, in fields including painting and fiction. Participants deliberately concealed AI involvement in their submissions, and the works won over judges with their polished, realistic surfaces, only to be exposed later by public scrutiny or by the entrants' own admissions.

These incidents provoke public outrage not merely because technology has overstepped its bounds, but because AI is systematically taking championships meant for humans while posing as human creativity, and is usually unmasked only after the fact.

So what do these recurring cases of AI outsmarting humans to win championships signify? Does this mean humanity has been defeated by its own invention in the creative domain it cherishes most?

The story begins with the 'City Memory Photography Competition' hosted by a well-known hotel group in China. Intended to capture the city's cultural character and preserve its urban memories, the contest invited five professional judges from photography and art associations.

Among nearly a hundred shortlisted works, entry #42, titled 'Old Light of Arcade Houses,' stood out and took first place overall.

At first, everyone was moved by the image's distinctive old-photo texture. Warm yellow tones created a nostalgic atmosphere, the composition was steady, and light spilling between the buildings' shadows brought out rich layers of detail on the weathered facades. An elderly man carrying a birdcage in the foreground seemed to embody the street's unhurried pace.

People naturally assumed a photographer had captured it after patient waiting, careful attention to the light, and many attempts.

Only days later did netizens, boosting the contrast to examine the details, discover the inconsistencies: two adjacent shops on the left displayed identical 'herbal tea' signs, the elderly man's fingers did not join his palm properly, and several Chinese characters had malformed strokes.

What angered people even more was that only after the awards had been presented, the news released, and the applause given did anyone realize that AI had claimed the championship under false pretenses.

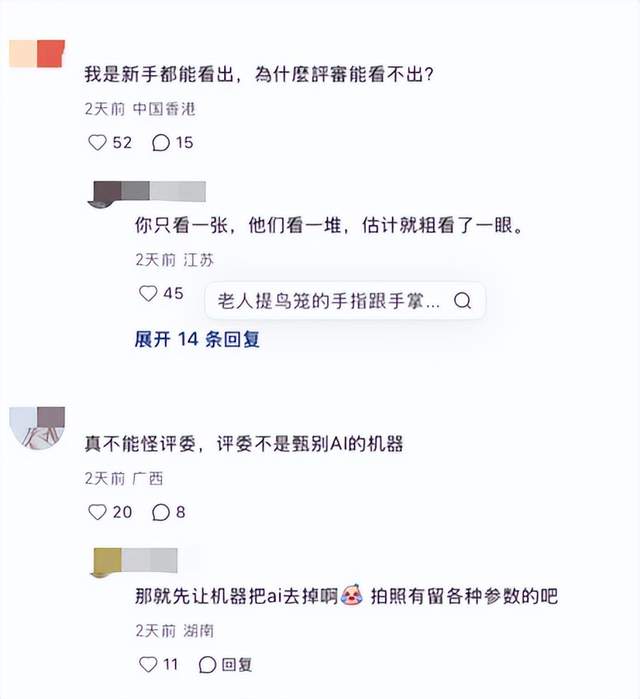

After the incident, discussion centered on how the judges could have missed it and whether the organizers had vetted the entries. From the judges' side, however, the situation was one of even greater helplessness.

The work had no obvious technical flaws and no flashy effects; it complied closely with the rules and was aesthetically neutral and emotionally safe. Without knowing it was AI-generated, a judge would have had almost no rational grounds for ranking it below genuine human photographs.

'Old Light of Arcade Houses' is just the tip of the iceberg. Similar incidents have recurred across creative fields worldwide in recent years, following a nearly identical pattern: the AI origin is concealed during the competition, then exposed or confessed after the award, revealing that the winner was not human after all.

The 2022 Colorado State Fair's digital art champion drew fierce criticism once it emerged that the image had been made with Midjourney; Akutagawa Prize winner Rie Kudan of Japan admitted to using AI assistance in writing 'Tokyo Tower of Sympathy'; and a Tsinghua University professor disclosed, half in jest and only after it had won, that his work 'Machine Memory Land' was AI-generated.

What makes this infuriating is that the public's appreciation goes beyond admiring technique: it involves emotional investment in the artistic vision and in the authenticity of the story behind the work.

We have traditionally believed that art springs from the unique expression of individual life experience, painful reflection, and flashes of inspiration. Now, entry after entry shows that when the goal is simply to win awards, algorithms can outperform humans with greater efficiency and precision.

So why can AI repeatedly deceive judges and advance in competitions?

When AI works are presented openly as AI creations, the discussion tends to revolve around technical, ethical, or other open-ended questions. But when they compete as 'human creations,' win awards, and see their submitters honored as creators, outrage erupts.

Why is the public reaction so much stronger when a work credited to a human turns out to be AI than when such a work simply fails to win?

Because it strikes at one of humanity's most fundamental beliefs: that nothing moves us more than authentic emotion. Yet we were moved by a synthetic narrative.

Virtually all the controversial cases share one trait: before they were exposed, they looked entirely worthy of first place.

So how does AI manage to pass as championship-quality work and win so many trophies meant for humans?

A major reason is that current evaluation systems judge almost entirely on the finished result, which is precisely what machines and algorithms excel at optimizing.

On the technical side, AI has learned to please. The judging criteria in photography, painting, and even literary competitions are, at bottom, highly quantifiable, templated indicators, and AI has mastered them by training on vast numbers of award-winning works and extracting statistical patterns of 'quality.' In photography, it has learned that judges favor golden-ratio compositions, soft lighting, low saturation, and a cinematic look; in genre fiction, its mature narrative style lets it pass as human, an informal Turing test of sorts.

Caption: The first-prize winner of a Fujian provincial photography competition was exposed as AI-generated.

In other words, a photographer may miss a shot in poor light and a writer may make typos on an off day, but AI simply operates within the 'mainstream aesthetic average' it has learned, producing work that satisfies most judges.

Current blind-judging rules inadvertently roll out the red carpet for AI. Most creative competitions use anonymous judging, in which judges see only the works, not the authors, the tools, or the creative process. This 'double-blind' system, intended to ensure fairness, becomes AI's camouflage: without author information, judges assume every entry is human-made and do not verify originality or authenticity. AI exploits this loophole; it need not prove it is human, only look like an excellent human creator.

On the human side, concealing AI involvement costs almost nothing and can pay off handsomely. Participants often take a gamble: submissions require no raw files, no AI disclosure, and no creative logs. Winning brings prize money, exposure, and a resume boost; even if the entry is exposed later, most organizers quietly withdraw the award without holding anyone accountable or imposing bans. This high-reward, low-risk setup naturally tempts some to try their luck: 'Nobody will notice anyway.'

In the end, AI works beat humans not through greater creativity or inspiration but by eliminating, in the finished product, the flaws that humans inevitably make. If competitions compare only visual polish or textual fluency, humans cannot match algorithms on many of those dimensions.

But does this mean human creators face total defeat? Absolutely not.

Unlike earlier digital forgeries, which required sophisticated editing skills, this kind of 'AI cheating' needs only well-crafted prompts. Participants concealed the AI generation, exploiting the public's default trust that a photograph records reality. Once that trust is broken, the damage is far greater than the loss of a single award.

It resembles the fable of the boy who cried wolf. The first time someone says 'this image is AI-made,' it may be dismissed; the second time, it raises doubts; by the third or fourth time, even genuine human work is met with suspicion from the start.

Over time, our first reaction to a flawless photo, an eloquent text, or an exquisitely composed painting becomes suspicion: 'Could this be AI?' We search for flaws to prove that a human was involved, turning artistic appreciation into flaw-hunting, an ironic inversion of creative values.

So what should we do? Banning AI outright is neither possible nor wise. 'Literary inquisition'-style AI screening would be costly, prone to false positives, and stifling to creative freedom.

The question is not whether AI can be used, but in what contexts and under what attribution. Allowing undisclosed AI work into competitions for documentary photography or original fiction is fundamentally unfair to those who genuinely wait on street corners or revise draft after draft.

To address this, competition design could start by giving AI works a visible place of their own. Just as sports competitions separate athletes by gender, age, or use of assistive devices, creative competitions could clearly distinguish purely human creation from AI-generated or AI-assisted categories. The AI track would encourage experimental human-machine collaboration, while the human track would preserve authentic human experience and social memory, so that each kind of work is judged fairly in its proper context.

Meanwhile, the creative process itself must be valued anew. Photographers may wait days for the perfect moment; writers revise twenty drafts to arrive at an original plot; painters fill their desks with sketches before the final work. If competitions encouraged or required creators to submit raw files, sketches, metadata, or creative logs, it would help dispel public doubts and restore weight to the act of creation.

Of course, voluntary compliance is not enough. Rules must be clear and consequences certain. Competition charters should explicitly prohibit AI-generated content in certain tracks and define concealing AI use as academic or professional misconduct. When violations occur, organizers must impose substantial penalties that go beyond withdrawing the award: disqualification, recovery of prize money, and notification across the industry. Only when the cost of cheating far exceeds its benefits will people stop gambling on it.

Ultimately, these stolen championships look like a triumph of technology but are really the product of lagging institutions and human temptation. Our task is not to banish AI but to redefine what deserves creative reward. Only then can human creativity avoid losing on its home field to an opponent of its own making.