Is Computing Power Falling Short? Let Ingenuity Step In: DeepSeek and Others Are Breaking Free from the Computing Power Fixation

01/26 2026

The Fallacy of Computing Power Is Being Exposed

In the tech sphere, Silicon Valley has long carried itself with the air of a "creator". Ever since large AI models took off, OpenAI CEO Sam Altman has promoted a brute-force aesthetic: intelligence equals computing power, computing power equals graphics cards, and graphics cards equal success.

However, this notion is now being dismantled by a group of Chinese "algorithm enthusiasts".

Recently, a Google study reported that DeepSeek's R1 and Alibaba Cloud's QwQ-32B models can emulate human collective intelligence.

Put simply, when these models face complex problems, they no longer reason as a single, isolated voice. Instead, their reasoning evolves into something like an internal "society of thought", in which perspectives with different personality traits and domain expertise interact, and that interaction produces stronger capabilities.

The large-model industry is full of "stackers" who pile on parameters and GPUs, yet few of them have prompted Google to study their underlying logic. This is not merely a technical win; it is a path of hard-won efficiency that Chinese companies have carved out under computing power constraints.

DeepSeek: More 'Bang for the Buck'

For a long time, Silicon Valley's large-model playbook has been highly convergent, almost single-track: amass the most expensive hardware, build the largest clusters, and inject the most aggressive capital.

From GPT-3 to GPT-4, and subsequently to GPT-4o, this route has been repeatedly validated as effective. Meta hoarded hundreds of thousands of GPUs for Llama 3, while Microsoft and OpenAI's "Stargate" project entails investments in the hundreds of billions of dollars, encompassing the entire computing power, data center, and power infrastructure chain. This approach is straightforward; it essentially trades resource redundancy for certainty in leadership.

In essence, when computing power is abundant and capital is plentiful, engineering efficiency often takes a back seat; what truly counts is "scaling up first".

However, Google's research unveils a different reality: DeepSeek and its counterparts excel not because they outspend OpenAI but because they are more "cost-effective".

The study highlights that the leap in capabilities of Chinese models like DeepSeek does not stem from an exponential expansion of computing power but from a restructuring of the internal reasoning process.

When given complex instructions, these models do not commit to a single generation path. Instead, they run several problem-solving strategies in parallel internally, continually comparing and refining them until they converge on a more stable answer.
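To make the "compare, refine, converge" loop concrete, here is a toy sketch in Python. It is only an analogy: the Google study describes conversational behavior inside a model's reasoning, and nothing here reproduces how DeepSeek-R1 or QwQ-32B actually work. The three perspective functions (`direct`, `decompose`, `hasty`) and the multiplication task are hypothetical; one perspective deliberately makes a slip so the others have something to correct.

```python
from collections import Counter
from typing import Callable, List, Tuple

# A hypothetical "perspective" sees the task (a * b) plus its peers' last answers.
Perspective = Callable[[int, int, List[int]], int]

def direct(a: int, b: int, peers: List[int]) -> int:
    return a * b

def decompose(a: int, b: int, peers: List[int]) -> int:
    # Split b into tens and units: a*b = a*10*tens + a*units.
    tens, units = divmod(b, 10)
    return a * tens * 10 + a * units

def hasty(a: int, b: int, peers: List[int]) -> int:
    # Adopts any peer answer that passes a division check...
    for p in peers:
        if b != 0 and p % b == 0 and p // b == a:
            return p
    # ...otherwise falls back on a first guess containing a deliberate slip.
    return a * b + 10

def society_of_thought(a: int, b: int, perspectives: List[Perspective],
                       max_rounds: int = 3) -> Tuple[int, int]:
    """Return (majority answer, rounds used); stop early on full agreement."""
    if max_rounds < 1:
        raise ValueError("max_rounds must be at least 1")
    peers: List[int] = []
    for round_no in range(1, max_rounds + 1):
        answers = [f(a, b, peers) for f in perspectives]
        best, count = Counter(answers).most_common(1)[0]
        if count == len(answers):  # every perspective agrees: converged
            return best, round_no
        peers = answers  # share this round's answers for comparison next round
    return best, max_rounds
```

With all three perspectives, `society_of_thought(17, 24, [direct, decompose, hasty])` returns `(408, 2)`: the hasty perspective's first guess of 418 is outvoted, and in round two it checks its peers' answers and adopts 408. Run alone, `society_of_thought(17, 24, [hasty])` returns `(418, 1)`, because a single voice "agrees" with itself immediately and never catches its own mistake.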

This "multi-agent negotiation" mode does not chase the fastest possible single reasoning pass; instead, it substantially reduces error rates and rework costs per unit of computing power. When computing power is limited, the ability to "take fewer detours" often matters more than the ability to "run faster".
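One way to read "rework cost per unit of computing power" is as expected compute per correct answer. The sketch below assumes (an assumption for illustration, not a claim from the article) that every wrong answer is detected and the attempt is rerun, so the number of attempts is geometric with mean 1/p. The costs and success rates are hypothetical numbers, not measurements of any model.

```python
def expected_cost_per_correct(cost_per_attempt: float, p_correct: float) -> float:
    """Expected compute spent per correct answer.

    Assumes each failed attempt is detected and rerun, so the number of
    attempts is geometric with mean 1 / p_correct.
    """
    if not 0.0 < p_correct <= 1.0:
        raise ValueError("p_correct must be in (0, 1]")
    return cost_per_attempt / p_correct

# Hypothetical numbers only, not benchmarks:
fast = expected_cost_per_correct(cost_per_attempt=1.0, p_correct=0.5)     # one quick pass
careful = expected_cost_per_correct(cost_per_attempt=1.5, p_correct=0.9)  # compare-and-refine pass
print(f"fast: {fast:.2f}, careful: {careful:.2f}")  # fast: 2.00, careful: 1.67
```

Under these made-up numbers, the careful mode spends 50% more per attempt yet less per correct answer. In general it wins whenever its ratio of cost to accuracy is lower, which is the "take fewer detours" argument in arithmetic form.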

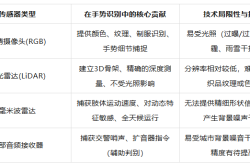

Against this backdrop, techniques such as Mixture of Experts (MoE) and Group Relative Policy Optimization (GRPO) have gained significant traction in domestic models. MoE divides the model's capacity among specialized "expert" modules and activates only a few of them for each token, so the full model never has to run at once; GRPO, a reinforcement-learning training method, samples a group of answers to the same prompt and optimizes each one relative to the rest of the group, which makes training more stable and controllable.
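Both mechanisms can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not DeepSeek's or Qwen's implementation: the router weights and expert functions are hypothetical, inputs are scalars rather than token vectors, and only GRPO's group-relative advantage is shown (the full objective also includes a clipped policy ratio and a KL penalty). Normalization conventions vary between implementations; here the group's population standard deviation is used with a small epsilon.

```python
import math
import statistics
from typing import Callable, List, Sequence, Tuple

def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x: float, router: Sequence[float],
                experts: Sequence[Callable[[float], float]],
                k: int = 2) -> Tuple[float, List[int]]:
    """Toy top-k MoE layer: only the k best-scoring experts are evaluated."""
    scores = [w * x for w in router]  # hypothetical linear router, one score per expert
    top = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    gates = softmax([scores[i] for i in top])  # renormalize over the chosen experts
    y = sum(g * experts[i](x) for g, i in zip(gates, top))
    return y, top

def grpo_advantages(rewards: Sequence[float], eps: float = 1e-8) -> List[float]:
    """Group-relative advantages for answers sampled from the same prompt."""
    # The group's own mean reward is the baseline, so no learned critic is needed.
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]
```

For example, with router weights `[0.1, 0.5, 0.3, -0.2]` and input `2.0`, `moe_forward` picks experts 1 and 2 and never calls the other two, which is where the compute savings come from. For group rewards `[1, 0, 0, 1]`, `grpo_advantages` returns roughly `[1, -1, -1, 1]`; because the baseline comes from the group itself, GRPO avoids training the separate value model that PPO requires.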

These technical choices share a single objective: to extract the maximum "intelligence output" from limited computing power.

This is not a fortuitous technological decision but an engineering philosophy repeatedly honed by real-world constraints.

If Silicon Valley is still betting on an industrial-scale "armored advance", Chinese model makers are closer to cultivating a lightweight but efficient technical system, offsetting their computing power disadvantage through meticulous design of structure, strategy, and scheduling.

This "algorithmic efficiency" is not idealism but a classic case of survival-driven evolution. Once resources are no longer boundless, efficiency turns from a bonus into the most fundamental competitive barrier, and the hardest one to replicate.

The Erosion of Big Players' Moats

Among the cases in Google's research, the Chinese models cited repeatedly come not from the loudest or most prolific launchers but from DeepSeek and Alibaba's Tongyi Qianwen (Qwen). This is no mere "technical accident"; it signals that a dividing line has emerged in China's AI competition.

Over the past two years, domestic big players have been fixated on parameter counts, leaderboard rankings, and claims of "outperforming GPT-4" at launch events, busily posting hard-to-interpret benchmark charts on social media. These tactics do win more attention in the short term.

However, from the perspective of foundational research and engineering evolution, the marginal value of such competitive approaches is rapidly diminishing. What truly garners global research attention are teams persistently working on the "dirty work" of model architecture, reasoning efficiency, and cost structure.

DeepSeek is backed by High-Flyer Quantitative, and its technical team, steeped in financial engineering, holds exacting standards for computing cost, latency control, and stability. In quantitative trading, computing power is never an unlimited variable; "how to draw more reliable conclusions from less computation" is itself a core capability.

As a result, DeepSeek never built its story around the application layer; from the outset it relentlessly worked on reasoning costs and model structure. Its bet is that even without a 10,000-GPU cluster, a sufficiently ingenious architecture can still approach cutting-edge capabilities.
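How far can architecture stand in for cluster size? A back-of-the-envelope calculation gives a sense of scale. DeepSeek-V3, the base model from which DeepSeek-R1 was trained, has about 671B total parameters, of which about 37B are activated per token. The sketch assumes the common rule of thumb of roughly 2 FLOPs per active parameter per token for a forward pass, ignores attention and routing overhead, and compares against a hypothetical dense model of the same total size.

```python
def forward_flops_per_token(active_params: float) -> float:
    # Rule of thumb: ~2 FLOPs (one multiply + one add) per active parameter per token.
    return 2.0 * active_params

TOTAL_PARAMS = 671e9   # DeepSeek-V3 (base of R1): total parameters
ACTIVE_PARAMS = 37e9   # parameters activated per token by MoE routing

dense_flops = forward_flops_per_token(TOTAL_PARAMS)  # hypothetical dense model, same size
moe_flops = forward_flops_per_token(ACTIVE_PARAMS)

print(f"active fraction: {ACTIVE_PARAMS / TOTAL_PARAMS:.1%}")            # active fraction: 5.5%
print(f"dense / MoE compute per token: {dense_flops / moe_flops:.1f}x")  # dense / MoE compute per token: 18.1x
```

The roughly 18x gap applies to compute per token, not memory: all 671B parameters still have to be stored and served, so MoE trades memory for arithmetic rather than eliminating hardware needs.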

Alibaba Tongyi Qianwen, on the other hand, has opted for a more platform-oriented path.

By continuously open-sourcing model foundations, Alibaba transforms part of the costs of model training, evaluation, and scenario validation into collaborative experiments with global developers. While this may appear to weaken "exclusive advantages" in the short term, it significantly accelerates the model's evolution in real, complex scenarios over the long run.

In this open system, models are more prone to exposing flaws and correcting them faster, thus advancing further in simulating "collective intelligence" and complex decision-making.

In contrast, certain big players still indulging in "wrapper applications" or solely focused on buying traffic are witnessing their moats crumble. The prosperity of the application layer, built on outsourcing foundational capabilities, often cannot withstand widening gaps in underlying models.

The internet industry is never short of crossover players, but truly successful ones are rare, because once the foundational logic is wrong, no amount of product packaging can sustain long-term prosperity.

In AI, if no new cognitive frameworks or methodologies are contributed at the algorithmic or architectural level, competition will ultimately be compressed into application-layer price wars and redundancy—the most easily disrupted terrain.

The 'Socialization' of Intelligence

The most unsettling aspect of Google's research is this: if AI can already simulate "collective intelligence" internally, how much value remains for human elites?

The study mentions that the "internal debate" capabilities exhibited by models like DeepSeek R1 essentially mean that many roles in human organizations that rely heavily on process collaboration will inevitably be redefined.

In the past, major corporate decisions often required multiple departments: market research, strategic analysis, risk assessment, financial projections...

Now, an AI agent with strong reasoning capabilities can already conduct hundreds or thousands of internal simulations.

This is not "automation" but the internalization of decision-making mechanisms.
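As a rough illustration of replacing several departments' reviews with many internal simulations, the sketch below runs a Monte Carlo scenario analysis. The article does not say what such simulations contain, so everything here is hypothetical: the three role functions stand in for market research, financial projection, and risk assessment, and the plans, demand distribution, and cost shocks are made-up numbers. It illustrates the idea, not how a reasoning model actually deliberates.

```python
import random
import statistics
from typing import Callable, Dict, List

Plan = Dict[str, float]
Scenario = Dict[str, float]
Role = Callable[[Plan, Scenario], float]

# Hypothetical stand-ins for departments; each scores one plan in one scenario.
def market(plan: Plan, s: Scenario) -> float:
    # Revenue: only units that are both produced and demanded get sold.
    return plan["price"] * min(plan["capacity"], s["demand"])

def finance(plan: Plan, s: Scenario) -> float:
    # Production cost: base unit cost plus a random cost shock.
    return -plan["capacity"] * (0.5 + s["cost_shock"])

def risk(plan: Plan, s: Scenario) -> float:
    # Penalty for unsold inventory.
    return -2.0 * max(0.0, plan["capacity"] - s["demand"])

def simulate(plans: Dict[str, Plan], roles: List[Role],
             n: int = 2000, seed: int = 0) -> Dict[str, float]:
    """Average combined role score of each plan over n simulated scenarios."""
    rng = random.Random(seed)
    # Draw scenarios once so every plan is judged against the same futures.
    scenarios = [{"demand": max(0.0, rng.gauss(1.0, 0.3)),
                  "cost_shock": rng.uniform(0.0, 0.5)} for _ in range(n)]
    return {name: statistics.fmean([sum(role(plan, s) for role in roles)
                                    for s in scenarios])
            for name, plan in plans.items()}

PLANS = {
    "cautious":   {"capacity": 0.8, "price": 2.0},
    "balanced":   {"capacity": 1.0, "price": 2.0},
    "aggressive": {"capacity": 1.6, "price": 2.0},
}
means = simulate(PLANS, [market, finance, risk])
best = max(means, key=means.get)
```

Scoring every plan against the same set of drawn scenarios (common random numbers) means differences in the averages reflect the plans themselves rather than sampling luck, and a fixed seed makes the run reproducible.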

For companies like ByteDance, Meituan, and Baidu, which highly depend on data and algorithms, this signals a transition from "human-machine collaboration" to "machine-machine collaboration".

For manufacturing giants like Xiaomi and BYD, this means supply chains and production systems will, for the first time, possess "self-reflective capabilities".

They no longer just execute commands but can question the commands themselves.

This is why more and more companies are introducing strong reasoning models into non-frontend scenarios—not for chatting but for judgment.

Can Wisdom Truly Compensate for Insufficient Computing Power?

Returning to the original question: can wisdom truly compensate for insufficient computing power?

The answer from DeepSeek and Qianwen is: when resources are no longer infinite, wisdom is forced to evolve.

History has repeatedly shown that technological paths truly changing the world often emerge from constraints, not greenhouses.

Today, Chinese AI is quietly but effectively rewriting the competitive logic of large models.

It may not be the fastest, but it is likely more stable; not the most expensive, but more sustainable.

And this may precisely be the key dividing line in the next phase of intelligent competition.

Musk has repeatedly emphasized, "All work will be done by AI in the future unless you choose to work out of interest." As Chinese AI approaches the threshold of "collective intelligence", such predictions are moving from science fiction toward reality at an accelerating pace.