$200 Million Alliance! Snowflake×OpenAI Deep Collaboration: No Monopoly in AI, Only Ecosystem Winners

02/03 2026

On February 2, a $200 million collaboration was officially announced, once again stirring up the enterprise AI sector. Cloud data giant Snowflake and AI leader OpenAI have entered a multi-year strategic partnership. This move not only grants Snowflake's 12,600 customers access to OpenAI models across three major cloud platforms but also establishes a core objective of jointly developing AI agents. This is not merely a technical collaboration but a symbolic shift in enterprise AI competition—from 'model competition' to 'ecosystem alliance.'

Within just three months, Snowflake has made two separate $200 million AI investments—first partnering with Anthropic and now with OpenAI. Meanwhile, OpenAI completed a similar collaboration with ServiceNow just two weeks ago. Behind these high-value deals lies a fundamental restructuring of enterprise AI competition. The era of 'single-model dominance' is over; a new battleground of ecosystem competition around data, models, and scenarios is emerging.

$200 Million for a Mutual Commitment: AI Agents as the Anchor Point

This high-profile collaboration is essentially a targeted exchange of complementary capabilities rather than a simple technology licensing deal. Judging by the agreement's terms, the two sides are tied together far more closely than ordinary partners, along three dimensions that directly address core pain points in enterprise AI adoption.

First, model integration achieves 'full-cloud coverage,' breaking down platform barriers. Under the agreement, OpenAI's full suite of models will be accessible to all Snowflake customers through the Snowflake Cortex AI suite on the three major cloud platforms: Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). Enterprises can therefore use OpenAI's cutting-edge models (including the latest GPT-5.2) directly within the Snowflake data platform they already know, regardless of their original cloud provider and without building additional cross-platform architecture. This significantly lowers the technical barrier to AI adoption. Meanwhile, Snowflake employees will gain full access to ChatGPT Enterprise, boosting internal R&D and operational efficiency.

Second, the collaboration focuses on AI agent development to tackle the challenge of deploying AI in real enterprise scenarios. The core effort is to develop new AI agent solutions within the Snowflake platform based on OpenAI's agent technology (including its Apps SDK and AgentKit), while enabling enterprises to build their own dedicated AI agents. For businesses, AI agents automate complex data workflows, such as automatically capturing internal data, performing cleansing and analysis, generating compliant reports, and even providing decision recommendations based on data insights. The result is a true 'data + intelligence' closed loop that moves beyond isolated model calls. Barış Gültekin, VP of AI at Snowflake, stated that OpenAI and Snowflake's engineering teams will collaborate deeply: 'Our mutual customer status allows us to more accurately capture enterprise needs and drive deep integration of technology and scenarios.'

Finally, the partnership deepens mutual empowerment to build technological synergy. This collaboration is a 'two-way street' rather than a one-way output: OpenAI will use Snowflake as its core data platform for model experiment tracking, analysis, and testing, leveraging Snowflake's security and compliance capabilities to manage large-scale experimental data. Meanwhile, Snowflake will integrate OpenAI's model capabilities to fill gaps in its generative AI offerings and strengthen its 'data + AI' integration advantage. As Sridhar Ramaswamy, CEO of Snowflake, put it: 'By bringing OpenAI models into enterprise data, we enable organizations to build AI on top of their most valuable assets while retaining security controls and leveraging world-class intelligence for transformation.'

Notably, this $200 million collaboration is not a 'one-time investment' but a multi-year commercial commitment that prioritizes reliability, performance optimization, and real-world customer outcomes. The partnership will therefore not stop at surface-level technical integration but will keep iterating to meet enterprise needs, forming a long-term ecosystem bond. This reflects a core trend in current enterprise AI collaborations: a shift from 'short-term transactions' to 'long-term co-construction.'

Snowflake's 'Model-Neutral' Ambition

The most intriguing aspect of this collaboration is Snowflake's back-to-back $200 million deals with Anthropic and OpenAI within three months. This 'no single bet' approach is not blind expansion but a carefully crafted 'model-neutral' strategy, reflecting a fundamental shift in enterprise AI procurement logic.

For Snowflake, 'model neutrality' is a key move to solidify its position as the leading data cloud provider. As a top-tier global data cloud company, Snowflake's core strength lies in its vast enterprise customer data and mature security compliance systems, though it lacks expertise in generative AI model development. Partnering with both Anthropic and OpenAI allows Snowflake to avoid vendor lock-in while offering customers diversified choices—after all, different models excel in different areas. For example, OpenAI leads in general-purpose scenarios and conversational generation, while Anthropic's Claude series outperforms in long-text processing and compliance. Enterprises can flexibly choose based on their business needs.

In an interview, Barış Gültekin explicitly outlined Snowflake's strategy: 'We intentionally remain model-neutral. Enterprises need choice and cannot be constrained by a single supplier. OpenAI is an important partner, but we also collaborate with Anthropic, Google, Meta, and others to build a diversified model ecosystem.' The core of this strategy is to position Snowflake as the 'hub platform' for enterprise AI—regardless of which model customers choose, they can complete data integration, model deployment, and scenario implementation on Snowflake, thereby locking in core enterprise data assets and consolidating its dominance in the data cloud space.

Snowflake's dual-partner strategy essentially aligns with the pragmatic trend in enterprise AI procurement. Today, more businesses are abandoning the 'single-model bet' approach in favor of building 'model matrices': selecting a suitable model for each business scenario to achieve the best cost-effectiveness. For instance, a company might use OpenAI for general office tasks, Anthropic for high-compliance fields such as finance and healthcare, and Google Gemini for data analysis. This 'no all-in-one model, only the right tool' mindset is driving the enterprise AI market from 'model worship' to 'pragmatism.'
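The 'model matrix' idea can be pictured as a simple lookup from business scenario to model vendor. The sketch below is purely illustrative: the scenario keys, the `MODEL_MATRIX` table, the `pick_vendor` function, and the fallback choice are all hypothetical names and assumptions, and the mapping only mirrors the examples given above rather than any real Snowflake or vendor API.

```python
# Hypothetical scenario-to-vendor table, mirroring the article's examples only.
MODEL_MATRIX = {
    "general_office": "OpenAI",
    "finance": "Anthropic",
    "healthcare": "Anthropic",
    "data_analysis": "Google Gemini",
}

# Assumption: scenarios not listed in the matrix fall back to a general-purpose vendor.
DEFAULT_VENDOR = "OpenAI"


def pick_vendor(scenario: str) -> str:
    """Return the vendor assigned to a scenario, or the default if none is assigned."""
    key = scenario.strip().lower()
    return MODEL_MATRIX.get(key, DEFAULT_VENDOR)


if __name__ == "__main__":
    for scenario in ("finance", "data_analysis", "marketing"):
        print(scenario, "->", pick_vendor(scenario))
```

In practice such a table would likely also weigh cost, latency, and compliance requirements per scenario; the point here is only that model choice becomes a per-scenario configuration decision rather than a single company-wide bet.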

Snowflake is not alone. Workflow automation platform ServiceNow announced a similar multi-year collaboration with OpenAI and Anthropic in January 2026, following the same logic. Amit Zavery, a ServiceNow executive, stated bluntly that partnering with both AI labs was a deliberate choice: 'We want to give customers and employees the ability to choose models based on tasks rather than forcing a single solution.' This 'multi-model parallel' approach is becoming the mainstream way for enterprises to build out their AI ecosystems, shifting market competition from 'model duels' to 'ecosystem battles.'

OpenAI's Serial Alliances: Beyond Models, Securing Enterprise Adoption Channels

The collaboration with Snowflake marks another key move in OpenAI's recent enterprise expansion. Just two weeks prior, OpenAI struck a similar deal with ServiceNow, positioning its models as the preferred intelligence capability for ServiceNow's enterprise customers, with a shared focus on AI agent development. Within half a month, back-to-back large-scale collaborations with two enterprise software giants reflect OpenAI's strategic pivot: from 'model R&D' to 'scenario adoption,' rapidly securing core entry points into the enterprise AI market by partnering with infrastructure leaders.

Reviewing OpenAI's enterprise collaboration path, its core logic is clear: avoid direct competition with rival model vendors and instead ally with infrastructure leaders in the AI tech stack, leveraging their scenarios and customer bases for scalable model deployment. For OpenAI, models are its core strength, but the 'last mile' of enterprise AI adoption (data integration, scenario adaptation, and security compliance) is not its forte. Snowflake, ServiceNow, and similar companies fill these gaps.

The collaboration with Snowflake brings OpenAI three core benefits. First, it rapidly reaches 12,600 enterprise customers, significantly expanding its enterprise market coverage. Snowflake's clients span global mid-to-large enterprises, particularly in data-intensive sectors like finance, retail, and healthcare—all key target groups for OpenAI. Through this partnership, OpenAI can achieve scalable penetration without individually acquiring each customer. Second, it resolves compliance challenges for enterprise adoption. Snowflake excels in data security and compliance, meeting regulatory requirements in finance, healthcare, and other heavily regulated industries. Compliance is a major pain point for enterprise AI adoption—by partnering with Snowflake, OpenAI gains a 'compliance endorsement,' drastically reducing customer concerns about adopting its models. Third, it obtains massive enterprise data feedback to drive model optimization. Using Snowflake as an experimental data platform, OpenAI can access vast real-world enterprise data, helping refine its models for industry-specific needs and creating a virtuous cycle of 'adoption-feedback-iteration.'

Notably, OpenAI's collaboration strategy does not aim to 'cover all bases' but precisely targets 'scenario gateway' enterprises. Snowflake controls enterprise data access, while ServiceNow controls enterprise IT service and workflow access—both directly connect to core business scenarios, enabling OpenAI's models to embed deeply into enterprise workflows rather than remaining at the 'tool level.' This 'model + gateway' approach offers stronger stickiness than simple technology licensing, positioning OpenAI more favorably in the competitive enterprise AI market.

However, OpenAI faces challenges in its enterprise market expansion. On one hand, competition from rival model vendors is intensifying: Anthropic, Google, Meta, and others are accelerating their enterprise collaborations, and Anthropic's rise in particular has taken some market share. On the other hand, OpenAI maintains a low profile, declining to share transaction details beyond press releases. While this secrecy sustains buzz, it may also give enterprises pause when weighing a partnership, since long-term collaborations require sufficient transparency and certainty.

No Monopoly in the Enterprise AI Market

The Snowflake-OpenAI collaboration and recent flurry of high-value enterprise AI deals point to a clear conclusion: no single company will dominate the enterprise AI market. A multi-player, ecosystem-driven landscape will prevail in the long term. Behind this restructuring lie three driving forces: market demand, technology evolution, and capital allocation.

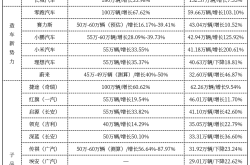

First, market demand has made 'multi-model parallelism' the mainstream. Enterprise AI has moved from the 'trial phase' to 'scalable adoption.' Global enterprise generative AI spending surged to $37 billion in 2025, up 3.2x from 2024, with $19 billion on AI applications and $18 billion on infrastructure. As scenarios diversify, businesses increasingly recognize that no single model fits all use cases—OpenAI excels in general dialogue and content generation, Anthropic in long-text processing and compliance, Google Gemini in multimodal and data analysis, and Meta's Llama series in open-source flexibility. Thus, building 'model matrices' and dynamically selecting models by scenario has become optimal.

Latest survey data from a16z confirms this trend: 81% of enterprises now use three or more models simultaneously, up from 68% a year ago. More businesses are selecting AI models like craftsmen choosing tools—using OpenAI for general office tasks, Anthropic for financial compliance, and Google models for data analysis. This pragmatic approach directly drives enterprises to partner with multiple AI vendors, preventing market monopolies.

Second, diverging capital perspectives reflect market diversity. Currently, different investors present conflicting views on enterprise AI market leaders. A report by Menlo Ventures (a major investor in Anthropic) claims Anthropic led with 40% market share by late 2025, OpenAI fell to 27%, and Google ranked third at 21%. Conversely, Andreessen Horowitz (a16z, an OpenAI investor) reports OpenAI remains the market leader, with 78% of surveyed enterprises using its models in production.

These contradictory findings, while seemingly chaotic, reveal the market's pluralistic nature. On one hand, investor surveys carry 'vested interest' biases, naturally favoring their portfolio companies. On the other hand, model preferences vary by industry and enterprise size—large financial firms prioritize Anthropic's compliance edge, while small-to-medium tech companies prefer OpenAI's ecosystem maturity, and Google dominates among Google Cloud users. This differentiated adoption prevents any single vendor from monopolizing all segments, fostering a 'co-existence and competition' landscape.

More importantly, ecosystem competition has replaced model competition as the market's central tension. As a16z pointed out in its report, the endgame for enterprise AI is not a battle of models but a “battle of workflows”. Competition among foundation models is rapidly becoming “tool-based” and “commoditized”. Enterprises are no longer pursuing the “most cutting-edge model” but rather “solutions that can be integrated into existing workflows and solve practical problems”. Pure model development is therefore no longer enough for a company to establish a foothold in the market. It must collaborate with players across infrastructure, scenario applications, data services, and other sectors to build a complete ecosystem.

Currently, the enterprise AI market has formed four major ecosystem camps. The first camp is “Microsoft + OpenAI”. Leveraging office and development tools like Microsoft 365 and GitHub, it natively embeds OpenAI models into enterprise workflows, controlling access to hundreds of millions of knowledge workers. 65% of enterprises say they are more inclined to choose AI solutions from existing suppliers, making Microsoft's ecosystem barriers difficult to shake. The second camp is “data vendors + multiple models”. Represented by Snowflake, it builds an integrated “data + AI” platform by partnering with multiple model vendors like OpenAI and Anthropic, focusing on data-intensive scenarios. The third camp is “vertical scenarios + dedicated models”. Represented by ServiceNow and Salesforce, it combines its own vertical scenario advantages with multiple model vendors to develop dedicated solutions, focusing on niche areas such as workflow automation and customer management. The fourth camp is “open-source models + cloud vendors”. Google and Meta attract developers through open-source models and leverage their own cloud service advantages to capture the small and medium-sized enterprise market.

These four camps are not entirely adversarial; instead, there is significant room for cooperation. For example, Snowflake's platform can integrate with Microsoft Azure's cloud services, and ServiceNow's solutions can leverage OpenAI's models, forming a landscape of “complementary ecosystems”. This mix of competition and cooperation further strengthens the market dynamic of multiple strong players coexisting.

Snowflake's $200 million collaboration with OpenAI marks the beginning of a warring states era in enterprise AI. This partnership does not follow a winner-takes-all script but instead achieves a “1+1>2” win-win situation by leveraging each other's strengths—Snowflake enhances its AI capabilities and solidifies its position as a leader in the data cloud; OpenAI gains access to deployment channels and expands its enterprise market; ultimately, the primary beneficiaries are the broad base of enterprise customers, who gain more flexible, secure, and scenario-specific AI solutions.

Looking back at the development of enterprise AI, from the early model-driven competition to today's ecosystem coexistence, the market is gradually returning to rationality: the ultimate value of AI lies not in the technological monopoly of a single enterprise but in the deep integration of technology and scenarios to help enterprises achieve efficiency improvements and digital transformation. For model vendors, mere technological leadership is no longer sufficient; they must learn to embrace ecosystems and adapt to scenarios. For enterprise software vendors, only by remaining open and neutral and building a diversified ecosystem can they retain customers and establish a market presence. For enterprise customers, pragmatic choices and the construction of a model matrix tailored to their needs are essential for AI to deliver real value.