Who’s “Taking Over” Your Mac?

![]() 02/06 2026

02/06 2026

![]() 517

517

If you notice your computer or phone behaving in a “semi-automated” manner, exercise caution.

Recently, thousands of tech enthusiasts have granted full administrative control of their computers to an AI project named OpenClaw—a tool that has been online for less than 168 hours and still lacks a definitive name. In just one week, OpenClaw garnered 60,000 GitHub stars and even received endorsement from Silicon Valley luminary Andrej Karpathy.

However, this is no ordinary productivity tool; it’s a cyber adventure in “permission hijacking.” For those unfamiliar with GitHub, here’s what you need to know: these so-called “digital employees” are circumventing your firewalls, peeling back your privacy layer by layer, and exposing it to hackers globally. This isn’t merely the collapse of AI hype; it’s the most perilous “exposed” moment of 2026. From viral fame to two rebrandings, OpenClaw has aggressively stripped away the AI agent industry’s fig leaf of “efficiency.”

01 Eroding Trust Boundaries: Your “Butler” Is Letting the Wolves In

OpenClaw positions itself as an “orchestration layer” that manages ticket bookings, financial tasks, and email responses via WhatsApp, iMessage, or Slack. To function, it must reside on your Mac mini or private server, demanding deep system-level access.

What does this entail? You’re not just installing software; you’re hiring an unlicensed orchestrator who leaves the backdoor ajar 24/7.

Founder Peter Steinberger’s elite background lends an air of credibility: he sold PSPDFKit for approximately $119 million and developed OpenClaw out of “boredom” after achieving financial freedom. Yet, this “wealthy geek’s experiment” clashes with corporate security demands. According to Token Security, 22% of enterprise employees granted OpenClaw access without IT audits within its first week of fame. Researchers discovered hundreds of OpenClaw control panels exposed online, with the default port 18789 lacking even basic authentication.

Imagine hiring a butler who leaves your door unlocked and posts a sign: “I manage this house—I know where all the bank cards and safe keys are. Come on in!”

02 The Fatal MCP Protocol: “Possession” via a Single Email

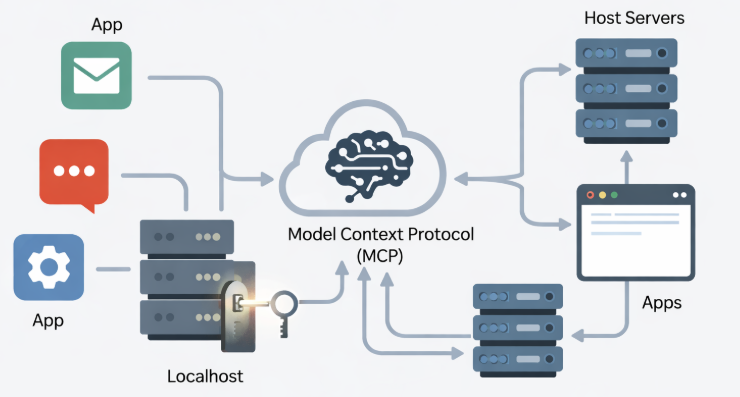

Technically, OpenClaw relies on the trendy MCP (Model Context Protocol) for cross-application execution. However, its implementation suffers from catastrophic “trust collapse”: it blindly trusts all local connections, ignoring modern networks’ reliance on reverse proxies.

What does this mean? You receive a spam email, don’t click any links or attachments—but as your AI assistant scans it in the background, hidden commands instantly “hijack” its brain. Security expert Jamieson O’Reilly demonstrated that attackers can extract server SSH keys through prompt injection, exploiting this “internal trust model” collapse. This “cognitive context theft” is more alarming than traditional viruses: it exploits your trust in the tool, leading to legal and timely harm.

Even more absurd is its fragile supply chain. O’Reilly staged a textbook attack: he uploaded an unvetted plugin to the skill library, and 16 developers across 7 countries fell victim by trusting fake download metrics. In this ecosystem, code lacks both auditing and signatures—users’ blind trust rests solely on manipulated download numbers.

03 The Computational Tax Truth: This “Assistant” Costs More Than a Human

Many flocked to OpenClaw for its “open-source, free” promise, unaware it’s a “money-burning machine” in AI disguise.

Unlike $20/month subscriptions, OpenClaw taps into big tech’s developer APIs, charging per operation. Since agents need constant access to your conversation history, local files, and preferences, “contextual memory” causes token consumption to spiral out of control.

Let’s crunch the numbers: debugging the environment alone costs $10 in API fees. Summarizing news and managing to-dos daily easily exceeds $30/month, excluding hardware and electricity costs. Using top-tier models for “maximum efficiency”? A single follow-up question like “Why exclude this news?” burns 64 cents per query.

These costs burst the “AI efficiency” bubble. You spend thousands on a Mac mini and hundreds monthly on “computational tax”—only to get an email assistant that might leak your bank cards. Is this productivity or a “tech tax” for cloud giants?

04 Fragility Under Tech Giants: The Lobster’s Molting Power Play

The suffocating renaming controversies during OpenClaw’s week of fame were the climax of a commercial farce.

Originally named Clawdbot, it rebranded overnight after Anthropic’s “polite” legal threat over trademark similarities to Claude. The rename to Moltbot triggered instant domain and GitHub handle hijacking by malicious bots. Then, fake $CLAWD tokens surged to a $16M market cap before crashing.

The irony? An AI project aiming for full automation proved powerless against real-world legal and branding battles. This exposes the AI ecosystem’s dependence on big tech: giants need only lawyers, not tech blocks, to socially kill a viral project.

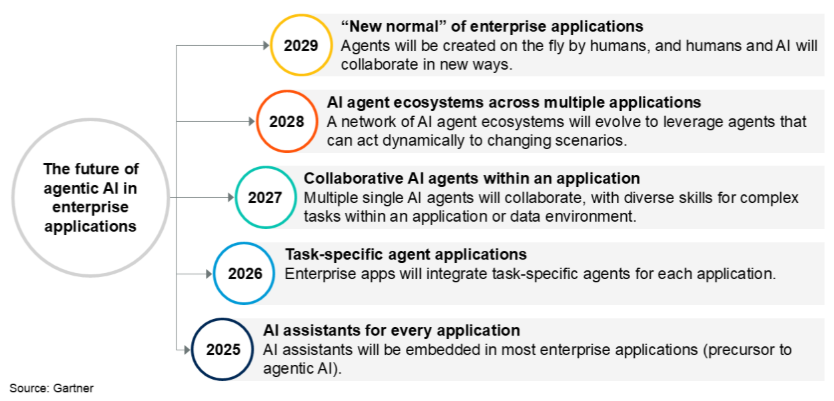

Gartner predicts that 40% of enterprise apps will integrate AI agents by 2026. This “efficiency anxiety”-driven wild growth forces developers to grant external access before securing foundations. Peter Steinberger’s troubles reflect industry-wide growing pains between rapid expansion and traditional IP/security models.

05 Epilogue

OpenClaw’s meteoric rise and fall serve as a 2026 AI wake-up call.

It reveals a harsh truth: true “digital assistants” aren’t just large models stuffed into root-access scripts. This development logic—lacking identity governance, encryption, and internal traffic verification—exposes thousands of sensitive assets to hackers.

As AI agents evolve from chatboxes to quasi-“operating systems,” security must upgrade from “blocking spam” to “preventing identity hijacking.”

Don’t let your digital butler become a traitor. In the Agent era, the biggest vulnerability isn’t code—it’s trust itself.

Disclaimer

This content is based on deep analysis of corporate announcements, technical patents, and authoritative media reports, aiming to explore technological paths and industrial trends. Product parameters and performance descriptions cited herein derive from official disclosures and represent theoretical analyses based on existing data, not absolute real-world feedback. Given the OTA iteration nature of tech products (especially EVs and robots), discrepancies between stated data and actual performance may exist—refer to official corporate releases for final details. Views expressed are for reference only and do not constitute investment or purchase advice.

— THE END —

Share your thoughts in the comments! Like · Share · Repost to support the author