Industry | Google Reshapes AI Infrastructure with Ironwood TPU, Potentially Reshuffling the Future AI Chip Landscape

12/04 2025

Foreword:

Meta is negotiating to deploy Google TPUs in data centers by 2027, with a potential contract worth billions of dollars. Google's seventh-generation TPU chip, Ironwood, has officially launched, with single-chip computing power surpassing Nvidia's flagship products for the first time.

With Ironwood's rated computing power confirmed at 4614 TFLOPS and Anthropic placing an order worth tens of billions of dollars for 1 million TPUs, the era of Nvidia's dominance in the AI chip market may soon come to an end.

Survival Against the Odds: The Birth and Turnaround of TPU

In 2013, an internal forecast at Google alarmed management: if voice searches by global Android users were fully processed using neural networks, the required computing power would be twice that of all Google's data centers combined, and the cost of simply expanding data center scale was prohibitively high.

At the time, Google faced three options: continue relying on CPUs, purchase Nvidia GPUs, or develop its own dedicated chips.

CPUs were ruled out immediately because their computing efficiency was too low. While Nvidia GPUs were mature and usable, they were not optimized for deep learning, leading to efficiency losses, and relying on a single supplier posed strategic risks.

After all external solutions were rejected, developing its own ASIC chip became Google's only path forward.

This desperate decision ultimately gave birth to the industry-changing TPU.

In 2015, the first-generation TPU was quietly deployed. Built on a 28nm process, it delivered a 15-30x performance boost and a 30-80x energy efficiency improvement over contemporary CPUs and GPUs.

Its systolic array architecture, designed specifically for inference scenarios, enabled efficient data flow within processing units, minimizing memory access losses.
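To make the data flow concrete, here is a minimal sketch of a systolic array in plain Python. It assumes an output-stationary layout, chosen only because it is the simplest to simulate; it is not a model of the TPU's actual dataflow, and the function name `systolic_matmul` is hypothetical. Each processing element (PE) holds one accumulator, and operands hop one PE per cycle, so each value is read from memory once and then reused as it travels across the grid.

```python
def systolic_matmul(a, b):
    """Simulate an output-stationary systolic array computing a @ b.

    PE (i, j) keeps the running sum for output element (i, j).
    Row i of `a` enters from the left edge delayed by i cycles, and
    column j of `b` enters from the top edge delayed by j cycles, so
    at cycle t PE (i, j) sees a[i][k] and b[k][j] with k = t - i - j.
    """
    m, k_dim, n = len(a), len(b), len(b[0])
    assert all(len(row) == k_dim for row in a), "inner dimensions must match"

    acc = [[0] * n for _ in range(m)]
    cycles = m + n + k_dim - 2  # cycle on which the last PE finishes, plus one
    for t in range(cycles):
        for i in range(m):
            for j in range(n):
                k = t - i - j
                if 0 <= k < k_dim:  # operands have reached this PE
                    acc[i][j] += a[i][k] * b[k][j]
    return acc, cycles


result, cycles = systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(result, cycles)
```

The point of the sketch is the indexing: no PE ever fetches from a shared memory inside the loop; it only consumes what its neighbors pass along, which is where the reduction in memory access comes from.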

However, TPU's early days were not smooth. After Google announced TPU performance metrics in 2016, Nvidia publicly challenged them, claiming that in GoogLeNet inference tests, the Pascal P40 processed data twice as fast as the TPU at a lower cost per unit.

The industry generally questioned whether, with GPUs iterating annually, the development costs and flexibility drawbacks of custom chips would make them unsustainable in the long run.

Yet Google's vision was far more forward-looking than the market imagined. The core advantage of TPU never lay in single-card peak performance but in the total cost of ownership (TCO) and strategic autonomy under Google's specific workloads.

The 2016 "match of the century" between AlphaGo and Lee Sedol became TPU's coming-out party.

Over the next decade, TPU completed a triple jump: from inference to training, from single scenarios to general workloads, and from internal use to commercial output.

① 2017 TPU v2: Defined the bfloat16 numerical format, supported model training for the first time, and achieved a peak performance of 11.5 PetaFLOPS with a 256-chip Pod cluster.

② 2018 TPU v3: Fully transitioned to liquid cooling technology, solving the heat dissipation challenge of 450W single-chip power consumption, laying the physical foundation for hyperscale clusters, and driving liquid cooling to become mainstream in AI data centers.

③ 2022 TPU v4: Introduced OCS optical circuit switching technology, enabling dynamic reprogrammable interconnects, and supported training trillion-parameter models with a 4096-chip cluster, where the PaLM 540B model was born.

④ 2023 TPU v5p: Bridged training and inference scenarios, expanded cluster scale to 8960 chips, entered Google's core profitable businesses like advertising and search for the first time, and began to be purchased at scale by Meta and Anthropic.

⑤ 2024 TPU v6: Focused on inference scenarios, improved energy efficiency by 67%, and became the primary inference engine for Google Search, YouTube recommendations, and the Gemini model, heralding TPU's inference-first era.

⑥ 2025 TPU v7 Ironwood: Single-chip FP8 computing power reached 4614 TFLOPS, slightly surpassing Nvidia's B200, with a 9216-chip cluster achieving 42.5 ExaFLOPS, officially launching a direct challenge to Nvidia.
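The bfloat16 format mentioned in the TPU v2 entry above keeps float32's sign bit and 8 exponent bits but only 7 mantissa bits, so a bfloat16 value is the upper 16 bits of the corresponding float32. The sketch below illustrates this with the standard library only. It uses simple truncation as a simplifying assumption (hardware typically rounds instead), and the helper names are hypothetical.

```python
import struct


def to_bfloat16_bits(x: float) -> int:
    """Return the 16-bit bfloat16 pattern for x by truncating float32."""
    (bits32,) = struct.unpack(">I", struct.pack(">f", x))
    return bits32 >> 16  # keep sign, 8 exponent bits, top 7 mantissa bits


def from_bfloat16_bits(bits16: int) -> float:
    """Expand a bfloat16 bit pattern back to a Python float."""
    (value,) = struct.unpack(">f", struct.pack(">I", bits16 << 16))
    return value


for x in (1.0, 3.14159, 1e38):
    bits = to_bfloat16_bits(x)
    print(f"{x!r:>10} -> 0x{bits:04X} -> {from_bfloat16_bits(bits)!r}")
```

Because the exponent field is unchanged, a value as large as 1e38 survives the conversion, whereas it would overflow IEEE half precision (FP16), whose largest finite value is 65504. The trade-off is precision: 3.14159 comes back as 3.140625.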

Beyond Performance: Ironwood's Systemic Advantages

The seventh-generation TPU Ironwood's seismic impact on the industry stems from its transformation from a closely held in-house technology at Google into a complete, open-market solution with systemic advantages.

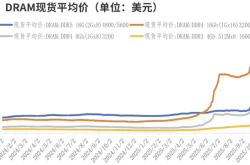

Ironwood's single-chip raw power places it among the global elite, with FP8 precision peak performance of 4614 TFLOPS, slightly exceeding Nvidia's B200 at 4500 TFLOPS.

Equipped with 192GB of HBM3e memory and a bandwidth of 7.2TB/s, it comes close to the B200's 8TB/s.

Single-chip energy efficiency reaches 29.3 TFLOPS/W, double that of the sixth-generation TPU and far surpassing Nvidia's B200.

Memory capacity is six times that of the previous generation, with bandwidth increased by 4.5x, easily handling inference tasks for models with tens to hundreds of billions of parameters.

Support for the FP8 computing format halves the data moved per value relative to 16-bit formats while keeping model accuracy loss below 2%, significantly lowering latency and power consumption.

Google's true ace is its cluster scalability. Ironwood supports clusters of up to 9216 liquid-cooled chips, totaling 42.5 ExaFLOPS.

This is equivalent to 24 times the performance of the world's strongest supercomputer, El Capitan, and 118 times that of the closest competitor under specific FP8 workloads.

The foundation of this scale is Google's self-developed 2D/3D torus topology and OCS optical circuit switching technology.

Unlike Nvidia's 72-GPU clusters built on NVLink + high-order switches, Google abandoned traditional centralized switch designs, directly connecting all chips via 3D torus topology and enabling dynamic optical path reconstruction with OCS technology.
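As an illustration of what a direct 3D torus connection means, the sketch below lists a chip's six neighbors and computes hop counts when every axis wraps around. The 4x4x4 shape and both function names are assumptions chosen for illustration, not a description of Google's actual wiring.

```python
def torus_neighbors(coord, shape):
    """Return the six directly linked neighbors of a chip in a 3D torus."""
    neighbors = []
    for axis in range(3):
        for step in (-1, 1):
            nxt = list(coord)
            # The modulo makes the last chip on an axis link back to the first.
            nxt[axis] = (coord[axis] + step) % shape[axis]
            neighbors.append(tuple(nxt))
    return neighbors


def torus_hops(src, dst, shape):
    """Minimum hop count between two chips when each axis wraps around."""
    return sum(
        min(abs(s - d), size - abs(s - d))
        for s, d, size in zip(src, dst, shape)
    )


shape = (4, 4, 4)  # hypothetical 64-chip block
print(torus_neighbors((0, 0, 0), shape))
print(torus_hops((0, 0, 0), (3, 3, 3), shape))
```

In this example the opposite corner of the block is 3 hops away instead of 9, because the wraparound links shorten every axis. Each chip needs only six links regardless of cluster size, which is why no central switch is required.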

OCS uses MEMS micro-mirrors to redirect optical signals, reconfiguring links within milliseconds while adding virtually no latency to the signal path, so faulty chips can be bypassed quickly. The direct benefit of this systemic design is a significant reduction in inference costs.

Ironwood clusters provide 1.77PB of high-bandwidth HBM, with nearly equidistant access for all chips, greatly improving KV cache hit rates and reducing redundant computations.
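The cluster-level figures follow directly from the per-chip numbers quoted earlier. A quick check, assuming decimal units (1 ExaFLOPS = 10^6 TFLOPS, 1 PB = 10^6 GB):

```python
CHIPS_PER_CLUSTER = 9216
FP8_TFLOPS_PER_CHIP = 4614
HBM_GB_PER_CHIP = 192

# Aggregate peak compute and memory across the full cluster.
cluster_exaflops = CHIPS_PER_CLUSTER * FP8_TFLOPS_PER_CHIP / 1e6
cluster_hbm_pb = CHIPS_PER_CLUSTER * HBM_GB_PER_CHIP / 1e6

print(f"{cluster_exaflops:.1f} ExaFLOPS, {cluster_hbm_pb:.2f} PB HBM")
```

These are peak aggregates; sustained throughput on a real workload depends on how well the model keeps all chips busy.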

Google's internal tests show that Ironwood's inference costs are 30%-40% lower than GPU flagship systems under equivalent workloads, with even greater advantages in extreme scenarios.

For AI companies processing billions of model calls daily, this cost advantage can reshape the competitive landscape.

To lower migration barriers for customers, Google developed a "Google-style CUDA," TPU Command Center, and supports working with TPUs through PyTorch ecosystem tools without requiring mastery of Google's own JAX framework.

Ironwood also comes with a complete software stack. More importantly, Google launched the Agent2Agent ecosystem protocol and contributed extensively to open-source inference frameworks like vLLM and SGLang, bridging critical gaps in TPU's open-source ecosystem integration.

The maturation of the software ecosystem has transformed Ironwood from a Google-exclusive custom tool into a universal solution compatible with mainstream AI frameworks and models, paving the way for its commercialization.

The market is already voting with its feet: Anthropic chose 1 million TPUs as its computing foundation, Meta is considering integrating TPUs into its core data centers, and more AI companies are adopting GPU+TPU heterogeneous deployment models.

Facing Ironwood's challenge, Nvidia emphasized in an urgent statement that it "can run all AI models," inadvertently exposing its inefficiency in dedicated scenarios.

Google follows a dedicated optimization + full-stack integration route. TPU is designed specifically for deep learning, especially Transformer workloads, with its systolic array architecture far outperforming general-purpose GPUs in matrix operations.

More critically, Google controls the entire stack, from chip design, compilers, and frameworks (TensorFlow/JAX) to distributed training systems and data center infrastructure, enabling end-to-end optimization.

This full-stack capability allows Google to implement systemic innovations beyond Nvidia's reach. Its goal is to build a complete AI supercomputer-as-a-service ecosystem, letting customers obtain low-cost, highly available computing power without worrying about underlying infrastructure.

Commercialization Breakthrough: Google Aims to Disrupt Nvidia's Dominance

For the past decade, TPU primarily served Google internally, but starting in 2024, Google launched a highly targeted commercialization offensive, mirroring Nvidia's support for CoreWeave but far more aggressive.

Google introduced dual models of cloud leasing + on-premises deployment. Customers can either rent TPU computing power on-demand via Google Cloud without hardware procurement and maintenance costs or deploy TPUs directly in their own data centers through the TPU@Premises program for low latency and data security.

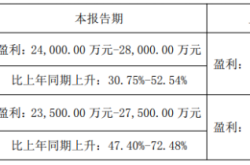

Google first secured AI opinion leader Anthropic with a massive order for 1 million TPUs, including 400,000 Ironwood chips sold directly by Broadcom and 600,000 leased through Google Cloud, with a total transaction value exceeding $50 billion.

This partnership not only provided the strongest endorsement for TPU but also made Anthropic's Claude series models a "benchmark application" for the TPU ecosystem.

Next, Google targeted Meta, one of Nvidia's core customers.

According to insiders, if the deal goes through, it could bring Google annual revenue equivalent to 10% of Nvidia's data center business.

Once Meta successfully deploys TPUs, it will trigger a chain reaction, prompting more enterprises to reduce their reliance on Nvidia.

To break developers' dependence on CUDA, Google intensified its support for PyTorch, launching the PyTorch/XLA extension and Torchax tools, enabling users to migrate PyTorch models to TPUs without code rewrites.

Simultaneously, Google contributed TPU-optimized kernels to open-source inference frameworks like vLLM and SGLang, bridging critical gaps in the open-source ecosystem.

While JAX's popularity still lags behind CUDA's, Google's strategy focuses on lowering migration costs rather than replacing CUDA.

For cost-conscious enterprises, as long as migration costs are low enough, TPU's energy efficiency advantages will drive adoption. This is Google's opportunity.

From a market trend perspective, the rise of ASICs is inevitable. Nomura Securities predicts that ASIC total shipments will surpass GPUs for the first time in 2026.

As the most mature ASIC product, TPU shipments are expected to reach 2.5 million units in 2025 and exceed 3 million in 2026.

For the entire AI industry, this competition is a major boon. Google TPU's commercialization will effectively break Nvidia's pricing monopoly and reduce overall AI computing costs.

According to estimates, Google's underlying costs for providing equivalent inference services are just 20% of OpenAI's.

Lower costs will enable more small and medium-sized enterprises and developers to access advanced AI computing power, driving AI application proliferation across industries.

Conclusion:

The essence of computing power competition lies in ecosystem and cost battles.

Ironwood's launch marks the transition of AI infrastructure from GPU cluster dominance to a 2.0 era of Cloud + Dedicated Chips + Hybrid Deployment.

In this new era, whoever provides more efficient computing power, a more complete ecosystem, and lower total costs will have the decisive say.

Regardless of the final outcome, diversified competition in the AI chip market will ultimately benefit the entire AI industry.

Sources referenced in part: Tencent Technology: "A Comprehensive Guide to Google TPU"; Semiconductor Industry Observer: "This Underdog Chip Finally Made a Comeback?"; Zhineng Zhixin: "Google's Inference-Era Architecture: Ironwood TPU"; First Voice: "Google Ironwood TPU Launches a Surprise Attack: Nvidia GPU Faces Challenger?"; Omni Lab: "Google's Decade-Long Chipmaking Peak: The Seventh-Generation TPU Opens Up, Differing from Nvidia's Traditional GPUs"; Top Tech: "Google TPU Makes Jensen Huang 'Nervous'"