Sino-US AI Computing Power Mid-Game Duel: The Clash of Openness Versus Closure

12/05 2025

Recently, Google's TPU, riding the momentum of Gemini 3, has seen its growth prospects expand sharply, with Meta considering a multi-billion-dollar investment in TPUs. Institutions have revised their TPU production forecasts upward by 67%, to 5 million units. Leveraging a full-chain closed loop spanning 'chips, optical switching networks, large models, and cloud services,' Google's intelligent computing system has reclaimed a leading position in the AI race, marking a further stride in the American strategy of closed monopoly.

Meanwhile, open-source models led by DeepSeek are close behind. Earlier this month, DeepSeek V3.2 and its enhanced long-thinking variant were unveiled; the former matched ChatGPT in tests, and the latter directly challenges the top closed-source model, Gemini. This signals the gradual maturation of China's open-source, open approach, with the domestic intelligent computing system showing strong potential for ecosystem synergy at the application layer.

Thus, the Sino-US AI industry duel has reached its mid-game, and the contrast between 'open collaboration' and 'closed monopoly' is becoming increasingly pronounced. In the layout of the intelligent computing ecosystem especially, the two camps may be heading toward a decisive contest: a clash of systemic capabilities.

01 From Gemini 3 to TPU v7: The Pinnacle of Software-Hardware Integrated Closed Loop

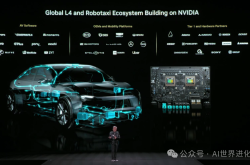

Undoubtedly, the sudden surge in popularity of Google's TPU owes much to Gemini 3, which validated its capabilities at the model level. As an ASIC tailored to Google's TensorFlow framework, the TPU uses a software-hardware integrated design to lay the groundwork for Google's full-stack closed loop. As upper-layer applications broke through, it has also begun to win external users, and has even been hailed as the strongest alternative to NVIDIA's GPUs.

'Software-hardware integration' means that the hardware design is customized entirely around the needs of the upper-layer software and algorithms. For instance, the training and inference of Gemini 3 are closely matched to TPU clusters, and this dedicated, customized approach also delivers extreme power efficiency: the power consumption of TPU v5e is merely 20%-30% of that of the NVIDIA H100, and the performance per watt of TPU v7 is double that of its predecessor.

Currently, Google has forged a closed and efficient loop through vertical integration of 'chips + models + frameworks + cloud services.' On one hand, this significantly improves the efficiency of its own AI R&D and application development; on the other hand, it carves out its own domain within the NVIDIA-dominated mainstream, securing another commanding position in the intelligent computing race. Meta's intention to acquire TPUs has further fueled the popularity of this system.

Some industry insiders point out that, from Apple to Google, the American vertically integrated closed approach has nearly reached its zenith, reflecting tech giants' pervasive desire to consolidate and expand their interests across the industrial chain. From the perspective of ecosystem development, however, the closed model lacks a long-term vision: it tends to drain innovation vitality from the downstream sectors of the industry and to produce a highly centralized landscape dominated by a single entity.

Furthermore, judging by the TPU's application scenarios, the software-hardware integrated closed loop is clearly a game reserved for giants. An analyst noted that Google's clustered design and 'software black box' require users to rebuild an entire heterogeneous infrastructure stack. Without the demand for training trillion-parameter models, the TPU's systolic arrays cannot be fully utilized, and the electricity savings may not offset the migration costs.

Meanwhile, because the TPU technology route is extremely closed and incompatible with mainstream development environments, users also need a professional engineering team to master its XLA compiler and rewrite the underlying code. In other words, only enterprises on the scale of Google and Meta are in a position to switch to the TPU route, and only when the computing power scale reaches a certain level can the energy efficiency advantages of customized products be fully realized.
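The trade-off between electricity savings and migration cost can be made concrete with a rough break-even calculation. The sketch below is illustrative only: the function names, the fixed migration cost, the per-chip power draw, the electricity price, and the utilization figure are all hypothetical assumptions, and `power_ratio=0.25` is simply a value inside the 20%-30% range quoted above. It also assumes the migration cost is a one-off engineering expense that does not grow with cluster size.

```python
def annual_energy_savings(n_chips, gpu_kw_per_chip, power_ratio,
                          price_per_kwh, utilization=0.6):
    """Yearly electricity cost avoided by replacing n_chips GPUs with ASICs.

    power_ratio is the ASIC's power draw as a fraction of the GPU's
    (hypothetical input; 0.25 means the ASIC uses 25% of the GPU's power).
    """
    hours_per_year = 24 * 365 * utilization
    saved_kw = n_chips * gpu_kw_per_chip * (1.0 - power_ratio)
    return saved_kw * hours_per_year * price_per_kwh


def break_even_years(migration_cost, n_chips, **power_params):
    """Years until cumulative electricity savings cover a one-off migration cost."""
    savings = annual_energy_savings(n_chips, **power_params)
    return float("inf") if savings <= 0 else migration_cost / savings


if __name__ == "__main__":
    # All figures below are made-up placeholders, not measured data.
    params = dict(gpu_kw_per_chip=0.7, power_ratio=0.25, price_per_kwh=0.10)
    for n in (1_000, 10_000, 100_000):
        years = break_even_years(migration_cost=20_000_000, n_chips=n, **params)
        print(f"{n:>7} chips: break-even after {years:.1f} years")
```

Under these assumptions the payback period shrinks in inverse proportion to the number of chips, which is the sense in which only very large deployments recover the cost of switching.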

Undeniably, leading enterprises like Google have achieved rapid single-point breakthroughs in specific sectors through vertical integration and self-built closed loops, contributing to the thriving landscape of American tech giants. In the context of the Sino-US AI duel, however, the American closed-monopoly approach has used its first-mover advantage to secure its position in the race, and passive, follower-style catch-up is increasingly inadequate for the development needs of China's intelligent computing industry.

Beyond the 'small yard with high fences,' the key to narrowing the gap between the Chinese and American AI systems is to fully leverage the advantages of the national system and unite all forces to break down barriers and build bridges.

02 Diverse Heterogeneous Ecosystem Synergy: The Open Path Leads to the Next Match Point

In contrast to the American oligopoly model, China's intelligent computing industry is rebuilding an open ecosystem, decoupled layer by layer, on a diverse heterogeneous foundation. From top-level design to industrial implementation, 'open source and openness plus collaborative innovation' has become a consensus across the domestic software and hardware stack.

At the policy level, the Action Plan for the High-Quality Development of Computing Infrastructure proposes building a computing internet that is rationally laid out, ubiquitously connected, and flexible, enhancing the integration of heterogeneous computing power and networks, and achieving cross-domain scheduling and orchestration of diverse heterogeneous computing power. Moreover, relevant departments have repeatedly encouraged all parties to innovate and explore construction and operation models for intelligent computing centers, as well as mechanisms for multi-party collaboration.

Extending to the AI application layer, the Opinions on Deepening the Implementation of the 'AI+' Action also call for deepening high-level openness in the AI field and promoting open-source technology that is widely accessible. China has evidently set out a distinct approach in AI and intelligent computing: not blindly chasing its rival down the closed route, but seeking differentiated catch-up within an open landscape.

In fact, the top-level design is entirely based on the practical needs of the industry. Under US technological blockades, China's intelligent computing industry faces two major challenges: bottlenecks in single-card computing performance and high computing costs. In addition to continuous breakthroughs in core technologies such as chips, models, and basic software, a more effective current approach is to develop larger-scale, more diverse, and efficient intelligent computing clusters to break through AI computing bottlenecks.

Industry survey results show that China has no fewer than 100 computing clusters at the scale of thousands of cards, but most of them use heterogeneous chips. If different hardware systems remain closed to one another, with no unified interface standards and with incompatible software stacks, it will be difficult to integrate and use intelligent computing resources effectively, let alone meet the application needs of models with very large parameter counts.

According to mainstream industry views, domestic AI computing power is diverse and fragmented, but it also has considerable advantages of scale. The immediate priority is not to pursue a single technology route in isolation but to break down the 'technical walls' and 'ecological walls' as soon as possible, achieve open cross-layer collaboration across the industrial chain, truly unleash the overall potential of the computing ecosystem, and move from single-point breakthroughs to integrated innovation.

Specifically, the open route aims to promote collaborative innovation in the industrial ecosystem on the basis of an open computing architecture. For example, establishing unified interface specifications encourages chip, computing system, large model, and other upstream and downstream enterprises in the industrial chain to build the ecosystem together, which reduces redundant R&D and adaptation investment and lets them share the benefits of technological breakthroughs and collaborative innovation.

Meanwhile, as collaboration standards within the open architecture converge, it becomes possible to develop commercialized software and hardware technologies that replace customized and proprietary systems, thereby lowering the application costs of computing products and making computing power broadly accessible across the entire industrial stack.
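As a loose illustration of what a unified interface specification buys, the sketch below defines a vendor-neutral `Accelerator` interface and a greedy scheduler that places jobs on whichever device would finish them earliest. Everything here is hypothetical: the class names, the `run` method, the throughput figures, and the scheduling policy are assumptions made for the example and do not correspond to any real standard or vendor API.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    work_tflop: float  # total work, in arbitrary TFLOP units


class Accelerator(ABC):
    """Hypothetical vendor-neutral interface that every backend implements."""
    vendor: str
    peak_tflops: float

    @abstractmethod
    def run(self, job: Job) -> float:
        """Return the estimated run time of the job in seconds."""


class VendorAChip(Accelerator):
    vendor, peak_tflops = "vendor-a", 300.0

    def run(self, job: Job) -> float:
        return job.work_tflop / self.peak_tflops


class VendorBChip(Accelerator):
    vendor, peak_tflops = "vendor-b", 150.0

    def run(self, job: Job) -> float:
        return job.work_tflop / self.peak_tflops


def schedule(jobs, devices):
    """Greedy placement: largest job first, onto the device that finishes it earliest."""
    busy_until = [0.0] * len(devices)
    plan = []
    for job in sorted(jobs, key=lambda j: j.work_tflop, reverse=True):
        finish = [busy_until[i] + d.run(job) for i, d in enumerate(devices)]
        best = min(range(len(devices)), key=finish.__getitem__)
        busy_until[best] = finish[best]
        plan.append((job.name, devices[best].vendor, finish[best]))
    return plan


if __name__ == "__main__":
    jobs = [Job("pretrain", 3000.0), Job("finetune", 1500.0), Job("eval", 300.0)]
    for name, vendor, done in schedule(jobs, [VendorAChip(), VendorBChip()]):
        print(f"{name:>9} -> {vendor} (done at t={done:.1f}s)")
```

Because the scheduler depends only on the shared interface, adding a third chip family would mean writing one more subclass rather than a new scheduler, which is the kind of duplicated adaptation work a common specification is meant to remove.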

Clearly, under the Chinese open system, domestic AI computing power is escaping the difficulty of generalization and wide adoption that Google's TPU faces. It connects the intelligent computing ecosystem broadly with developers and users of all kinds, ultimately forming a systematic capacity for coordinated action and supporting the implementation of AI+ more flexibly and efficiently.

At that point, the Sino-US AI duel will move beyond single-card competition and single-model comparisons and become a decisive contest of ecosystem capabilities.
