Huang Renxun Declares CPUs Face Extinction! Nvidia Aims for GPU Supremacy, but Tech Titans Won't Let It Happen

12/09 2025

Will GPUs Overtake CPUs? Unlikely.

Recently, the U.S. government announced that it would allow Nvidia to sell its H200 AI chips to 'approved customers' in China and other regions, on the condition that the government receives a 25% share of Nvidia's H200 sales revenue there. The move has once again thrust GPU computing into the spotlight.

Just a few days ago, remarks by Nvidia CEO Jensen Huang (Huang Renxun) at a public event drew significant attention. He questioned whether CPUs will still be necessary in the coming era of accelerated computing and AI. The implication was clear: GPUs might eventually replace CPUs entirely, in line with Huang's long-held vision of 'accelerated computing.'

Over the past few years, with the rise of large AI models, GPU-centric architectures have become the standard for large-model training, AI inference, autonomous driving, and cloud infrastructure, significantly shrinking the role of CPUs.

Nvidia has been the primary beneficiary of this trend: its data center revenue skyrocketed from $15 billion in FY2023 to $115.2 billion in FY2025. Its FY2026 results, covering the fiscal year that ends in late January, may show another significant increase.

Meanwhile, shares of Moore Threads, China's leading general-purpose GPU company, soared from their IPO price of 114.28 yuan to around 650 yuan on debut, fueling a GPU craze in the Chinese market. I expected the listing to do well, but the capital market's enthusiasm for GPU firms exceeded even my expectations. After going public, Moore Threads announced on December 9 that at its first MUSA Developer Conference it would unveil a full-stack, MUSA-based development strategy and long-term vision, along with a new GPU architecture.

The question, then: with demand for large-model training exploding, GPUs are more crucial than ever. But will they truly replace CPUs? Here is Leitech's perspective.

Will GPUs Entirely Replace CPUs? Unlikely!

Traditionally, CPUs have been the cornerstone of general-purpose computing thanks to their strong single-thread performance, mature instruction sets, and well-established ecosystems, which let them handle the complex tasks of operating systems and applications. These attributes have made the CPU the brain of nearly every intelligent device. Whether in PCs, smartphones, or large servers, and however powerful the device, the CPU remains essential for task scheduling.

GPUs, on the other hand, excel at massively parallel computing, with thousands or even tens of thousands of cores running simultaneously. This makes them far faster and more efficient than CPUs for repetitive, matrix-based tasks such as deep learning training, image rendering, and scientific computing.
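To make 'matrix-based' concrete: in a matrix multiplication C = A × B, every output element C[i][j] is a dot product that depends only on row i of A and column j of B, so all of them can be computed at the same time. The Python sketch below is a toy illustration, not how GPU libraries actually work: it hands each output element to a separate task in a thread pool, standing in for the many GPU cores described above. In CPython, threads will not make this pure-Python arithmetic faster; the point is only that the work splits into fully independent pieces.

```python
from concurrent.futures import ThreadPoolExecutor

Matrix = list[list[float]]


def dot(row: list[float], col: list[float]) -> float:
    """One independent unit of work: the dot product behind a single output cell."""
    return sum(a * b for a, b in zip(row, col))


def parallel_matmul(a: Matrix, b: Matrix, workers: int = 8) -> Matrix:
    """Multiply a (n x k) by b (k x m), computing every output cell as its own task.

    No task reads another task's result, which is why this kind of workload
    maps so naturally onto large numbers of parallel cores.
    """
    n, k, m = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions do not match")
    cols = [[b[r][c] for r in range(k)] for c in range(m)]  # transpose b once
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Submit all n*m cells up front; none of them waits on another.
        futures = [[pool.submit(dot, a[i], cols[j]) for j in range(m)] for i in range(n)]
        return [[f.result() for f in row] for row in futures]


if __name__ == "__main__":
    A = [[1.0, 2.0], [3.0, 4.0]]
    B = [[5.0, 6.0], [7.0, 8.0]]
    print(parallel_matmul(A, B))  # [[19.0, 22.0], [43.0, 50.0]]
```

A real GPU does the same decomposition in hardware, with each thread (or group of threads) owning a tile of the output rather than a single cell.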

However, this focus on parallelism makes GPUs ill-suited to complex, logic-heavy tasks: they lack the strong single-core performance needed for rapid logical decisions and task decomposition. To use an analogy, GPUs are like skilled factory workers, efficient at batch-processing repetitive tasks but limited as individuals, while CPUs are akin to managers who oversee production planning and factory operations.

Thus, CPUs remain indispensable in both PCs and servers, handling database operations and system scheduling, their traditional strongholds, while model training, parallel inference, and batch vector computation are where GPUs excel.
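One standard way to quantify why the 'manager' still matters, though the article itself does not invoke it, is Amdahl's law: if a fraction p of a job can be parallelized across n processors, the overall speedup is at most 1 / ((1 - p) + p / n). The sketch below uses made-up parallel fractions purely for illustration. Even with 10,000 parallel cores, a job that is 95% parallel stays below a 20x speedup, because the remaining serial 5% (the scheduling and branching work a CPU handles) dominates.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Upper bound on speedup when only part of a workload can run in parallel.

    parallel_fraction: share of the runtime that parallelizes perfectly (0..1).
    cores: number of parallel processors applied to that share.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


if __name__ == "__main__":
    # Hypothetical workloads: the serial share caps the gain no matter how many cores.
    for p in (0.50, 0.95, 0.999):
        print(f"p={p}: {amdahl_speedup(p, 10_000):.2f}x with 10,000 cores")
```

As p approaches 1 the bound approaches n, but for any p below 1 it can never exceed 1 / (1 - p), which is why fast serial execution keeps its value alongside massive parallelism.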

In my view, GPUs and CPUs are not rivals; their relationship is synergistic rather than competitive. That said, as large models become the primary workload in data centers, the GPU's prominence has indeed grown rapidly. Huang's talk of 'replacement' is best read metaphorically: GPUs are becoming the core driver of the new computing era, not a literal substitute.

So, could GPUs completely replace CPUs? In theory, yes, but it would require designing cores specialized for general-purpose tasks, enlarging caches, and building more complex control units. It would also require a complete overhaul of existing instruction sets and system architectures to suit a GPU-centric computing environment.

In simpler terms, this would mean tearing down the current computing system and rebuilding it from scratch. Even setting aside whether Intel and AMD would cooperate, the decades of accumulated global internet infrastructure alone would make such a plan extremely difficult to carry out.

Well, that's enough speculation. What is undeniable is that GPUs already dominate the cloud computing industry. From AIGC to enterprise-scale large-model training, surging demand for parallel computing has driven fundamental changes in cloud infrastructure, and GPU clusters have become the top choice for major cloud providers (though some might see this as a late catch-up, since earlier computing clusters were mostly CPU-based).

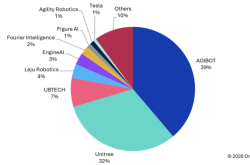

Tech Giants Develop In-House GPUs, Challenging Nvidia's Dominance

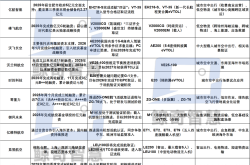

Major internet and tech companies in China and abroad are accelerating the deployment of new GPU clusters. Alibaba Cloud, for example, has developed its own Hanguang chip while also introducing next-generation GPU clusters built on third-party chips from Nvidia and others to support large models.

Baidu's Kunlun team, meanwhile, has optimized its in-house chips for AI computing and integrated them with the PaddlePaddle ecosystem into a unified solution. This lets businesses quickly deploy large AI models locally, offering a more secure and reliable alternative to cloud servers for industries such as chemical production. Baidu recently announced plans to spin off Kunlun Chips for a separate IPO, aiming to capitalize on the GPU-driven AI computing boom.

Amazon has built AI computing clusters on its own silicon, combining its Arm-based Graviton CPUs with its Trainium and Inferentia AI accelerators, and uses this heterogeneous mix to cover needs ranging from inference to training.

Google, meanwhile, is known for the energy efficiency and computing power of its Tensor Processing Units (TPUs), as well as its general-purpose Axion CPU. Google Cloud still offers Nvidia GPU clusters, but Google trains its own flagship models largely on TPUs and is gradually moving more inference workloads onto servers powered by in-house chips.

It's evident that nearly all leading vendors are buying third-party GPUs while advancing their own chip programs. The primary goal isn't to eliminate Nvidia but to avoid depending entirely on external suppliers for computing power. Especially as large-model inference becomes mainstream, energy efficiency and cost control matter more to cloud providers than peak performance, and over the long term, self-developed chips offer hard-to-match advantages on both fronts.
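The efficiency argument comes down to simple arithmetic: the energy a chip spends per request is its power draw divided by its throughput. The two chips in this sketch are entirely hypothetical, with invented numbers chosen only to show how a slower chip can still win on energy per unit of work; they do not describe any real product named in this article.

```python
from dataclasses import dataclass


@dataclass
class Chip:
    """A hypothetical inference accelerator (illustrative numbers only)."""
    name: str
    power_watts: float          # average power draw under load
    requests_per_second: float  # sustained inference throughput

    def joules_per_request(self) -> float:
        # Watts are joules per second, so J/request = (J/s) / (requests/s).
        return self.power_watts / self.requests_per_second


if __name__ == "__main__":
    fast = Chip("hypothetical high-peak GPU", power_watts=700, requests_per_second=1000)
    lean = Chip("hypothetical in-house chip", power_watts=300, requests_per_second=600)
    for chip in (fast, lean):
        print(f"{chip.name}: {chip.joules_per_request():.2f} J/request")
```

At data center scale, a gap in joules per request multiplies across billions of requests, which is why a cloud provider may accept lower peak throughput in exchange for better efficiency.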

Thus, even if computing clusters move entirely to GPUs and other accelerators, Nvidia will struggle to monopolize the market, since every major player has its own strategy.

Shift in Computing Cores: GPUs Become a Battleground

The reconfiguration of cloud computing power is just one part of the story. Changes on the device side are equally significant and arguably more telling, since they point directly to where computing architectures are heading over the next decade. From PCs to smartphones to cars, more and more AI tasks run locally rather than relying solely on the cloud.

Large-model inference, intelligent assistants, AI recommendations, and real-time image and text generation all require devices to have ample highly parallel computing power, a trend that inevitably diminishes the importance of traditional CPU architectures. Take smartphones: nearly all flagship SoCs now emphasize AI computing power, chiefly through integrated GPU and NPU acceleration. Chips from Qualcomm and MediaTek, as well as Apple's A-series (and its M-series siblings in Macs and iPads), deliver tens of TOPS of AI performance. System-level AI features such as offline speech recognition, real-time image enhancement, and native AI assistants have moved from the CPU to the GPU and NPU. This is why 'AI smartphones' have become a core selling point for new flagships: older phones lack the GPU and NPU capabilities to match the AI performance and features of newer models.
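For readers unfamiliar with the TOPS figures quoted for these chips: peak TOPS (trillions of operations per second) is commonly derived by counting each multiply-accumulate (MAC) as two operations, so peak TOPS = 2 × MAC units × clock frequency / 10^12. The unit count and clock in this sketch are hypothetical, not the specs of any chip named here, and real-world throughput is usually well below the peak and depends on numeric precision (for example INT8 versus FP16).

```python
def peak_tops(mac_units: int, clock_hz: float, ops_per_mac: int = 2) -> float:
    """Theoretical peak throughput in TOPS (10**12 operations per second).

    Each multiply-accumulate is conventionally counted as two operations
    (one multiply, one add). Real workloads rarely sustain this peak.
    """
    return mac_units * ops_per_mac * clock_hz / 1e12


if __name__ == "__main__":
    # Hypothetical accelerator: 4,096 INT8 MAC units running at 1.5 GHz.
    print(f"{peak_tops(4096, 1.5e9):.3f} TOPS")
```

Because the formula ignores memory bandwidth and utilization, two chips with the same TOPS rating can perform very differently on an actual model.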

The same applies to PCs. Since the rise of AI PCs, the most important metric for a finished machine is no longer CPU clock speed but on-device AI computing power, with GPUs shouldering most of the workload. Whether it's Intel's Arc integrated graphics, AMD's RDNA architecture, or Qualcomm's Adreno GPUs, they increasingly handle high-density matrix computation.

While many AI PC chips highlight their NPUs' superior energy efficiency, they cannot fully replace GPUs in graphics rendering and model parallel computing. Consequently, GPUs have become even more critical in the PC space, prompting vendors to significantly increase R&D investment in integrated graphics over the past year.

The most striking example, however, is autonomous driving. Perception models, sensor fusion, and path planning all require processing enormous volumes of sensor data in real time, work that naturally depends on massive parallelism. Horizon Robotics' Journey series, Tesla's FSD chip, Nvidia's Orin, and the next-generation Thor platform all use heterogeneous architectures built around highly parallel accelerators, whether GPUs (as in Orin and Thor) or dedicated neural processing units (such as Horizon's BPU and the NPUs in Tesla's FSD chip). In L3 and L4 autonomous driving especially, where real-time performance is crucial, this kind of parallel hardware is nearly irreplaceable.

You'll notice that devices that never used to need GPUs now include them to meet the demands of AI inference. The era of the GPU has truly arrived, and its dominance won't be limited to servers: it will also spark new competition on the device side, reshaping the entire industry.

CPU Vendors Launch Counteroffensive: AI Computing Ecosystems Become Key

I don't believe Intel and AMD will remain passive. Not only is the CPU far from obsolete, but no participant in the AI ecosystem wants to see Nvidia dominate unchallenged.

Nvidia's real moat is its software ecosystem rather than its hardware alone. CUDA, refined over nearly two decades, has become the de facto standard, making it difficult for other hardware vendors to compete: without an easy path for developers to migrate their code, even chips with comparable performance struggle to win support.

Thus, challenging Nvidia requires building an AI ecosystem of one's own. AMD's approach is to promote ROCm, an open-source alternative to the proprietary CUDA that is updated frequently, and this has helped its MI300 series win recognition from many vendors.

Intel has adopted a two-pronged strategy: using its Gaudi accelerators to compete in the training and inference markets, while building more powerful AI engines into its CPUs and leveraging its leadership in the PC ecosystem to deploy AI directly on the device side.

The semiconductor industry is undergoing profound changes, with a GPU-centric new landscape emerging. While Nvidia will remain the king of GPUs, CPU vendors won't disappear. They will continue to solidify their roles through heterogeneous computing, software ecosystem integration, and advantages in local deployment scenarios.

In my view, the real competition in computing isn't about eliminating one another but gaining greater influence over computing structures, software ecosystems, and system architectures. Here, Huawei holds significant advantages, with its Ascend series chips, MindSpore AI framework, CANN, and HarmonyOS ecosystem forming a complete chain from underlying hardware to AI frameworks and operating systems.

As the world enters the 'AI-native era,' whoever builds a complete AI ecosystem will seize the initiative in the next computing wave.


Source: Leitech

Images in this article are from 123RF Royalty-Free Image Library