Waymo: Pioneering "Verifiably Safe" AI for Autonomous Driving

12/15 2025

Produced by Zhineng Technology

Waymo has published a technical paper titled "Demonstrably Safe AI for Autonomous Driving." As Uber pushes forward with its global Robotaxi fleet, focusing on "capability," "scale," and "commercialization," why is Waymo placing such a strong emphasis on "verifiably safe" autonomous driving?

Despite having logged over 100 million miles of driving and significantly slashing accident rates in real-world operations, why is Waymo still meticulously dissecting its system and openly sharing details about its AI architecture? To fully grasp these questions, we must first understand the differences between Waymo and Tesla, which will then provide a clearer view of Waymo's latest autonomous driving roadmap.

Autonomous driving stands as one of the most formidable AI challenges in the real world. The question is no longer just whether end-to-end models can mimic human behavior; for Robotaxi services, the pressing question is whether they can be "proven safe."

Waymo's core philosophy is unequivocal: safety is paramount, from the model's design and architecture to its training methods.

Rather than banking solely on "larger end-to-end models," Waymo has constructed an entire AI ecosystem centered around safety verification. This ecosystem includes a "driver" model, a "simulator," and an "evaluation system," all interconnected in a closed loop and supported by a unified Waymo foundational model.

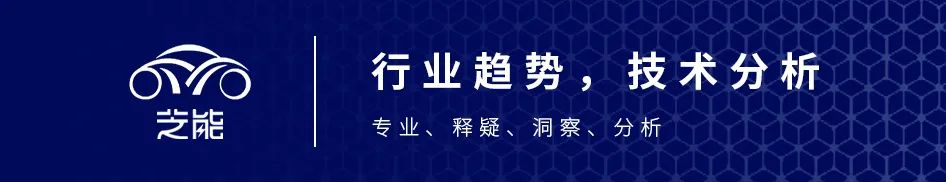

Unlike purely end-to-end or highly modular approaches, Waymo's foundational model is essentially a "world model." Internally it learns end to end through high-dimensional embedding vectors, while still maintaining compact structured representations of objects, semantic attributes, and road structures that can be materialized explicitly.

This hybrid design, though seemingly intricate, offers substantial engineering value. It enables the model to learn complex behaviors while providing mechanisms for safety verification, physically consistent closed-loop simulation, and interpretable evaluation.

Waymo is relentlessly pursuing an understanding of "why the model behaves in a certain way and within what boundaries it remains safe."

In terms of model architecture, Waymo has introduced a dual-system architecture comprising "fast thinking" and "slow thinking."

◎ System 1 acts as a real-time sensor fusion encoder, merging data from cameras, LiDAR, and millimeter-wave radar to swiftly generate object and semantic understanding for millisecond-level safety decisions.

◎ System 2 is a driving VLM, built on a vision-language model and broader world knowledge, that performs semantic reasoning for rare, complex, or even anomalous scenarios.

This approach tackles the "common sense blind spots" prevalent in end-to-end models: when non-geometric but semantically perilous events occur on the road, the system knows to avoid them rather than mechanically adhering to drivable space.
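The division of labor between the two systems can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the class names, the fields, and the rule that the slow path may only make the plan more conservative are hypothetical and are not taken from Waymo's paper.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FastPerception:
    """Hypothetical output of the fast path (System 1)."""
    path_is_drivable: bool      # geometric check: is the space ahead free?
    proposed_speed_mps: float   # speed the fast path would choose on its own


@dataclass
class SemanticAssessment:
    """Hypothetical output of the slow path (System 2)."""
    speed_cap_mps: float        # upper bound implied by semantic reasoning
    reason: str                 # human-readable explanation


def plan_speed(fast: FastPerception, slow: Optional[SemanticAssessment]) -> float:
    """Return a target speed in m/s.

    Assumed rule: the slow path can only lower the speed, never raise it,
    so a late or missing semantic result cannot make the plan riskier.
    """
    if not fast.path_is_drivable:
        return 0.0
    if slow is None:
        return fast.proposed_speed_mps
    return min(fast.proposed_speed_mps, slow.speed_cap_mps)


# Geometrically the road is clear, but the slow path flags a semantic hazard.
fast = FastPerception(path_is_drivable=True, proposed_speed_mps=12.0)
slow = SemanticAssessment(speed_cap_mps=0.0, reason="flooded road surface ahead")
print(plan_speed(fast, None))   # fast path alone
print(plan_speed(fast, slow))   # capped by the semantic assessment
```

The point of the sketch is the asymmetry: the fast path always produces an answer within its time budget, and the slower semantic layer acts as an additional constraint rather than a replacement.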

Note: This concept may ring a bell. What sets Waymo apart is its method for enabling these models to evolve continuously and safely.

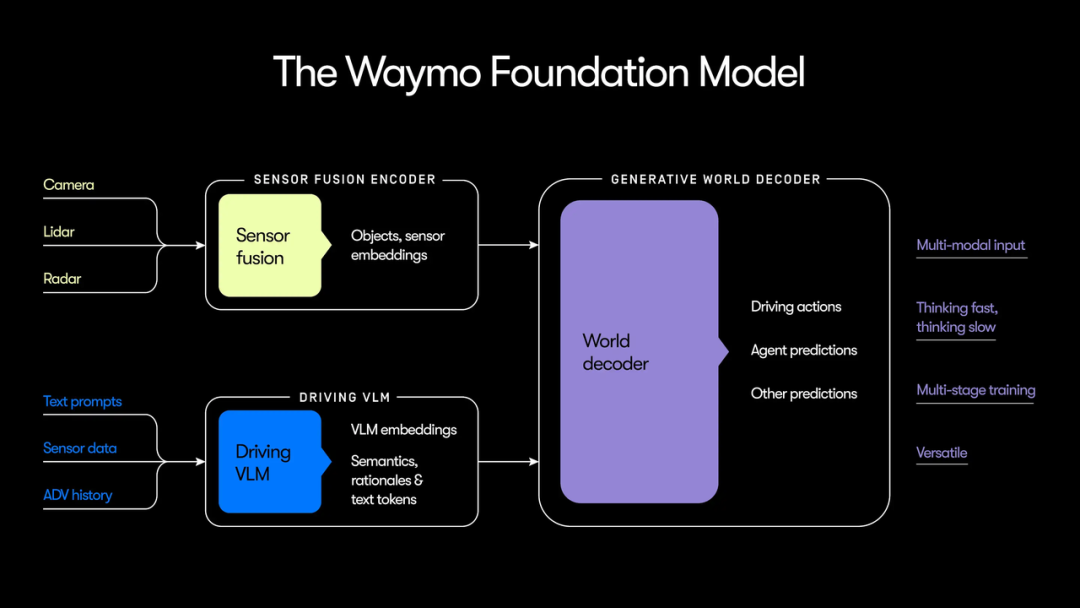

Waymo clearly differentiates between "teacher models" and "student models." The teacher model is massive, used to generate high-quality driving strategies, construct high-fidelity simulated worlds, and conduct rigorous behavioral evaluations. The student model, through knowledge distillation, inherits the teacher model's capabilities while being compressed to a computational scale suitable for real-time operation in vehicles and scalable cloud-based simulations.

This approach essentially leverages large models to explore capability boundaries and small models to handle engineering implementation, steering clear of directly deploying unverifiable, overly complex models in vehicles.
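For readers unfamiliar with knowledge distillation, here is a minimal sketch of the standard textbook formulation, in which the student is trained to match the teacher's temperature-softened output distribution. The source does not say which loss Waymo uses, so the loss, the temperature value, and the three-way maneuver example are assumptions chosen for illustration.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax over temperature-scaled logits."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(
    teacher_logits: List[float],
    student_logits: List[float],
    temperature: float = 2.0,
) -> float:
    """KL(teacher || student) between the softened distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


# Hypothetical scores over three maneuvers: keep lane, slow down, stop.
teacher = [2.0, 1.0, 0.1]
close_student = [1.9, 1.0, 0.2]
far_student = [0.1, 1.0, 2.0]

print(distillation_loss(teacher, close_student))
print(distillation_loss(teacher, far_student))
```

A student whose scores track the teacher's yields a smaller loss than one that ranks the maneuvers differently, which is the signal that training minimizes.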

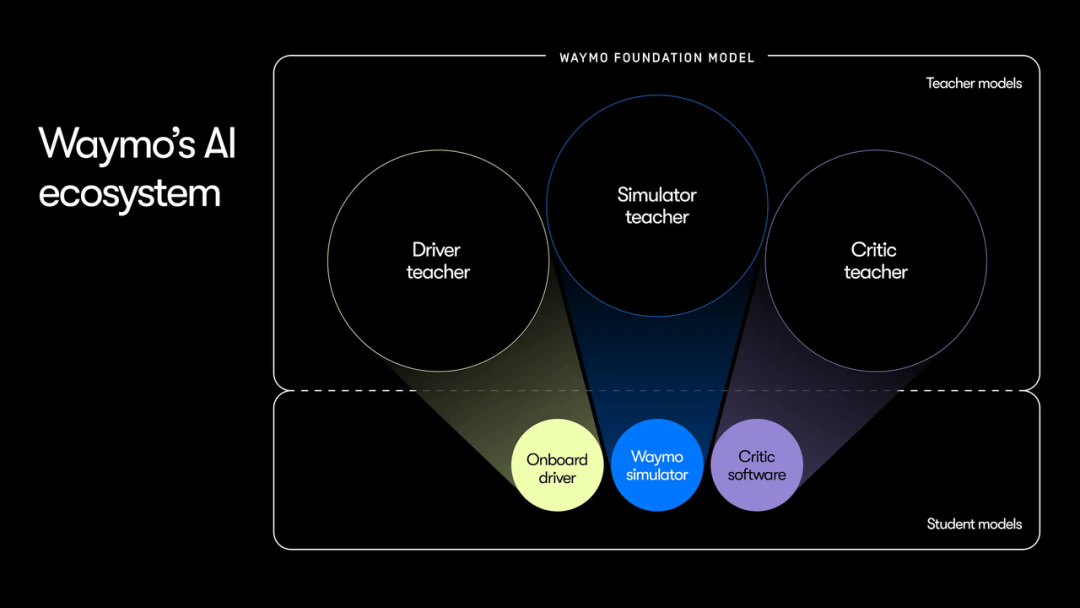

Building on this foundation, Waymo has constructed a closed learning loop.

◎ The evaluation system automatically identifies undesirable or edge-case driving behaviors in logs from real, fully autonomous driving.

◎ These behaviors are then converted into improvement samples for training the driver model.

◎ New strategies must first undergo rigorous stress testing in high-intensity simulated environments and then risk verification by safety frameworks before being deployed on real roads.
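The three steps above can be sketched as a small pipeline. This is an illustration under stated assumptions, not Waymo's implementation: `LogEvent`, the minimum-gap metric, the 2.0 m threshold, and the stand-in simulator are all hypothetical, and the training step itself is skipped.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class LogEvent:
    """One hypothetical entry from a fully autonomous driving log."""
    scenario_id: str
    min_gap_m: float  # smallest distance to any other road user, in meters


def mine_flagged_events(logs: List[LogEvent], min_safe_gap_m: float = 2.0) -> List[LogEvent]:
    """Evaluation step: keep only events where the gap fell below the threshold."""
    return [e for e in logs if e.min_gap_m < min_safe_gap_m]


def release_gate(
    policy: str,
    scenarios: List[LogEvent],
    simulate: Callable[[str, LogEvent], float],
    safety_review: Callable[[str], bool],
    min_safe_gap_m: float = 2.0,
) -> bool:
    """Deploy only if the policy clears every simulated scenario AND the review."""
    sim_ok = all(simulate(policy, s) >= min_safe_gap_m for s in scenarios)
    return sim_ok and safety_review(policy)


logs = [LogEvent("merge-01", 3.5), LogEvent("cut-in-07", 1.2), LogEvent("cyclist-03", 0.8)]
flagged = mine_flagged_events(logs)


# Stand-in simulator (assumption): the retrained "v2" policy keeps 2.5 m in
# every scenario, while the old "v1" policy reproduces the logged gap.
def fake_simulate(policy: str, event: LogEvent) -> float:
    return 2.5 if policy == "v2" else event.min_gap_m


print([e.scenario_id for e in flagged])
print(release_gate("v2", flagged, fake_simulate, lambda p: True))
print(release_gate("v1", flagged, fake_simulate, lambda p: True))
```

Note that both gates must pass: a policy that does well in simulation is still held back if the separate safety review rejects it.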

This process may seem slow, but it addresses a long-ignored industry issue: how to ensure that system risks do not "accumulate invisibly" as capabilities continuously improve.

What Waymo possesses now is not just "more data" but a massive, continuously growing body of real, fully autonomous driving data that cannot be replicated through human driving or simple simulations. Only under complete autonomous control does the system reveal its true decision-making patterns and potential flaws.

Waymo directly incorporates this data into its training and evaluation loop, enabling the system to evolve not from "human experience" but from its "own complete autonomous driving experience," a feat with few parallels in the industry.

Summary

What Waymo has disclosed this time is a methodology. Amid Tesla's aggressive progress, Waymo argues that as autonomous driving enters the phase of scaled operations, the barrier lies not in who has the larger model or more parameters but in who can establish a safety system that is verifiable, iterative, and scalable.