What Are the Pros and Cons of Pure Vision Autonomous Driving?

01/19 2026

Recently, many readers have messaged us to ask about the advantages and disadvantages of pure vision autonomous driving. Because of its low cost and lean hardware approach, pure vision is emerging as a significant development direction in the field. It has also sparked controversy, however, because its perception is weaker than LiDAR's in a range of scenarios. Today, Intelligent Driving Frontier looks at the pros and cons of pure vision autonomous driving.

What Is Pure Vision Autonomous Driving?

Pure vision autonomous driving refers to vehicles that forgo reliance on active sensors like LiDAR and millimeter-wave radar, instead depending solely on onboard cameras and image processing algorithms to perceive their surroundings. This method emulates how human drivers perceive the road with their eyes, capturing images from multiple angles using high-definition cameras and employing deep learning algorithms to interpret these images. This enables the identification of vehicles, pedestrians, traffic signs, lane markings, and other pertinent information, ultimately leading to informed driving decisions.

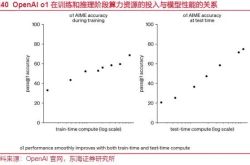

The core task of pure vision autonomous driving involves extracting useful information from two-dimensional images and subsequently inferring three-dimensional spatial structures and dynamic changes through algorithms. This facilitates functions such as determining the distance, relative speed, and potential paths of vehicles ahead. These inferences are not straightforward geometric calculations but rather 'experiences' gleaned by deep neural networks through extensive training data. 'Inferring the world from images' forms the bedrock of the pure vision approach and is the source of its strengths and weaknesses.
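
To see why depth cannot simply be read off a single image, consider a minimal sketch based on the ideal pinhole camera model. The focal length and object sizes below are hypothetical values chosen for illustration, not figures from any production system. Two objects of different real size at different distances can occupy exactly the same number of pixels, so a distance estimate is only as good as the assumed object size.

```python
# Ideal pinhole projection: an object of real height H (meters) at distance Z
# (meters) appears h = f * H / Z pixels tall, where f is the focal length in
# pixels. All numbers below are hypothetical.

def pixel_height(focal_px: float, real_height_m: float, distance_m: float) -> float:
    """Height of the object in the image, in pixels."""
    return focal_px * real_height_m / distance_m


def distance_from_height(focal_px: float, assumed_height_m: float, height_px: float) -> float:
    """Invert the projection. Only correct if the assumed real height is right."""
    return focal_px * assumed_height_m / height_px


FOCAL_PX = 1000.0  # hypothetical focal length in pixels

# A 1.5 m tall car at 30 m and a 3.0 m tall truck at 60 m look equally tall.
h_car = pixel_height(FOCAL_PX, 1.5, 30.0)
h_truck = pixel_height(FOCAL_PX, 3.0, 60.0)

# If the system wrongly assumes the truck is car-sized, it halves the distance.
wrong_estimate_m = distance_from_height(FOCAL_PX, 1.5, h_truck)
right_estimate_m = distance_from_height(FOCAL_PX, 3.0, h_truck)
```

Here `wrong_estimate_m` comes out at half of `right_estimate_m`. This is the gap that a trained network has to close using context such as object class, ground plane, and motion across frames.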

Technical Advantages of Visual Perception

The cost of visual sensors is considerably lower than that of active sensors like LiDAR. Camera hardware is affordable, compact, and easy to deploy on a large scale, which is crucial for controlling overall vehicle costs. In contrast to the previously exorbitant prices of LiDAR, which could reach tens of thousands of yuan, the investment in cameras is minimal.

Visual data also provides a wealth of semantic information. Cameras capture optical images that are rich in details, including colors, textures, and symbols. This information is invaluable for comprehending complex scenarios such as road signs, traffic light states, and dangerous gestures. In contrast, while LiDAR outputs precise point cloud data, it lacks the semantic richness found in image data.

For pure vision autonomous driving, the input is unified image data, which facilitates more focused and consistent algorithm development and iteration. In multi-sensor fusion systems, each sensor's data format varies, necessitating complex data alignment and fusion architecture design during development. Conversely, with a pure vision approach, developers only need to accumulate data and iterate models around image perception algorithms, streamlining the data processing flow.
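
As one illustration of the alignment work that a fusion stack needs and a camera-only stack avoids, the sketch below pairs camera frames with LiDAR sweeps by nearest timestamp. The function names, sample rates, and tolerance are assumptions made for this example; real systems also have to handle spatial calibration and motion compensation, which are not shown.

```python
from bisect import bisect_left
from typing import List, Sequence, Tuple


def nearest_index(sorted_ts: Sequence[float], t: float) -> int:
    """Index of the timestamp in sorted_ts closest to t (sorted_ts must be non-empty)."""
    i = bisect_left(sorted_ts, t)
    if i == 0:
        return 0
    if i == len(sorted_ts):
        return len(sorted_ts) - 1
    before, after = sorted_ts[i - 1], sorted_ts[i]
    return i if (after - t) < (t - before) else i - 1


def align(camera_ts: Sequence[float],
          lidar_ts: Sequence[float],
          max_gap_s: float) -> List[Tuple[int, int]]:
    """Pair each camera frame with the nearest LiDAR sweep within max_gap_s seconds."""
    if not lidar_ts:
        return []
    pairs = []
    for cam_idx, t in enumerate(camera_ts):
        lidar_idx = nearest_index(lidar_ts, t)
        if abs(lidar_ts[lidar_idx] - t) <= max_gap_s:
            pairs.append((cam_idx, lidar_idx))
    return pairs


# Hypothetical timestamps in seconds: camera at roughly 30 Hz, LiDAR at 10 Hz.
camera_ts = [0.000, 0.033, 0.066, 0.100]
lidar_ts = [0.000, 0.100, 0.200]
pairs = align(camera_ts, lidar_ts, max_gap_s=0.02)
```

With these values, only the first and last camera frames find a LiDAR sweep within tolerance. The frames in between must be dropped or interpolated, which is the kind of design decision a unified image input sidesteps.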

Moreover, by continuously training visual models, autonomous driving systems can achieve more precise recognition and classification of objects in diverse complex environments. The details in image data also offer more clues for predicting the intentions of dynamic objects, such as sudden changes in the direction of the vehicle ahead or pedestrians potentially crossing the road.

Technical Disadvantages of the Pure Vision Approach

While the advantages of pure vision are apparent, there are also numerous drawbacks, which is why many automakers still opt for LiDAR as their primary perception hardware.

The most significant issue with pure vision is that its distance and depth inference is less stable than that of active sensors like LiDAR and millimeter-wave radar. Cameras capture two-dimensional images, so recovering three-dimensional structure and measuring distance from them depends on the model's internal estimates and reasoning. This inference can perform well in ordinary scenarios, but it may produce misjudgments or unstable results under extreme lighting, severe occlusion, or at long range. In contrast, LiDAR emits laser pulses and measures their return times to obtain precise three-dimensional information directly, making it a more reliable ranging method in many cases.
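
The direct ranging principle mentioned for LiDAR can be written in a few lines. This is a simplified time-of-flight sketch for a single pulse, not a description of any specific sensor: range is half the round-trip time multiplied by the speed of light.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # exact, by definition of the meter


def tof_range_m(round_trip_s: float) -> float:
    """Range to the target from the measured round-trip time of one laser pulse."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def round_trip_time_s(range_m: float) -> float:
    """Round-trip time a pulse needs to reach a target at range_m and return."""
    return 2.0 * range_m / SPEED_OF_LIGHT_M_S


# A pulse that returns after one microsecond hit something about 150 m away.
range_at_1us_m = tof_range_m(1e-6)

# One nanosecond of timing error corresponds to roughly 0.15 m of range error.
range_error_per_ns_m = tof_range_m(1e-9)
```

The measurement depends only on timing, not on a learned prior about what the object is, which is why it stays consistent across object types.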

Cameras are also highly susceptible to lighting and weather conditions. Rain, fog, heavy snow, and backlighting can significantly diminish image clarity or contrast, affecting the visual algorithm's ability to recognize environmental elements. In comparison, radar-type sensors perform more stably in harsh weather conditions such as rain and fog. For instance, millimeter-wave radar can provide stable and effective information in low-visibility environments, a capability that pure vision struggles to achieve.

The generalization ability of pure vision autonomous driving in complex scenarios is also constrained. Training a pure vision system necessitates a large number of samples to cover various possible road conditions and dynamic combinations. However, the real world is characterized by changeable and uncertain factors, making it impossible for training data to be exhaustive. In unseen extreme or special combination scenarios, deep learning models may fail to make correct judgments, posing potential safety risks.

Many visual systems also demand very high computational power for image preprocessing, feature extraction, three-dimensional reconstruction, and other tasks, which is a significant challenge given the limited resources of onboard platforms. This compute requirement is a 'hidden cost': the cameras themselves may be inexpensive, but ensuring real-time performance may require a more expensive computing platform.
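
A rough back-of-the-envelope calculation shows where this load comes from. The camera count, resolution, and frame rate below are hypothetical values picked only to make the arithmetic concrete.

```python
def pixels_per_second(num_cameras: int, width: int, height: int, fps: int) -> int:
    """Raw pixels the perception stack must ingest every second."""
    return num_cameras * width * height * fps


def raw_bytes_per_second(num_cameras: int, width: int, height: int,
                         fps: int, bytes_per_pixel: int = 3) -> int:
    """Uncompressed data rate, assuming 3 bytes per pixel (8-bit RGB)."""
    return pixels_per_second(num_cameras, width, height, fps) * bytes_per_pixel


# Hypothetical rig: 8 cameras, 1920x1080 each, 30 frames per second.
px_rate = pixels_per_second(8, 1920, 1080, 30)
byte_rate = raw_bytes_per_second(8, 1920, 1080, 30)
gigabytes_per_second = byte_rate / 1e9
```

Under these assumptions the system ingests close to half a billion pixels, or about 1.5 GB of raw image data, every second before any neural network has run.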

Directions for Technological Development

At this stage, many automakers' autonomous driving solutions adopt a 'fused perception' approach, which involves incorporating perception hardware such as LiDAR and millimeter-wave radar to complement the information provided by cameras. This fusion leverages the rich semantic information of visual data and the precise spatial information provided by LiDAR, enhancing the overall perception reliability and redundancy of autonomous vehicles in complex scenarios.
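
A minimal sketch of this complementarity, under simplifying assumptions: the camera detector supplies a labeled bounding box, the LiDAR points have already been projected into image coordinates, and the fused object takes its label from the camera and its range from the median LiDAR depth inside the box. The types and function names are hypothetical.

```python
from statistics import median
from typing import NamedTuple, Optional, Sequence


class Box(NamedTuple):
    """Camera detection: semantic label plus pixel bounding box."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float


class ProjectedPoint(NamedTuple):
    """LiDAR return already projected into the image, with its measured depth."""
    u: float
    v: float
    depth_m: float


def box_depth_m(box: Box, points: Sequence[ProjectedPoint]) -> Optional[float]:
    """Median LiDAR depth of the points inside the box, or None if there are none."""
    depths = [
        p.depth_m for p in points
        if box.x_min <= p.u <= box.x_max and box.y_min <= p.v <= box.y_max
    ]
    return median(depths) if depths else None


# Hypothetical detection and point cloud.
pedestrian = Box("pedestrian", 100, 50, 200, 300)
points = [
    ProjectedPoint(120, 100, 14.8),
    ProjectedPoint(150, 200, 15.0),
    ProjectedPoint(180, 250, 15.3),
    ProjectedPoint(400, 100, 42.0),  # outside the box, ignored
]
fused = (pedestrian.label, box_depth_m(pedestrian, points))
```

The median is used here so that a few background points falling inside the box do not skew the range. When no points land in the box, the function returns `None` and the system would have to fall back on the vision estimate alone.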

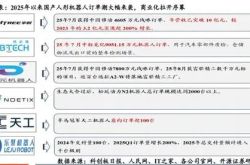

From a technological trend perspective, pure vision and multi-sensor fusion each have their applicable scenarios. Pure vision relies on image information and powerful algorithms to achieve environmental understanding at a lower cost. As computational power grows and models are optimized, its perception capabilities can continue to improve. Multi-sensor fusion, on the other hand, offers inherent advantages in the stability of environmental understanding and in safety redundancy, particularly in complex or extreme operating conditions.

Final Thoughts

Pure vision perception is not merely a low-cost option for the autonomous driving industry. It is a systematic, image-centered approach that builds perception capability through data scale and closed-loop engineering. Its advantages lie in low hardware costs, rich semantic information, and a unified data ecosystem, which together enable model iteration, online feedback, and large-scale scenario coverage. This accelerates productization and the widespread adoption of autonomous driving.
