Will CPUs Seize the Next “Storage-Like” Opportunity in the AI Application Era?

01/20 2026

With demand for computing power surging, will CPUs repeat the meteoric rise they enjoyed in the PC era? The question deserves serious thought. Large-model inference, edge AI, and smart IoT are pushing computing load to unprecedented levels. Intel and AMD shares are quietly climbing, the Arm architecture is emerging as a dark horse, and even tech giants like Apple and Xiaomi are investing more heavily in CPUs for their self-developed chips.

Is this just a fleeting trend, or does it mark the dawn of a structural opportunity? With CPU utilization in cloud clusters nearing the red line and each terminal device demanding independent AI inference capabilities, are traditional processors on the cusp of a transformative explosion?

01. Inference Poised to Become a Pivotal Direction

As AI applications move from laboratories into diverse industries, inference is overtaking training as the primary battleground for AI computing power. According to a joint forecast by IDC and Inspur Information, training accounted for 58.7% of China’s AI server workload in 2023, whereas inference is projected to account for 72.6% by 2027. As large models mature, enterprise demand for computing power no longer centers on costly training clusters but on deploying models into real-world business scenarios efficiently and economically. This shift has brought the traditional general-purpose processor, the CPU, back into the limelight.

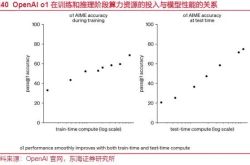

In inference scenarios, the cost-effectiveness of CPUs is being rediscovered. Compared to GPUs, which cost hundreds of thousands and consume staggering amounts of power, CPUs are highly competitive in cost, availability, and total cost of ownership (TCO). According to Intel, using CPUs for AI inference avoids building new IT infrastructure, reuses idle computing power on existing platforms, and sidesteps the management complexity of heterogeneous hardware. More significantly, technologies such as AMX acceleration and INT8 quantization have lifted the inference performance of modern CPUs substantially. Tests indicate that optimized Xeon processors can achieve up to an 8.24-fold increase in inference speed on models like ResNet-50, with an accuracy loss of less than 0.17%. This approach suits small and medium-sized enterprises well: they do not need GPT-4-level computing power, but a cost-effective solution capable of running 32B-parameter models.
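To make the INT8 quantization idea concrete, here is a minimal sketch of symmetric per-tensor quantization in plain Python. It is only an illustration of the principle, not Intel's implementation or the AMX code path; the function names and the sample weights are hypothetical.

```python
def quantize_int8(values):
    """Symmetric per-tensor INT8 quantization: returns (integer codes, scale)."""
    max_abs = max(abs(v) for v in values)
    # One shared scale maps the largest magnitude onto the INT8 limit 127.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate floating-point values."""
    return [c * scale for c in codes]


# Hypothetical weights, chosen only for illustration.
weights = [0.82, -1.27, 0.05, 0.4, -0.33]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)

# Rounding to the nearest code bounds the per-weight error by half a step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, scale, max_error)
```

Each weight is stored in one byte instead of two or four, which is where the memory and bandwidth savings come from. The small rounding error per weight is the source of the accuracy loss that quantized inference has to keep in check.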

The strength of CPUs lies precisely in the “long-tail market” of AI inference. The first category encompasses the deployment of small language models (SLMs), such as DeepSeek-R1 32B and Qwen-32B, which excel in Chinese language capabilities within enterprise scenarios and have moderate parameter sizes that CPUs can fully handle. The second category involves data preprocessing and vectorization tasks, including text cleaning, feature extraction, and embedding generation, which are naturally suited to the serial processing capabilities of CPUs. The third category comprises “long-tail” inference tasks characterized by high concurrency but simple individual computations, such as customer service Q&A and content moderation. Here, CPUs can process hundreds of lightweight requests in parallel through multi-core processing, achieving higher throughput. The common feature of these scenarios is relatively lenient latency requirements but extreme cost sensitivity, making them ideal stages for CPUs to showcase their capabilities.
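The third category, many simple requests spread across cores, can be sketched with Python's standard `concurrent.futures` module. This is a toy under stated assumptions: `moderate` is a hypothetical keyword check standing in for a lightweight model call, and `BLOCKED_TERMS` is an invented rule set.

```python
import os
from concurrent.futures import ProcessPoolExecutor

BLOCKED_TERMS = {"spam", "scam"}  # hypothetical rule set, for illustration only


def moderate(text):
    """Stand-in for one lightweight inference request (e.g. content moderation)."""
    words = set(text.lower().split())
    return "reject" if words & BLOCKED_TERMS else "accept"


def moderate_batch(texts, workers=None):
    """Fan independent requests out across CPU cores, one worker process per core."""
    workers = workers or os.cpu_count() or 1
    with ProcessPoolExecutor(max_workers=workers) as pool:
        # chunksize groups requests so inter-process overhead stays small.
        return list(pool.map(moderate, texts, chunksize=16))


if __name__ == "__main__":
    requests = ["great product", "this is a scam"] * 100
    results = moderate_batch(requests)
    print(results.count("reject"), "rejected of", len(results))
```

Because each request is independent and cheap, throughput scales with the number of cores rather than with the speed of any single one, which is the property the long-tail scenarios above rely on.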

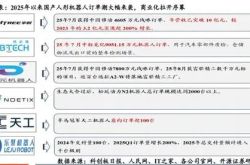

Since 2025, numerous listed companies have brought related products to market. Inspur Information (000977) took the lead in March by launching the YuanBrain CPU Inference Server NF8260G7, equipped with four Intel Xeon processors. Using tensor parallelization and AMX acceleration, the server can efficiently run the DeepSeek-R1 32B model, achieving over 20 tokens/s per user while handling 20 concurrent requests. Digital China (000034) unveiled the KunTai R622 K2 Inference Server at the WAIC conference in July, based on the Kunpeng CPU architecture. Supporting four acceleration cards within a 2U space, it takes a “high-performance, low-cost” approach, targeting budget-sensitive industries like finance and telecommunications. These vendors’ strategic moves send a clear signal: CPU inference is not a second-best option but a proactive strategic choice.
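A quick back-of-the-envelope calculation puts the figures above in perspective. The per-user rate and concurrency come from the text; the bytes-per-parameter values are assumptions, since the article does not state which precision the server uses, and the estimate covers weights only (no KV cache or activations).

```python
def weight_memory_gb(params_billion, bytes_per_param):
    """Approximate weight footprint in GB (1 GB = 1e9 bytes), weights only."""
    return params_billion * bytes_per_param


def aggregate_tokens_per_s(per_user_tokens_per_s, concurrent_users):
    """Total generation rate when every user is served at the per-user rate."""
    return per_user_tokens_per_s * concurrent_users


# Assumed precisions: 2 bytes/param (16-bit) and 1 byte/param (INT8).
print(weight_memory_gb(32, 2))         # 64 GB for a 32B model at 16-bit
print(weight_memory_gb(32, 1))         # 32 GB for a 32B model at INT8
print(aggregate_tokens_per_s(20, 20))  # 400 tokens/s as a lower bound
```

Under these assumptions, a 32B model fits in the main memory of a multi-socket server, and "over 20 tokens/s per user" at 20 concurrent requests corresponds to more than 400 tokens/s in aggregate.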

The deeper logic lies in the fact that AI computing power is shifting toward “decentralization” and “scenario-based” deployment. When every factory, hospital, and even every smartphone requires embedded inference capabilities, it is neither feasible nor necessary to rely solely on GPU clusters. As a general-purpose computing foundation, CPUs can seamlessly integrate AI capabilities into existing IT architectures, enabling a smooth transition to “computing as a service.” In this sense, CPUs are indeed becoming the “new storage” of the AI era: they may not be the most dazzling, but they are indispensable computing infrastructure.

02. CPUs May Become a Bottleneck Sooner Than GPUs

In the era of Agent-driven reinforcement learning (RL), the bottleneck effect of CPUs is emerging in a more subtle yet critical manner than GPU shortages. Unlike traditional single-task RL, modern Agent systems necessitate running hundreds or thousands of independent environment instances simultaneously to generate training data, making CPUs the de facto first bottleneck.

In September 2025, when Ant Group open-sourced the AWORLD framework and decoupled Agent training into an inference/execution side and a training side, it had to use CPU clusters to host massive numbers of environment instances, with GPUs solely responsible for model updates. This architecture was not a design preference but an inevitable consequence of environment-intensive computing: when each Agent interacts with an operating system, code interpreter, or GUI, it requires an independent CPU process for state management, action parsing, and reward calculation, so the available CPU capacity directly determines how many trajectories can be explored simultaneously.
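The decoupled layout described above can be sketched as a producer-consumer pipeline. This is not AWORLD's API: every name here is hypothetical, threads stand in for the separate CPU processes or machines a real system would use, and the environment logic is a placeholder.

```python
import queue
import threading


def environment_worker(worker_id, steps, policy_version, out_queue):
    """Stand-in for one CPU-hosted environment instance."""
    state = 0
    for step in range(steps):
        action = (state + worker_id) % 3         # placeholder for action parsing
        state += action                          # placeholder for state management
        reward = 1.0 if state % 2 == 0 else 0.0  # placeholder for reward calculation
        out_queue.put({"worker": worker_id, "step": step, "reward": reward,
                       "policy_version": policy_version[0]})


def learner(total_transitions, batch_size, policy_version, in_queue):
    """Stand-in for the GPU side: consume full batches, then update the model."""
    batch = []
    for _ in range(total_transitions):
        batch.append(in_queue.get())  # blocks (idles) until the CPU side delivers
        if len(batch) == batch_size:
            policy_version[0] += 1    # placeholder for one model update
            batch.clear()
    return policy_version[0]


def run(num_envs=8, steps=16, batch_size=32):
    """Start the environment workers, run the learner, return the update count."""
    transitions = queue.Queue()
    policy_version = [0]  # shared counter; only the learner writes to it
    workers = [threading.Thread(target=environment_worker,
                                args=(i, steps, policy_version, transitions))
               for i in range(num_envs)]
    for w in workers:
        w.start()
        
    updates = learner(num_envs * steps, batch_size, policy_version, transitions)
    for w in workers:
        w.join()
    return updates


print(run())  # 8 envs x 16 steps = 128 transitions, i.e. 4 batches of 32
```

The learner can only update as fast as the workers fill the queue, so the number of environment instances the CPU side can host sets the ceiling on how much experience reaches the GPU.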

The deeper problem is the asynchronous imbalance of the CPU-GPU pipeline. When environment simulation on the CPU side cannot keep pace with the GPU’s inference throughput, policy lag worsens dramatically: GPUs sit idle waiting for experience data, and a damaging gap opens between the policy the Agent is learning and the older policy used during data collection. This lag not only reduces sample efficiency but also induces training oscillations in on-policy algorithms like PPO, potentially leading to policy divergence. The VideoDataset project open-sourced by Zhiyuan Robotics in March 2025 illustrates the bottleneck: its CPU software decoding scheme limited training speed, and switching to GPU hardware decoding increased throughput by 3-4 times and brought CPU utilization down from saturation.
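Why lag hurts PPO in particular can be seen in its clipped surrogate objective, which compares the current policy with the one that collected the data through a probability ratio. The sketch below evaluates that term for a single sample; the probabilities are hypothetical, and the clip range of 0.2 is a commonly used default rather than a value from the article.

```python
import math


def ppo_clipped_term(logp_new, logp_old, advantage, clip_eps=0.2):
    """Per-sample PPO clipped surrogate: returns (probability ratio, objective term)."""
    ratio = math.exp(logp_new - logp_old)
    clipped_ratio = max(1.0 - clip_eps, min(1.0 + clip_eps, ratio))
    return ratio, min(ratio * advantage, clipped_ratio * advantage)


# Fresh data: the collecting policy and the current policy agree on this action.
fresh_ratio, fresh_term = ppo_clipped_term(math.log(0.30), math.log(0.30), advantage=1.0)

# Stale data: the current policy has drifted and now doubles the action probability.
stale_ratio, stale_term = ppo_clipped_term(math.log(0.60), math.log(0.30), advantage=1.0)

print(fresh_ratio, fresh_term)
print(stale_ratio, stale_term)
```

With fresh data the ratio is 1 and the sample contributes fully. With stale data the ratio is about 2, the term is capped at the clip boundary, and the sample stops contributing gradient, which is one way lag translates into wasted samples.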

Industrial-grade practice in 2025 further exposed the systemic damage CPU bottlenecks do to convergence stability. Tencent’s AtlasTraining RL framework had to design a heterogeneous computing architecture specifically to coordinate CPU-GPU collaboration when training trillion-parameter models, having found that minor differences in the random seeds of environment interactions and in CPU core scheduling strategies could alter final policy performance through the butterfly effect of early learning trajectories. More severely, the non-stationarity of multi-agent reinforcement learning (MARL) exacerbates the issue: when hundreds of Agent policies update simultaneously, CPUs must not only simulate environments but also compute joint rewards and coordinate communication in real time, as the joint state space grows exponentially with the number of Agents.

Essentially, Agent RL shifts the computing paradigm from “model-intensive” to “environment-intensive,” with CPUs serving as the physical foundation for environment simulation. When Agents need to explore complex behaviors like tool usage and long-chain reasoning, each environment instance functions as a miniature operating system, consuming 1-2 CPU cores. At this juncture, no matter how many A100s or H200s are deployed, if CPU core counts are insufficient, GPU utilization will remain below 30%, and convergence time will extend from weeks to months.
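A rough capacity model shows how core counts cap GPU utilization. Only the "1-2 cores per environment" figure comes from the text; the core count, per-environment step rate, and GPU consumption rate below are hypothetical numbers chosen to make the arithmetic easy to follow.

```python
import math


def estimated_gpu_utilization(cpu_cores, cores_per_env, steps_per_s_per_env, gpu_steps_per_s):
    """Fraction of time the GPU is busy when environment supply is the limit."""
    num_envs = cpu_cores // cores_per_env
    supply = num_envs * steps_per_s_per_env  # experience produced per second
    return min(1.0, supply / gpu_steps_per_s)


def cores_to_saturate(cores_per_env, steps_per_s_per_env, gpu_steps_per_s):
    """CPU cores needed so that environments keep the GPU fully fed."""
    envs_needed = math.ceil(gpu_steps_per_s / steps_per_s_per_env)
    return envs_needed * cores_per_env


# Hypothetical node: 128 cores, 2 cores per environment, 5 steps/s per environment,
# and a GPU that could consume 1280 steps/s if data were always available.
print(estimated_gpu_utilization(128, 2, 5, 1280))  # 64 envs -> 320 steps/s -> 0.25
print(cores_to_saturate(2, 5, 1280))               # 256 envs -> 512 cores
```

In this toy setting the GPU is busy a quarter of the time, and adding more GPUs would change nothing; only quadrupling the CPU cores would close the gap.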

By 2025, this bottleneck had spread from academic research to industrial practice, making the CPU bottleneck the central battleground of RL infrastructure. In the Agent era’s computing power race, the decisive factor may not be peak GPU computing power but whether there are enough CPU cores to “feed” those hungry intelligent agents.
