How does LOFIC technology overcome the perception bottlenecks of pure vision autonomous driving under complex lighting conditions?

01/20 2026

In the debate over technical approaches to autonomous driving, the pure vision solution has become a primary choice for many OEMs because it aligns more closely with human driving logic, costs less, and scales more easily. However, pure vision systems can perform poorly under extreme lighting conditions, such as the abrupt brightness changes when entering or exiting tunnels, intense backlighting at night, and the flickering LED signals common on urban streets. This weakness has led many to favor LiDAR-based approaches instead.

In recent discussions of how extreme lighting conditions affect cameras (related reading: How do extreme lighting conditions affect autonomous driving cameras?), some have proposed Lateral Overflow Integration Capacitor (LOFIC) technology as a remedy. Today, let's delve into this topic.

The Physical Boundaries of Photoelectric Conversion and the Shortcomings of Traditional HDR

Before discussing LOFIC technology, we must first understand the principle by which camera sensors capture light. Each pixel in an image sensor can be seen as a 'water tank' that collects photons and converts them into electrical charges. In an ideal state, the stronger the light, the more charges accumulate in the tank, resulting in a brighter image signal.

However, this physical structure has a natural limitation known as the 'Full Well Capacity' (FWC). When light is extremely intense, the charges in the pixel tank quickly fill up and overflow, leading to large areas of overexposure and loss of detail in the image. In autonomous driving scenarios, this characteristic is well illustrated when a vehicle exits a dark tunnel into blinding sunlight, causing the camera to experience temporary 'blindness' and fail to recognize road conditions or obstacles ahead.
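The saturation effect described above can be sketched in a few lines. This is a toy model rather than a sensor simulation: the 30,000-electron capacity is only an illustrative figure for a conventional pixel, and the scene values and the `collect_charge` helper are hypothetical.

```python
FULL_WELL_CAPACITY = 30_000  # electrons; illustrative value for a conventional pixel


def collect_charge(photo_electrons: float, fwc: int = FULL_WELL_CAPACITY) -> float:
    """Charge a conventional pixel can hold: anything above the full well is lost."""
    return min(photo_electrons, fwc)


# Three neighbouring pixels looking at a bright, textured surface (hypothetical values).
scene = [45_000, 60_000, 90_000]
readout = [collect_charge(e) for e in scene]

# Every pixel reports the same clipped value, so the texture in the scene is gone.
print(readout)
```

The three scene values differ by a factor of two, yet the readout is flat. That flat region is the "blindness" a perception model sees at a tunnel exit.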

To address such extreme lighting scenarios, the industry commonly employs High Dynamic Range (HDR) technology. The most prevalent method is 'multi-exposure synthesis,' where the sensor captures several images with varying exposure times in rapid succession and then combines them through post-processing algorithms. This approach allows short-exposure images to retain highlight details, while long-exposure images capture dark shadows.

However, in high-speed autonomous driving scenarios, this synthesis method introduces a critical flaw: motion artifacts (ghosting). Because the exposures are separated in time, even by just a few milliseconds, objects can shift noticeably within the frame when the vehicle is traveling at high speed. The synthesized image may show double images or ghosting along edges, which severely interferes with the neural network's ability to judge object edges, depth, and motion trajectories. It can even cause the algorithm to misidentify obstacles.
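To get a feel for the scale of the problem, the following sketch computes how far a vehicle moves between two exposures. The speed and gap values are assumptions chosen for illustration, and `displacement_m` is a hypothetical helper. The resulting shift in pixels also depends on object distance and lens geometry, which this sketch ignores.

```python
def displacement_m(speed_kmh: float, gap_ms: float) -> float:
    """Distance travelled in metres during the time gap between two exposures."""
    speed_m_per_s = speed_kmh / 3.6
    return speed_m_per_s * gap_ms / 1000.0


# Assumed inter-exposure gaps of 1, 5 and 10 ms at an assumed 120 km/h.
for gap in (1, 5, 10):
    shift_cm = displacement_m(120, gap) * 100
    print(f"{gap} ms gap at 120 km/h: {shift_cm:.1f} cm of relative motion")
```

At 120 km/h, a gap of only 5 ms already corresponds to roughly 17 cm of relative motion, which is enough to smear the edges of nearby objects across the merged frame.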

In addition to motion artifacts, autonomous driving faces another challenge arising from exposure logic: the flickering of LED signals. Nowadays, traffic lights, road signs, and vehicle taillights almost exclusively use LED sources. Since LEDs control brightness through Pulse Width Modulation (PWM), they actually switch on and off at frequencies too high for the human eye to detect. If the camera's exposure time is too short and falls entirely within the LED's 'off' phase, the signal may appear extinguished, or flicker from frame to frame, in the system's output. This unstable visual input is a significant source of interference for perception algorithms that rely on visual signals for traffic light recognition and distance maintenance.
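The interaction between exposure time and PWM can be illustrated with a small timing model. The 90 Hz frequency and 10% duty cycle are assumptions for the sake of the example, not values from any specific signal head, and `led_on_time_captured` is a hypothetical helper.

```python
def led_on_time_captured(exposure_ms: float, start_ms: float,
                         pwm_hz: float = 90.0, duty: float = 0.1) -> float:
    """Milliseconds of LED 'on' time that fall inside one exposure window.

    The LED is modelled as on during [k*T, k*T + duty*T) for every integer k,
    where T is the PWM period.
    """
    period = 1000.0 / pwm_hz
    on_len = duty * period
    end_ms = start_ms + exposure_ms
    total = 0.0
    k = int(start_ms // period) - 1  # start one period early to be safe
    while k * period < end_ms:
        on_start = k * period
        on_end = on_start + on_len
        # Overlap between the exposure window and this 'on' pulse.
        total += max(0.0, min(end_ms, on_end) - max(start_ms, on_start))
        k += 1
    return total


short = led_on_time_captured(exposure_ms=0.5, start_ms=5.0)   # lands in the 'off' phase
long_ = led_on_time_captured(exposure_ms=12.0, start_ms=5.0)  # longer than one period
print(short, long_)
```

In this model the 0.5 ms exposure collects no LED light at all, so the lamp looks dark in that frame. Any exposure at least one PWM period long always contains a full pulse, whatever its start time.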

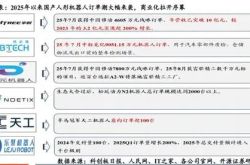

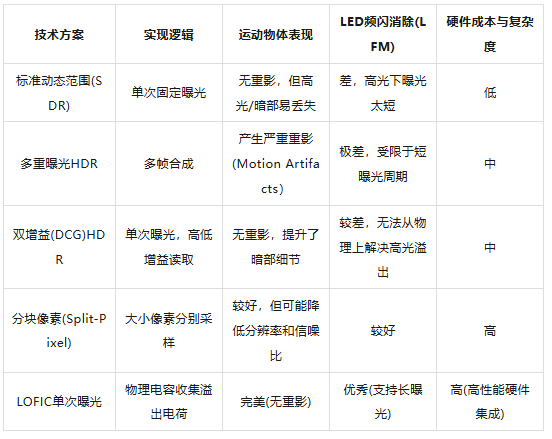

The table below illustrates the performance differences of various dynamic range technologies in high-speed autonomous driving scenarios, clearly revealing the limitations of traditional approaches.

The Physical Mechanism of LOFIC Technology

LOFIC technology represents a reconfiguration of image sensor pixel architecture. Its full name, 'Lateral Overflow Integration Capacitor,' refers to the addition of a high-density capacitor next to each pixel's photodiode (PD) to capture 'overflow charges.'

If we liken a traditional pixel to a water tank prone to filling up, LOFIC technology adds an overflow outlet on the side of the main tank, connected to a much larger reserve tank. When external light intensifies and the main tank's charges approach overflow, excess electrons flow through a controlled transistor switch into this lateral integration capacitor, preventing signal loss or saturation.

From a circuit perspective, this design allows the sensor to utilize two different modes simultaneously during a single exposure to collect light signals. In darker areas, the sensor closes the overflow switch and uses High Conversion Gain (HCG) mode to capture extremely weak light signals, ensuring clean dark images with reduced noise. In intensely bright areas, the sensor opens the overflow path, engaging the capacitor in Low Conversion Gain (LCG) mode, significantly expanding the total number of electrons the pixel can accommodate. This 'single exposure, dual-path parallel' processing logic enables the pixel's Full Well Capacity (FWC) to leap from the traditional 30,000 electrons to 270,000 or even more.
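A minimal sketch of this charge-splitting logic is shown below, using the 30,000 and 270,000 electron figures quoted above. It is a deliberate simplification: the function names are hypothetical, noise is ignored, and a real sensor would typically read out both paths and combine them rather than pick one.

```python
PD_FWC = 30_000                   # photodiode full well, electrons (figure from the text)
TOTAL_FWC = 270_000               # photodiode plus overflow capacitor (figure from the text)
LOFIC_CAP = TOTAL_FWC - PD_FWC    # capacity attributed to the overflow capacitor


def expose(photo_electrons: int) -> tuple[int, int]:
    """Split the generated charge between the photodiode and the overflow capacitor."""
    in_pd = min(photo_electrons, PD_FWC)
    overflow = min(max(photo_electrons - PD_FWC, 0), LOFIC_CAP)
    return in_pd, overflow


def read_out(photo_electrons: int) -> tuple[str, int]:
    """Simplified path selection: HCG if nothing overflowed, otherwise LCG."""
    in_pd, overflow = expose(photo_electrons)
    if overflow == 0:
        return "HCG", in_pd            # dim pixel: low-noise, high-gain path
    return "LCG", in_pd + overflow     # bright pixel: merged charge, low-gain path


for electrons in (500, 90_000, 1_000_000):
    print(electrons, read_out(electrons))
```

A dim pixel (500 electrons) stays on the HCG path, and a bright pixel (90,000 electrons) is recovered in full through the LCG path. Only the extreme 1,000,000-electron case clips, and it does so at 270,000 rather than 30,000.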

The most direct benefit of this physical charge collection method is that the sensor no longer needs to shorten exposure times under extremely bright light. With a 'secondary reservoir' to handle overflow electrons, the shutter time can be extended, allowing the camera to capture a complete LED flicker cycle in each frame. This means LOFIC delivers LED Flicker Mitigation (LFM) at the hardware level, ensuring traffic signals remain steadily illuminated in the video stream.

Since all bright and dark information is collected during a single shutter cycle, there is no temporal misalignment in the image, completely eliminating motion ghosting. For autonomous vehicles traveling at speeds exceeding 100 km/h, this 'spatiotemporally consistent' high-definition imagery is essential for perception algorithms to make accurate decisions.

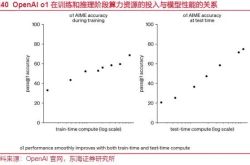

In practical applications, the expansion of dynamic range is remarkable. Dynamic range is measured in decibels (dB), with traditional automotive sensors typically ranging from 60 dB to 90 dB. Sensors employing LOFIC technology can easily exceed 120 dB in a single exposure, reaching up to 140 dB. Visually, this means a single frame can clearly display both the wall textures in a dark tunnel and the license plates of distant vehicles in sunlight outside the tunnel. This faithful reproduction of extreme real-world contrast provides a solid foundation for pure vision systems to drive safely in complex lighting environments.

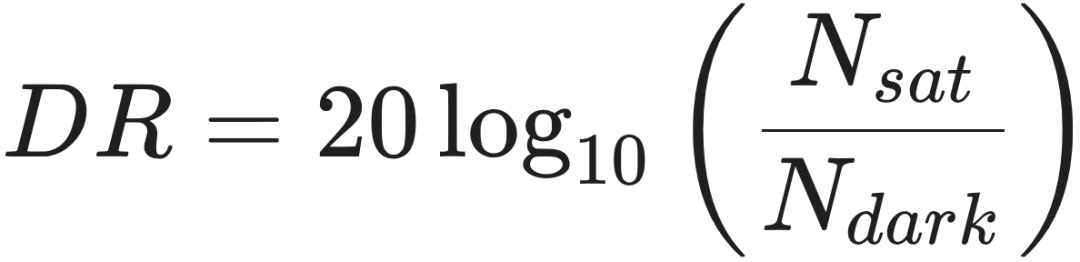

We can better understand this improvement through the mathematical definition of dynamic range. Dynamic Range (DR) is typically defined as the ratio of the maximum measurable signal (saturated signal N_{sat}) to the minimum measurable signal (dark noise N_{dark}), expressed in decibels:

$$\mathrm{DR} = 20 \log_{10}\left(\frac{N_{sat}}{N_{dark}}\right)\ \text{dB}$$

In the LOFIC structure, N_{sat} increases by nearly an order of magnitude due to the added capacitor, while Dual Conversion Gain (DCG) technology reduces N_{dark}. Because dynamic range depends on the ratio of the two, raising the numerator and lowering the denominator compound each other. This hardware-level evolution returns vision solutions, which previously relied on complex post-processing to repair images, to their original purpose of acquiring high-quality raw signals.
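As a rough worked example, assume the common convention of expressing the signal ratio as 20·log10 in decibels. The sketch below then estimates how much dynamic range the larger full well contributes on its own, and what the quoted dB figures mean as contrast ratios. The helper names are hypothetical, and the noise floor of 1 electron is a placeholder that cancels out of the difference.

```python
import math


def dynamic_range_db(n_sat: float, n_dark: float) -> float:
    """Dynamic range in dB for a given saturation signal and noise floor."""
    return 20 * math.log10(n_sat / n_dark)


def contrast_ratio(db: float) -> float:
    """Linear brightest-to-darkest ratio that corresponds to a dB figure."""
    return 10 ** (db / 20)


# Gain from growing the full well from 30,000 to 270,000 electrons, noise unchanged.
fwc_gain_db = dynamic_range_db(270_000, 1.0) - dynamic_range_db(30_000, 1.0)

# Additional gain if the noise floor were halved (an assumed factor, for illustration).
noise_gain_db = dynamic_range_db(1.0, 0.5) - dynamic_range_db(1.0, 1.0)

print(f"FWC increase alone: +{fwc_gain_db:.1f} dB")
print(f"Halving the noise floor: +{noise_gain_db:.1f} dB")
print(f"120 dB is a contrast of {contrast_ratio(120):,.0f}:1")
print(f"140 dB is a contrast of {contrast_ratio(140):,.0f}:1")
```

The ninefold increase in capacity is worth about 19 dB, and every halving of the noise floor adds about 6 dB more. On the decibel scale the two gains simply add, which is why improving both ends of the ratio pays off.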

Deep Optimization of Autonomous Driving Algorithms by LOFIC

High-quality sensor data is what feeds algorithm models. In the perception architecture of autonomous driving, Transformer-based BEV (Bird's Eye View) models have become mainstream. The core logic of these algorithms is to extract 2D image features captured by multiple cameras installed around the vehicle and then 'stitch' them into a unified, top-down 3D space through a complex spatial transformation network. If we compare this process to assembling a jigsaw puzzle, each photo provided by the cameras represents a puzzle piece. If the pieces themselves are blurry, overexposed, or contain flicker interference, the resulting 3D perception will exhibit various logical flaws.

LOFIC's benefit to perception algorithms shows up first in the stability of feature extraction. Image data is preprocessed before it enters the neural network's backbone. If the sensor output shows significant background noise at boundaries between bright and dark areas, or lacks detail in highlight regions, the network's confidence when extracting features such as lane lines, curbs, or pedestrian contours drops significantly.

This matters especially in the BEV architecture, where the system needs to fuse images from different perspectives. The highly linear, motion-artifact-free images provided by LOFIC make feature alignment between perspectives much smoother. This significantly enhances the system's accuracy in detecting distant objects, allowing the vehicle to anticipate potential hazards in abnormally lit areas ahead from a greater distance.

For cutting-edge algorithms like the 3D Occupancy Network, the importance of LOFIC is even more self-evident. The goal of the occupancy network is to determine whether each volume unit (voxel) in space is occupied. This requires the model to have a strong perception of depth information in the scene. Depth perception largely relies on texture and contrast details in the image. When visual sensors saturate under intense light, textures in the image disappear, turning into a blank white area, preventing the algorithm from deducing the depth of that region. LOFIC preserves fine textures in highlighted areas, enabling the algorithm to accurately identify road undulations and obstacle shapes in scenarios such as 'snow under direct sunlight at noon' or 'intense light reflections on wet road surfaces,' avoiding 'blind spots' in the perception system.

Furthermore, autonomous driving systems have extremely stringent real-time requirements. Multi-exposure synthesis HDR not only increases sensor power consumption but also imposes a significant computational burden on the backend Image Signal Processor (ISP). Synthesizing each frame consumes valuable computational resources and introduces processing delays. For autonomous driving systems processing dozens of frames per second at high speeds, even millisecond-level delays can pose dangers. LOFIC technology simplifies backend processing logic by completing high dynamic range information collection in a single hardware circuit pass, reducing overall system power consumption and latency. This allows computational resources to be allocated more toward higher-level planning and control tasks.

Industry Trends and Future Prospects

As autonomous driving technology advances toward Levels 3 and 4, the industry's tolerance for errors in visual perception is approaching zero. Against this backdrop, the technological competition among sensor manufacturers has shifted from merely 'comparing pixel counts' to 'comparing pixel quality.' LOFIC technology, with its distinct advantages in enhancing pixel dynamic range and eliminating flicker, is rapidly moving from laboratories to mass production.

From a market development perspective, the application of LOFIC technology is reshaping the standard parameters of automotive cameras. Future mainstream automotive cameras will no longer be simple imaging units but sophisticated intelligent perception nodes integrating multiple gain modes, built-in charge management capacitors, and hardware-level LFM capabilities. Although the introduction of this technology increases the hardware cost of individual sensors, its value to the entire vehicle is immense. It reduces reliance on redundant sensors, lowers research and development costs associated with processing noisy data in algorithm training, and, more importantly, fills an 'optical gap' in pure vision solutions, enabling them to challenge the position of LiDAR in more extreme environments.

Final Thoughts

Looking ahead, as sensor technology further miniaturizes and 3D stacking processes mature, LOFIC technology is expected to integrate more closely with on-chip AI processing units. Future sensors may complete preliminary semantic filtering and feature enhancement at the moment charges leave the pixel. Throughout this evolution, the Lateral Overflow Integration Capacitor, as a foundational physical innovation, will continue to serve its role as a 'secondary water tank,' enabling autonomous vehicles to consistently perceive the road ahead clearly, regardless of lighting variations. For pure vision autonomous driving, LOFIC represents not just a hardware improvement but a ticket to the era of all-weather autonomous driving.
