After Discussing AI with People, I Feel Everyone Is Like a Frog in Boiling Water

01/21 2026

"What will our lives be like when AI truly becomes a part of our world?"

Those of us who watched sci-fi movies in our childhood have likely pondered this question at some point. Of course, the answers usually fall into two extreme scenarios: either AI will serve humanity, enabling us to live in lavish comfort, or AI will awaken, enslave humanity, and devastate the Earth.

However, when AI genuinely integrates into our lives and work, the transformations may not be so dramatic. Instead, subtle patterns emerge, and simple yet irreversible changes suddenly occur. Over the past year, it's become evident that people are increasingly using and relying on AI, and news about AI replacing human labor is prevalent across various countries and industries.

What will truly happen when AI arrives? Recently, we've talked about AI with many ordinary people like you and me. Rather than focusing on how to use this technology, we've concentrated on its impact on us. We've compiled some memorable snippets to share here, solely for your reference.

There are no definitive answers and no fixed stances, just records of observations and feelings.

Several years ago, I interviewed an AI development team at an event. They had built an app on top of large-model capabilities to guide users through romantic relationships, in particular to help them identify whether they were acting as a 'simp' (someone who is overly submissive in a relationship).

At the time, although I posed questions related to AI development, I couldn't help but think, "If relationships are this challenging, why not avoid them altogether?"

Subsequent events proved me short-sighted. Far from being redundant, such features gradually became mainstream. Recently, there have been numerous media reports about people using AI in their romantic relationships. Here, I'll mention only one friend who discussed this in depth with us. Xiao Z works overseas and was in a long-distance relationship with their ex-partner for years. The relationship often left them drained. So, when they were unsure how to respond to their partner, they simply uploaded 120,000 chat records between the two of them to ChatGPT, turning it into a personalized 'love model'.

From then on, whenever Xiao Z struggled in the relationship, they would consult ChatGPT, which could accurately advise them on what to do. For instance, it might determine that the partner was throwing a tantrum and suggest not worrying too much, since the partner would come back to make up in a couple of days. Most of the time, the outcomes matched the AI's predictions perfectly. Moreover, when the two argued, ChatGPT would also console and comfort Xiao Z, offering guidance that was often easier to accept than advice from friends with their own biases.

Xiao Z's case is not an isolated one. Using AI to respond in intimate relationships has become a new norm. Naturally, it has led to scenarios where both parties in a relationship use AI to chat with each other, each unaware that the other side is also just an AI playing an 'empty fort strategy' (a tactic of feigning strength while actually being weak).

Once humans become accustomed to something, the next step is often dependency.

So, what happens next when we become accustomed to using AI to handle our emotions, feelings, and even relationships?

Over the past year, we've received numerous private messages from readers summarizing their experiences in one sentence: they've truly been replaced by AI in positions such as design, art, customer service, copywriting, and self-media editing.

Among them, Xiao W wrote us a lengthy private message. They graduated from an art college and, after a challenging search, found an internship at a company in their hometown's provincial capital, as many companies were reluctant to hire college students without internship experience. Once they secured the position, Xiao W cherished it, working diligently and feeling they had a good rapport with colleagues.

However, a few months later, the company informed them that they couldn't be converted to a regular employee because the design department was now required to use AI tools extensively, which led to significant staff reductions. All the colleagues who had mentored them were laid off, and none of the interns were spared. It was said that the company would no longer hire design interns in the future.

Xiao W felt powerless and angry. Their family advised them to learn AI, but after exploring many training institutions and online courses, they found these expensive programs vague and unhelpful in solving real problems. They also considered moving to a first-tier city to find design or art-related jobs in the AI field but heard that these companies had extremely stringent hiring requirements.

They didn't know what to do. Actually, we didn't know how to respond to them either.

Lao Q is another one of our readers, or perhaps a cyber acquaintance who stumbled upon our content and wanted to chat.

After adding our contact information, Lao Q introduced themselves as a programmer in Silicon Valley. They had gone to the U.S. to study computer science over a decade ago and stayed to work there. They reached out to discuss something: in a previous piece, we had talked about programmers needing to be wary of AI's impact, but Lao Q believed the reality was just the opposite.

Here's what happened. Lao Q's team had previously tried outsourcing code to teams in India and China to boost efficiency, but the results weren't as good as media reports suggested. The quality of the outsourced code was poor, and the work of correcting it could even exceed the work of writing it themselves.

One day, Lao Q tried using Claude Code for work and found the experience incredibly smooth. As the first person on their team to benefit from AI coding, they experimented with many tools and proudly told us that they had devised a workflow integrating three tools seamlessly, leaving no trace of AI involvement. As a result, they barely had to do any work anymore. Their daily routine consisted of playing games at work, then going on dates and exercising after work. Life couldn't be more comfortable. Lao Q firmly believed in AI's future, considering it the best invention ever.

Later, news emerged about massive layoffs in Silicon Valley due to AI. We immediately thought of Lao Q and tried to contact them for their perspective, but we didn't receive a response.

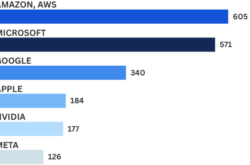

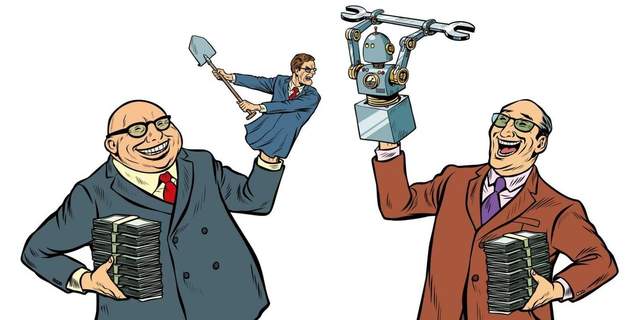

Over the past year, I've interviewed many business owners and executives. They all share one common trait: they're confident they can run a company with just one person or even no people at all. They believe that by leveraging AI, they can generate profits effortlessly. This trust often manifests as wave after wave of layoffs in their companies.

Mr. T, the founder of a software company, used the word 'cognition' most frequently during our two-hour chat, followed by 'empowerment' and 'Agent'. During the interview, he said that over the past year he had cut three of the company's eight departments, disbanding each one entirely. He didn't believe there was anything wrong with how these departments operated, or that AI could truly replace them yet. Instead, he felt that in the AI era these departments were no longer necessary, and the company had to transform towards comprehensive AI integration.

I really wanted to ask him what happened to the laid-off employees, but out of politeness, I held back. Mr. T said the next step was to have AI manage the entire company's operations and judge employee performance. Eventually, he planned to resign from the board and let AI run the company. He hoped his company would be the first to embrace the AI era.

On the other end of the spectrum, another reader told us that their boss also firmly believed in AI. This company wasn't in the tech sector, and the boss mainly learned about AI through short videos. However, during every meeting, the boss would get angry and say, "I told you to use AI more, but you didn't listen!" or "In the future, we might as well be a company with no employees at all!"

Yet the employees didn't know how to prove their value as humans or demonstrate that AI wasn't as miraculous as those short videos portrayed.

Both sides were at a standstill, filled with mistrust towards each other.

I have a small observation. When large models first became popular, or even a few years earlier when AI was just playing Go, social media was flooded with claims that AI would soon replace humans and take our jobs. Back then, netizens were angry, and many friends were fearful. Although AI seemed distant, everyone was anxious about its arrival.

But today, AI is truly here. Some people have indeed lost their jobs or taken pay cuts because of it, while others have found life easier or even received promotions and raises. Some use AI for romantic relationships, medical consultations, job interviews, and exams.

Yet our attitude towards AI has become numb and indifferent. It's here, it's replacing us, and we're using and relying on it. So what? What can we do?

We comfort ourselves by saying that when the internet first arrived, it also had a profound impact, and when mobile payments were introduced, many people struggled to accept a cashless society. But overall, things improved, and life got better. We believe the story with AI will follow a similar trajectory in the end.

However, the difficulties are real and present. The sense of confusion about what to do next is palpable. We're all like frogs in warm water. One voice tells us that warm water is good for our health and nothing's wrong, while another warns us that the water is heating up and trouble lies ahead.

But so what? What can we do?

The water's a bit warm, so let's just soak in it.

It's getting hotter, but this is just the beginning.

Perhaps one day, we'll all be cooked.

When will that day come? No one knows.

Spring will come again; I'll just keep croaking.