Full Interpretation of Tesla's World Model Patent: From 'Observation' to 'Envisioning', the Evolutionary Leap of Physical AI

01/26 2026

In China's intelligent driving landscape, the term 'world model' has long been a topic of discussion. Recently, it has sparked renewed interest within the autonomous driving community. However, this resurgence isn't due to promotional efforts by automakers or autonomous driving firms. Instead, it stems from a patent (US20260017875A1) publicly disclosed in January 2026, titled 'Simulation of viewpoint capture from environment rendered with ground truth heuristics.'

In effect, the patent lays out what Tesla's world model is and how it is built, which is why it has drawn such wide discussion across the industry.

Below is a popular science interpretation based on the patent document's content:

1. What is Tesla's World Model?

What is a world model for? Today the term is promoted in two senses: as a tool for simulation and environment reconstruction, and as a model that directly understands the environment and outputs actions for Physical AI.

However, both the Tesla patent and this article focus on the former; the latter methodology, in my view, is not currently feasible.

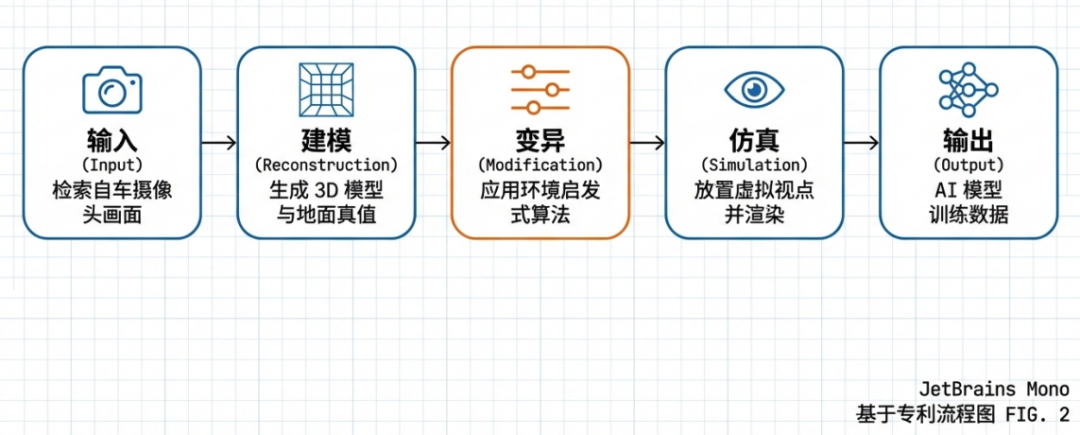

In essence, the solution described in Tesla's patent is a 'digital twin + parallel universe' generation system. It first uses images captured by vehicle cameras in the real world to reconstruct the 'skeleton' of the road (a ground-truth 3D model). Then, rather than merely replicating reality, it applies algorithms (heuristic rules) to 'reskin' this skeleton and 'add special effects', generating countless virtual driving scenarios that are rare or extremely hazardous in reality. Finally, it captures these scenarios with virtual cameras and feeds the footage to AI to train the vehicle-side algorithms.

For AI training, this approach offers several advantages:

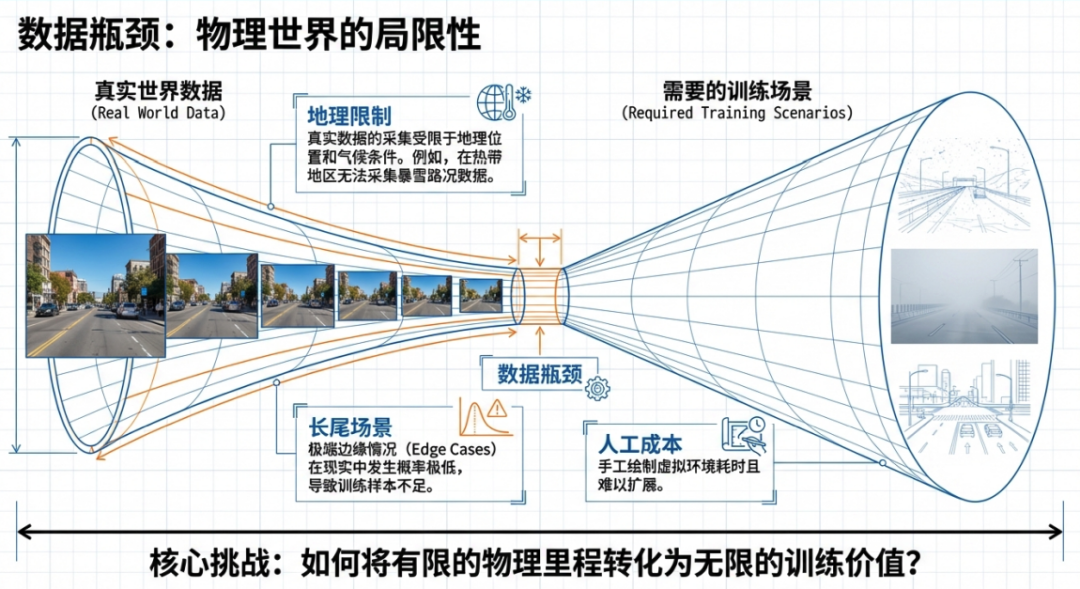

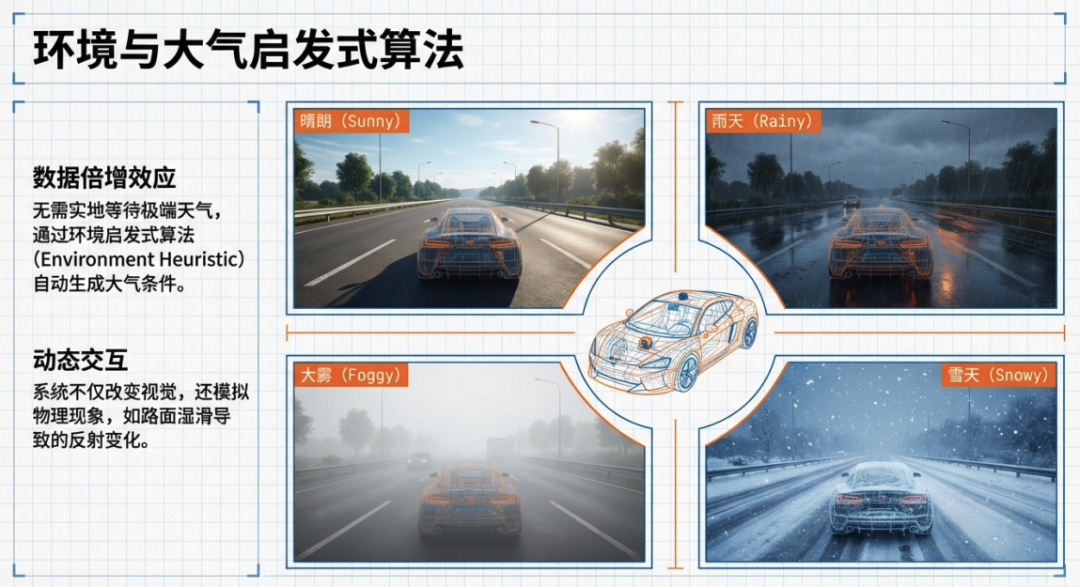

Freedom from real-world constraints (a 'God's-eye view'): The environment can be modified at will in the virtual world, for example by generating blizzard conditions on a tropical map or algorithmically adding potholes and standing water to a flat road.

Creating 'edge scenarios' (Corner Cases): Collecting extreme road condition data (such as highly complex intersections or contradictory traffic signs) in reality is time-consuming and perilous. This system can artificially create these 'logical conflicts' (e.g., a road that is both a one-way and a two-way street), training AI to handle chaotic situations.

Multiplied training data volume: Rather than having real vehicles drive hundreds of thousands of kilometers, the system can quickly generate thousands of variants on computers, greatly enriching the training dataset.
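The arithmetic behind "thousands of variants" is combinatorial. The minimal sketch below shows how a few independent variation axes multiply for a single captured road tile. The axis names and value lists are hypothetical illustrations and do not come from the patent. A real system would likely sample from this space instead of enumerating all of it.

```python
from itertools import product

# Hypothetical variation axes (not from the patent); each is a heuristic "knob".
WEATHER = ["clear", "rain", "fog", "snow", "blizzard"]
SURFACE = ["dry", "wet", "icy", "standing_water"]
TOPOLOGY = ["flat", "speed_bump", "pothole", "pothole_and_bump"]
SIGNAGE = ["consistent", "conflicting_one_way"]  # a deliberate 'logical conflict'
TIME_OF_DAY = ["dawn", "noon", "dusk", "night"]
BACKDROP = ["urban", "suburban", "rural"]

def enumerate_variants(base_tile_id):
    """Yield one scenario description per combination of the variation axes."""
    for weather, surface, topo, sign, tod, backdrop in product(
        WEATHER, SURFACE, TOPOLOGY, SIGNAGE, TIME_OF_DAY, BACKDROP
    ):
        yield {
            "tile": base_tile_id, "weather": weather, "surface": surface,
            "topology": topo, "signage": sign, "time_of_day": tod, "backdrop": backdrop,
        }

variants = list(enumerate_variants("tile_0042"))
print(len(variants))  # 5 * 4 * 4 * 2 * 4 * 3 = 1920
```

With just six small axes, one stretch of real road already yields 1,920 distinct training scenarios. Each added axis multiplies that count again.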

However, implementing such a world model also presents several challenges:

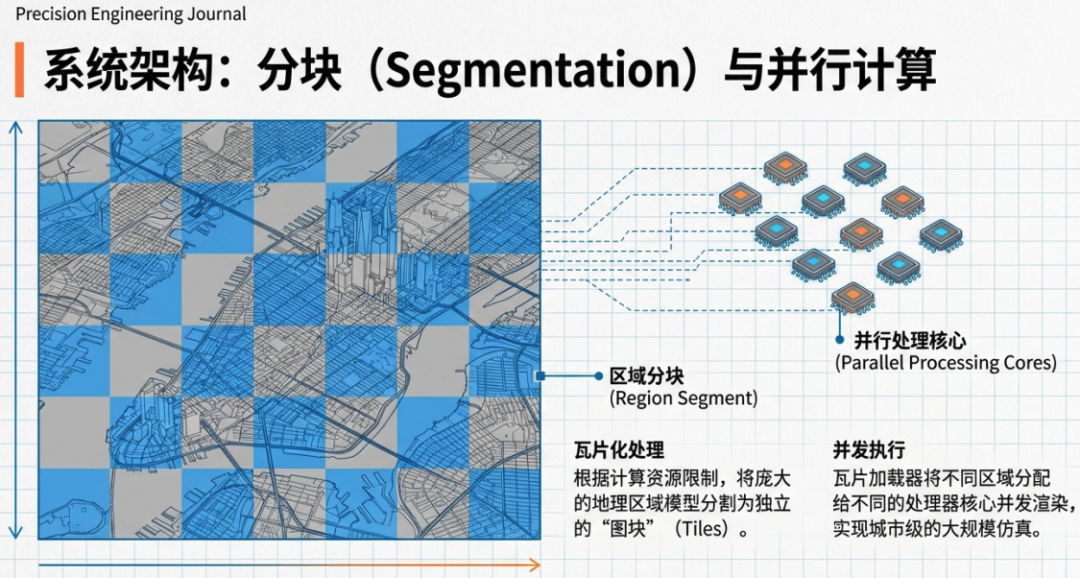

Immense computational power consumption: To generate high-fidelity 3D environments and realistic lighting effects (such as road reflections and dynamic weather), the system requires extremely high computational resources. The patent specifically mentions the need to divide maps into small sections (Tiling) and assign them to different processors for parallel computing to address this issue.

Dependence on the accuracy of foundational data: Although variations are possible, the foundational road skeleton (First Surface) must still be generated based on feedback from real cameras. If the geometric structure of the original perception is incorrect, subsequent virtual generations may also be biased.

2. What is the core technology of Tesla's World Model?

The key innovations of this technique lie at three levels, which together achieve the leap from 'observation' to 'envisioning':

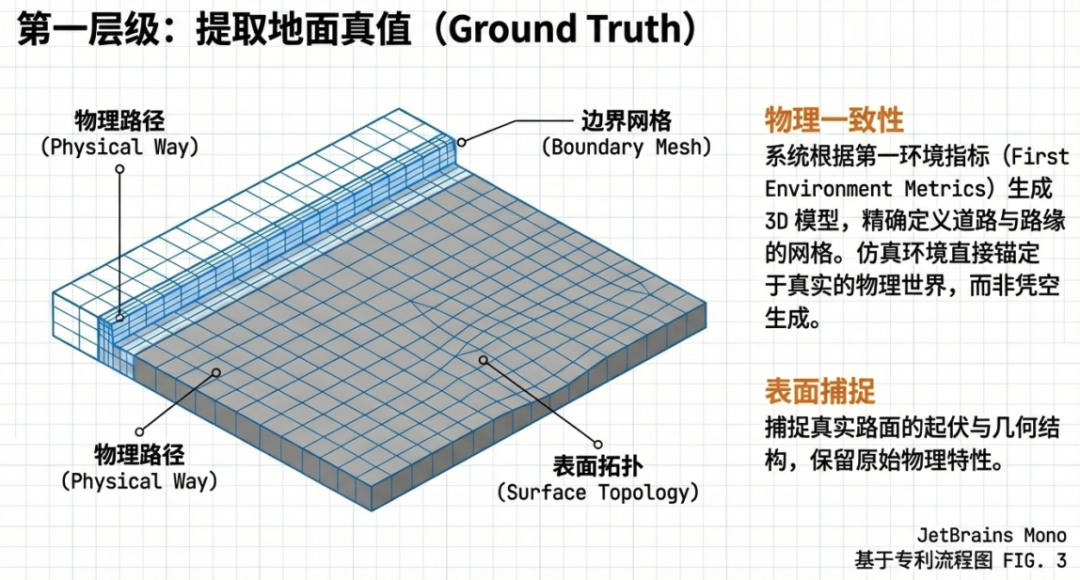

The first is Mixed Reality Modeling (Ground Truth Modeling): The system does not create images from scratch but is based on 'ground truth.' It extracts data from the video stream of the collection vehicle (Ego object) to generate a 3D mesh (First Surface) containing road boundaries, curbs, and surface topology. Next, it precisely fits 2D geometric objects, such as lane lines and road markings, onto the 3D road surface.

The collection vehicle is crucial. For data collection, it integrates video capture, ground-truth environment sensing (typically a LiDAR system, which explains why Tesla, despite publicly rejecting LiDAR, has purchased thousands of LiDAR units), and the vehicle's own actuation mechanisms.

The patent describes the core concept of Tesla's world model, 3D Mesh Generation:

It divides the underlying structure of the world model into:

First Surface: This refers to the 'first environmental indicator' of the physical environment, i.e., the road surface. These indicators include the boundaries, curbs, and surface topology of the physical road, forming a basic 'road and curb mesh.'

2D Geometric Objects Mapping: On top of the First Surface, the system generates 2D objects based on 'second environmental indicators.' These objects include lane lines, directional arrows, and other road markings. Technically, these are textures or planar objects fitted onto the 3D mesh surface.
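The two-layer idea can be illustrated with a minimal sketch. It assumes the First Surface is stored as a regular height grid, which simplifies the patent's "road and curb mesh". It also assumes a lane line arrives as a 2D polyline in the road plane. "Fitting" the marking onto the surface then means sampling the surface height under each vertex. `HeightField` and `drape_polyline` are hypothetical names.

```python
import math
from dataclasses import dataclass

@dataclass
class HeightField:
    """First Surface approximated as a regular grid of road heights in metres (assumption)."""
    origin_x: float
    origin_y: float
    cell: float                   # grid spacing in metres
    heights: list[list[float]]    # heights[row][col]; rows run along y, columns along x

    def height_at(self, x: float, y: float) -> float:
        """Bilinearly interpolate the surface height at planar position (x, y)."""
        rows, cols = len(self.heights), len(self.heights[0])
        # Convert to grid coordinates and clamp so points at or beyond the edge stay valid.
        gx = min(max((x - self.origin_x) / self.cell, 0.0), cols - 1)
        gy = min(max((y - self.origin_y) / self.cell, 0.0), rows - 1)
        c0, r0 = min(int(gx), cols - 2), min(int(gy), rows - 2)
        tx, ty = gx - c0, gy - r0
        h = self.heights
        near = h[r0][c0] * (1 - tx) + h[r0][c0 + 1] * tx
        far = h[r0 + 1][c0] * (1 - tx) + h[r0 + 1][c0 + 1] * tx
        return near * (1 - ty) + far * ty

    def triangles(self):
        """Split every grid cell into two triangles of (x, y, z) vertices."""
        def vertex(r, c):
            return (self.origin_x + c * self.cell, self.origin_y + r * self.cell, self.heights[r][c])
        tris = []
        for r in range(len(self.heights) - 1):
            for c in range(len(self.heights[0]) - 1):
                tris.append((vertex(r, c), vertex(r, c + 1), vertex(r + 1, c)))
                tris.append((vertex(r + 1, c), vertex(r, c + 1), vertex(r + 1, c + 1)))
        return tris

def drape_polyline(surface: HeightField, points_2d, lift=0.01):
    """Fit a 2D road marking onto the 3D surface by sampling the height under each vertex.
    `lift` raises the marking 1 cm so it does not z-fight with the road when rendered."""
    return [(x, y, surface.height_at(x, y) + lift) for x, y in points_2d]

# A 3 x 3 grid whose road surface rises 10 cm per metre along x.
road = HeightField(0.0, 0.0, 1.0, [[0.1 * c for c in range(3)] for _ in range(3)])
lane_line = drape_polyline(road, [(0.0, 0.5), (1.5, 0.5), (2.0, 0.5)])
```

Keeping the marking as a separate draped layer means it follows any later change to the surface geometry.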

The First Surface and the 2D objects mapped onto it belong to a multi-layered world model data structure that comprises:

Road Boundary Models: Define the outer edges and surface topology of roads.

Median Edge Models: Define non-traversable areas within roads (such as traffic islands).

Lane Graph Models: Define the logical paths for vehicle or pedestrian movement on roads.

Geospatial Models: Include map models (locations of traffic lights, stop signs) and environmental models (buildings, non-traversable areas).
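One way to picture the layered model is as a set of plain data containers, one per model type listed above. The minimal sketch below also includes a traversability check that combines the road boundary with the median islands. The field names and the even-odd ray-casting test are illustrative assumptions. The patent does not specify a schema.

```python
from dataclasses import dataclass, field

Point = tuple[float, float]
Polygon = list[Point]

@dataclass
class RoadBoundaryModel:
    outline: Polygon                                        # outer edge of the drivable surface

@dataclass
class MedianEdgeModel:
    islands: list[Polygon] = field(default_factory=list)    # non-traversable areas inside the road

@dataclass
class LaneGraphModel:
    nodes: dict[str, Point] = field(default_factory=dict)
    edges: list[tuple[str, str]] = field(default_factory=list)   # directed: (from, to)

    def successors(self, node: str) -> list[str]:
        """Lanes a vehicle may continue into from `node`."""
        return [dst for src, dst in self.edges if src == node]

@dataclass
class GeospatialModel:
    traffic_lights: list[Point] = field(default_factory=list)   # map model
    stop_signs: list[Point] = field(default_factory=list)       # map model
    buildings: list[Polygon] = field(default_factory=list)      # environment model

@dataclass
class WorldTile:
    boundary: RoadBoundaryModel
    medians: MedianEdgeModel
    lanes: LaneGraphModel
    geo: GeospatialModel

def point_in_polygon(p: Point, poly: Polygon) -> bool:
    """Even-odd ray casting: count edge crossings of a ray cast in the +x direction."""
    x, y = p
    inside = False
    for i in range(len(poly)):
        (x1, y1), (x2, y2) = poly[i], poly[(i + 1) % len(poly)]
        if (y1 > y) != (y2 > y):
            if x < x1 + (y - y1) * (x2 - x1) / (y2 - y1):
                inside = not inside
    return inside

def is_traversable(tile: WorldTile, p: Point) -> bool:
    """True if p lies inside the road boundary and outside every median island."""
    return point_in_polygon(p, tile.boundary.outline) and not any(
        point_in_polygon(p, island) for island in tile.medians.islands)

tile = WorldTile(
    boundary=RoadBoundaryModel([(0, 0), (20, 0), (20, 10), (0, 10)]),
    medians=MedianEdgeModel([[(8, 4), (12, 4), (12, 6), (8, 6)]]),
    lanes=LaneGraphModel({"a": (0, 2), "b": (20, 2), "c": (20, 8)}, [("a", "b"), ("a", "c")]),
    geo=GeospatialModel(stop_signs=[(19, 1)]),
)
```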

Together, these layers form something like the LEGO baseplate of the world model. With this foundation in place, the next step is the method Tesla proposes: Heuristic Environment Variation.

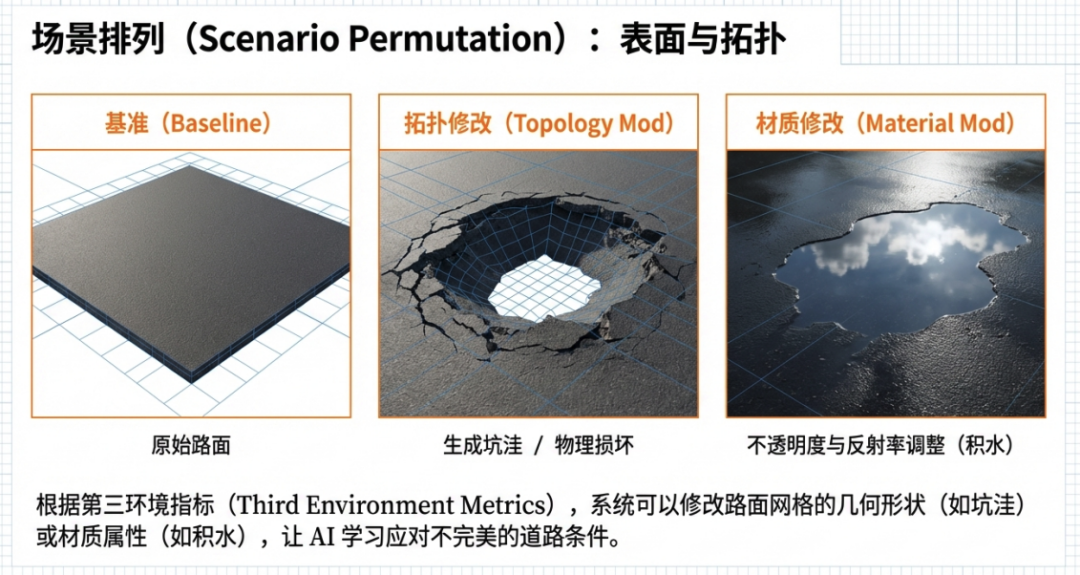

The system uses a set of 'heuristic rules' to modify the foundational model:

Physical Variation: Modifies the road's topology (creates speed bumps, potholes).

Visual Variation: Changes the opacity or reflectivity of objects (e.g., simulates icy or waterlogged road surfaces by increasing reflectivity).

Environmental Variation: Injects weather algorithms to generate fog, rain, falling leaves, and even replaces the architectural style of roadside buildings (e.g., changes an urban backdrop to a rural one).
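The three families of heuristic rules can be sketched as small functions applied to a deep copy of the base scene, so the ground-truth skeleton is never overwritten. Everything in this minimal sketch is an illustrative assumption rather than the patent's rule set. That includes the `Scene` fields, the Gaussian pothole profile, the reflectivity value, and the fog model. The fog model uses the Koschmieder relation with a 5% contrast threshold.

```python
import copy
import math
import random
from dataclasses import dataclass, field

@dataclass
class Scene:
    heights: list[list[float]]          # First Surface height grid (m), one cell per metre
    reflectivity: float = 0.1           # 0 = matte asphalt, 1 = mirror-like
    fog_density: float = 0.0            # extinction coefficient per metre
    backdrop: str = "urban"
    tags: list[str] = field(default_factory=list)

def add_pothole(scene, row, col, depth=0.08, radius=1.5):
    """Physical variation: carve a smooth Gaussian-shaped depression into the road."""
    for r, line in enumerate(scene.heights):
        for c in range(len(line)):
            d2 = (r - row) ** 2 + (c - col) ** 2
            line[c] -= depth * math.exp(-d2 / (2 * radius ** 2))
    scene.tags.append("pothole")

def make_icy(scene, reflectivity=0.7):
    """Visual variation: raise surface reflectivity to mimic ice or standing water."""
    scene.reflectivity = max(scene.reflectivity, reflectivity)
    scene.tags.append("icy")

def add_fog(scene, visibility_m):
    """Environmental variation: Koschmieder relation, 5% contrast left at the visibility range."""
    scene.fog_density = -math.log(0.05) / visibility_m
    scene.tags.append("fog")

def fog_transmittance(scene, distance_m):
    """Fraction of an object's contrast that survives the fog at a given distance."""
    return math.exp(-scene.fog_density * distance_m)

def set_backdrop(scene, style):
    """Environmental variation: swap the roadside architecture style."""
    scene.backdrop = style
    scene.tags.append(f"backdrop:{style}")

def generate_variant(base, rng):
    """Apply a random subset of heuristic rules to a deep copy of the base scene."""
    scene = copy.deepcopy(base)         # the ground-truth skeleton is never mutated
    if rng.random() < 0.5:
        add_pothole(scene, rng.randrange(len(scene.heights)), rng.randrange(len(scene.heights[0])))
    if rng.random() < 0.3:
        make_icy(scene)
    if rng.random() < 0.4:
        add_fog(scene, visibility_m=rng.uniform(30.0, 200.0))
    if rng.random() < 0.2:
        set_backdrop(scene, rng.choice(["rural", "suburban"]))
    return scene

base = Scene(heights=[[0.0] * 10 for _ in range(10)])
rng = random.Random(7)
variants = [generate_variant(base, rng) for _ in range(100)]
```

Seeding the random generator keeps every variant reproducible. This helps when a model fails on a generated scenario and the scenario has to be regenerated for debugging.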

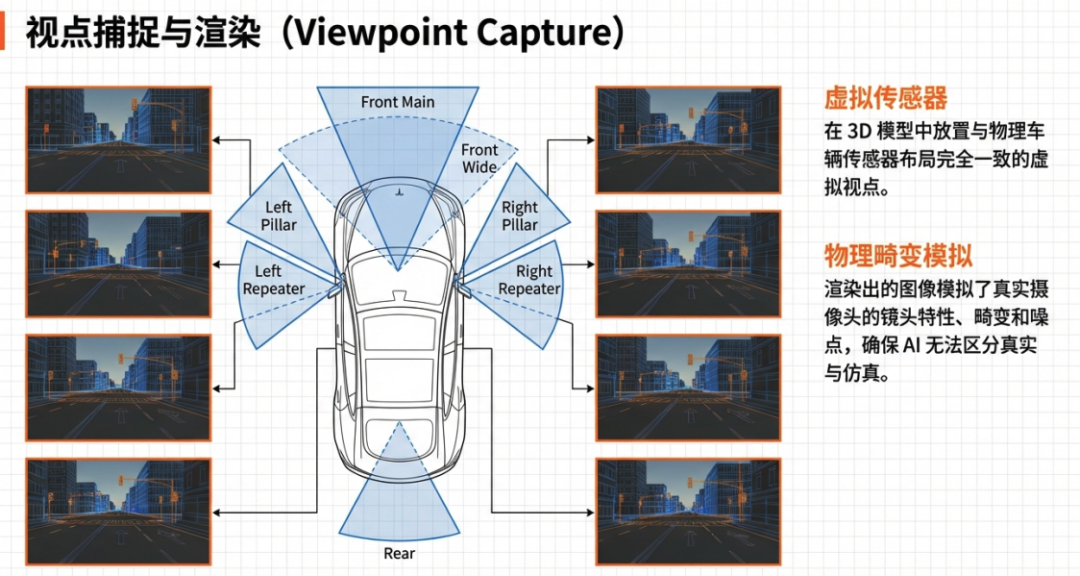

These heuristic variations produce the endlessly changing scenarios typically associated with world models. However, today's autonomous driving perception relies mainly on input from 8 to 11 cameras, so the world model's output must be converted into the input format of those cameras. For this, Tesla proposes virtual viewpoint rendering. The system places virtual cameras in the 3D world with the same positions, angles, and fields of view as the hardware on real vehicles (front, side, rear, and so on), generating dozens of parallel simulated video streams.

This way, data similar to that collected in the real world is formed and imported into the training algorithm.
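At its core, virtual viewpoint rendering places a pinhole camera with the same pose and intrinsics as each real camera and projects the scene through it. The minimal sketch below projects single 3D points into a hypothetical three-camera rig. The mounting positions, yaw angles and focal lengths are made-up placeholders, not Tesla's camera specification. A real renderer would rasterize whole meshes and model lens distortion rather than project isolated points.

```python
import math
from dataclasses import dataclass

@dataclass
class VirtualCamera:
    """Pinhole camera mounted on the ego vehicle (hypothetical parameters)."""
    name: str
    x: float                # mount position in the vehicle frame (m): x forward,
    y: float                #   y left,
    z: float                #   z up
    yaw_deg: float          # heading relative to vehicle forward, positive = left
    focal_px: float         # focal length in pixels (square pixels assumed)
    width: int = 1280
    height: int = 960

    def project(self, px, py, pz):
        """Return pixel (u, v) of a vehicle-frame point, or None if the camera cannot see it."""
        dx, dy, dz = px - self.x, py - self.y, pz - self.z
        yaw = math.radians(self.yaw_deg)
        fwd = math.cos(yaw) * dx + math.sin(yaw) * dy     # depth along the optical axis
        left = -math.sin(yaw) * dx + math.cos(yaw) * dy   # lateral offset, positive = left
        if fwd <= 0.0:
            return None                                   # point is behind the camera
        u = self.focal_px * (-left / fwd) + self.width / 2    # image x grows to the right
        v = self.focal_px * (-dz / fwd) + self.height / 2     # image y grows downward
        if 0 <= u < self.width and 0 <= v < self.height:
            return (u, v)
        return None                                       # outside the field of view

# Hypothetical three-camera rig; a production rig has more cameras and lens distortion.
RIG = [
    VirtualCamera("front_main", 1.8, 0.0, 1.4, 0.0, 1000.0),
    VirtualCamera("left_repeater", 1.0, 0.9, 1.0, 90.0, 500.0),
    VirtualCamera("rear", -1.0, 0.0, 1.0, 180.0, 500.0),
]

def render_point(point):
    """One 'frame' per virtual camera: where, if anywhere, each camera sees the point."""
    return {cam.name: cam.project(*point) for cam in RIG}

print(render_point((21.8, 0.0, 1.4)))
```

Each scene variant is rendered once per camera in the rig. Multiplying cameras by variants is what yields many parallel simulated video streams.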

This process may sound straightforward, but running a 3D game already requires high-end gaming hardware, and constructing a world model is far more computationally intensive. Since computing power translates directly into cost and time, how can training be done efficiently and cost-effectively?

To process vast amounts of geographic data and generate complex scenarios in real time, Tesla's patent proposes an efficient computing architecture that partitions the world model into blocks for parallel processing:

Tiling and Segmentation: The system uses 'Block Heuristic' algorithms to cut large geographical area models into small 'region segments' or tiles based on computational resource limitations.

Dynamic Resource Allocation: The system includes a 'Tile Creator' and 'Tile Loader' capable of identifying and dynamically assigning different map tiles to different processor cores for parallel execution.

This resolves the computational bottleneck when rendering large-scale, high-precision environments.
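The tile-and-dispatch idea can be shown in a minimal sketch under three assumptions. The map extent is rectangular. The per-tile resource budget is expressed as a maximum tile side length. Python's standard `concurrent.futures` thread pool stands in for the patent's Tile Loader. `make_tiles`, `render_tile` and the area-based workload are hypothetical. A real system would more likely cut tiles by content density, such as mesh triangles per tile, and dispatch them to separate processes or GPUs.

```python
import math
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass

@dataclass(frozen=True)
class Tile:
    ix: int          # column index of the tile
    iy: int          # row index of the tile
    x0: float
    y0: float
    x1: float
    y1: float

def make_tiles(x_min, y_min, x_max, y_max, max_side_m):
    """Tile Creator (sketch): split a map extent into equal tiles no wider than max_side_m."""
    nx = math.ceil((x_max - x_min) / max_side_m)
    ny = math.ceil((y_max - y_min) / max_side_m)
    w, h = (x_max - x_min) / nx, (y_max - y_min) / ny
    return [Tile(i, j, x_min + i * w, y_min + j * h, x_min + (i + 1) * w, y_min + (j + 1) * h)
            for j in range(ny) for i in range(nx)]

def render_tile(tile):
    """Placeholder for meshing, variation and rendering of one tile; returns its area."""
    return tile.ix, tile.iy, (tile.x1 - tile.x0) * (tile.y1 - tile.y0)

def load_and_render(tiles, workers=4):
    """Tile Loader (sketch): idle workers pick up the next pending tile from the pool's queue."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(render_tile, tiles))

tiles = make_tiles(0.0, 0.0, 1000.0, 600.0, max_side_m=256.0)
results = load_and_render(tiles)
print(len(tiles), sum(area for _, _, area in results))  # 12 600000.0
```

Summing the per-tile results recovers the full extent. This makes a quick check that the tiling neither drops nor double-counts any part of the map.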

3. The Development and Prospects of Physical AI's World Model

This patent elucidates the theory and methodology of world models for autonomous driving training and points out their applicability to Physical AI entities such as robots. This world model methodology enables autonomous driving and other Physical AI to shift from 'passive learning' to 'active evolution,' rapidly understanding the interaction rules of the physical world and constructing a closed-loop Physical AI evolution system:

Real vehicle data collection

Generation of virtual scenarios

Training of AI models

Deployment of models back to real vehicles

Calibration based on real vehicle performance feedback
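The five-step loop can be written as a toy skeleton to show its data flow. Field feedback decides which scenario types the next round of virtual generation emphasizes. The function names, the per-scenario "skill" score and the linear training update are all hypothetical simplifications, not anything described in the patent.

```python
def generate_virtual(weakness, budget):
    """Step 2: split a synthetic-sample budget across scenarios in proportion to weakness."""
    total = sum(weakness.values())
    if total == 0:
        return {s: 0.0 for s in weakness}
    return {s: budget * w / total for s, w in weakness.items()}

def train(skill, synthetic, lr):
    """Step 3 (toy): every synthetic sample nudges that scenario's skill towards 1.0."""
    return {s: min(1.0, v + lr * synthetic.get(s, 0.0)) for s, v in skill.items()}

def field_feedback(skill, observed):
    """Steps 4-5: after deployment, measure weakness only on scenarios the fleet actually met."""
    return {s: 1.0 - skill[s] for s in set(observed)}

def closed_loop(skill, observed, rounds=3, budget=100, lr=0.002):
    """Step 1 supplies `observed`: scenario types logged by real vehicles."""
    for _ in range(rounds):
        weakness = field_feedback(skill, observed)      # calibration from real driving
        synthetic = generate_virtual(weakness, budget)  # targeted virtual scenarios
        skill = train(skill, synthetic, lr)             # retrain, then redeploy next round
    return skill

skill = {"highway": 0.9, "unprotected_left": 0.5, "flooded_road": 0.3, "snowy_pass": 0.2}
observed = ["highway", "unprotected_left", "flooded_road", "highway"]
result = closed_loop(skill, observed)
```

In this toy, the weakest observed scenario gains the most. The never-observed `snowy_pass` does not improve at all, which mirrors the point that the virtual world is built on the 'skeleton' real vehicles contribute.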

This means that every AI entity driving on the road or existing in the physical world is contributing to the 'skeleton' of this virtual world, which in turn makes the real vehicles smarter.

In fact, the theory behind this world model resembles how humans learn. Human learning combines practical learning (comparable to training on real data) with instructed learning (comparable to training on synthetic world-model data), and together these build intuitions as basic as 1 + 1 = 2.

Autonomous vehicles and robots are silicon-based intelligences with vast storage capacity that they can sustain with energy, so their algorithms can remember far more scenario variants than humans can. Carbon-based humans, however, can generalize from a single example; in other words, they can reason. The next step for world models should therefore be enabling Physical AI to master reasoning, a direction various Physical AI companies are currently exploring and putting into practice.

References and Images

Simulation of viewpoint capture from environment rendered with ground truth heuristics US20260017875A1

Reference images were created by Gemini based on the patent.

*Unauthorized reproduction and excerpting are strictly prohibited.