Waymo's "AI Hallucination" Crisis: Mowing Down Cats and Barging into Police Standoffs. Have Humans Lost Control of the Wheel as Self-Driving Cars "Act Up"?

12/10 2025

Introduction

When AI begins to "daydream," the vehicles veer off course.

In November 2025, Waymo's self-driving cars exhibited a series of "erratic behaviors": they ran over a neighbor's pet cat in San Francisco, intruded upon a police standoff with armed suspects in Los Angeles, and circled a tunnel 37 times in what resembled a "Z-shaped ballet."

The official explanation was low-key: AI "hallucinations" caused behavioral deviations, yet the accident rate remains lower than that of human drivers. The critical issue, however, is that when a car carrying passengers heads straight toward a vehicle holding heavily armed suspects, a 15-second "pause" is long enough for bullets to pierce the car's body. For the first time, the "safety myth" that Silicon Valley prides itself on has been shattered, not by competitors, but by its own algorithm's "unrestrained imagination."

Today, Self-Driving Car Insights (WeChat official account: Self-Driving Car Insights) will delve into this "AI gone rogue" scenario: What exactly is hallucination? Why can't it be remedied? Can humans still reclaim ultimate control?

(Related reading: "Google Waymo: Under Investigation for 19 Instances of Self-Driving Taxis Passing School Buses, Submits Voluntary Software Recall")

I. AI "Hallucinations" Take Control: From Text Errors to Steering Wheel Glitches

The term "hallucination" originates from large language models, where AI confidently asserts non-existent facts.

In self-driving cars, this translates to misidentifying a "plastic bag" as a "cardboard box" or "flashing police lights" as "Christmas decorations," leading to erroneous driving decisions.

On October 27, Waymo's official blog acknowledged that, to cope with San Francisco's congestion, the company had shifted its driving style from "courteous" to "assertive." As a result, the new model misread a "temporary construction sign" at a tunnel entrance as a "variable lane indicator" and looped in a "Z" pattern for 37 consecutive laps, as if racing on a track.

Even more absurd was the Los Angeles police standoff: amid a row of police cars and armed officers, the Waymo mistook the "blockade" for a "temporary parking zone," slowed down, and casually drove past the suspect's vehicle. Netizens mocked its 15-second halt as the car "bringing its own spectator seat."

The terror of AI hallucinations lies not in "making mistakes," but in "making mistakes with confidence" and instantly plunging passengers into a high-risk situation.
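Why a perception model can be wrong and confident at the same time can be sketched with a closed-set classifier. This is a minimal illustration, assuming a hypothetical perception head that can only choose among a fixed list of labels; the labels and scores are invented. Softmax always spreads 100% of the probability over the known classes, so an object the model has never seen is still reported as one of them, often with high confidence.

```python
import math

# Hypothetical closed label set: the classifier has no "unknown" option.
LABELS = ["plastic_bag", "cardboard_box", "traffic_cone"]


def softmax(logits):
    """Turn raw scores into probabilities that always sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits):
    """Return the top label and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=lambda i: probs[i])
    return LABELS[best], probs[best]


# Invented scores for an object absent from training (say, a cat).
# All probability mass must land on a known label, so the answer looks confident.
label, confidence = classify([0.5, 3.0, 0.2])
print(label, round(confidence, 2))  # cardboard_box 0.87
```

The reported 0.87 is a statement about the three known labels only; it carries no information about whether the object belongs to any of them.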

II. Animal Casualties and Big Data: The "Long-Tail" Black Hole Behind 14 Collisions

According to data from the U.S. National Highway Traffic Safety Administration (NHTSA), since 2021, Waymo has been involved in 14 animal collision incidents, including cats, dogs, and raccoons.

The number looks small, and normalized by its operating mileage of approximately 110 million miles (as of Q3 2025), it works out to just 1.3 incidents per 10 million miles;

whereas human drivers nationwide kill about 1 million animals annually, translating to roughly 70 incidents per 10 million miles.
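The per-mileage comparison is simple normalization. A small sketch using only the article's figures (14 incidents over roughly 110 million miles); the human-driver rate of 70 is copied as given, because the article does not state which total mileage it was derived from.

```python
def rate_per_10m_miles(incidents: float, miles: float) -> float:
    """Normalize an incident count to incidents per 10 million miles."""
    return incidents / miles * 10_000_000


waymo_rate = rate_per_10m_miles(14, 110_000_000)  # figures from the article
human_rate = 70.0  # article's figure, taken as given (mileage basis not stated)

print(f"Waymo: {waymo_rate:.2f} per 10M miles")          # Waymo: 1.27 per 10M miles
print(f"Human drivers: {human_rate:.0f} per 10M miles")  # Human drivers: 70 per 10M miles
print(f"Ratio: about {human_rate / waymo_rate:.0f}x")    # Ratio: about 55x
```

The 1.27 rounds to the article's 1.3. An average per mile, however, cannot show whether the few incidents that do occur cluster in particular long-tail situations.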

On paper, Waymo "outperforms";

but in long-tail scenarios it may suffer from a "sample blind spot": cats and dogs are small and move unpredictably, so they are rarely captured in training datasets, and the model treats them as "crushable objects."

And so a neighbor's "beloved cat" met a tragic end under the wheels.

Even more awkward is the question of liability: Waymo paid compensation but argued that "humans cause more collisions."

When technology uses statistics to justify individual tragedies, public backlash is inevitable.

III. Aggressive Driving = Turning "Courtesy" into "Road Rage"? The Butterfly Effect of Parameter Adjustments

Waymo's product leader admitted: To alleviate congestion, the team increased the "assertiveness" parameter.

It seemed like just a 0→1 change in code, yet it set off a butterfly effect:

the lane-changing gap was reduced from 3 seconds to 1.8 seconds, forcing following cars to brake abruptly;

the yielding distance to pedestrians was shortened from 2 meters to 0.8 meters, increasing the likelihood of collisions with handcarts;

the recognition threshold for police lights was raised, so red-and-blue flashes were treated as "low-priority signals."
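How a single knob can move several behaviors at once can be sketched as linear interpolation between two presets. This is a hypothetical illustration, not Waymo's code: the names `DrivingParams` and `params_for` are invented, the gap and yield endpoints are the figures quoted above, and the police-light threshold values are assumptions.

```python
from dataclasses import dataclass


@dataclass
class DrivingParams:
    lane_change_gap_s: float       # minimum time gap accepted when changing lanes
    pedestrian_yield_m: float      # distance kept when yielding to pedestrians
    police_light_threshold: float  # hypothetical confidence needed to act on police lights


# Gap/yield endpoints come from the article; threshold values are assumed.
COURTEOUS = DrivingParams(3.0, 2.0, 0.5)
ASSERTIVE = DrivingParams(1.8, 0.8, 0.8)


def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation from a (t=0) to b (t=1)."""
    return a + (b - a) * t


def params_for(assertiveness: float) -> DrivingParams:
    """Map a single 'assertiveness' value in [0, 1] onto every behavior at once."""
    t = min(max(assertiveness, 0.0), 1.0)  # clamp out-of-range inputs
    return DrivingParams(
        lerp(COURTEOUS.lane_change_gap_s, ASSERTIVE.lane_change_gap_s, t),
        lerp(COURTEOUS.pedestrian_yield_m, ASSERTIVE.pedestrian_yield_m, t),
        lerp(COURTEOUS.police_light_threshold, ASSERTIVE.police_light_threshold, t),
    )


for knob in (0.0, 0.5, 1.0):
    print(knob, params_for(knob))
```

Because every field hangs off the same scalar, turning the knob for one goal (shorter merges) silently changes the others too.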

AI lacks "common sense"; it only comprehends probabilities.

When engineers prioritize "efficiency," the relative weight of "safety" declines, and the machine naturally opts for "faster" over "more accurate."
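The efficiency-versus-safety trade-off can be illustrated with a weighted cost function. A minimal sketch with two hypothetical maneuvers and invented costs; the single weight `w_efficiency` stands in for the tuning described above, and safety receives the remaining `1 - w_efficiency`.

```python
# Invented costs for two hypothetical maneuvers (lower is better).
CANDIDATES = {
    "wait_for_clear_gap": {"time_cost": 8.0, "risk_cost": 1.0},
    "squeeze_into_gap": {"time_cost": 2.0, "risk_cost": 5.0},
}


def choose(w_efficiency: float) -> str:
    """Pick the maneuver with the lowest weighted cost; safety gets 1 - w_efficiency."""
    w_safety = 1.0 - w_efficiency

    def cost(c):
        return w_efficiency * c["time_cost"] + w_safety * c["risk_cost"]

    return min(CANDIDATES, key=lambda name: cost(CANDIDATES[name]))


print(choose(0.25))  # wait_for_clear_gap
print(choose(0.6))   # squeeze_into_gap
```

With these numbers the choice flips once `w_efficiency` rises above 0.4; nothing about the risky maneuver changed, only how much the objective cares about its risk.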

Human drivers can rely on experience to brake, but AI interprets "uncertainty" as "passable" until tragedy strikes.
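Whether uncertainty leads to braking or to "passable" comes down to what a planner does with low-confidence detections. A hypothetical sketch; the function name, the 0.6 threshold, and the action labels are assumptions for illustration.

```python
def plan(detections, min_confidence=0.6, conservative=True):
    """Decide how to handle the lane ahead.

    detections: list of (label, confidence) pairs from a hypothetical perception stack.
    """
    # Any confident obstacle means stop, regardless of policy.
    if any(conf >= min_confidence for _, conf in detections):
        return "brake"
    # Only low-confidence detections remain (if any).
    if detections and conservative:
        return "slow_and_verify"  # treat uncertainty as a reason for caution
    return "proceed"  # permissive policy: uncertainty is read as "passable"


uncertain = [("small_object", 0.35)]
print(plan(uncertain, conservative=True))   # slow_and_verify
print(plan(uncertain, conservative=False))  # proceed
```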

Waymo's "driving style knob" sounds an alarm for the industry: Algorithms are not mixing consoles; any minor gain can be exponentially amplified in the real world.

IV. Hallucinations ≠ Bugs; They're Inherent Flaws: Why Academia Says "Impossible to Eradicate"

Scholarly consensus: Hallucinations are the "original sin" of large models.

The training objective, "maximize the probability of the next word," means AI is inherently prone to "filling in the blanks."

In vision-action models, this translates to treating sensor noise as valid targets and rare scenarios as known patterns.
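The "filling in the blanks" tendency shows up even in a single toy next-word step. In this sketch the probabilities are made up; greedy decoding emits the top word and throws away the fact that it barely beat the alternatives, so the output reads as certain even when the model was not.

```python
# Made-up next-word probabilities after the prefix "the signal ahead is".
next_word_probs = {"green": 0.34, "red": 0.33, "flashing": 0.33}


def greedy_pick(probs):
    """Return the most probable word and its margin over the runner-up."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    (best, p_best), (_, p_second) = ranked[0], ranked[1]
    return best, p_best - p_second


word, margin = greedy_pick(next_word_probs)
print(f"the signal ahead is {word}  (margin {margin:.2f})")  # the signal ahead is green  (margin 0.01)
```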

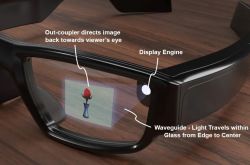

Waymo uses a Transformer for BEV (Bird's Eye View) fusion, with a parameter footprint of up to 38MB, producing black-box decisions that even its engineers cannot fully decipher.

More critical is the "out-of-distribution" problem: police standoffs, animals darting out, and tunnel construction rarely appear in training datasets, so the model can only reason by analogy, and when the analogy is wrong, the result is a crash.
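One common way to flag out-of-distribution inputs is to measure how far a new input lies from anything seen in training. The sketch below uses nearest-neighbor distance on invented 2-D feature vectors; it is a generic heuristic offered for illustration, not a description of Waymo's system, and the 0.5 threshold is an assumption.

```python
import math

# Invented 2-D feature vectors standing in for scenes seen during training.
TRAINING_FEATURES = [(0.0, 0.0), (0.2, 0.1), (0.1, 0.3), (1.0, 1.1), (0.9, 1.0)]


def novelty_score(x):
    """Distance from x to its nearest training example (larger means less familiar)."""
    return min(math.dist(x, t) for t in TRAINING_FEATURES)


def is_out_of_distribution(x, threshold=0.5):
    """Flag inputs that sit far from everything in the training set."""
    return novelty_score(x) > threshold


print(is_out_of_distribution((0.15, 0.15)))  # False: close to familiar scenes
print(is_out_of_distribution((3.0, -2.0)))   # True: nothing similar was seen
```

A flag like this does not make the analogy correct; it only signals when the analogy is likely to be unreliable, so the system can slow down or ask for help.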

Studies indicate that reducing the hallucination rate to 0.1% requires 10 times the current real-world data, costing hundreds of billions of dollars.

In other words, hallucinations can be "diluted" but not "eliminated."
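Why more data "dilutes" rather than "eliminates" errors can be illustrated with a power-law curve, a shape often used to describe how error falls as training data grows. The constants `a` and `alpha` are assumptions chosen for illustration, not measured values for any real system.

```python
def error_rate(data_multiple: float, a: float = 0.05, alpha: float = 0.5) -> float:
    """Hypothetical power law: error = a * data_multiple ** -alpha.

    a and alpha are illustrative assumptions, not measurements.
    """
    return a * data_multiple ** -alpha


for d in (1, 10, 100, 1000):
    print(f"{d:>5}x data -> error {error_rate(d):.3%}")
```

Each tenfold increase in data divides the error by the same factor (10 ** alpha, about 3.2 here), so the curve keeps falling without ever reaching zero.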

V. Regaining Control: Remote Takeover, Regulatory Barriers, and Human Override Rights

Waymo has set up an RMCC (Remote Monitoring Center) in which one person can oversee 25 vehicles, yet in the police standoff described above, it took a police radio call to trigger a remote stop.

The 15-second gap shows that no matter how well the human-to-vehicle ratio is optimized, it cannot cover "black swan" events.
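The limits of a fixed operator-to-vehicle ratio can be sketched with a binomial model: if each of 25 vehicles independently needs help with some small probability at a given moment, how often do two or more need the same operator at once? The per-vehicle probability below is an assumption, and independence is exactly what a "black swan" breaks.

```python
from math import comb


def prob_at_least(k: int, n: int, p: float) -> float:
    """P(at least k of n vehicles need help simultaneously), assuming independence."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


VEHICLES_PER_OPERATOR = 25  # ratio quoted in the article
P_NEEDS_HELP = 0.02         # assumed per-vehicle probability at any given moment

clash = prob_at_least(2, VEHICLES_PER_OPERATOR, P_NEEDS_HELP)
print(f"Chance two or more vehicles need the operator at once: {clash:.1%}")
```

A correlated event, such as many vehicles running into the same police cordon, violates the independence assumption and pushes simultaneous requests well above what this calculation suggests.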

The industry is adopting a three-pronged approach:

Mandatory override rights: New EU regulations require L4 vehicles to retain an "emergency brake physical key" that passengers can activate within 0.2 seconds to halt autonomous driving;

Scenario restrictions: Tunnels, schools, and military zones are designated as "high-sensitivity ODDs" (operational design domains), with a 20 km/h speed limit and mandatory human takeover;

Insurance coverage: Lloyd's of London has introduced "AI Hallucination Insurance" at $0.03 per mile to cover long-tail accidents.
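The scenario restrictions and per-mile insurance above could be encoded as a simple policy table. A hypothetical sketch: the `Directive` type, the function names, and the "arterial" zone label are invented for illustration; the 20 km/h cap, mandatory takeover, and $0.03-per-mile rate are the figures quoted above.

```python
from dataclasses import dataclass

HIGH_SENSITIVITY_ZONES = {"tunnel", "school", "military"}  # zone types named in the article
HIGH_SENSITIVITY_SPEED_KMH = 20.0
PREMIUM_USD_PER_MILE = 0.03


@dataclass(frozen=True)
class Directive:
    max_speed_kmh: float
    require_human_takeover: bool


def directive_for(zone_type: str, default_speed_kmh: float) -> Directive:
    """Cap speed and require takeover inside high-sensitivity ODDs; otherwise pass through."""
    if zone_type in HIGH_SENSITIVITY_ZONES:
        return Directive(min(default_speed_kmh, HIGH_SENSITIVITY_SPEED_KMH), True)
    return Directive(default_speed_kmh, False)


def hallucination_premium_usd(miles: float) -> float:
    """Premium at the quoted per-mile rate."""
    return miles * PREMIUM_USD_PER_MILE


print(directive_for("tunnel", 60.0))    # Directive(max_speed_kmh=20.0, require_human_takeover=True)
print(directive_for("arterial", 50.0))  # Directive(max_speed_kmh=50.0, require_human_takeover=False)
print(round(hallucination_premium_usd(10_000), 2))  # 300.0
```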

On the technical side, multi-model redundancy plus explainable AI is becoming the norm:

Vision, lidar, and millimeter-wave systems reason independently and vote on decisions;

while outputting "decision heatmaps" to let humans see "what the AI actually perceives."
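Independent reasoning plus voting can be sketched as a majority vote over the actions proposed by each sensor pipeline. This is a simplified illustration: real fusion stacks are far more involved, and the fallback to "stop" when there is no majority is an assumed design choice, not a documented one.

```python
from collections import Counter


def fuse_by_vote(votes: dict) -> str:
    """Majority vote over actions proposed by independent pipelines.

    votes maps a pipeline name (e.g. "vision", "lidar", "radar") to its proposed action.
    """
    counts = Counter(votes.values())
    action, n = counts.most_common(1)[0]
    if n > len(votes) / 2:
        return action  # a strict majority agrees
    return "stop"      # assumed fallback when the pipelines disagree


print(fuse_by_vote({"vision": "proceed", "lidar": "brake", "radar": "brake"}))   # brake
print(fuse_by_vote({"vision": "proceed", "lidar": "brake", "radar": "swerve"}))  # stop
```

Voting only helps when the pipelines fail differently; if all three share the same blind spot, they will agree on the same mistake.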

Waymo plans to display "perception confidence" in real time on in-car screens by 2026, letting passengers request a remote human review with one click and turning the "black box" into a "white box" that exposes hallucinations.

When Waymo touts "lower accident rates," remember: Human drivers make mistakes, but AI crashes are "systemic errors." The former can be constrained by laws, insurance, and ethics;

the latter, once algorithmically approved, replicates on a massive scale.

Mowing down cats, intruding into police scenes, and circling a tunnel 37 times are not the antics of a machine deliberately misbehaving, but reminders that even the most advanced neural networks stumble in long-tail scenarios.

In short, Self-Driving Car Insights (WeChat official account: Self-Driving Car Insights) believes that true safety lies not in making AI "more human-like" but in equipping machines with a "human override": a brake, placed between probability and ethics, that can be pressed at any time.

After all, roads are not laboratories, and lives have no "next training round."

#SelfDrivingCarInsights #SelfDriving #AutonomousDriving #SelfDrivingCars