Yin Qi Takes the Helm at Step.ai: Qianli Tech Unveils Its "Secret Weapon" as Model Integration Becomes Key to Intelligent Driving's Future

01/27 2026

Author | Sean

Editor | Dexin

On January 26th, AI large model startup Step.ai made waves by officially announcing Yin Qi's appointment as chairman. On the same day, Step.ai closed a staggering RMB 5 billion Series B+ funding round, the largest single financing in China's large model sector over the past year. The capital will fund foundational model R&D, support the development of a globally leading base model, and accelerate the execution of its "AI + Terminals" strategy.

This announcement has sent significant ripples throughout the industry.

The reason for the industry's intense interest lies in the fact that just over a year ago, Yin Qi invested RMB 2.43 billion to acquire a stake in Lifan Technology (now rebranded as Qianli Tech) and assumed the role of chairman, embarking on a mission to deeply integrate AI with the automotive sector.

On one side stands Qianli Tech, a listed company specializing in intelligent driving solutions, smart cockpits, and Robotaxi services. On the other is Step.ai, a unicorn startup valued in the billions, dedicated to foundational model R&D and commercialization. What does it signify for one individual to lead both companies simultaneously?

To answer this, we must first understand who Yin Qi is.

As one of China's pioneering AI entrepreneurs and a leading figure in the field, Yin Qi has witnessed the industry's evolution from AI 1.0 to AI 2.0 firsthand. In 2011, he founded Megvii, bringing artificial intelligence into core scenarios in the Internet of Things and physical industries. Megvii later earned its place among the "Four Little Dragons of AI." From early acclaim and eager investors to the struggle to meet commercialization expectations, Yin Qi has experienced the full arc of AI's rise and fall.

Now, at 37, this seasoned AI veteran has chosen to position himself at the crossroads of the large model and intelligent vehicle sectors—a strategic move with far-reaching implications.

To grasp the significance of this decision, we must first recognize the strength of Step.ai—the large model company Yin Qi is set to steer.

I. Step.ai: The "Multimodal Leader" in the Large Model Race

Multimodal Champion

In China's large model startup landscape, the four most prominent companies are Zhipu AI, Moonshot AI, MiniMax, and Step.ai.

Zhipu AI boasts academic backing from Tsinghua University and broad applications for its GLM series. Moonshot AI gained traction in the consumer market with Kimi's long-text capabilities. MiniMax, the company behind Conch AI, has completed its IPO. Step.ai, meanwhile, was highlighted by MIT Technology Review as one of the "Chinese AI startups worth watching besides DeepSeek," though its public profile has lagged behind its strength in foundational model R&D.

Founded in 2023, Step.ai achieved unicorn status within its first year of financing. What truly distinguishes it is its full-modality lineup of foundational models and its technical vision in the multimodal field.

The industry consensus is clear: "The race now hinges on whether a company's full-modality lineup can reach the top tier."

Step.ai's AGI roadmap unfolds as follows: Unimodal → Multimodal → Unified Multimodal Understanding and Generation → World Model → AGI (Artificial General Intelligence).

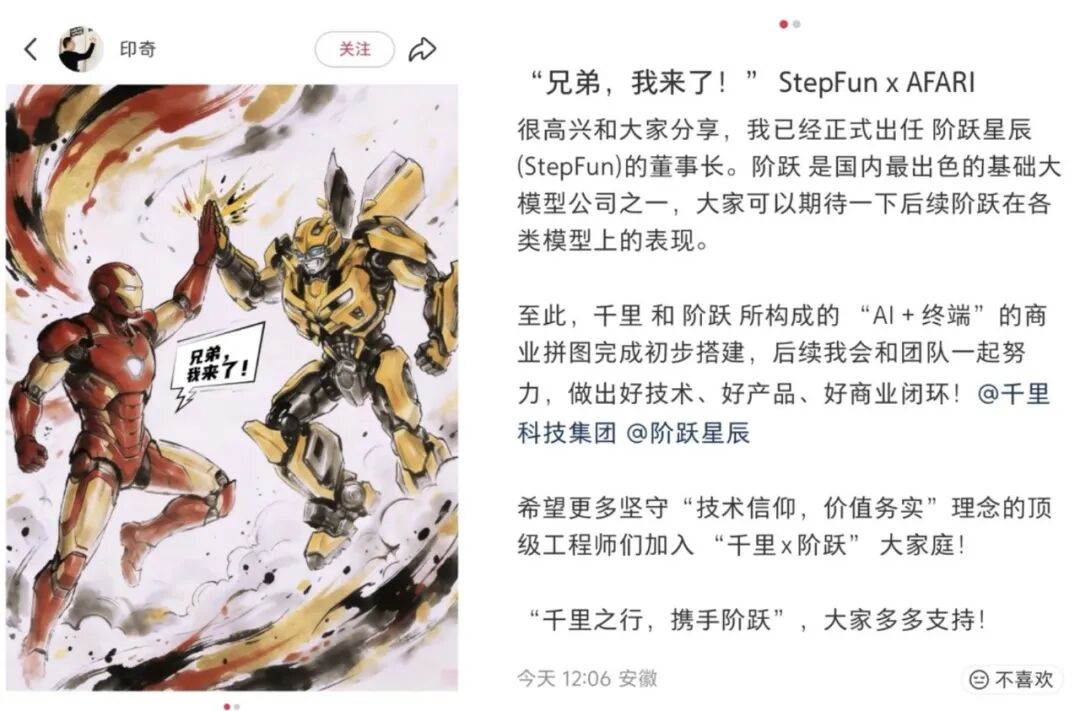

Currently, Step.ai has publicly released over 30 Step-series foundational large models, spanning language, vision, voice, reasoning, VLM, music, and other modalities, with multimodal models accounting for over 80%.

Domestically, only Alibaba, ByteDance, and Step.ai have achieved full coverage of the five major modalities: language, vision, voice, video, and reasoning.

(Above: Representative models from Step.ai's Step series)

Notably, since its inception, Step.ai has prioritized native multimodality and unified understanding and generation. While some model companies first used multimodal generation models to build consumer-facing content products, Step.ai has stayed on this course, believing it to be the path to AGI.

This year, market attention has shifted toward the integration of AI and the physical world, and expectations are building for an AI agent product that bridges AI and terminals. Such a product would combine perception, understanding, and execution, unlocking higher-order tasks and generating greater value. Its prerequisite is precisely native multimodality and unified understanding and generation.

From a commercialization perspective, Step.ai has preliminarily validated the strong demand for large model capabilities in terminals. By the end of 2025, its terminal agent API call volume had grown nearly 170% for three consecutive quarters. In the mobile phone sector, 60% of China's top brands have established deep cooperation with Step.ai, covering flagship models from OPPO, Honor, ZTE, and others, with over 42 million model installations. In the automotive sector, Step.ai has formed deep partnerships with Qianli Tech and Geely, jointly launching the industry's first AgentOS smart cockpit equipped with an end-to-end voice model.

Why would a company with first-tier model capabilities partner with an intelligent vehicle solutions provider? To understand this, we must first recognize a broader industry trend.

II. AI + Terminals: Why Is This the Future Direction?

Tesla's Evolving Mission

On January 21, 2026, Tesla updated its company mission via its official Weibo account, stating: "Our mission is to build a world of amazing abundance."

This marks Tesla's second mission update since its founding in 2003. The first occurred in March 2025, when it shifted from "Accelerating the world's transition to sustainable energy"—a 22-year commitment—to "For sustainable human abundance." Less than a year later, it further upgraded to "A world of amazing abundance."

What does this shift from "energy transition" to "a world of abundance" signify?

The shift from an initial focus on electric vehicles and clean energy to today's broader vision reflects Tesla's strategic expansion. Tesla is no longer just an electric vehicle company but a comprehensive tech enterprise aiming to reshape societal foundations and drive global prosperity through AI and automation.

This mission update is closely tied to Tesla's 2025 release of "Master Plan Part IV," which centers on deeply integrating AI into the physical world. It seeks to redefine labor, transportation, and energy through products and services like autonomous driving, the humanoid robot Optimus, and Robotaxi.

In essence, electric vehicles, robots, and Robotaxis are all terminals, and the key to connecting them is AI capability. Tesla's AI capabilities, built on Full Self-Driving (FSD), are rapidly empowering its robot and Robotaxi businesses.

Elon Musk has repeatedly stated: "Optimus will ultimately be worth more than the car business and FSD combined." This confidence in Optimus reflects his judgment on the future of "AI + terminals."

The Essence of Competition: AI Capability

As is well known, Tesla is backed by xAI. Founded by Musk, xAI is a key supplier of end-to-end autonomous driving model capabilities for Tesla, with its core product being the Grok series of large language models. Musk has noted that xAI's Grok and Tesla's AI are at "opposite ends of the spectrum," with Grok attempting to solve AI problems through extensive AI training and inference computation.

From its inception, xAI has been inherently multimodal. Grok 4.1's significant advances in visual understanding, video generation, and voice interaction, along with its shared data loops and engineering experience with Tesla's autonomous driving business, are hardly coincidental: they are extensions of capabilities honed in Tesla's real-world vehicle environments.

From Tesla's mission evolution, a clear strategic direction emerges:

Future competition will not revolve around building cars but around AI capabilities. The automobile is merely the first large-scale terminal for AI to enter the physical world, followed by robots, glasses, aircraft, and more. Whoever masters core AI capabilities will hold the power to define these terminals.

Against this backdrop, the deep collaboration between Qianli Tech and Step.ai is easy to understand. The partnership goes beyond a simple supplier relationship; it represents precise ecosystem positioning for the era of "AI-Defined Vehicles."

III. Qianli + Step.ai: How to Build a Closed Loop for "AI-Defined Vehicles"?

Qianli Tech's Business Landscape

To understand the collaboration between Qianli and Step.ai, we must first grasp what kind of company Qianli Tech is.

Qianli Tech was formerly Lifan Technology. In July 2024, Yin Qi invested RMB 2.43 billion through his company to acquire a stake and became chairman in November. In January 2025, Yin Qi proposed a full embrace of the "AI + Vehicles" strategy, and the company was officially renamed Qianli Tech in February.

Currently, Qianli Tech's core businesses revolve around two main directions:

Intelligent Driving Solutions: Providing intelligent driving solutions for third-party automakers.

Smart Cockpit Solutions: Creating next-generation smart cockpit experiences.

In fact, collaboration between the two companies was already underway before Yin Qi became chairman of Step.ai.

At Step.ai's Ecosystem Open Day in February 2025, Qianli, Geely, and Step.ai announced deepened technical cooperation to promote "AI + Vehicle" integration. In June 2025, Qianli Tech released its intelligent driving solution, explicitly stating that its core "Qianli Intelligent Driving RLM Large Model" was jointly developed with Step.ai. In July 2025, at the World Artificial Intelligence Conference (WAIC), the three parties jointly unveiled a preview version of the next-generation smart cockpit Agent OS. In January 2026, Yin Qi became chairman of Step.ai, officially forming the "Qianli + Step.ai" strategic closed loop.

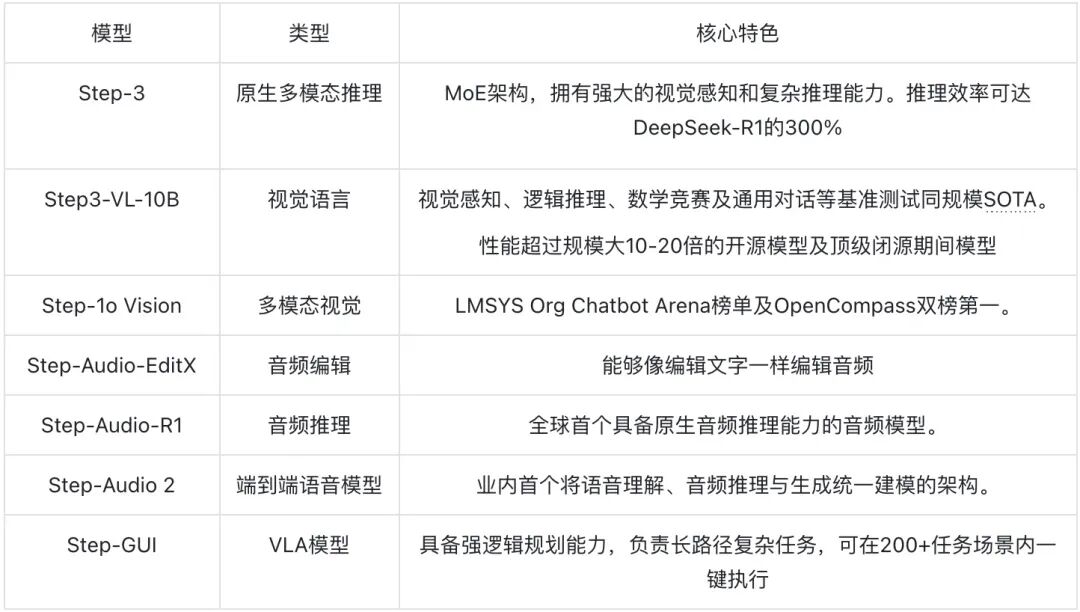

Intelligent Driving: The Race for "Model Inclusion"

In the intelligent driving sector, a concept is becoming an industry consensus: "model inclusion."

Model inclusion refers to the share of large models in an intelligent driving system. Large models are widely recognized as the core capability driving intelligent driving toward higher levels. Higher model inclusion means AI models dominate perception, decision-making, and planning, enabling the system to handle complex road conditions in a more human-like way and deliver safer, smoother driving experiences.

Qianli Tech is fully committed to developing high "model inclusion" intelligent driving solutions. Its core "Qianli Intelligent Driving RLM Large Model" was jointly developed with Step.ai.

Deep integration with Step.ai gives Qianli continuous access to leading model capabilities at the foundational level. Qianli therefore does not need to build a large model team from scratch or invest billions of dollars in training foundational models; it can directly leverage Step.ai's strengths and focus on turning model capabilities into intelligent driving products.

In today's increasingly fierce "model inclusion" competition in intelligent driving, the value of this collaboration model is self-evident.

Cockpit: From Tool to "Intelligent Companion"

If intelligent driving makes vehicles "capable of driving," then the cockpit makes them "capable of thinking."

Traditional smart cockpits are essentially tools that execute simple commands—you ask for navigation to a destination, and it navigates; you request a song, and it plays. However, they do not understand you, remember you, or proactively think.

The emergence of large models is radically redefining smart cockpits.

In July 2025, Qianli Tech, Geely, and Step.ai jointly unveiled a preview version of the next-generation smart cockpit Agent OS at the World Artificial Intelligence Conference. Key features of this system include:

Multimodal hyper-natural interaction: Not only understands speech but also interprets facial expressions, gestures, and intentions.

Human-machine co-driving based on fully integrated maps: Deep cockpit-driving linkage for true human-vehicle collaboration.

End-to-end cloud-integrated fused memory: The cockpit remembers your preferences and habits, acting as a truly understanding companion.

These capabilities rely on Step.ai's powerful multimodal understanding and generation, evolving the cockpit from a "tool" into an "intelligent companion" capable of memory, deep thinking, and human-like emotional communication.

Looking ahead, with Step.ai's industry-leading large model capabilities, the "Qianli + Step.ai" system positions Qianli Tech to potentially unify driving and cockpit experiences at a foundational level, creating disruptive innovations.

The Core Value of the System

For Qianli Tech, the core value of the "Qianli + Step.ai" system lies in mastering core algorithms and gaining autonomy over iteration, the capabilities needed to define next-generation intelligent vehicles.

This is not a simple supplier relationship. With Yin Qi leading both companies, Qianli gains deep strategic synergy with Step.ai—full-chain coordination from foundational model R&D to product implementation.

In the era of "AI-Defined Vehicles," this capability represents core competitiveness.

IV. Yin Qi's AI Strategic Blueprint

From Tsinghua Yao Class to Megvii Technology

To understand Yin Qi's role in the "Qianli + Step.ai" system, it is necessary to review his AI journey.

Born in Wuhu, Anhui Province, in 1988, Yin Qi enrolled in the Automation program at Tsinghua University in 2006. He was later selected for the Yao Qizhi Experimental Class (the "Yao Class"), Tsinghua's premier talent-cultivation program in computer science. In 2011, while pursuing his Ph.D. at Columbia University, he co-founded Megvii Technology with his Tsinghua classmates Tang Wenbin and Yang Mu.

Megvii subsequently emerged as one of the "Four Little Dragons of AI," achieving global leadership in key areas such as computer vision. It also assembled a dense pool of talent, built an integrated research-to-production system, and industrialized its technology. Behind these accomplishments, however, lay challenges: its path to commercialization was hindered by immature technology and a lack of large-scale application scenarios.

In an interview, Yin Qi candidly admitted: "The lesson from AI 1.0 is that any glory that cannot form a closed loop is fleeting."

This statement is crucial for understanding his subsequent strategic moves. During the AI 1.0 era, Megvii built robust technological capabilities but faced bottlenecks in commercial closed loops. This led Yin Qi to deeply recognize that technological capabilities must integrate with application scenarios and business models to sustainably create value.

Taking the Helm at Qianli: Charting the Course for "AI + Vehicles"

In 2024, Yin Qi embarked on a new strategic initiative: "AI + Vehicles."

Yin Qi has elaborated on his vision for "AI + Vehicles" at various forums. In March 2025, at Geely's AI Intelligent Technology Launch Event, he outlined three major future trends: the emergence of hyper-natural human-machine interaction, the widespread adoption of autonomous driving and automatic execution, and a step-change upgrade in large models for connected vehicles.

He also offered a more visionary prediction: "Future vehicles will increasingly resemble robots."

This perspective aligns closely with Elon Musk's strategic direction.

Strategic Maneuvers: The Closed Loop Takes Shape

If assuming leadership at Qianli was Yin Qi's strategic move on the "terminal" front, then taking the helm at Step.ai supplies the crucial "AI Brain" piece of the puzzle.

With Yin Qi now leading Step.ai, his long-planned core strategic blueprint of "AI + Terminals" has officially taken shape:

Step.ai = AI Brain (underlying model capabilities)

Qianli Tech = Vehicle-end Execution (Intelligent Driving + Cockpit)

Yin Qi views his dual roles as complementary. Qianli Tech focuses on integrating AI with vehicles, which he sees as the largest terminal and sector for AI. Qianli's core business scenarios, including intelligent driving, smart cockpits, and, in the future, Robotaxi, all require a robust brain and model, and that brain is precisely what Step.ai provides.

Yin Qi thus envisions Qianli building a vehicle-centered sector of AI applications, with Step.ai serving as the foundational model brain and underlying AI capability that supports it. The two companies' technologies and products are highly coherent, forming a continuum from foundational model R&D to integrated hardware-software products and, ultimately, market launch.

Leveraging this synergy, Yin Qi positions himself as an industrial integrator who can foster deep strategic collaboration between Step.ai and Qianli Tech. This accelerates the move of large AI model capabilities into the physical world and establishes a commercial closed loop from underlying large models to terminal applications.

This approach precisely embodies the core lesson he distilled from AI 1.0: the necessity of forming a closed loop.

However, Yin Qi's ambitions clearly extend beyond the automotive sector.

He is also reportedly planning a blueprint for next-generation AI terminals and incubating AI-native hardware. Intelligent driving, smart cockpits, and Robotaxi are merely the first steps in his "AI + Terminals" vision.

From Megvii to Qianli to Step.ai, the logic of Yin Qi's strategic layout has become increasingly clear: with AI capabilities at the core and vehicles and other hardware as carriers, he aims to bring large AI models into the physical world.

V. Conclusion

Returning to the question posed at the beginning of the article: Why would a seasoned AI entrepreneur simultaneously lead a vehicle company and a large model company?

In an era where Tesla has upgraded its mission from "energy transition" to "a world of abundance," where NIO has announced its ambition to become an "embodied intelligence company," and where XPENG positions itself as an "explorer of mobility in the physical AI world"—AI + Terminals has become the industry's consensus choice.

Whoever masters core AI capabilities will wield the power to define the next generation of intelligent vehicles, intelligent robots, and intelligent hardware.

The significance of the "Qianli + Step.ai" system lies precisely here. It gives Qianli Tech core algorithmic capabilities and autonomy over iteration, enabling it to define the next generation of intelligent vehicles. This transforms Qianli from a mere solution provider into a company with a complete closed loop of capabilities, spanning from the underlying model layer to the terminal.

Yin Qi, a seasoned entrepreneur who has navigated the industrial cycle from AI 1.0 to 2.0, now stands as an industrial integrator pursuing his ideal of a "closed loop."

"Any glory that cannot form a closed loop is fleeting."

Now, Yin Qi is leveraging "Qianli + Step.ai" to craft his closed-loop solution.

The prelude to AI large models entering the physical world has just begun.